Deploying Stable Diffusion 3.5 on RunPod: A Deep Dive into Serverless GPU Computing

The artificial intelligence landscape is evolving quickly. New releases from Stability AI, alongside continuous advancements from Google DeepMind and Meta AI, highlight a clear trend: models are becoming more powerful, but also more resource-intensive. For developers, MLOps engineers, and researchers, this presents a critical challenge: how to access and deploy state-of-the-art models without managing complex and expensive GPU infrastructure. Platforms like RunPod have carved out a niche here, offering a streamlined, cost-effective option for GPU-accelerated computing. This article is a technical guide to RunPod that uses the deployment of a large, modern model, Stable Diffusion 3.5, as a practical example to explore its serverless capabilities, advanced features, and best practices for production-ready AI applications.

The Core of RunPod: Choosing Your GPU Compute Model

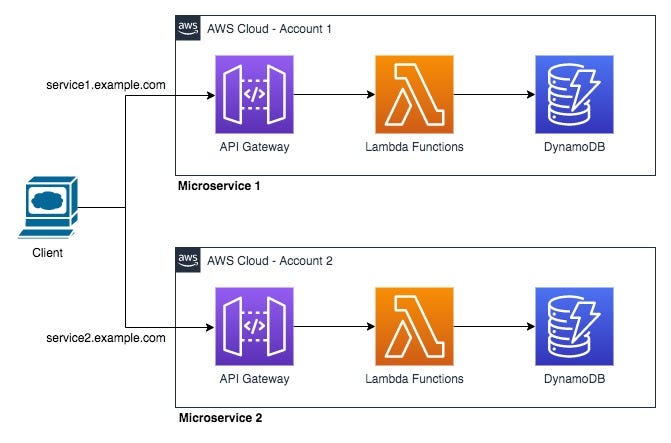

Before diving into deployment, it’s essential to understand RunPod’s two primary compute models: Secure Cloud Pods and Serverless Endpoints. Choosing the right one depends entirely on your workload, budget, and stage of development. This flexibility is a key advantage over more rigid platforms like AWS SageMaker or Azure Machine Learning, which often have a steeper learning curve.

Secure Cloud Pods: Your Persistent GPU Workstation

Think of a Cloud Pod as your personal, persistent GPU-powered virtual machine in the cloud. It’s a stateful environment where you have full control. You can start a pod, SSH into it, install libraries, run Jupyter notebooks, and develop your code interactively. This makes it an ideal environment for tasks like model training, fine-tuning, and exploratory data analysis. If you’re accustomed to using Google Colab for experiments but need more power, longer runtimes, and a persistent file system, Cloud Pods are the logical next step. You can use them to train models with popular frameworks such as PyTorch or TensorFlow, and once your work is done, you can stop the pod so that you pay only for storage.

Serverless Endpoints: Scalable, On-Demand Inference

For production inference, a persistent, always-on server is often inefficient. This is where RunPod Serverless shines. It operates on a pay-per-second model where your code is packaged into a container and deployed as an endpoint. When a request comes in, RunPod automatically spins up a worker (a container instance on a GPU), processes the job, and then spins it down. This model is cost-effective for applications with variable or intermittent traffic, because you are billed only while a worker is running. It abstracts away the complexity of Kubernetes, auto-scaling, and load balancing. The primary trade-off is the “cold start” time, the initial delay required to start a worker for the first request. We’ll explore how to mitigate this later. This serverless paradigm is becoming increasingly popular, with similar offerings from platforms like Modal and Replicate, but RunPod’s focus on GPU variety and community pricing is a strong differentiator.
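To make the cost trade-off concrete, the sketch below compares an always-on GPU with pay-per-second serverless billing. The hourly and per-second rates are placeholder assumptions chosen for illustration, not RunPod prices, and the function names are hypothetical; substitute current figures from the pricing page before drawing conclusions.

```python
def always_on_cost(hourly_rate: float, hours: float) -> float:
    """Cost of keeping one GPU running for the whole period."""
    return hourly_rate * hours


def serverless_cost(per_second_rate: float, requests: int, seconds_per_request: float) -> float:
    """Cost when billing applies only while a worker is processing jobs."""
    return per_second_rate * requests * seconds_per_request


def break_even_requests(hourly_rate: float, per_second_rate: float,
                        seconds_per_request: float, hours: float = 24.0) -> float:
    """Number of requests in `hours` at which both options cost the same."""
    return always_on_cost(hourly_rate, hours) / (per_second_rate * seconds_per_request)


if __name__ == "__main__":
    HOURLY = 0.80        # hypothetical $/hour for an always-on GPU
    PER_SECOND = 0.0004  # hypothetical $/second for a serverless worker
    JOB_SECONDS = 8.0    # assumed GPU time per image

    for daily_requests in (100, 1_000, 10_000):
        cost = serverless_cost(PER_SECOND, daily_requests, JOB_SECONDS)
        print(f"{daily_requests:>6} requests/day: serverless ${cost:.2f} "
              f"vs always-on ${always_on_cost(HOURLY, 24):.2f}")

    print("break-even requests/day:",
          round(break_even_requests(HOURLY, PER_SECOND, JOB_SECONDS)))
```

With these assumed rates the break-even point is about 6,000 requests per day. Below that, scaling to zero is cheaper; above it, a persistent worker starts to win. Cold-start time is not included in this estimate.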

Treat the file name and keys below as an illustrative sketch of the settings involved rather than an exact schema; check RunPod's documentation for the current configuration format.

    # runpod.yml: A simple configuration for a serverless worker
    # This file defines the environment for our serverless endpoint.
    workers:
      - name: stable-diffusion-3-5-worker
        image: your-dockerhub-username/sd35-runpod:latest
        gpu: "NVIDIA RTX A6000"  # Specify the GPU type
        minInstances: 0          # Scale down to zero to save costs
        maxInstances: 5          # Maximum concurrent workers
        # Define environment variables, including secrets
        # (use a secret store for real tokens; never commit them)
        env:
          HF_TOKEN: "your_hugging_face_read_token"
          MODEL_NAME: "stabilityai/stable-diffusion-3.5-medium"
        # Define the ports your container exposes
        ports:
          - "8080/http"
        # Define the container disk size
        containerDiskInGb: 20

Step-by-Step Guide: Deploying Stable Diffusion 3.5 on RunPod Serverless

Let’s move from theory to practice. Deploying a cutting-edge image generation model involves packaging the model, its dependencies, and the inference logic into a reproducible container. This process ensures that the model runs consistently every time a serverless worker is initialized.

Preparing Your Handler File

The core of a RunPod Serverless worker is the handler file, typically named handler.py. This Python script must define a function that RunPod executes for each incoming API request. A convenient pattern is a class with an __init__ method for setup and a `handler` method for inference. When the class is instantiated once at worker startup, __init__ runs a single time, which makes it the right place to load the model into VRAM. The model is then ready and waiting for subsequent requests, minimizing latency. We’ll use the diffusers library from Hugging Face to load the Stable Diffusion model from the Hugging Face Hub.
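The load-once pattern can be illustrated without a GPU. In this minimal sketch, `FakePipeline` and `Worker` are hypothetical stand-ins (not part of the RunPod SDK or diffusers); the only thing taken from the real setup is the job shape, a dictionary with an `input` key.

```python
class FakePipeline:
    """Stand-in for a real diffusion pipeline so the sketch runs anywhere."""

    loads = 0  # counts how many times the "model" has been loaded

    def __init__(self):
        FakePipeline.loads += 1

    def __call__(self, prompt: str) -> str:
        return f"image for: {prompt}"


class Worker:
    def __init__(self):
        # Expensive setup happens here, once per worker process.
        self.pipe = FakePipeline()

    def handler(self, job: dict) -> dict:
        # Called for every job; reuses the pipeline created in __init__.
        job_input = job.get("input", {})
        prompt = job_input.get("prompt")
        if not prompt:
            return {"error": "prompt is required"}
        return {"result": self.pipe(prompt)}


worker = Worker()
for text in ["a red fox", "a blue whale"]:
    print(worker.handler({"input": {"prompt": text}}))
print("model loads:", FakePipeline.loads)
```

The instance is created a single time and its bound `handler` method serves every job, so the load counter stays at one no matter how many requests arrive.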

    import base64
    import os
    from io import BytesIO

    import runpod
    import torch
    from diffusers import StableDiffusion3Pipeline


    class SD3Handler:
        def __init__(self):
            """Runs once, when the worker creates the handler instance."""
            self.pipe = None
            self.load_model()

        def load_model(self):
            """Load the Stable Diffusion 3.5 model into memory."""
            hf_token = os.environ.get("HF_TOKEN")
            model_name = os.environ.get("MODEL_NAME", "stabilityai/stable-diffusion-3.5-medium")
            self.pipe = StableDiffusion3Pipeline.from_pretrained(
                model_name,
                token=hf_token,  # replaces the deprecated use_auth_token argument
                torch_dtype=torch.float16,
            ).to("cuda")
            print("Model loaded successfully.")

        def handler(self, job):
            """Called for each job request."""
            job_input = job['input']
            prompt = job_input.get('prompt', 'a photo of an astronaut riding a horse on mars')
            negative_prompt = job_input.get('negative_prompt', '')
            num_inference_steps = job_input.get('num_inference_steps', 28)
            guidance_scale = job_input.get('guidance_scale', 7.0)

            # Generate the image
            image = self.pipe(
                prompt=prompt,
                negative_prompt=negative_prompt,
                num_inference_steps=num_inference_steps,
                guidance_scale=guidance_scale,
            ).images[0]

            # Convert image to base64 string for JSON response
            buffered = BytesIO()
            image.save(buffered, format="PNG")
            img_str = base64.b64encode(buffered.getvalue()).decode("utf-8")
            return {"image": img_str}


    # Start the handler
    if __name__ == "__main__":
        # Create the instance once so the model is loaded before the first job,
        # then register the bound method: RunPod calls it with each job dict.
        worker = SD3Handler()
        runpod.serverless.start({"handler": worker.handler})

Building Your Docker Image

To ensure all dependencies are correctly installed, we need to create a Dockerfile. This file defines the steps to build a container image. We start from a base image provided by RunPod that already includes CUDA and PyTorch, which saves significant setup time. Then, we copy our project files and install any additional Python libraries listed in a requirements.txt file. This containerization approach is a fundamental MLOps practice, ensuring portability and reproducibility across different environments.

    # Use a RunPod base image with PyTorch and CUDA pre-installed
    FROM runpod/pytorch:2.1.0-py3.10-cuda12.1.1-devel

    # Set the working directory
    WORKDIR /app

    # Copy requirements file and install dependencies
    COPY requirements.txt .
    RUN pip install --no-cache-dir -r requirements.txt

    # Copy the handler and any other necessary files
    COPY handler.py .

    # Command to run when the container starts
    CMD ["python", "-u", "handler.py"]

Beyond the Basics: Advanced RunPod Features and Integrations

Once you have a basic deployment running, you can explore more advanced features to optimize for cost, performance, and functionality. This is where RunPod’s flexibility stands out, allowing you to integrate with a wide range of tools from the modern AI stack, including LangChain for orchestration and vector databases like Pinecone or Weaviate for RAG pipelines.

Optimizing LLM Inference with vLLM

For Large Language Models (LLMs), inference speed and throughput are critical. Standard Hugging Face pipelines can be inefficient for concurrent requests. This is where specialized inference servers come in. NVIDIA’s Triton Inference Server and TensorRT can provide significant speedups. Another popular open-source option is vLLM. Integrating vLLM into your RunPod worker can substantially increase token generation throughput and allow much larger request batches, which lowers both latency under load and cost per request. You can adapt your handler to use the vLLM engine for text generation tasks, making your endpoint far more efficient.

    import os

    import runpod
    from vllm import LLM, SamplingParams


    class vLLMHandler:
        def __init__(self):
            """Initialize the vLLM engine."""
            model_name = os.environ.get("MODEL_NAME", "mistralai/Mistral-7B-Instruct-v0.2")
            # tensor_parallel_size=1 keeps the model on a single GPU; raise it
            # to shard a larger model across several GPUs on the same worker.
            self.llm = LLM(model=model_name, tensor_parallel_size=1)
            print("vLLM engine initialized.")

        def handler(self, job):
            """Handle inference requests using the vLLM engine."""
            job_input = job['input']
            prompt = job_input.get('prompt')
            max_tokens = job_input.get('max_tokens', 256)
            temperature = job_input.get('temperature', 0.7)

            if not prompt:
                return {"error": "Prompt is required."}

            sampling_params = SamplingParams(
                temperature=temperature,
                max_tokens=max_tokens,
            )

            # Generate text in a batched and optimized way
            outputs = self.llm.generate(prompt, sampling_params)

            # Extract the generated text
            generated_text = outputs[0].outputs[0].text
            return {"text": generated_text}


    if __name__ == "__main__":
        # Create the engine once, then register the bound method as the handler.
        worker = vLLMHandler()
        runpod.serverless.start({"handler": worker.handler})

Integrating with MLOps and Experiment Tracking

A production model is more than just an endpoint; it’s part of a larger ecosystem. For robust MLOps, you need to track experiments, monitor performance, and version your models. Tools like MLflow, Weights & Biases, and Comet ML are widely used for this. You can integrate their client libraries into your RunPod Pods during the training phase. For instance, you can log metrics, parameters, and model artifacts from a training script running on a RunPod Pod directly to your Weights & Biases dashboard. This creates a complete feedback loop, connecting your cloud-based GPU experiments with your central MLOps platform.
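As a rough sketch of how such logging can be wired in, the toy training loop below reports metrics through a pluggable `log` callable. The loop, the `train` function, and the project name in the comments are illustrative assumptions; only `wandb.init`, `wandb.log`, and `wandb.finish` refer to the real Weights & Biases client.

```python
from typing import Callable, Dict, List


def train(epochs: int, log: Callable[[Dict[str, float]], None], lr: float = 0.1) -> float:
    """Toy gradient descent on f(w) = (w - 3)^2 that reports metrics via `log`."""
    w = 0.0
    for epoch in range(epochs):
        grad = 2 * (w - 3)   # derivative of (w - 3)^2
        w -= lr * grad
        log({"epoch": epoch, "loss": (w - 3) ** 2})
    return w


# Local run: collect metrics in a list instead of sending them anywhere.
history: List[Dict[str, float]] = []
final_w = train(epochs=20, log=history.append)
print(f"final w = {final_w:.4f}, last loss = {history[-1]['loss']:.6f}")

# With the wandb package installed and an API key configured on the Pod,
# the same loop could report to a dashboard (project name is a placeholder):
#   import wandb
#   wandb.init(project="runpod-demo", config={"lr": 0.1, "epochs": 20})
#   train(epochs=20, log=wandb.log)
#   wandb.finish()
```

Keeping the logger as a parameter means the training code does not depend on any one tracking tool, so the same script can run locally or on a Pod.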

Best Practices for Efficient and Cost-Effective Deployments

Deploying a model is one thing; running it efficiently in production is another. Following best practices can save you significant money and improve the user experience of your application, whether it’s a chatbot powered by models from Cohere or an internal tool built with Streamlit or Gradio.

Minimizing Cold Start Times

The most common pain point with serverless GPU endpoints is the cold start. This delay can be anywhere from a few seconds to over a minute, depending on your model size and container image. To minimize it:

- Optimize Your Docker Image: Keep your image as small as possible. Use multi-stage builds and avoid installing unnecessary packages.

- Pre-download and Cache Models: Instead of downloading the model from Hugging Face Hub every time a worker starts, bake the model weights directly into your Docker image. This moves the download time from the startup phase to the build phase.

- Use Faster Model Formats: Use formats like safetensors, which are generally faster and safer to load than traditional PyTorch pickles.

- Configure a Minimum Number of Workers: For latency-sensitive applications, you can configure your serverless endpoint to keep at least one worker warm (minInstances: 1). This eliminates cold starts for the first user but incurs a constant cost.
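One way to bake weights into the image is a small prefetch script run during the build. The sketch below assumes the `huggingface_hub` package is installed in the image; the script name, the `MODEL_DIR` variable, the default repository ID, and the `--download` flag are illustrative choices, not RunPod conventions.

```python
import os
import sys
from typing import Dict, Mapping, Optional


def download_kwargs(env: Mapping[str, str] = os.environ) -> Dict[str, Optional[str]]:
    """Collect arguments for huggingface_hub.snapshot_download from the environment."""
    return {
        # Assumed default; override with MODEL_NAME at build time.
        "repo_id": env.get("MODEL_NAME", "stabilityai/stable-diffusion-3.5-medium"),
        # Hypothetical target directory inside the image.
        "local_dir": env.get("MODEL_DIR", "/app/model"),
        # Needed for gated repositories; None falls back to anonymous access.
        "token": env.get("HF_TOKEN"),
    }


def prefetch() -> str:
    """Download the model snapshot and return the local path."""
    # Imported lazily so the module can be inspected without the package.
    from huggingface_hub import snapshot_download

    return snapshot_download(**download_kwargs())


# Only hit the network when explicitly asked to, e.g. during `docker build`.
if "--download" in sys.argv[1:]:
    print("Model stored at", prefetch())
```

In a Dockerfile this could run as a build step such as `RUN python prefetch_model.py --download`, with the handler then passing the local directory to `from_pretrained` instead of a Hub ID. Supplying the token as a build secret, rather than a plain build argument, keeps it out of the image layers.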

Cost Management and GPU Selection

RunPod’s main appeal is its cost-effectiveness. To maximize savings, choose the right tool for the job. Use the Community Cloud for development, research, and non-sensitive workloads to get access to peer-to-peer GPUs at a fraction of the price. For production, use the Secure Cloud for reliability and data privacy. Most importantly, select the correct GPU. Don’t provision an A100 for a model that fits comfortably on an RTX A4000. Analyze your model’s VRAM requirements and computational needs to make an informed choice.
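A back-of-the-envelope VRAM check can guide GPU selection. The sketch below estimates weight memory from parameter count and data type; the 1.3x overhead multiplier for activations and runtime buffers is a rough assumption, not a measured figure, and real usage depends on resolution, batch size, and the inference library.

```python
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def weights_gib(num_params: float, dtype: str = "float16") -> float:
    """Memory needed for the weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


def fits(num_params: float, vram_gib: float, dtype: str = "float16",
         overhead: float = 1.3) -> bool:
    """True if weights plus an assumed overhead factor fit in the given VRAM."""
    return weights_gib(num_params, dtype) * overhead <= vram_gib


if __name__ == "__main__":
    params = 7e9  # e.g. a 7-billion-parameter model
    print(f"fp16 weights: {weights_gib(params):.1f} GiB")
    for vram in (16, 24, 48, 80):
        verdict = "fits" if fits(params, vram) else "too small"
        print(f"{vram:>3} GiB card: {verdict}")
```

Under these assumptions a 7-billion-parameter model in half precision needs roughly 13 GiB for weights, so a 16 GiB card is marginal while a 24 GiB card leaves headroom.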

Conclusion: Your Gateway to Scalable AI

RunPod lowers the barrier to high-end GPU computing, bridging the gap between cutting-edge research and practical application. By offering a flexible choice between persistent Pods for development and auto-scaling Serverless Endpoints for inference, it caters to the entire lifecycle of an AI project. As models from labs like Anthropic and Mistral AI continue to grow in complexity, platforms that simplify deployment and manage costs will become increasingly important. By following the practical steps and best practices outlined in this guide, from building an efficient container and handler to optimizing for cost and performance, you can deploy even demanding models with confidence. The next step is to apply these concepts to your own projects. Start with a RunPod template, experiment with different GPUs, and build toward scalable, on-demand AI.