Building Embodied AI: A Developer’s Guide to Concurrent Robotics Control with Go

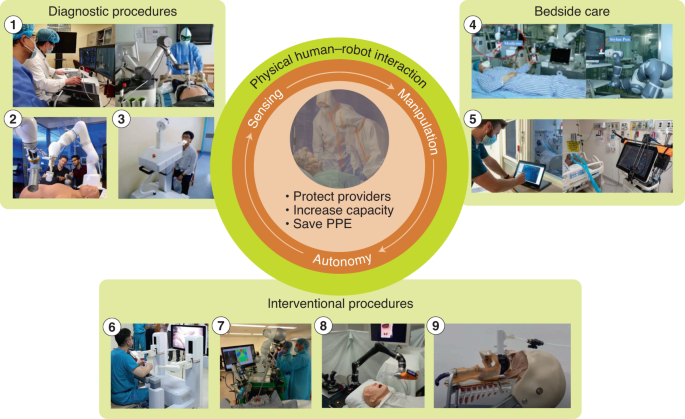

The field of artificial intelligence is undergoing a monumental shift. For years, the focus has been on models that exist purely in the digital realm: mastering language, generating images, and analyzing data. However, the latest wave of innovation, highlighted by advances in multimodal understanding and reasoning, is pushing AI into the physical world. This new frontier, often called Embodied AI, involves creating agents that can perceive, reason about, and interact with their physical surroundings. As recent announcements from Google DeepMind illustrate, the goal is to build AI systems that can perform complex, multi-step tasks in real-world environments, bridging the gap between digital intelligence and physical action.

Powering these physical agents requires a robust, concurrent, and highly performant software backbone. While Python dominates the AI model training landscape, languages like Go are exceptionally well-suited for building the control systems, data pipelines, and API servers that orchestrate these robotic agents. Go’s native support for concurrency via goroutines and channels provides an elegant and powerful paradigm for managing the simultaneous streams of sensor data, model inference, and actuator commands that are fundamental to robotics. This article explores how to architect such a system using Go, providing practical code examples to illustrate core concepts for building the next generation of embodied AI.

The Architectural Pillars of an Embodied AI System

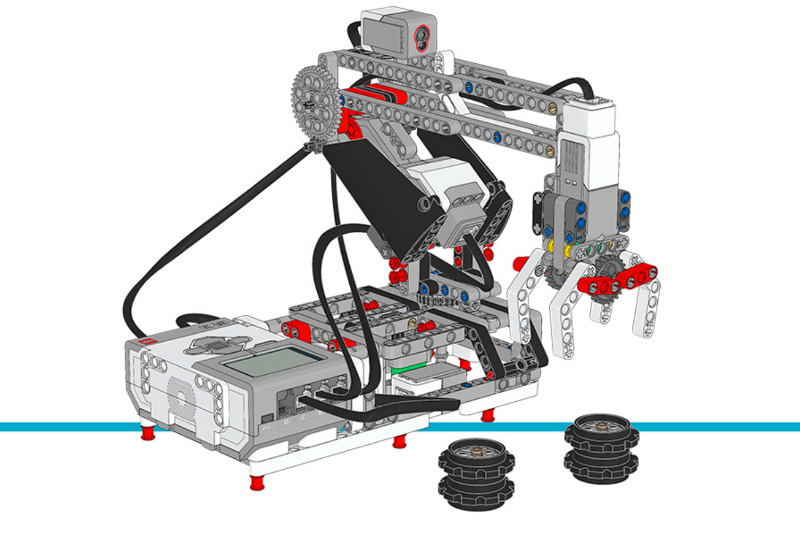

An embodied AI system can be broken down into three fundamental components: Perception, Planning, and Action. Together they form a loop that repeats continuously, allowing the agent to operate autonomously.

- Perception: This is how the agent senses the world. It involves processing data from various sensors like cameras, LiDAR, tactile sensors, and microphones. This raw data is often pre-processed and fed into a multimodal AI model.

- Planning: The “brain” of the operation. A sophisticated AI model, often a large multimodal model (LMM) trained with frameworks such as TensorFlow or PyTorch, takes the processed perception data as input. It reasons about the state of the world, interprets the user’s command (e.g., “pick up the red apple”), and breaks it down into a sequence of executable actions. This is why advances in the reasoning capabilities of models from labs such as OpenAI and Anthropic matter so much for robotics.

- Action: The agent executes the plan using its actuators—motors, grippers, and other physical components. This involves sending precise, low-level commands to the robot’s hardware.

In Go, we can model this system cleanly using interfaces. An interface defines a set of methods that a type must implement, allowing us to write generic, decoupled code. For our robot, we can define an Actuator interface that standardizes how the system executes a physical action, regardless of the specific hardware.

Defining Actions with Go Interfaces

Let’s define a simple Actuator interface and a few concrete types that implement it. This allows our planning module to generate a list of actions without needing to know the low-level details of how a robotic arm moves or a gripper closes.

```go
package main

import (
	"fmt"
	"time"
)

// Actuator defines the interface for any action a robot can perform.
type Actuator interface {
	Execute() error
}

// MoveArm represents an action to move the robot's arm to a target position.
type MoveArm struct {
	X, Y, Z float64
}

// Execute implements the Actuator interface for MoveArm.
func (m MoveArm) Execute() error {
	fmt.Printf("Executing: Move arm to (%.2f, %.2f, %.2f)\n", m.X, m.Y, m.Z)
	// Simulate the time it takes to move the arm.
	time.Sleep(1 * time.Second)
	fmt.Println("Action 'MoveArm' completed.")
	return nil
}

// ControlGripper represents an action to open or close the gripper.
type ControlGripper struct {
	Open bool
}

// Execute implements the Actuator interface for ControlGripper.
func (g ControlGripper) Execute() error {
	action := "Closing"
	if g.Open {
		action = "Opening"
	}
	fmt.Printf("Executing: %s gripper\n", action)
	// Simulate the time it takes to control the gripper.
	time.Sleep(500 * time.Millisecond)
	fmt.Println("Action 'ControlGripper' completed.")
	return nil
}

func main() {
	// A plan generated by the AI model.
	plan := []Actuator{
		MoveArm{X: 0.5, Y: 0.2, Z: 1.0},
		ControlGripper{Open: false}, // Close gripper
		MoveArm{X: 0.0, Y: 0.0, Z: 1.5},
	}

	fmt.Println("Starting execution of the plan...")
	for _, action := range plan {
		if err := action.Execute(); err != nil {
			fmt.Printf("Failed to execute action: %v\n", err)
			break // Stop if an action fails
		}
	}
	fmt.Println("Plan execution finished.")
}
```

Implementing a Concurrent Control Loop with Goroutines and Channels

A real robot doesn’t execute a static plan and then stop. It must continuously perceive and react. This is where Go’s concurrency model shines. We can use goroutines (lightweight threads managed by the Go runtime) and channels (typed conduits for communication between goroutines) to build a responsive, non-blocking control loop.

Let’s design a system with three concurrent processes:

- Sensor Goroutine: Simulates a camera, continuously capturing “frames” and sending them over a channel.

- Planner Goroutine: Represents the AI brain. It receives frames from the sensor channel, makes a decision (in our simulation, a simple one), and sends an Actuator command to an action channel. In a real system, this component would call a model, for example one built with Hugging Face Transformers and deployed on a platform like Vertex AI or AWS SageMaker.

- Controller Goroutine: Listens on the action channel and executes the commands it receives using the robot’s physical hardware.

This architecture decouples the components, allowing them to run independently and exchange data over channels instead of sharing memory guarded by complex locks or mutexes. This pipeline-of-stages pattern is common in high-performance systems, and its principles are echoed in distributed computing frameworks such as Ray and Apache Spark.

```go
package main

import (
	"fmt"
	"math/rand"
	"time"
)

// SensorData represents a snapshot from a robot's sensor.
type SensorData struct {
	Timestamp   time.Time
	ImageFrame  []byte // Simplified representation of an image
	ObjectFound bool
}

// Actuator defines the interface for any action a robot can perform.
type Actuator interface {
	Execute() error
}

// GraspObject is a concrete action.
type GraspObject struct{}

func (g GraspObject) Execute() error {
	fmt.Println("--> ACTION: Executing GraspObject.")
	time.Sleep(1 * time.Second)
	fmt.Println("--> ACTION: GraspObject complete.")
	return nil
}

// sensor simulates a camera feeding data into a channel.
func sensor(sensorDataChan chan<- SensorData) {
	for {
		found := rand.Intn(10) == 0 // 10% chance to "find" an object
		data := SensorData{
			Timestamp:   time.Now(),
			ImageFrame:  []byte("...image_data..."),
			ObjectFound: found,
		}
		fmt.Printf("SENSOR: Object found: %v. Sending data.\n", found)
		sensorDataChan <- data
		time.Sleep(500 * time.Millisecond) // Wait 500ms before capturing the next frame
	}
}

// planner simulates the AI brain making decisions.
func planner(sensorDataChan <-chan SensorData, actionChan chan<- Actuator) {
	for data := range sensorDataChan {
		fmt.Printf("PLANNER: Received sensor data from %s.\n", data.Timestamp.Format(time.RFC3339))
		if data.ObjectFound {
			fmt.Println("PLANNER: Object detected! Planning to grasp.")
			actionChan <- GraspObject{}
		}
	}
}

// controller executes actions received from the planner.
func controller(actionChan <-chan Actuator) {
	for action := range actionChan {
		fmt.Println("CONTROLLER: Received new action.")
		if err := action.Execute(); err != nil {
			fmt.Printf("CONTROLLER: Error executing action: %v\n", err)
		}
	}
}

func main() {
	// Create unbuffered channels for communication: each send waits for a receiver.
	sensorDataChan := make(chan SensorData)
	actionChan := make(chan Actuator)

	// Start the concurrent processes as goroutines.
	go sensor(sensorDataChan)
	go planner(sensorDataChan, actionChan)
	go controller(actionChan)

	// Let the simulation run until the process is interrupted.
	fmt.Println("Robotics control system is running. Press CTRL+C to exit.")
	select {} // Block forever
}
```

Integrating External AI Services and Managing State

Our previous example used a simplistic planner. In a real-world scenario, the planner goroutine would make an API call to a powerful AI model, hosted in a notebook environment like Google Colab during prototyping or on a production-grade platform like Azure Machine Learning or Amazon Bedrock. This model could be a fine-tuned version of a foundation model from providers such as Cohere or Mistral AI.

Let’s write a function the planner could use to call a hypothetical external inference service. It sends sensor data in an HTTP POST request and decodes the action returned in the response. For more complex interactions, developers might use tools from the LangChain or LlamaIndex ecosystems to structure prompts and parse outputs.

Calling an External Planner Service

```go
package main

import (
	"bytes"
	"context"
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// PlannerRequest is the request payload sent to the AI service.
type PlannerRequest struct {
	SensorID   string `json:"sensor_id"`
	ImageData  []byte `json:"image_data"` // encoding/json encodes []byte as base64
	RobotState string `json:"robot_state"`
}

// PlannerResponse is the action returned by the AI service.
type PlannerResponse struct {
	ActionName string                 `json:"action_name"`
	Params     map[string]interface{} `json:"params"`
}

// callPlannerService POSTs sensor data to a model inference endpoint and
// decodes the returned action. In a real system, the endpoint could be served
// by Triton Inference Server or vLLM, on Vertex AI, AWS SageMaker, Modal, or Replicate.
func callPlannerService(ctx context.Context, client *http.Client, endpoint string, data []byte) (*PlannerResponse, error) {
	reqBody, err := json.Marshal(PlannerRequest{
		SensorID:   "cam-01",
		ImageData:  data,
		RobotState: "idle",
	})
	if err != nil {
		return nil, fmt.Errorf("failed to marshal request: %w", err)
	}

	req, err := http.NewRequestWithContext(ctx, http.MethodPost, endpoint, bytes.NewReader(reqBody))
	if err != nil {
		return nil, fmt.Errorf("failed to build request: %w", err)
	}
	req.Header.Set("Content-Type", "application/json")

	fmt.Println("PLANNER: Calling external AI service...")
	resp, err := client.Do(req)
	if err != nil {
		return nil, fmt.Errorf("request failed: %w", err)
	}
	defer resp.Body.Close()

	// Check for a successful response before decoding it.
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("unexpected status: %s", resp.Status)
	}
	var plan PlannerResponse
	if err := json.NewDecoder(resp.Body).Decode(&plan); err != nil {
		return nil, fmt.Errorf("failed to decode response: %w", err)
	}
	return &plan, nil
}

func main() {
	// A local test server stands in for the real endpoint
	// (e.g. https://api.example.com/plan) and returns a fixed mock plan.
	mock := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		time.Sleep(150 * time.Millisecond) // Simulate network and inference latency
		w.Header().Set("Content-Type", "application/json")
		json.NewEncoder(w).Encode(PlannerResponse{
			ActionName: "MoveArm",
			Params:     map[string]interface{}{"X": 0.7, "Y": -0.3, "Z": 1.1},
		})
	}))
	defer mock.Close()

	// Always set a timeout: a hung planner must not stall the robot indefinitely.
	client := &http.Client{Timeout: 2 * time.Second}

	// Simulate receiving some image data.
	simulatedImageData := []byte("{'pixels': '...'}")

	plan, err := callPlannerService(context.Background(), client, mock.URL, simulatedImageData)
	if err != nil {
		fmt.Printf("Error getting plan: %v\n", err)
		return
	}
	fmt.Printf("Received plan from AI service: Action=%s, Params=%v\n", plan.ActionName, plan.Params)
}
```

Best Practices and Optimization Considerations

Building robust embodied AI systems requires attention to detail beyond the basic architecture. Here are some critical best practices and optimization strategies.

Graceful Shutdown and Error Handling

Your application must be able to shut down cleanly. Use Go’s context package to signal cancellation to all running goroutines. When a shutdown is initiated, the context is canceled, and each goroutine should detect this and terminate its loop, close resources, and exit.

State Management

While channels are great for communication, you’ll still need to manage shared state (e.g., the robot’s current position, gripper status). Use a struct to hold this state and protect concurrent read/write access with a sync.RWMutex to prevent race conditions.

Inference Optimization

The latency of the “Planning” step is often the bottleneck. To minimize it, use optimized inference runtimes and hardware. NVIDIA’s stack is a common choice here: GPUs combined with TensorRT for model optimization and Triton Inference Server for efficient serving. Exporting models to a portable format like ONNX also lets you run them on optimized runtimes, such as ONNX Runtime, across different hardware.

Experiment Tracking and MLOps

Developing the AI models for these agents is an iterative process. Use MLOps platforms such as MLflow, Weights & Biases, or ClearML to track experiments, manage datasets, and version models. This discipline is essential for reliable, reproducible behavior in physical agents.

Conclusion: Go-ing into the Physical World

The convergence of advanced AI and robotics is creating a new paradigm of intelligent, autonomous systems. As seen in the latest developments from labs like Google DeepMind, the ability to translate complex reasoning into physical action is becoming a reality. Building the software that powers these systems requires a language designed for concurrency, performance, and simplicity.

Go, with its first-class support for goroutines and channels, provides an exceptional toolkit for orchestrating the complex, asynchronous interactions between sensors, AI planners, and actuators. By leveraging clean interface-based design and a concurrent control loop architecture, developers can build a robust and scalable foundation for embodied AI applications. As AI models continue to grow in capability, the underlying control systems built with languages like Go will be more critical than ever in safely and effectively bringing that intelligence into our physical world.