Unpacking PyTorch 2.8: A Deep Dive into CPU-Accelerated LLM Inference

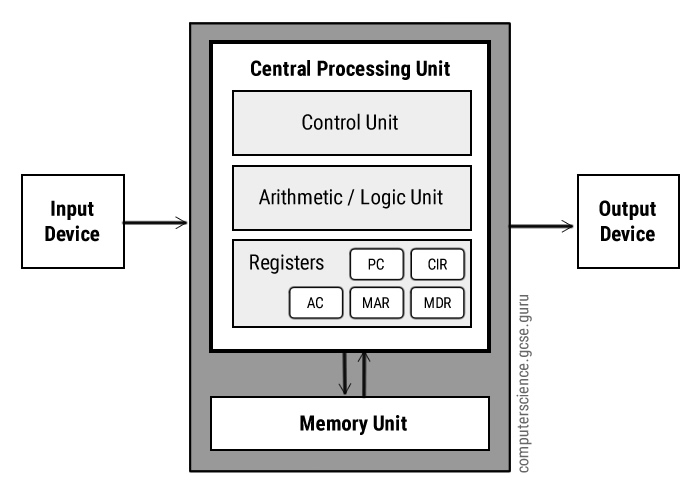

The world of artificial intelligence has long been dominated by the narrative that high-performance computing, especially for Large Language Models (LLMs), is the exclusive domain of specialized, power-hungry GPUs. While GPUs remain the undisputed champions for large-scale training and high-throughput batch inference, a significant shift is underway. Recent developments in the PyTorch ecosystem are broadening access to capable AI by substantially improving inference performance on ubiquitous Central Processing Units (CPUs).

The release of PyTorch 2.8 marks a pivotal moment in this evolution. Through a deep collaboration between Meta AI and Intel, this version introduces a suite of optimizations that specifically target modern CPU architectures. These advancements, centered on the `torch.compile` compiler and the Intel® Extension for PyTorch (IPEX), substantially speed up LLM inference on CPUs. This means developers and organizations can deploy sophisticated models more cost-effectively, run them on edge devices, or simply leverage existing CPU infrastructure without compromising too much on performance. This article provides a technical exploration of these features, complete with practical code examples and best practices for CPU-based LLM inference.

The Core Components: `torch.compile` and Intel Extensions

The performance gains in PyTorch 2.8 are not magic; they are the result of sophisticated software engineering that bridges the gap between high-level Python code and low-level hardware instructions. Two components are central to this achievement: PyTorch’s native compiler, `torch.compile`, and the specialized Intel Extension for PyTorch (IPEX).

Understanding `torch.compile`

Introduced in PyTorch 2.0, `torch.compile` is a just-in-time (JIT) compiler that transforms PyTorch programs into faster, optimized kernel code. It works by capturing a graph of your model’s operations and handing that graph to a backend compiler, which applies a series of optimizations and generates efficient code. This process reduces Python overhead, fuses multiple operations into single kernels, and optimizes memory access patterns. For CPU inference, the default Inductor backend generates C++ kernels, parallelized with OpenMP and vectorized for instruction sets such as AVX2 and AVX-512.
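To see the graph-capture step in isolation, `torch.compile` accepts a custom backend: a callable that receives each captured graph as a `torch.fx.GraphModule` plus example inputs, and returns a callable to run in its place. The sketch below uses a hypothetical `inspecting_backend` that prints the captured graph and runs it unchanged, so it performs no optimization and needs no C++ toolchain. The tiny `nn.Sequential` model is only for illustration.

```python
import torch
import torch.nn as nn

captured_graphs = []


def inspecting_backend(gm: torch.fx.GraphModule, example_inputs):
    """Hypothetical backend: record and print the captured graph, then run it as-is."""
    captured_graphs.append(gm)
    print(gm.code)  # Python source regenerated from the captured FX graph
    return gm.forward  # no optimization: execute the graph unchanged


model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 4)).eval()
compiled = torch.compile(model, backend=inspecting_backend)

x = torch.randn(2, 8)
with torch.no_grad():
    out = compiled(x)  # first call: graph capture happens here
    expected = model(x)  # eager reference

print("graphs captured:", len(captured_graphs))
```

Swapping `inspecting_backend` for the default `"inductor"` backend is what turns the same captured graph into generated, fused kernels.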

Using it is remarkably simple: a single call to `torch.compile` wraps your model or a specific function, and PyTorch handles the compilation behind the scenes. This non-intrusive approach often yields significant speedups with minimal code changes.

```python
import torch
import torch.nn as nn


# A simple neural network model
class SimpleModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.layer1 = nn.Linear(128, 256)
        self.relu = nn.ReLU()
        self.layer2 = nn.Linear(256, 10)

    def forward(self, x):
        x = self.layer1(x)
        x = self.relu(x)
        x = self.layer2(x)
        return x


# Create an instance of the model in evaluation mode
model = SimpleModel().eval()

# Optimize the model with torch.compile
# 'inductor' is the default backend, so backend="inductor" is optional here
optimized_model = torch.compile(model, backend="inductor")

# The first call triggers compilation; later calls reuse the compiled kernels
input_tensor = torch.randn(32, 128)
with torch.no_grad():
    output = optimized_model(input_tensor)

print("Model compiled successfully!")
print("Output shape:", output.shape)
```

The Power of Intel® Extension for PyTorch (IPEX)

While torch.compile provides a general optimization framework, the Intel® Extension for PyTorch (IPEX) provides hardware-specific enhancements. IPEX works in tandem with PyTorch to unlock the full potential of Intel Xeon processors. It brings optimizations for both training and inference, including:

- Advanced Operator Fusing: Combining multiple operations into a single, highly optimized kernel using libraries like oneDNN.

- Low-Precision Data Types: Efficient handling of BFloat16 (BF16) for training and inference, and INT8 for inference, which substantially reduces memory footprint and accelerates computation.

- Graph Optimization: Automatically converting the model graph to a more efficient representation for Intel hardware.

Integrating IPEX is as straightforward as importing the library and applying its `ipex.optimize()` function to your model (and, when training, to your optimizer).
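The two steps can be wrapped in a small helper. This is a minimal sketch, not an IPEX API: the helper name `optimize_for_cpu` is hypothetical, it falls back to the unmodified model when IPEX is not installed, and it pairs `ipex.optimize(..., dtype=torch.bfloat16)` with `torch.autocast` on the CPU so that inputs are cast to BF16 as well as weights.

```python
import importlib.util

import torch
import torch.nn as nn


def optimize_for_cpu(model: nn.Module, dtype=None) -> nn.Module:
    """Hypothetical helper: apply ipex.optimize() if IPEX is installed, else return the model."""
    model = model.eval()
    if importlib.util.find_spec("intel_extension_for_pytorch") is None:
        return model  # fallback: plain PyTorch eager model
    import intel_extension_for_pytorch as ipex

    return ipex.optimize(model, dtype=dtype)


# Toy model standing in for a real network
net = nn.Sequential(nn.Linear(64, 64), nn.ReLU(), nn.Linear(64, 8))
net = optimize_for_cpu(net, dtype=torch.bfloat16)

x = torch.randn(4, 64)
# BF16 autocast on CPU runs eligible ops (such as linear layers) in bfloat16
with torch.no_grad(), torch.autocast(device_type="cpu", dtype=torch.bfloat16):
    y = net(x)

print(y.shape, y.dtype)
```

BF16 autocast works on any recent x86 CPU, but the speedup is largest on processors with native BF16 instructions.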

Practical Implementation: Accelerating a Hugging Face LLM

Let’s move from theory to practice. A common use case is accelerating an LLM from the Hugging Face Hub for a task like text generation or feature extraction. We’ll use the Hugging Face Transformers library and demonstrate how to apply IPEX and torch.compile to boost inference speed on a CPU.

Setup and Baseline Inference

First, ensure you have the necessary libraries installed. You’ll need torch, transformers, and intel_extension_for_pytorch. We will use a relatively small model, like GPT-2, to make the example easy to run.
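Before benchmarking, it is worth confirming which versions are actually installed, since the features discussed here depend on PyTorch 2.8 and a matching IPEX release. The helper below is a hypothetical convenience built on the standard library's `importlib.metadata`; the names passed to it are pip distribution names.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each pip distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


report = installed_versions(["torch", "transformers", "intel-extension-for-pytorch"])
for name, version in report.items():
    print(f"{name}: {version or 'not installed'}")
```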

The following code establishes a baseline by measuring the inference time of a standard, unoptimized Hugging Face model.

```python
import time

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Ensure you are running on a CPU
device = "cpu"
model_name = "gpt2"

# Load model and tokenizer
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name).to(device)
model.eval()  # Set model to evaluation mode

# Input text
prompt = "PyTorch 2.8 has introduced significant performance improvements for"
inputs = tokenizer(prompt, return_tensors="pt").to(device)

# Greedy decoding; GPT-2 has no pad token, so reuse EOS to avoid a warning
gen_kwargs = dict(max_new_tokens=50, num_beams=1, pad_token_id=tokenizer.eos_token_id)

# --- Baseline Inference ---
print("Running baseline inference...")
with torch.no_grad():
    # Warm-up run, so the comparison with the (also warmed-up) optimized run is fair
    _ = model.generate(**inputs, **gen_kwargs)

    # Timed run
    start_time = time.perf_counter()
    output = model.generate(**inputs, **gen_kwargs)
    baseline_time = time.perf_counter() - start_time

generated_text = tokenizer.decode(output[0], skip_special_tokens=True)
print(f"Generated Text (Baseline): {generated_text}")
print(f"Baseline Inference Time: {baseline_time:.4f} seconds")
```
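A single timed call is sensitive to one-off costs and background noise. A somewhat more robust measurement, sketched below with the standard library only, runs a few warm-up calls and reports the median of several timed runs. `benchmark` is a hypothetical helper, and the commented usage lines assume the `model` and `inputs` objects from the baseline script.

```python
import statistics
import time
from typing import Callable


def benchmark(fn: Callable[[], object], warmup: int = 1, runs: int = 5) -> float:
    """Return the median wall-clock time of fn() in seconds, measured after warm-up calls."""
    for _ in range(warmup):
        fn()  # absorbs one-off costs such as torch.compile tracing or cache warm-up
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Example usage with the baseline objects (assumed to exist):
# with torch.no_grad():
#     seconds = benchmark(lambda: model.generate(**inputs, max_new_tokens=50, num_beams=1))
# print(f"Median generate() time: {seconds:.4f} s")
```

The median is used instead of the mean so that a single slow outlier run does not dominate the result.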

Applying IPEX and `torch.compile` for Optimization

Now, let’s apply the optimizations. The key steps are importing intel_extension_for_pytorch and using its optimize method before compiling with torch.compile. The ipex.optimize() function prepares the model by replacing certain PyTorch modules and functions with their optimized Intel counterparts. Combining these IPEX optimizations with the Inductor backend of `torch.compile` is how this example aims for the best CPU performance.

```python
import time

import torch
import intel_extension_for_pytorch as ipex
from transformers import AutoModelForCausalLM, AutoTokenizer

# Ensure you are running on a CPU
device = "cpu"
model_name = "gpt2"

# Load model and tokenizer
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name).to(device)
model.eval()

# Input text
prompt = "PyTorch 2.8 has introduced significant performance improvements for"
inputs = tokenizer(prompt, return_tensors="pt").to(device)
gen_kwargs = dict(max_new_tokens=50, num_beams=1, pad_token_id=tokenizer.eos_token_id)

# --- Optimized Inference ---
print("\nOptimizing model with IPEX and torch.compile...")

# 1. Apply IPEX optimization.
# dtype=torch.bfloat16 can provide further speedups on CPUs with native BF16 support.
# weights_prepack=False keeps plain weight tensors that torch.compile can trace,
# as the IPEX docs recommend when combining ipex.optimize with torch.compile.
optimized_model = ipex.optimize(model, dtype=torch.bfloat16, weights_prepack=False)

# 2. Apply torch.compile to the forward pass.
# .generate() calls forward() once per new token. Passing the whole module to
# torch.compile would not help here: attribute lookups such as .generate are
# forwarded to the original module, which then calls its uncompiled forward.
optimized_model.forward = torch.compile(optimized_model.forward, backend="inductor")

print("Running optimized inference...")
# BF16 autocast casts activations to match the BF16 weights
with torch.no_grad(), torch.autocast("cpu", dtype=torch.bfloat16):
    # Warm-up run: triggers JIT compilation (and possibly recompilations)
    _ = optimized_model.generate(**inputs, **gen_kwargs)

    # Timed run
    start_time = time.perf_counter()
    output_optimized = optimized_model.generate(**inputs, **gen_kwargs)
    optimized_time = time.perf_counter() - start_time

generated_text_optimized = tokenizer.decode(output_optimized[0], skip_special_tokens=True)
print(f"Generated Text (Optimized): {generated_text_optimized}")
print(f"Optimized Inference Time: {optimized_time:.4f} seconds")
```

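When you run this code, you should observe a noticeable reduction in inference time; the exact speedup depends on the CPU (for example, whether it supports BF16 natively), the thread count, and the model size. The generated text may also differ slightly from the FP32 baseline because of BF16 rounding. This shows how a few lines of code can leverage the latest work from Meta and Intel, making CPU inference a viable option for many applications that previously required GPUs.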
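One subtlety when combining `torch.compile` with `generate()`: the object returned by `torch.compile(module)` forwards attribute lookups it does not define to the original module, so calling `.generate()` on it runs the original module, and its internal `self(...)` calls use the uncompiled forward. Compiling the module's `forward` itself (`model.forward = torch.compile(model.forward)`) avoids this. The toy sketch below makes the difference visible with a counting backend; `Toy`, `decode_step` and `counting_backend` are hypothetical stand-ins for a model, its `generate()` loop, and a real compiler backend.

```python
import torch
import torch.nn as nn

graph_count = 0


def counting_backend(gm: torch.fx.GraphModule, example_inputs):
    """Hypothetical backend: count captured graphs, then run them unchanged."""
    global graph_count
    graph_count += 1
    return gm.forward


class Toy(nn.Module):
    def __init__(self):
        super().__init__()
        self.proj = nn.Linear(8, 8)

    def forward(self, x):
        return torch.relu(self.proj(x))

    def decode_step(self, x):
        # Stand-in for generate(): a method that calls self(...) internally
        return self(x) + 1


x = torch.randn(2, 8)

with torch.no_grad():
    # 1) Wrap the module: .decode_step is looked up on the original module,
    #    so self(x) inside it runs the uncompiled forward.
    wrapped = torch.compile(Toy(), backend=counting_backend)
    wrapped.decode_step(x)
    after_wrapped = graph_count

    # 2) Compile forward itself: self(x) now reaches the compiled function.
    model = Toy()
    model.forward = torch.compile(model.forward, backend=counting_backend)
    model.decode_step(x)
    after_patched = graph_count

print("graphs captured via wrapped module:", after_wrapped)
print("graphs captured via compiled forward:", after_patched - after_wrapped)
```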

Advanced Technique: Low-Precision Inference with INT8 Quantization

To push performance even further, we can employ quantization. This technique converts the model’s weights and/or activations from 32-bit floating point (FP32) to lower-precision integers such as 8-bit integers (INT8). This reduces the model’s memory footprint and lets the CPU use specialized integer arithmetic instructions (such as AVX-512 VNNI or AMX on recent Xeon processors). For token-by-token LLM generation, which is typically limited by memory bandwidth rather than compute, smaller weights also mean less data to move for every generated token. IPEX provides easy-to-use APIs for this purpose.
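To make the memory argument concrete, the arithmetic below computes weight storage at each precision for a hypothetical 7-billion-parameter model, chosen only for round numbers (scales, zero points and the KV cache are ignored). The second helper is a rough, assumption-laden upper bound for batch-1 decoding: if every weight must be read from memory once per generated token, tokens per second cannot exceed memory bandwidth divided by weight bytes. The 200 GB/s bandwidth figure is an arbitrary placeholder, not a measurement.

```python
def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Bytes needed to store the weights alone (no scales, zero points or KV cache)."""
    return num_params * bits_per_weight // 8


def decode_tokens_per_s_upper_bound(weight_bytes_total: int, bandwidth_bytes_per_s: float) -> float:
    """Rough roofline: each generated token reads every weight once from memory."""
    return bandwidth_bytes_per_s / weight_bytes_total


NUM_PARAMS = 7_000_000_000  # hypothetical model size
BANDWIDTH = 200e9  # hypothetical memory bandwidth, bytes per second

for label, bits in [("FP32", 32), ("BF16", 16), ("INT8", 8), ("INT4", 4)]:
    size = weight_bytes(NUM_PARAMS, bits)
    bound = decode_tokens_per_s_upper_bound(size, BANDWIDTH)
    print(f"{label}: {size / 1e9:5.1f} GB of weights, at most ~{bound:.0f} tokens/s")
```

Real throughput will be lower than this bound, but the ratio between rows shows why halving the bytes per weight tends to help decoding speed.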

Weight-Only Quantization (WOQ) for LLMs

For LLMs, a particularly effective method is Weight-Only Quantization (WOQ). In this approach, only the large weight matrices of the linear layers are quantized to INT8 or even INT4, while the activations (the data flowing between layers) remain in a higher-precision format such as BFloat16. This strikes a good balance between performance and accuracy, which matters because LLM accuracy is sensitive to quantizing activations. OpenVINO offers similar weight-compression options, reflecting an industry-wide trend toward low-precision inference.
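The idea behind per-channel weight-only quantization fits in a few lines of plain PyTorch. The sketch below is a hand-rolled illustration, not IPEX's implementation (IPEX uses fused kernels rather than materializing dequantized weights): it stores one symmetric INT8 scale per output channel of a linear layer's weight, then dequantizes on the fly and runs the matmul in BF16 while the activations stay in floating point. The function names are hypothetical.

```python
import torch
import torch.nn.functional as F


def quantize_per_channel_int8(weight: torch.Tensor):
    """Symmetric INT8 quantization with one scale per output channel of an [out, in] weight."""
    max_abs = weight.abs().amax(dim=1, keepdim=True).clamp(min=1e-8)
    scale = max_abs / 127.0
    q = torch.clamp(torch.round(weight / scale), -127, 127).to(torch.int8)
    return q, scale


def woq_linear(x, q, scale, bias=None, compute_dtype=torch.bfloat16):
    """Dequantize INT8 weights on the fly and run the matmul in compute_dtype."""
    w = q.to(compute_dtype) * scale.to(compute_dtype)
    b = None if bias is None else bias.to(compute_dtype)
    return F.linear(x.to(compute_dtype), w, b)


torch.manual_seed(0)
layer = torch.nn.Linear(64, 32)
weight = layer.weight.detach()
q, scale = quantize_per_channel_int8(weight)

x = torch.randn(4, 64)
ref = layer(x).detach()  # FP32 reference
out = woq_linear(x, q, scale, layer.bias.detach())
print("max abs error vs FP32:", (out.float() - ref).abs().max().item())
```

The INT8 tensor `q` takes a quarter of the FP32 weight storage; the per-channel `scale` adds only one value per output row.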

IPEX simplifies this process: you define a weight-only quantization configuration and apply it during the optimization step.

```python
import torch
import intel_extension_for_pytorch as ipex
from transformers import AutoModelForCausalLM, AutoTokenizer

# A small model keeps the example quick; WOQ benefits grow with model size.
# OPT is among the architectures ipex.llm.optimize() targets; check the IPEX
# documentation for the validated model list of your installed version.
model_name = "facebook/opt-125m"
model = AutoModelForCausalLM.from_pretrained(model_name).eval()
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Define the Weight-Only Quantization configuration (recent IPEX releases):
# INT8 weights, BF16 compute; group_size=-1 means per-channel quantization
qconfig = ipex.quantization.get_weight_only_quant_qconfig_mapping(
    weight_dtype=ipex.quantization.WoqWeightDtype.INT8,
    lowp_mode=ipex.quantization.WoqLowpMode.BF16,
    group_size=-1,
)

# Apply the quantization config together with IPEX's LLM optimizations
model = ipex.llm.optimize(model, dtype=torch.bfloat16, quantization_config=qconfig)
print("Model optimized with INT8 Weight-Only Quantization.")

# Example inference
prompt = "Quantization is a technique used in deep learning to"
inputs = tokenizer(prompt, return_tensors="pt")

with torch.inference_mode(), torch.autocast("cpu", dtype=torch.bfloat16):
    output = model.generate(**inputs, max_new_tokens=30)

generated_text = tokenizer.decode(output[0], skip_special_tokens=True)
print(f"\nGenerated Text (INT8 Quantized): {generated_text}")
```

This INT8-quantized model needs less memory for its weights and typically runs inference faster than its FP32 or BF16 counterparts, since each generated token requires reading fewer bytes of weights. This technique is valuable for deploying LLMs on edge devices or in resource-constrained cloud environments.

Best Practices and Ecosystem Integration

Achieving optimal performance involves more than just applying a compiler. Here are some best practices and considerations for integrating these CPU-optimized models into your MLOps workflow.

Performance Tuning and Considerations

- Use Profilers: Run PyTorch’s built-in profiler (`torch.profiler`) or Intel’s VTune Profiler to identify performance bottlenecks before and after optimization.

- Leverage Modern Memory Allocators: For multi-core CPU inference, an allocator such as `jemalloc` or `tcmalloc` (typically loaded via `LD_PRELOAD`) can reduce allocation contention and improve scaling.

- Batching: While these optimizations significantly improve single-request latency, batching inputs can still provide higher throughput. Experiment with different batch sizes to find the sweet spot for your application.

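- CPU Core Pinning: For multi-socket systems or to avoid “noisy neighbor” problems, use tools like `numactl` to pin your inference process to specific CPU cores and memory nodes, and set the number of intra-op threads (for example via `OMP_NUM_THREADS` or `torch.set_num_threads()`) to match the pinned cores.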
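As a starting point for the profiling advice above, the sketch below runs `torch.profiler` on a toy model; `record_function` adds a named region (here the arbitrary label `"inference_step"`) so your own code is easy to find in the table. In practice you would profile the real model, for example a `generate()` call, before and after each optimization.

```python
import torch
import torch.nn as nn
from torch.profiler import ProfilerActivity, profile, record_function

# Toy model standing in for the real network
model = nn.Sequential(nn.Linear(256, 512), nn.ReLU(), nn.Linear(512, 256)).eval()
x = torch.randn(32, 256)

with torch.no_grad(), profile(activities=[ProfilerActivity.CPU], record_shapes=True) as prof:
    with record_function("inference_step"):  # named region in the trace
        model(x)

# Aggregate by operator and show the most expensive entries
table = prof.key_averages().table(sort_by="cpu_time_total", row_limit=10)
print(table)
```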

Integration with MLOps and Serving Frameworks

An optimized model is only useful if it can be deployed effectively. These CPU-accelerated PyTorch models fit into the broader AI ecosystem.

- Experiment Tracking: Log performance metrics (latency, throughput) and model artifacts for different optimization strategies (e.g., BF16 vs. INT8) using tools like MLflow or Weights & Biases.

- Model Serving: Serve your compiled and quantized models via high-performance web frameworks like FastAPI or dedicated serving solutions like Triton Inference Server. Exporting to the ONNX format is another option for cross-platform serving.

- Cloud Deployment: These optimizations make it more cost-effective to deploy models on CPU instances on platforms like AWS SageMaker or Google Cloud Vertex AI, reducing reliance on expensive GPU instances for certain workloads.

Conclusion

The release of PyTorch 2.8 represents a significant leap forward in making high-performance AI more accessible and affordable. The combination of torch.compile, the Intel Extension for PyTorch, and advanced quantization techniques has turned modern CPUs into capable engines for LLM inference. This is not just an incremental update; it is a shift that lets developers build and deploy sophisticated AI applications without necessarily requiring specialized hardware.

Across the industry, from TensorFlow to JAX, the focus is on optimization and efficiency. By embracing these new tools in PyTorch, you can reduce operational costs, unlock new deployment scenarios on the edge, and build faster, more responsive AI-powered products. The next step is to take these code examples, apply them to your own models, and measure the gains on the hardware you already have.