The Next Leap in Semantic Search: Integrating ColBERT and Late Interaction Models with Sentence Transformers

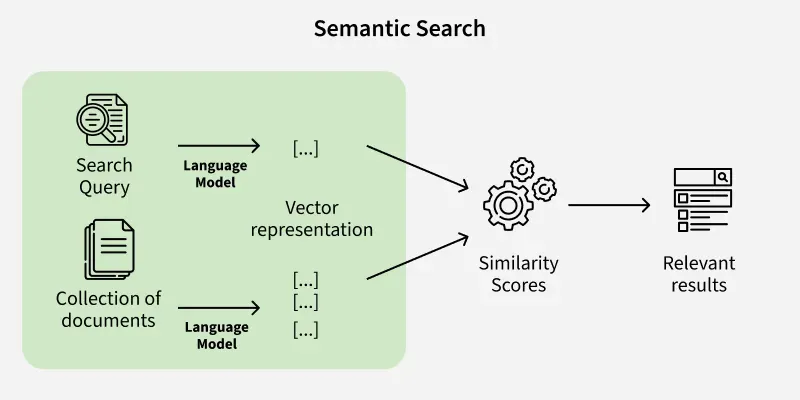

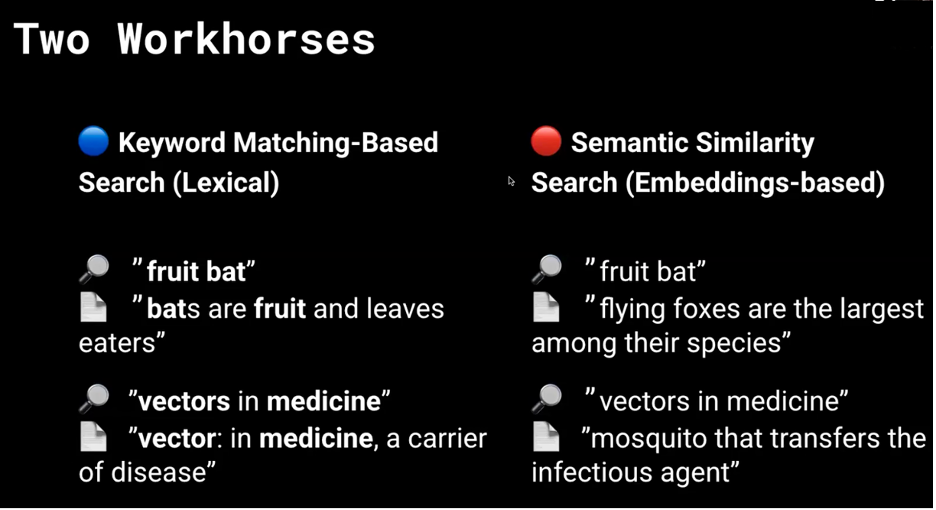

For years, the Sentence Transformers library has been the cornerstone of semantic search, enabling developers to effortlessly convert text into meaningful dense vector embeddings. This bi-encoder approach, where a query and a document are independently encoded into single vectors, has powered countless applications from recommendation engines to question-answering systems. However, as the demand for more nuanced and accurate information retrieval grows, the limitations of a single-vector representation are becoming apparent. The latest Sentence Transformers News signals a paradigm shift: the integration of more sophisticated architectures like ColBERT, moving beyond simple dense vectors towards fine-grained, late-interaction models.

This evolution addresses a core challenge in semantic search: the “semantic averaging” problem, where a single vector struggles to capture all the specific details and keywords within a long document. Late interaction models, by contrast, maintain token-level representations and perform comparisons at query time, offering a much higher degree of precision. This article provides a comprehensive technical deep-dive into this exciting development. We’ll explore the core concepts behind ColBERT, demonstrate its practical implementation within the Sentence Transformers ecosystem, and discuss advanced techniques and best practices for deploying these powerful models in production. This shift is a significant topic in the broader landscape of Hugging Face Transformers News and is set to redefine how we build next-generation retrieval systems.

From Dense Vectors to Late Interaction: A Conceptual Shift

To appreciate the innovation of ColBERT, we must first understand the architecture it improves upon. The traditional approach, popularized by Sentence Transformers, is a bi-encoder model. This architecture is elegant in its simplicity and efficiency, making it a go-to for many retrieval tasks.

The Bi-Encoder: Speed and Simplicity

In a bi-encoder system, two identical Transformer-based models (like BERT or RoBERTa) are used. One processes the query, and the other processes the document. Each is independently passed through the network to produce a single, fixed-size embedding (e.g., a 768-dimensional vector). The relevance score is then calculated using a simple similarity metric, typically cosine similarity.

The primary advantage is speed. You can pre-compute and index embeddings for your entire document corpus in a vector database like Pinecone News, Weaviate News, or Qdrant News. At query time, you only need to encode the user’s query and perform a fast nearest neighbor search against the pre-indexed vectors using libraries like FAISS News or Milvus News. This is incredibly efficient for large-scale systems.

# Standard Bi-Encoder approach using sentence-transformers

from sentence_transformers import SentenceTransformer, util

# Load a pre-trained bi-encoder model

model = SentenceTransformer('all-MiniLM-L6-v2')

# Our document corpus

documents = [

"The new NVIDIA Hopper GPU architecture delivers unprecedented performance.",

"PyTorch 2.0 introduced a new compiler called torch.compile for faster execution.",

"ColBERT is a late interaction model for information retrieval.",

"AWS SageMaker provides a suite of tools for building, training, and deploying ML models."

]

# 1. Pre-compute and "index" document embeddings

document_embeddings = model.encode(documents, convert_to_tensor=True)

# 2. A query arrives at search time

query = "What is ColBERT?"

# 3. Encode the query and find the most similar document

query_embedding = model.encode(query, convert_to_tensor=True)

# Compute cosine similarity

cosine_scores = util.cos_sim(query_embedding, document_embeddings)

# Find the best match

best_match_idx = cosine_scores.argmax()

print(f"Query: {query}")

print(f"Best matching document: '{documents[best_match_idx]}' with score: {cosine_scores[0][best_match_idx]:.4f}")However, this approach compresses the entire meaning of a document into one vector, potentially losing critical keywords or details. A query for “fast PyTorch compiler” might match the PyTorch document, but the model’s ability to specifically pinpoint “torch.compile” is limited.

Enter ColBERT: Contextualized Late Interaction

ColBERT (Contextualized Late Interaction over BERT) proposes a more granular approach. Instead of creating a single embedding for an entire document, it generates a contextualized embedding for *each token* in the document. The same is done for the query.

The “late interaction” happens at search time. Instead of comparing two single vectors, ColBERT compares each query token embedding to all document token embeddings. For each query token, it finds the most similar document token (Maximum Similarity, or MaxSim). These maximum similarity scores are then summed up to produce the final relevance score for the document. This process allows the model to match specific phrases and keywords with high fidelity, overcoming the semantic averaging issue. This architectural innovation is a key topic in recent PyTorch News and Meta AI News, as it showcases a move towards more complex, yet powerful, model designs.

Practical Implementation: Building a ColBERT-Powered Search Engine

While the theory is powerful, the real excitement comes from the increasing accessibility of these models. Libraries like `neural-cherche` are making it possible to implement ColBERT using familiar components from the Sentence Transformers and Hugging Face ecosystems, significantly lowering the barrier to entry.

Setting Up the Environment and Model

First, you’ll need to install the necessary libraries. This typically includes `torch`, `sentence-transformers`, and the library providing the ColBERT implementation. The models themselves are often hosted on the Hugging Face Hub, a central theme in Hugging Face News.

# Install the required libraries

# !pip install neural-cherche torch sentence-transformers

from neural_cherche import models, retrieve

# Our document collection with unique IDs

documents = {

"doc1": "The new NVIDIA Hopper GPU architecture delivers unprecedented performance, power efficiency, and scalability.",

"doc2": "PyTorch 2.0 introduced a new compiler called torch.compile for faster execution on various hardware backends.",

"doc3": "ColBERT is a contextualized late interaction model that computes relevance by matching query tokens to document tokens.",

"doc4": "LangChain and LlamaIndex are popular frameworks for building applications with large language models.",

"doc5": "Using ONNX Runtime can significantly accelerate model inference across different platforms."

}

# Initialize a ColBERT model from the Hugging Face Hub

# This model is specifically fine-tuned for retrieval tasks

colbert = models.ColBERT(

model_name_or_path="raphaelsty/colbert-tiny"

)

# Create a retriever pipeline

retriever = retrieve.ColBERT(

key="id",

on=["text"],

model=colbert

)Indexing Documents with Token-Level Embeddings

Unlike a bi-encoder, indexing with ColBERT is a more involved process. The model must generate and store embeddings for every token in every document. This results in a larger index but is the key to its high-resolution retrieval capabilities. The `neural-cherche` library abstracts away much of this complexity.

# Create a list of dictionaries for the retriever

doc_list = [{"id": k, "text": v} for k, v in documents.items()]

# Index the documents. This will generate and store token-level embeddings.

# In a real application, this index would be persisted to disk.

retriever.add(documents=doc_list)

print(f"Successfully indexed {len(doc_list)} documents.")

# Let's try a query

query = "What is the fast compiler in PyTorch?"

# The retriever handles query encoding and the MaxSim operation

results = retriever(q=query, k=3) # Retrieve top 3 documents

# Print the results

print(f"\nQuery: '{query}'")

for result in results:

print(f" - ID: {result['id']}, Score: {result['score']:.4f}, Text: {documents[result['id']]}")Notice how the query, which contains multiple specific concepts (“fast”, “compiler”, “PyTorch”), is able to precisely match the correct document. A standard bi-encoder might have been distracted by other documents mentioning related tech terms.

Advanced Techniques and Ecosystem Integration

Deploying a ColBERT-based system in production requires considering its unique characteristics and integrating it with the broader MLOps and AI application ecosystem, a space where we see constant updates from LangChain News and LlamaIndex News.

Fine-Tuning for Domain-Specific Performance

Pre-trained ColBERT models are a great starting point, but for maximum performance, fine-tuning on your specific domain (e.g., legal documents, medical papers, customer support tickets) is crucial. The fine-tuning process involves training the model on triplets of (query, positive_passage, negative_passage) to teach it the nuances of your data. Tracking these experiments is vital, and tools featured in MLflow News or Weights & Biases News are indispensable for managing model versions, parameters, and performance metrics. The computational resources required for this often lead developers to platforms like AWS SageMaker, Google Colab, or Azure Machine Learning News.

Powering Retrieval-Augmented Generation (RAG)

The most significant application for advanced retrieval is Retrieval-Augmented Generation (RAG). The quality of the context provided to a Large Language Model (LLM) from providers like OpenAI News, Cohere News, or Mistral AI News directly determines the quality of the final generated answer. By using ColBERT as the retriever, you can feed the LLM highly relevant, factually dense passages that contain the specific details needed to answer a query accurately.

# This is a conceptual example showing integration with an LLM framework

# Assume 'retriever' is the indexed ColBERT retriever from the previous example

# Assume 'llm' is an initialized LLM client (e.g., from OpenAI, Anthropic)

from langchain.prompts import ChatPromptTemplate

from langchain.schema.output_parser import StrOutputParser

from langchain.schema.runnable import RunnablePassthrough

# A hypothetical LLM client

# from langchain_openai import ChatOpenAI

# llm = ChatOpenAI(model="gpt-4-turbo")

# 1. Define the retrieval function

def get_retrieved_context(query: str) -> str:

results = retriever(q=query, k=2) # Get top 2 documents

context = "\n---\n".join([documents[res['id']] for res in results])

return context

# 2. Create a RAG prompt template

template = """

Answer the question based only on the following context:

{context}

Question: {question}

"""

prompt = ChatPromptTemplate.from_template(template)

# 3. Build the RAG chain using LangChain Expression Language

rag_chain = (

{"context": RunnablePassthrough() | get_retrieved_context, "question": RunnablePassthrough()}

| prompt

# | llm # This would be the LLM call

| StrOutputParser()

)

# 4. Invoke the chain

user_query = "How does ColBERT calculate relevance?"

# In a real run, this would call the retriever and then the LLM.

# We simulate the context retrieval part here for demonstration.

retrieved_docs = get_retrieved_context(user_query)

print("--- Retrieved Context for LLM ---")

print(retrieved_docs)

print("\n--- Final Prompt to LLM (Conceptual) ---")

print(prompt.format(context=retrieved_docs, question=user_query))This synergy between a high-precision retriever and a powerful generator is at the heart of modern AI applications, from chatbots to automated research assistants. Frameworks discussed in Haystack News are also rapidly adopting these advanced retrieval strategies.

Best Practices and Optimization Considerations

While ColBERT offers superior accuracy, it comes with trade-offs that require careful management in a production environment. Adhering to best practices is key to a successful deployment.

Managing Computational and Storage Costs

The most significant difference from bi-encoders is the resource footprint.

- Storage: A ColBERT index is significantly larger because it stores token-level embeddings. This requires careful planning of your storage solution, whether it’s on-premise or a cloud service.

- Computation: The late-interaction mechanism is more computationally intensive than a simple vector similarity search. For ultra-low latency requirements, you may need to employ powerful hardware like the latest GPUs discussed in NVIDIA AI News.

A common strategy is a two-stage retrieval pipeline: first, use a fast bi-encoder to retrieve a candidate set of (e.g., 100) documents, and then use ColBERT to re-rank this smaller set. This hybrid approach balances speed and accuracy effectively.

Optimizing for Inference

To maximize throughput, model optimization is critical. Techniques and tools like those from ONNX News or TensorRT News can be used to convert and compile the PyTorch model into a more efficient format for inference. Serving the model via a high-performance server like Triton Inference Server can further reduce latency and manage concurrent requests. For developers looking for managed solutions, platforms like Modal News or Replicate offer serverless GPU infrastructure that simplifies the deployment of complex models like ColBERT.

Monitoring and Evaluation

Once deployed, continuous monitoring is essential. Track metrics like retrieval latency, Mean Reciprocal Rank (MRR), and Recall. Tools like LangSmith News are emerging to help developers trace and evaluate the performance of complex RAG chains, providing invaluable insights into how your retriever is performing and where it might be failing.

Conclusion: The Future of Retrieval is Fine-Grained

The integration of late-interaction models like ColBERT into the mainstream Sentence Transformers ecosystem marks a pivotal moment for semantic search and information retrieval. We are moving from a world of “good enough” semantic similarity to one of high-fidelity, keyword-aware, and contextually precise retrieval. This leap in capability unlocks new potential for RAG systems, enabling them to provide more accurate, reliable, and trustworthy answers.

For developers and data scientists, this means embracing a new set of tools and trade-offs. While bi-encoders will remain a valuable tool for speed-critical applications, ColBERT and similar architectures represent the new frontier. By understanding their principles, learning the practical implementation patterns, and integrating them thoughtfully into the broader AI stack—from MLOps platforms like ClearML News to LLM frameworks like LangChain—we can build the next generation of intelligent applications that truly understand the nuances of human language. The latest Sentence Transformers News is clear: the future of retrieval is not just dense, it’s deep and detailed.