The Next Wave of AutoML: From Automated Model Building to Production-Ready MLOps

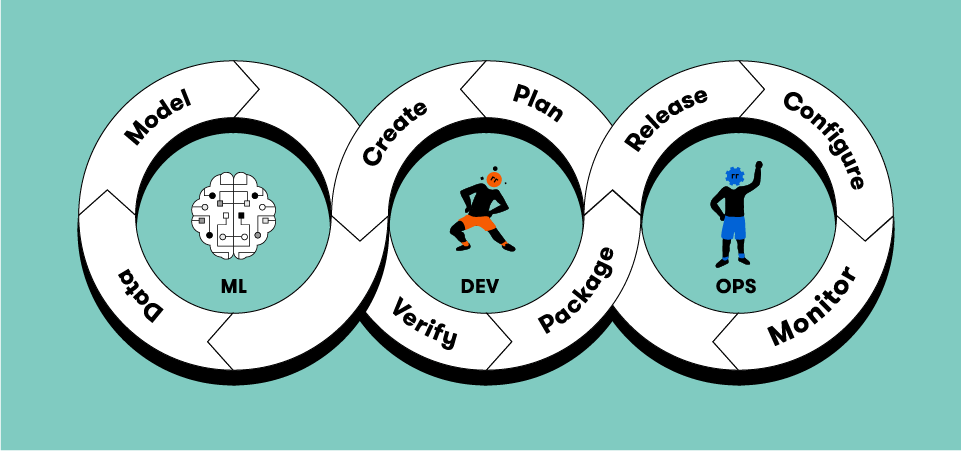

The landscape of machine learning is in constant flux, driven by the pursuit of efficiency, power, and accessibility. For years, Automated Machine Learning (AutoML) has promised to democratize AI by automating the complex, iterative, and often tedious tasks of model development. Early AutoML platforms focused primarily on hyperparameter optimization and algorithm selection. Recent developments, however, show a significant maturation. The conversation is no longer just about finding the best model; it’s about building reliable, transparent, and production-ready AI systems.

Today’s leading-edge AutoML solutions are deeply integrated into the MLOps lifecycle. Beyond simplified development environments, they provide real-time model insights, robust versioning, and seamless deployment pipelines. This evolution marks a critical shift from AutoML as a “black box” tool for data scientists to a transparent component of the modern enterprise AI stack. This article explores this new wave of AutoML, covering the core concepts, practical implementations with code, advanced techniques, and best practices that bridge the gap between automated experimentation and reliable, real-world results.

The Modern AutoML Stack: Beyond Hyperparameter Tuning

The core promise of AutoML is to automate the end-to-end process of applying machine learning to real-world problems. While hyperparameter tuning is a famous component, the modern AutoML stack covers a much broader and more sophisticated pipeline, making it a powerful accelerator for any data science team.

From Feature Engineering to Model Selection

A comprehensive AutoML workflow automates several critical stages that traditionally require significant manual effort and expertise:

- Data Preprocessing: Automatically handling missing values, encoding categorical features (one-hot, ordinal), and scaling numerical data (standardization, normalization).

- Feature Engineering & Selection: Creating new, potentially more predictive features from existing ones (e.g., polynomial features) and intelligently selecting the most impactful subset of features to reduce noise and model complexity.

- Algorithm Selection: Evaluating a wide range of model architectures, from classic algorithms like logistic regression and random forests to more complex ones like gradient boosting machines (XGBoost, LightGBM) and even neural networks built with TensorFlow or PyTorch.

- Hyperparameter Optimization (HPO): Systematically searching for the optimal set of hyperparameters for the chosen algorithm, using techniques like Bayesian optimization, genetic algorithms, or successive halving.
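
To make one of these search strategies concrete, here is a minimal, library-free sketch of successive halving. The `evaluate` function is a hypothetical stand-in for "train a model with this budget and return a validation score"; its shape, its noise level, and the candidate learning rates are assumptions chosen purely for illustration.

```python
import math
import random


def evaluate(learning_rate, budget, rng):
    """Hypothetical stand-in for 'train with this budget, return a validation score'.

    The score peaks at learning_rate = 0.1 and gets less noisy as the budget grows.
    """
    quality = 1.0 - abs(math.log10(learning_rate) + 1.0) / 3.0
    noise = rng.gauss(0.0, 0.02 / math.sqrt(budget))
    return quality + noise


def successive_halving(candidates, min_budget=1, eta=2, seed=0):
    """Keep the top 1/eta of the candidates each round, multiplying the budget by eta."""
    rng = random.Random(seed)
    survivors = list(candidates)
    budget = min_budget
    history = []  # (budget, number of candidates evaluated) for each round
    while len(survivors) > 1:
        scored = sorted(
            ((evaluate(lr, budget, rng), lr) for lr in survivors), reverse=True
        )
        history.append((budget, len(survivors)))
        keep = max(1, len(survivors) // eta)
        survivors = [lr for _, lr in scored[:keep]]
        budget *= eta
    return survivors[0], history


# Eight candidate learning rates between 1e-4 and roughly 0.32.
candidates = [10 ** exponent for exponent in (-4, -3.5, -3, -2.5, -2, -1.5, -1, -0.5)]
best, history = successive_halving(candidates)
print("rounds (budget, candidates):", history)
print("selected learning rate:", best)
```

The design point is that weak candidates are discarded after cheap, noisy evaluations, so most of the compute budget goes to the few configurations that survive.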

Libraries like auto-sklearn, built on the well-regarded scikit-learn library, encapsulate this entire process. auto-sklearn uses Bayesian optimization to search efficiently through the space of possible pipelines. Let’s see a practical example of how quickly you can build a high-performing classifier.

```python
# First, ensure you have the necessary libraries installed:
# pip install auto-sklearn scikit-learn

import autosklearn.classification
import sklearn.model_selection
import sklearn.datasets
import sklearn.metrics

# 1. Load a sample dataset
X, y = sklearn.datasets.load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = \
    sklearn.model_selection.train_test_split(X, y, random_state=42)

# 2. Initialize the AutoML classifier
# We give it a time limit of 120 seconds for the search
# and specify that it should use 4 parallel cores.
automl = autosklearn.classification.AutoSklearnClassifier(
    time_left_for_this_task=120,
    per_run_time_limit=30,
    n_jobs=4,
    seed=42
)

# 3. Start the search for the best model pipeline
print("Starting AutoML search...")
automl.fit(X_train, y_train)
print("AutoML search finished.")

# 4. Print the final ensemble and statistics
print("\n--- AutoML Results ---")
print(automl.show_models())
print("\n--- Leaderboard ---")
print(automl.leaderboard())

# 5. Evaluate the final model on the test set
y_hat = automl.predict(X_test)
accuracy = sklearn.metrics.accuracy_score(y_test, y_hat)
print(f"\nAccuracy score on test data: {accuracy:.4f}")
```

This single block of code replaces what could be hundreds of lines of manual preprocessing, model training, and tuning. This is the foundational power of modern AutoML tools, providing a strong baseline model with minimal effort.

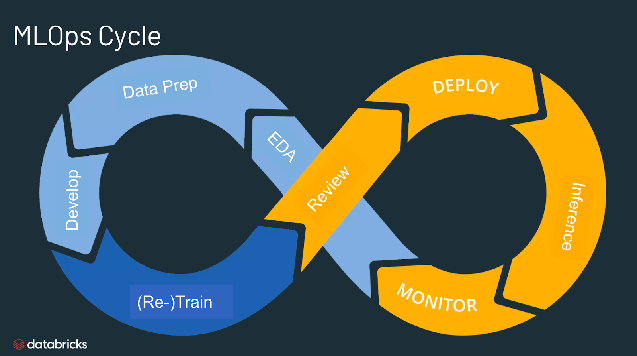

Implementing a Production-Ready AutoML Workflow with MLOps

Finding a great model is only the first step. To deliver real business value, that model must be reproducible, auditable, and deployable. This is where integrating AutoML with MLOps platforms like MLflow and Weights & Biases, or with cloud-native solutions like AWS SageMaker and Vertex AI, becomes critical.

Integrating AutoML with Experiment Tracking

When an AutoML tool runs, it may evaluate hundreds of different pipelines. Without a robust tracking system, these results are ephemeral and difficult to compare. Integrating an experiment tracking tool like MLflow allows you to log every aspect of the AutoML search process.

By logging runs, you can:

- Preserve Results: Save the metrics, parameters, and model artifacts of every pipeline tested.

- Ensure Reproducibility: Capture the exact code, data version, and environment used to produce a model.

- Model Lineage: Maintain a clear history of how a model was created, which is crucial for governance and debugging.

- Compare and Analyze: Easily compare different AutoML runs (e.g., with different time budgets or data subsets) to understand what works best.

Here’s how you can extend the previous example to record the search in MLflow. auto-sklearn has no built-in MLflow integration, so the script logs each evaluated pipeline explicitly, as a nested child run, once the search has finished.

```python
# Prerequisites:
# pip install auto-sklearn scikit-learn mlflow

import autosklearn.classification
import sklearn.model_selection
import sklearn.datasets
import sklearn.metrics
import mlflow
import mlflow.sklearn

# Load data (same as before)
X, y = sklearn.datasets.load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = \
    sklearn.model_selection.train_test_split(X, y, random_state=42)

SEARCH_TIME_SECONDS = 120

# Set up MLflow experiment
mlflow.set_experiment("AutoML_Digits_Classification")

with mlflow.start_run(run_name="AutoSklearn_Run"):
    # Initialize the AutoML classifier
    automl = autosklearn.classification.AutoSklearnClassifier(
        time_left_for_this_task=SEARCH_TIME_SECONDS,
        per_run_time_limit=30,
        seed=42,
        # Keep auto-sklearn's intermediate files in a known directory
        # (auto-sklearn expects to create this directory itself)
        tmp_folder='/tmp/autosklearn_mlflow_log_tmp',
    )

    print("Starting AutoML search with MLflow logging...")
    automl.fit(X_train, y_train, dataset_name='digits')
    print("AutoML search finished.")

    # Log the search budget on the parent run
    mlflow.log_param("total_search_time_seconds", SEARCH_TIME_SECONDS)

    # Log every pipeline auto-sklearn evaluated as a nested child run
    results = automl.cv_results_
    trials = zip(results["params"], results["mean_test_score"], results["status"])
    for i, (params, score, status) in enumerate(trials):
        with mlflow.start_run(run_name=f"trial_{i}", nested=True):
            mlflow.log_params(params)
            mlflow.set_tag("status", status)
            mlflow.log_metric("validation_score", float(score))

    # Save the leaderboard of ensemble members as a text artifact
    mlflow.log_text(automl.leaderboard().to_csv(), "leaderboard.csv")

    # Evaluate and log the final model's performance
    y_hat = automl.predict(X_test)
    accuracy = sklearn.metrics.accuracy_score(y_test, y_hat)
    mlflow.log_metric("final_test_accuracy", accuracy)
    print(f"\nFinal Test Accuracy: {accuracy:.4f}")

    # Log the final ensemble itself for later deployment
    mlflow.sklearn.log_model(automl, "best_automl_model")

print("\nModel, parameters, and metrics logged to MLflow.")
print("To view the UI, run 'mlflow ui' in your terminal.")
```

After running this script, you can launch the MLflow UI to see the parent run with one nested child run per pipeline that auto-sklearn evaluated, alongside the leaderboard and the final model artifact. This makes the search transparent and ready for production handoff.

Advanced AutoML: Gaining Transparency and Control

One of the most persistent criticisms of AutoML has been its “black box” nature. However, the field is rapidly evolving to provide greater transparency and developer control, moving away from a fully automated approach to a more collaborative “human-in-the-loop” paradigm.

Demystifying Models with Explainability

Understanding *why* a model makes certain predictions is essential for building trust, debugging, and ensuring fairness. Modern AutoML libraries are increasingly incorporating model explainability features. The final model produced by auto-sklearn is an ensemble of scikit-learn compatible pipelines, meaning you can use standard tools like SHAP (SHapley Additive exPlanations) or inspect model properties directly.

For tree-based models, which are often top performers in AutoML tasks, we can directly access feature importances to understand which data attributes are most influential.

```python
# This code assumes you have a trained 'automl' object from the previous examples.
# Note: This will only work if the inspected model is a tree-based estimator.

import numpy as np
import matplotlib.pyplot as plt

# The final model is a weighted ensemble. get_models_with_weights() returns
# (weight, pipeline) pairs; for simplicity, inspect the highest-weighted member.
weight, final_model = max(automl.get_models_with_weights(), key=lambda pair: pair[0])

try:
    # The last pipeline step is auto-sklearn's classifier wrapper; the fitted
    # scikit-learn estimator sits underneath it. The attribute names below are
    # an assumption based on recent auto-sklearn releases.
    estimator = final_model.steps[-1][1].choice.estimator
    importances = estimator.feature_importances_

    # Importances refer to the features that reach the estimator after
    # auto-sklearn's preprocessing, which may differ from the raw input columns.
    n_features = len(importances)
    indices = np.argsort(importances)[::-1]

    # Create a simple plot
    plt.figure(figsize=(12, 6))
    plt.title("Feature Importances from AutoML Best Model")
    plt.bar(range(n_features), importances[indices], align="center")
    plt.xticks(range(n_features), indices)
    plt.xlim([-1, n_features])
    plt.xlabel("Feature Index")
    plt.ylabel("Importance")
    plt.show()

    print("Top 5 most important feature indices:", indices[:5])

except AttributeError:
    print("The inspected model does not have a 'feature_importances_' attribute (e.g., it might be an SVM or KNN).")
except Exception as e:
    print(f"An error occurred: {e}")
```

Customizing the Search Space for Expert Guidance

While letting AutoML explore freely is powerful, domain experts often have valuable intuition. Advanced usage involves guiding the search process. For instance, you can constrain the search space to include or exclude specific algorithms or preprocessors. This is particularly useful when you know certain models are too slow for your inference requirements or that specific data preparation steps are necessary. Libraries like Optuna and Ray, which often power these AutoML frameworks, provide fine-grained control over the optimization process, allowing for this level of customization.
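
The idea of constraining the search can be sketched without any particular framework. In the example below, `SEARCH_SPACE`, `constrain`, and `sample_configuration` are hypothetical names invented for illustration, and the hyperparameter grids are arbitrary; real libraries expose the same idea through their own options (auto-sklearn, for example, accepts `include` and `exclude` arguments on its estimators).

```python
import random

# Hypothetical search space: algorithm name -> tunable hyperparameters.
SEARCH_SPACE = {
    "logistic_regression": {"C": [0.01, 0.1, 1.0, 10.0]},
    "random_forest": {"n_estimators": [100, 300], "max_depth": [4, 8, None]},
    "gradient_boosting": {"learning_rate": [0.03, 0.1], "n_estimators": [200, 500]},
    "k_nearest_neighbors": {"n_neighbors": [3, 5, 11]},
}


def constrain(space, include=None, exclude=()):
    """Return only the part of the search space that the expert allows."""
    names = include if include is not None else space.keys()
    return {name: space[name] for name in names if name not in exclude}


def sample_configuration(space, rng):
    """Draw one random (algorithm, hyperparameters) candidate from the space."""
    algorithm = rng.choice(sorted(space))
    params = {key: rng.choice(values) for key, values in space[algorithm].items()}
    return algorithm, params


# Suppose k-NN is known to be too slow at inference time for this service.
allowed = constrain(SEARCH_SPACE, exclude={"k_nearest_neighbors"})

rng = random.Random(0)
samples = [sample_configuration(allowed, rng) for _ in range(5)]
for algorithm, params in samples:
    print(algorithm, params)
```

Because the excluded algorithm never enters the space, no search budget is spent evaluating candidates that could not be deployed anyway.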

The Future is Composable: AutoML in the Age of LLMs

Recent announcements from OpenAI and Hugging Face are dominated by Large Language Models (LLMs), and AutoML is adapting to this new paradigm. Instead of just optimizing traditional ML models, AutoML principles are now being applied to optimize complex, multi-step LLM-powered pipelines.

AutoML for Optimizing RAG Pipelines

Retrieval-Augmented Generation (RAG) is a popular technique for making LLMs more accurate and context-aware. A RAG pipeline has many “hyperparameters” that can be tuned, such as:

- The chunking strategy for documents.

- The type of embedding model used (e.g., from Sentence Transformers).

- The number of documents to retrieve (`top_k`).

- The prompt template fed to the LLM.

Frameworks like LangChain and LlamaIndex make it easy to build these pipelines, and optimization tools like Optuna can be used to tune them. We can define an “objective function” that measures the quality of the RAG output and let the optimizer find the best combination of parameters.

```python
# This is a conceptual example demonstrating the logic.
# A full implementation requires a dataset and evaluation metric for RAG.
# pip install optuna llama-index-core
# Note: VectorStoreIndex and the query engine also need an embedding model
# and an LLM to be configured before this script will run.

import optuna
from llama_index.core import VectorStoreIndex, SimpleDirectoryReader
from llama_index.core.node_parser import SimpleNodeParser

# Assume 'evaluate_rag_quality' is a function you've defined
# that takes a response and ground truth, and returns a quality score.
def evaluate_rag_quality(response, ground_truth):
    # E.g., using RAGAs; here, a simple case-insensitive substring match
    return float(ground_truth.lower() in response.lower())

# Load documents once (assuming they are in a './data' directory)
documents = SimpleDirectoryReader("./data").load_data()

# 1. Define the objective function for Optuna
def objective(trial):
    # Suggest hyperparameters to tune
    chunk_size = trial.suggest_int("chunk_size", 256, 1024, step=128)
    top_k = trial.suggest_int("top_k", 1, 5)

    # --- Build and run the RAG pipeline with these parameters ---
    # Create a parser with the suggested chunk_size
    parser = SimpleNodeParser.from_defaults(chunk_size=chunk_size)
    nodes = parser.get_nodes_from_documents(documents)

    # Create the index
    index = VectorStoreIndex(nodes)

    # Create the query engine with the suggested top_k
    query_engine = index.as_query_engine(similarity_top_k=top_k)

    # --- Evaluate the pipeline ---
    query = "What is the main topic of the documents?"
    ground_truth_answer = "placeholder_implementation"  # Your expected answer
    response = query_engine.query(query)
    quality_score = evaluate_rag_quality(str(response), ground_truth_answer)
    return quality_score

# 2. Create and run the Optuna study
study = optuna.create_study(direction="maximize")
study.optimize(objective, n_trials=50)

# 3. Print the best parameters found
print("Best trial:")
trial = study.best_trial
print(f"  Value: {trial.value}")
print("  Params: ")
for key, value in trial.params.items():
    print(f"    {key}: {value}")
```

This approach transforms LLM pipeline development from a manual, guess-and-check process into a systematic, data-driven optimization task, showcasing the versatility of AutoML principles.

Best Practices and Common Pitfalls

To leverage AutoML effectively, it’s crucial to follow best practices and be aware of potential pitfalls.

Best Practices

- Start with a Strong Baseline: Before turning to AutoML, establish a simple, manually-tuned baseline model. This helps you judge whether the complexity of AutoML is providing a significant lift.

- Understand Your Data: The “garbage in, garbage out” principle still applies. Ensure your data is clean, relevant, and well-understood before feeding it into an automated pipeline.

- Set Realistic Time Budgets: AutoML can be computationally intensive. Define clear time constraints for the search process to manage costs and get results in a reasonable timeframe.

- Use MLOps from Day One: As demonstrated, integrate tools like MLflow or ClearML from the beginning to ensure your experiments are tracked and reproducible.

- Hold Out a Final Test Set: AutoML optimizes models on a validation set. Always evaluate the final, chosen model on a completely separate, held-out test set to get an unbiased estimate of its real-world performance.
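
A small simulation illustrates why the held-out test set matters. This sketch assumes a deliberately extreme setup in which every candidate "model" guesses at random, so any validation score above 50% is pure luck; the sample sizes and the number of candidates are arbitrary choices for illustration.

```python
import random

rng = random.Random(0)
N_VALIDATION, N_TEST, N_CANDIDATES = 100, 10_000, 500


def random_guess_accuracy(n_samples):
    """Accuracy of a coin-flip classifier on n_samples balanced binary labels."""
    correct = sum(rng.random() < 0.5 for _ in range(n_samples))
    return correct / n_samples


# Score every candidate on the small validation set.
validation_scores = [random_guess_accuracy(N_VALIDATION) for _ in range(N_CANDIDATES)]

# The search selects the candidate with the best validation score...
best_validation = max(validation_scores)

# ...but on fresh data that candidate is still only a coin flip.
held_out = random_guess_accuracy(N_TEST)

print(f"validation accuracy of the selected candidate: {best_validation:.3f}")
print(f"held-out test accuracy of the same candidate:  {held_out:.3f}")
```

The more candidates a search evaluates against the same validation data, the more optimistic the winning validation score becomes, which is why only the held-out estimate should be reported.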

Common Pitfalls

- Overfitting the Validation Set: Because AutoML tests so many models, it can sometimes find a pipeline that performs well on the validation data by chance. A final hold-out test is the best defense.

- Ignoring Domain Knowledge: Don’t let automation replace critical thinking. Use your domain expertise to guide feature engineering and constrain the model search space.

- Computational Cost Overruns: Failing to set limits on search time or resources can lead to unexpectedly high cloud computing bills.

- Choosing the Wrong Optimization Metric: Blindly optimizing for accuracy can be misleading in cases of class imbalance. Choose a metric (e.g., F1-score, AUC) that aligns with your actual business goal.
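
The last pitfall is easy to verify with a few lines of arithmetic. The sketch below uses a made-up dataset of 95 negatives and 5 positives and two hypothetical models; the helper functions are plain-Python stand-ins for the equivalent library metrics.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """F1 for the positive class; defined as 0.0 when nothing can be scored."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


# Hypothetical imbalanced dataset: 95 negatives followed by 5 positives.
y_true = [0] * 95 + [1] * 5

# Model A never predicts the positive class.
always_negative = [0] * 100

# Model B raises 6 false alarms but finds 4 of the 5 positives.
useful_model = [0] * 89 + [1] * 6 + [1] * 4 + [0] * 1

for name, y_pred in [("always negative", always_negative), ("useful model", useful_model)]:
    print(f"{name}: accuracy={accuracy(y_true, y_pred):.2f}, F1={f1_score(y_true, y_pred):.2f}")
```

Accuracy ranks the model that never finds a positive above the one that finds four out of five, while F1 ranks them the other way around. An AutoML search optimizing accuracy on data like this would be steered toward the useless model.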

Conclusion

The narrative around AutoML has fundamentally changed. It has evolved from a niche tool for automated model selection into a cornerstone of the modern, efficient MLOps stack. The latest trends in AutoML emphasize not just automation, but also transparency, control, and deep integration with production systems. By combining the power of automated search with the rigor of MLOps platforms like MLflow and the flexibility to guide the process, teams can dramatically accelerate their path from raw data to deployed, value-generating AI.

Furthermore, the extension of AutoML principles to optimize complex new architectures, such as LLM-based RAG systems, demonstrates its enduring relevance. The future of AI development isn’t about choosing between manual control and full automation; it’s about finding the intelligent synthesis of both. As you embark on your next machine learning project, consider how a modern, MLOps-integrated AutoML workflow can serve not as a replacement for your expertise, but as a powerful amplifier of it.