A Deep Dive into Direct Preference Optimization (DPO) on AWS SageMaker for Advanced LLM Customization

Introduction: Beyond Supervised Fine-Tuning

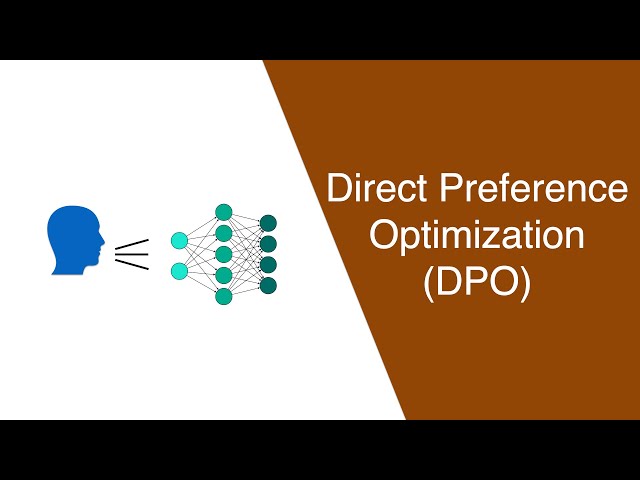

The landscape of Large Language Models (LLMs) is evolving at a breakneck pace. While supervised fine-tuning (SFT) has become a standard practice for adapting foundation models to specific domains, it often falls short in capturing the nuanced, subjective qualities that define a truly helpful and aligned AI. How do we teach a model a specific tone, a particular conversational style, or adherence to complex safety guidelines? The answer has traditionally been Reinforcement Learning from Human Feedback (RLHF), a powerful but notoriously complex and unstable process. Recent developments, highlighted in the latest AWS SageMaker News, are changing the game by championing a more direct and efficient approach: Direct Preference Optimization (DPO).

DPO reframes the alignment problem, moving away from the multi-stage complexity of RLHF—which involves training a separate reward model and then using reinforcement learning—to a more elegant, end-to-end classification task. It allows developers to directly optimize a language model on a dataset of human preferences, making the process of instilling desired behaviors more stable, computationally cheaper, and easier to implement. This article provides a comprehensive technical guide on how to leverage DPO within the robust and scalable AWS SageMaker ecosystem to customize foundation models, turning generic text generators into highly specialized and aligned AI assistants.

Section 1: Core Concepts – The Shift from RLHF to DPO

To fully appreciate the innovation of DPO, it’s essential to understand the paradigm it improves upon. The quest for AI alignment is one of the most critical topics in the AI community, with discussions frequently appearing in OpenAI News and Google DeepMind News.

The Complexity of Reinforcement Learning from Human Feedback (RLHF)

RLHF has been the gold standard for aligning powerful models like GPT-4 and Claude. However, its implementation is a significant engineering challenge involving three distinct stages:

- Supervised Fine-Tuning (SFT): A base model is first fine-tuned on a high-quality dataset of prompt-response pairs to adapt it to a specific domain or task.

- Reward Model Training: Human annotators are presented with several responses to a single prompt and asked to rank them. This preference data is used to train a separate “reward model” that learns to predict which response a human would prefer.

- RL Optimization: The SFT model is further fine-tuned using a reinforcement learning algorithm, typically Proximal Policy Optimization (PPO). The reward model provides the “reward” signal, guiding the LLM to generate responses that score higher on the human preference scale.

This process is brittle, computationally expensive, and requires careful hyperparameter tuning to avoid issues like “reward hacking,” where the model exploits the reward model’s flaws instead of genuinely improving.

Direct Preference Optimization (DPO): A More Elegant Solution

DPO, introduced in the paper “Direct Preference Optimization: Your Language Model is Secretly a Reward Model,” simplifies this entire workflow. The key insight is that the RLHF objective can be optimized directly on the preference data without explicitly training a reward model. DPO uses a simple binary cross-entropy loss to increase the relative log-probability of preferred responses over rejected ones.

The only requirement is a preference dataset, which consists of triplets: (prompt, chosen_response, rejected_response). This is the same data used to train an RLHF reward model. Here’s what a sample from such a dataset might look like:

# Example structure for a preference dataset

preference_dataset = [

{

"prompt": "Explain the concept of quantum entanglement in simple terms.",

"chosen": "Imagine you have two coins that are magically linked. If you flip one and it lands on heads, you instantly know the other one is tails, no matter how far apart they are. That's the basic idea of entanglement.",

"rejected": "Quantum entanglement is a physical phenomenon that occurs when a pair or group of particles is generated in such a way that the quantum state of each particle of the pair cannot be described independently of the state of the others."

},

{

"prompt": "Write a short, upbeat marketing email for a new coffee blend.",

"chosen": "Subject: Your Morning Just Got Brighter! ☀️ Say hello to 'Sunrise Brew,' our vibrant new blend designed to kickstart your day with a smile. Taste the sunshine!",

"rejected": "Subject: New Product. This email is to inform you of the launch of our new coffee product, 'Sunrise Brew.' It is a new blend of beans."

}

]By directly optimizing on these pairs, DPO provides a stable, lightweight, and highly effective method for model alignment, making it a hot topic in recent Meta AI News and a perfect fit for managed platforms like AWS SageMaker.

Section 2: Practical Implementation with SageMaker and Hugging Face

Let’s move from theory to practice. Implementing DPO is remarkably accessible thanks to the Hugging Face ecosystem, particularly the TRL (Transformer Reinforcement Learning) library, which can be seamlessly integrated with AWS SageMaker for scalable training.

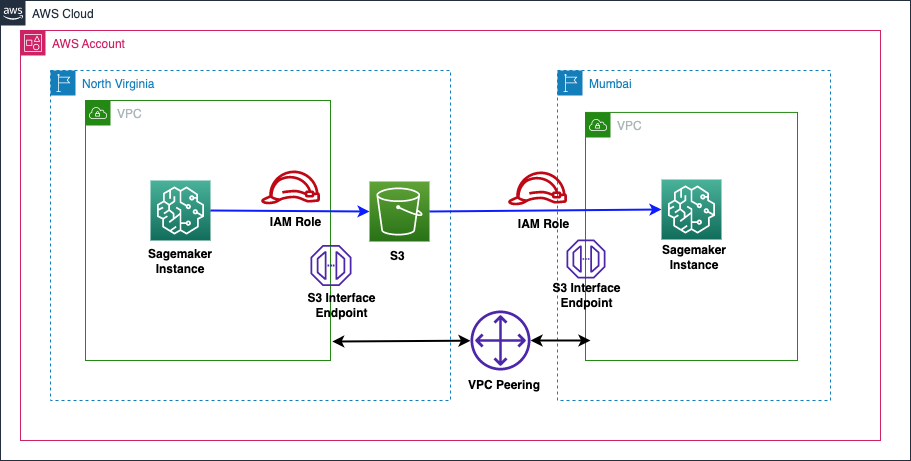

Preparing the Environment and Dataset

First, you need an AWS account and a SageMaker Studio environment. Your preference dataset should be formatted and uploaded to Amazon S3. The Hugging Face datasets library is an excellent tool for loading and preparing your data. The dataset must contain three columns, typically named prompt, chosen, and rejected.

You can create or load your dataset and push it to the Hugging Face Hub or directly to S3 for SageMaker to access. This interoperability is a key theme in recent Hugging Face Transformers News.

Building the DPO Training Script

The core of the implementation is the training script. TRL’s DPOTrainer abstracts away most of the complexity. Here is a foundational Python script that you would use as the entry point for a SageMaker Training Job.

import os

import torch

from datasets import load_dataset

from transformers import AutoModelForCausalLM, AutoTokenizer, TrainingArguments

from trl import DPOTrainer

from peft import LoraConfig

# 1. Load Model and Tokenizer

# It's recommended to start from an SFT-tuned model

model_name = "mistralai/Mistral-7B-Instruct-v0.2"

model = AutoModelForCausalLM.from_pretrained(model_name, torch_dtype=torch.bfloat16)

tokenizer = AutoTokenizer.from_pretrained(model_name)

if tokenizer.pad_token is None:

tokenizer.pad_token = tokenizer.eos_token

# 2. Load Preference Dataset

# This assumes your dataset is hosted on the Hugging Face Hub

dataset = load_dataset("trl-internal-testing/hh-rlhf-helpful-base-prompt-format")

train_dataset = dataset["train"]

# 3. Configure PEFT with LoRA for efficient training

peft_config = LoraConfig(

r=16,

lora_alpha=32,

lora_dropout=0.05,

target_modules=["q_proj", "v_proj"],

bias="none",

task_type="CAUSAL_LM",

)

# 4. Configure Training Arguments

# These arguments are passed to the SageMaker Estimator as hyperparameters

training_args = TrainingArguments(

output_dir="./dpo_results",

per_device_train_batch_size=4,

gradient_accumulation_steps=2,

learning_rate=5e-5,

logging_steps=10,

num_train_epochs=1,

report_to="wandb", # Integrate with Weights & Biases

save_strategy="epoch",

)

# 5. Initialize the DPOTrainer

dpo_trainer = DPOTrainer(

model,

args=training_args,

beta=0.1, # The beta parameter is a key hyperparameter in DPO

train_dataset=train_dataset,

tokenizer=tokenizer,

peft_config=peft_config,

)

# 6. Start Training

dpo_trainer.train()

# 7. Save the final model

# SageMaker will automatically capture the model artifacts from the output directory

dpo_trainer.save_model("./dpo_results/final_model")This script showcases several best practices, including using a strong base model, leveraging PEFT with LoRA for efficiency, and integrating with experiment tracking tools, a common theme in MLflow News and Weights & Biases News.

Section 3: Scaling Up with SageMaker Training and Deployment

Running the script locally is fine for testing, but for large models and datasets, you need the power of the cloud. This is where AWS SageMaker shines, providing managed infrastructure for training and deployment.

Launching a SageMaker Training Job

The SageMaker Python SDK allows you to package your training script and run it on powerful GPU instances. You define a HuggingFace estimator, which handles the environment setup, data transfer, and execution.

import sagemaker

from sagemaker.huggingface import HuggingFace

# Initialize SageMaker session

sagemaker_session = sagemaker.Session()

role = sagemaker.get_execution_role()

# Define hyperparameters to pass to the training script

hyperparameters = {

'model_name': 'mistralai/Mistral-7B-Instruct-v0.2',

'dataset_name': 'trl-internal-testing/hh-rlhf-helpful-base-prompt-format',

'num_train_epochs': 1,

'per_device_train_batch_size': 4,

'learning_rate': 5e-5

}

# Create a HuggingFace estimator

huggingface_estimator = HuggingFace(

entry_point='train_dpo.py', # Your training script

source_dir='./scripts', # Directory containing the script

instance_type='ml.g5.2xlarge', # Choose a suitable GPU instance

instance_count=1,

role=role,

transformers_version='4.36',

pytorch_version='2.1',

py_version='py310',

hyperparameters=hyperparameters

)

# Launch the training job

huggingface_estimator.fit({'train': 's3://your-bucket/path-to-data/'}) # Optionally pass S3 data path

print(f"Training job started: {huggingface_estimator.latest_training_job.name}")This setup abstracts away the complexities of provisioning servers and configuring environments. You can easily scale up by changing the instance_type to more powerful hardware, a key advantage highlighted in NVIDIA AI News for users of their latest GPUs.

Deploying the Tuned Model for Inference

Once the training job is complete, SageMaker stores the resulting model artifacts in S3. Deploying this model as a real-time endpoint is incredibly straightforward:

# Deploy the trained model to a SageMaker real-time endpoint

dpo_predictor = huggingface_estimator.deploy(

initial_instance_count=1,

instance_type='ml.g5.xlarge'

)

# Example of how to invoke the endpoint

prompt = "Explain the DPO training process."

data = {"inputs": prompt}

response = dpo_predictor.predict(data)

print(response)For production workloads, you can further optimize this endpoint using tools like SageMaker’s multi-model endpoints, autoscaling, or compiling the model with TensorRT. This seamless transition from training to production is a core strength of integrated platforms like SageMaker, often drawing comparisons to offerings discussed in Vertex AI News and Azure Machine Learning News.

Section 4: Best Practices, Pitfalls, and Optimization

While DPO is simpler than RLHF, success still requires attention to detail. Following best practices can significantly improve your results.

Best Practices for DPO

- Data Quality is King: The performance of your DPO-tuned model is entirely dependent on the quality and diversity of your preference dataset. Ensure your

chosenresponses are genuinely better and cover a wide range of scenarios. - Start with a Strong SFT Base: DPO is for alignment, not for teaching the model core knowledge. Always start with a model that has been instruction-tuned or fine-tuned on your specific domain.

- Iterate and Refine: Treat alignment as an iterative process. Deploy your first DPO model, collect examples where it fails, create new preference pairs from these failures, and retrain. Tools like LangSmith News are emerging to help with this feedback loop.

- Tune the Beta Hyperparameter: The

betaparameter in DPO controls how much the model deviates from its original SFT policy. A low beta makes training more conservative, while a high beta can lead to more significant changes but risks instability. Experiment to find the right balance.

Common Pitfalls to Avoid

- Over-Optimization: If your preference data is too narrow, the model might “overfit” to that style and lose its general capabilities, a phenomenon known as catastrophic forgetting. Using PEFT methods like LoRA helps mitigate this by only updating a small subset of the model’s weights.

- Inconsistent Preferences: If your human labelers provide inconsistent or contradictory preferences, the model will struggle to learn a coherent policy. Establishing clear labeling guidelines is crucial.

- Ignoring the Reference Model: DPO implicitly uses the initial SFT model as a reference to prevent the policy from changing too drastically. Drifting too far can degrade overall performance.

Conclusion: The Future of Model Alignment on AWS

Direct Preference Optimization represents a significant step forward in making LLM alignment more accessible, stable, and efficient. By moving away from the complexities of RLHF, DPO empowers more teams to create highly customized models that better reflect their desired tone, style, and safety guidelines. The combination of DPO’s algorithmic elegance with the scalable, managed infrastructure of AWS SageMaker creates a powerful toolkit for any developer looking to push the boundaries of AI customization.

The key takeaways are clear: DPO lowers the barrier to entry for preference tuning, and platforms like SageMaker eliminate the operational overhead of training and deployment. As a next step, consider building your own preference dataset based on your application’s specific needs. Explore open-source preference datasets to get started, and begin experimenting with DPO to see how it can elevate your models from generic tools to truly aligned and valuable AI partners. The ongoing advancements in frameworks like those covered by PyTorch News and tools like LangChain News will only make this process more streamlined in the future.