Unlocking Data Insights with Snowflake Cortex: A Deep Dive into Serverless AI and LLMs

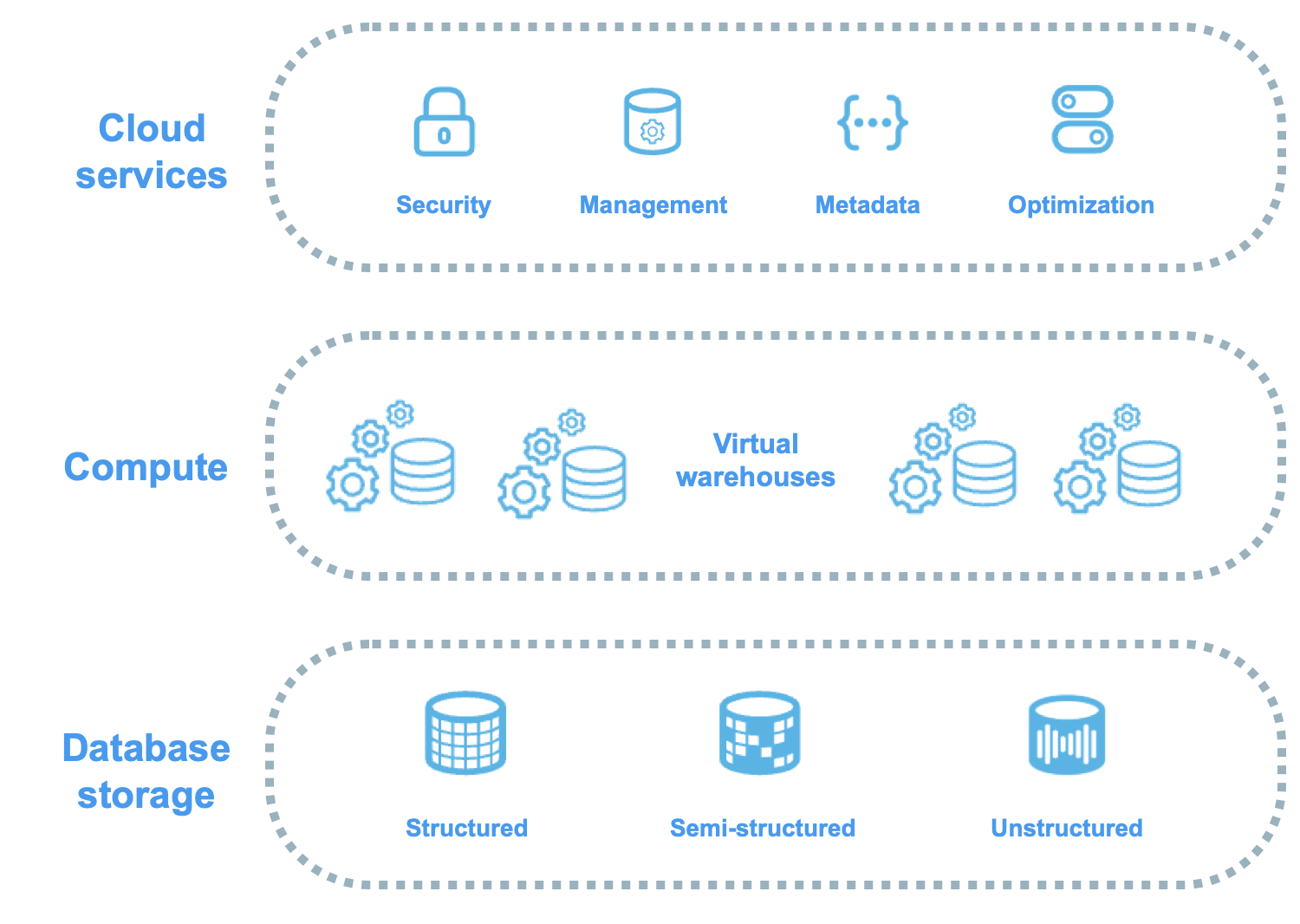

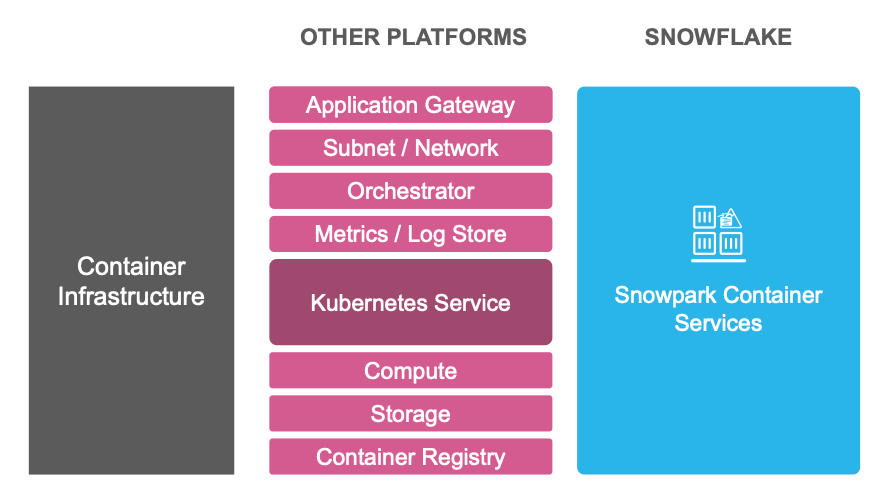

The artificial intelligence landscape is undergoing a monumental shift. The paradigm of moving massive datasets to separate machine learning platforms for training and inference is being challenged by a more efficient, secure, and integrated approach: bringing AI directly to the data. At the forefront of this movement is Snowflake, which has supercharged its Data Cloud with Snowflake Cortex, a fully managed service that embeds AI and machine learning capabilities directly within the data warehouse. This eliminates data silos, reduces latency, and democratizes access to powerful models for data analysts, engineers, and scientists alike.

Snowflake Cortex isn’t just another API wrapper; it’s a comprehensive suite of serverless functions that allows users to leverage state-of-the-art large language models (LLMs) and ML models using familiar SQL and Python. From sentiment analysis and text summarization to time-series forecasting and anomaly detection, Cortex provides the building blocks for creating sophisticated AI-powered applications without the operational overhead of managing infrastructure. This article provides a technical deep-dive into Snowflake Cortex, exploring its core functions, practical implementation patterns, advanced use cases like Retrieval-Augmented Generation (RAG), and best practices for optimization and cost management. As AI development at companies from OpenAI to Meta continues to accelerate, integrated platforms like Cortex are becoming essential for enterprises to stay competitive.

Section 1: The Core of Snowflake Cortex: Serverless Functions and LLMs

At its heart, Snowflake Cortex is an intelligent functions layer that abstracts away the complexity of deploying and scaling AI models. It provides two main categories of functions that can be called directly from your Snowflake environment: LLM Functions and ML-Based Functions. This dual offering allows teams to tackle a wide range of business problems, from unstructured text processing to predictive analytics on structured data.

LLM Functions for Natural Language Processing

Cortex provides access to a curated set of powerful LLMs from providers like Google and Mistral AI, accessible through simple SQL functions. This is a game-changer for anyone working with text data, as it allows for sophisticated NLP tasks to be performed at scale, directly on data stored in Snowflake. Key functions include:

- COMPLETE: A general-purpose function for prompt-based text generation. You provide a prompt and select a model (e.g., ‘gemma-7b’, ‘mixtral-8x7b’), and it returns a generated completion.

- SUMMARIZE: Extracts a concise summary from a longer piece of text.

- TRANSLATE: Translates text from a source language to a target language.

- SENTIMENT: Analyzes text and returns a sentiment score from -1 (most negative) to 1 (most positive).

- EXTRACT_ANSWER: Given a question and a block of text (context), this function extracts the specific answer from the context.
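Because SENTIMENT returns a continuous score rather than a label, reports often bucket the scores into categories. The plain-Python sketch below shows that post-processing step on scores you have already fetched; the 0.25 threshold, the label names, and the example scores are illustrative assumptions, not Snowflake defaults, so tune them against your own data. The same logic could equally be written as a SQL CASE expression.

```python
def sentiment_label(score: float, threshold: float = 0.25) -> str:
    """Map a SENTIMENT score in [-1, 1] to a coarse label.

    The threshold is an illustrative assumption: scores strictly between
    -threshold and +threshold are treated as neutral.
    """
    if not -1.0 <= score <= 1.0:
        raise ValueError(f"score out of range [-1, 1]: {score}")
    if score >= threshold:
        return "positive"
    if score <= -threshold:
        return "negative"
    return "neutral"


# Hypothetical scores, keyed by review_id, as they might come back from SENTIMENT
scores = {101: 0.82, 102: -0.64, 103: 0.05}
labels = {review_id: sentiment_label(s) for review_id, s in scores.items()}
print(labels)  # {101: 'positive', 102: 'negative', 103: 'neutral'}
```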

Let’s see how easy it is to analyze customer feedback. Imagine you have a table called customer_reviews with a column review_text. You can quickly gauge sentiment across all reviews with a single SQL query.

```sql
-- Analyze sentiment of customer reviews
SELECT
    review_id,
    review_text,
    SNOWFLAKE.CORTEX.SENTIMENT(review_text) AS sentiment_score
FROM customer_reviews
LIMIT 10;
```

ML-Based Functions for Predictive Analytics

Beyond LLMs, Cortex offers a suite of classical machine learning models as functions (exposed under the `SNOWFLAKE.ML` schema), abstracting away the complexity often associated with tools like Apache Spark MLlib or custom-built models in Amazon SageMaker. These functions are designed for structured data and cover common predictive tasks:

- FORECASTING: Predicts future values in a time-series dataset (e.g., sales, inventory levels).

- ANOMALY_DETECTION: Identifies unusual patterns or outliers in time-series data that do not conform to expected behavior.

- CLASSIFICATION: Predicts a categorical label for a given set of features (e.g., customer churn, lead scoring).
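To make "does not conform to expected behavior" concrete, the toy sketch below flags points that sit far from the mean of a trailing window (a rolling z-score). This is a deliberately simple stand-in chosen for illustration only; it is not the algorithm behind `SNOWFLAKE.ML.ANOMALY_DETECTION`, and the window size, threshold, and sales figures are assumptions.

```python
from statistics import mean, stdev


def rolling_zscore_anomalies(
    values: list[float], window: int = 7, threshold: float = 3.0
) -> list[int]:
    """Return indices whose value deviates from the mean of the preceding
    `window` points by more than `threshold` standard deviations.

    Toy illustration only; window and threshold are assumptions.
    """
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]  # the `window` points before index i
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any change at all counts as unusual
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical daily sales with one obvious spike at index 9
daily_sales = [100, 102, 98, 101, 99, 103, 100, 97, 101, 250, 99, 102]
print(rolling_zscore_anomalies(daily_sales))  # [9]
```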

For example, to forecast the next 30 days of sales, you would first train a model on your historical data and then use it for prediction, all within SQL.

```sql
-- 1. Train a forecasting model
CREATE OR REPLACE SNOWFLAKE.ML.FORECAST my_sales_forecast(
    INPUT_DATA => SYSTEM$REFERENCE('TABLE', 'daily_sales'),
    TIMESTAMP_COLNAME => 'sale_date',
    TARGET_COLNAME => 'total_sales'
);

-- 2. Use the model to predict the next 30 days
CALL my_sales_forecast!FORECAST(FORECASTING_PERIODS => 30);
```

Section 2: Practical Implementation: From SQL to Snowpark

The beauty of Snowflake Cortex lies in its accessibility. Whether you’re an analyst who lives in SQL or a data engineer building complex Python pipelines with Snowpark, Cortex meets you where you are. This flexibility is key to its rapid adoption, and it mirrors a broader trend visible in Azure AI and Google Cloud’s Vertex AI.

The SQL-First Approach for Rapid Analysis

For many use cases, SQL is the fastest path from data to insight. Imagine you’re a product manager with a table of user-submitted feature requests, and you want to categorize them and extract the core problem each user is trying to solve. The COMPLETE function can act as a powerful, zero-shot classifier and extractor.

```sql
-- Use an LLM to categorize and summarize feature requests
WITH requests AS (
    SELECT
        request_id,
        request_text
    FROM feature_requests
)
SELECT
    request_id,
    request_text,
    SNOWFLAKE.CORTEX.COMPLETE(
        'mixtral-8x7b',
        CONCAT(
            'You are an expert product analyst. Read the following user feature request and perform two tasks:\n',
            '1. Categorize it into one of the following: "UI/UX", "Performance", "Reporting", "Integration", "Security", or "Other".\n',
            '2. Summarize the core user problem in one sentence.\n',
            'Format your response as a JSON object with keys "category" and "summary".\n\n',
            'Request: "', request_text, '"'
        )
    ) AS analysis
FROM requests
LIMIT 5;
```

This single query enriches your raw data with structured, AI-generated insights without ever leaving the Snowflake UI. The analysis column should contain a JSON string, but LLM output is not guaranteed to be valid JSON, so parse it defensively (for example, with TRY_PARSE_JSON) before using it for further analysis or dashboarding.
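When the results are fetched into Python, the same defensive stance applies: some rows may wrap the JSON in extra prose or return a category outside the requested list. The sketch below tolerates both. Keeping only the outermost `{...}` span and mapping unknown categories to "Other" are assumptions of this sketch, not Cortex behavior, and the sample response is hypothetical.

```python
import json
from typing import Optional

# Category list copied from the prompt in the query above
ALLOWED_CATEGORIES = {"UI/UX", "Performance", "Reporting", "Integration", "Security", "Other"}


def parse_analysis(raw: str) -> Optional[dict]:
    """Parse one `analysis` value returned by COMPLETE.

    Returns {"category": ..., "summary": ...}, or None if no JSON object
    can be recovered from the text.
    """
    # Models sometimes surround the JSON with prose; keep the outermost {...} span.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        return None
    try:
        data = json.loads(raw[start:end + 1])
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    category = data.get("category")
    if category not in ALLOWED_CATEGORIES:
        category = "Other"  # assumption: unknown labels fall into the catch-all bucket
    return {"category": category, "summary": str(data.get("summary", "")).strip()}


raw = 'Here is the analysis: {"category": "Reporting", "summary": "Users cannot export dashboards to PDF."}'
print(parse_analysis(raw))
# {'category': 'Reporting', 'summary': 'Users cannot export dashboards to PDF.'}
```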

Python Integration with Snowpark for Advanced Workflows

For more complex, programmatic workflows, Snowpark for Python provides a seamless bridge. You can leverage the full power of Python’s ecosystem while executing Cortex functions on Snowflake’s compute. This is ideal for building data pipelines, creating APIs, or integrating with other systems. Cortex functions are available in Python through the `snowflake.cortex` module of the `snowflake-ml-python` package, and they can also be invoked by their SQL names from Snowpark DataFrame code.

Here’s how you could encapsulate skill extraction from job descriptions in a reusable Python function. One caveat: a Snowpark `Session` cannot be used inside a UDF, so a UDF that calls Cortex through the session will not work. Instead, the function below returns a column expression that calls `SNOWFLAKE.CORTEX.COMPLETE`, which Snowflake evaluates across the whole table in a single query. This still encapsulates the logic and makes it reusable across your organization.

```python
from snowflake.snowpark import Column, Session
from snowflake.snowpark.functions import call_function, col, concat, lit

# Instruction text prepended to every job description
SKILLS_PROMPT = (
    "From the following job description, extract a comma-separated list of "
    "the top 5 most important technical skills.\n"
    "Do not add any preamble. Just provide the list.\n\n"
    "Job Description:\n"
)


def extract_skills(description: Column, model: str = "mixtral-8x7b") -> Column:
    """
    Returns a column expression that uses Snowflake Cortex COMPLETE to extract
    skills from a job description column. It runs inside Snowflake as part of
    the query, so no UDF or client-side loop is needed.
    """
    prompt = concat(lit(SKILLS_PROMPT), description)
    return call_function("SNOWFLAKE.CORTEX.COMPLETE", lit(model), prompt)


def main(session: Session) -> str:
    # Apply the expression to every row of the job postings table
    job_postings_df = session.table("job_postings")
    enriched_df = job_postings_df.with_column(
        "extracted_skills",
        extract_skills(col("description")),
    )
    enriched_df.write.mode("overwrite").save_as_table("enriched_job_postings")
    return "Successfully enriched job postings table."


# To run this, you need a Snowpark session object, e.g.:
# if __name__ == "__main__":
#     session = Session.builder.getOrCreate()
#     print(main(session))
```

Section 3: Advanced Applications: Building RAG with Cortex Vector Search

One of the most powerful applications of LLMs today is Retrieval-Augmented Generation (RAG), which grounds model responses in your own proprietary data. This mitigates hallucinations and allows LLMs to answer questions about specific, private information. Snowflake supports the entire RAG workflow natively, positioning it as a strong alternative to specialized vector databases such as Pinecone or Weaviate.

The Components of RAG in Snowflake

Snowflake provides all the necessary primitives for building a RAG system end-to-end:

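- EMBED_TEXT_768: A Cortex function that converts text into a 768-dimensional dense vector embedding using the model named in its first argument (for example, 'e5-base-v2').

- VECTOR Data Type: A native data type for storing these embeddings efficiently, declared with an element type and a dimension, such as VECTOR(FLOAT, 768).

- VECTOR_L2_DISTANCE: Computes the Euclidean (L2) distance between two vectors. The smaller the distance, the more similar the texts, which makes it the core of semantic search. Snowflake also provides VECTOR_COSINE_SIMILARITY and VECTOR_INNER_PRODUCT.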
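Ranking by L2 distance simply means sorting chunks by their Euclidean distance to the question's embedding, closest first. The pure-Python sketch below mirrors that ordering on toy 3-dimensional vectors (real EMBED_TEXT_768 output has 768 dimensions) and shows how retrieving the top k chunks, rather than just one, feeds a single context string into the prompt. The vectors, chunk texts, and k are hypothetical; the prompt wording follows this section's Q&A example.

```python
import math


def l2_distance(a: list[float], b: list[float]) -> float:
    """Euclidean distance; smaller means more similar."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def top_k_chunks(
    query_vec: list[float], chunks: list[tuple[str, list[float]]], k: int = 2
) -> list[str]:
    """Return the texts of the k chunks whose embeddings are closest to query_vec."""
    ranked = sorted(chunks, key=lambda item: l2_distance(item[1], query_vec))
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Join the retrieved chunks into one context block ahead of the question."""
    context = "\n---\n".join(context_chunks)
    return (
        "You are a helpful assistant. Answer the following question based ONLY on the "
        'provided context. If the answer is not in the context, say "I do not have enough information."\n\n'
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Toy knowledge base: (chunk_text, embedding) pairs with hypothetical vectors
kb = [
    ("Chunk about Q4 2023 revenue", [0.9, 0.1, 0.0]),
    ("Chunk about cafeteria hours", [0.0, 0.2, 0.9]),
    ("Chunk about Q3 2023 revenue", [0.8, 0.3, 0.1]),
]
question_vec = [1.0, 0.1, 0.0]
print(top_k_chunks(question_vec, kb, k=2))
# ['Chunk about Q4 2023 revenue', 'Chunk about Q3 2023 revenue']
```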

Let’s walk through building a simple RAG-based Q&A system over a knowledge base of internal company documents.

```sql
-- Step 1: Create a table to store document chunks and their embeddings
CREATE OR REPLACE TABLE knowledge_base (
    doc_id INT,
    chunk_text VARCHAR,
    embedding VECTOR(FLOAT, 768)
);

-- Step 2: Ingest data and generate embeddings using Cortex
INSERT INTO knowledge_base (doc_id, chunk_text, embedding)
SELECT
    d.doc_id,
    d.chunk_text,
    SNOWFLAKE.CORTEX.EMBED_TEXT_768('e5-base-v2', d.chunk_text)
FROM raw_documents d;

-- Step 3: Create a User-Defined Function (UDF) to query the knowledge base
CREATE OR REPLACE FUNCTION ask_my_kb(question VARCHAR)
RETURNS VARCHAR
LANGUAGE SQL
AS
$$
    SELECT SNOWFLAKE.CORTEX.COMPLETE(
        'mixtral-8x7b',
        CONCAT(
            'You are a helpful assistant. Answer the following question based ONLY on the provided context. If the answer is not in the context, say "I do not have enough information."\n\n',
            'Context:\n',
            (
                SELECT chunk_text
                FROM knowledge_base
                ORDER BY VECTOR_L2_DISTANCE(
                    embedding,
                    SNOWFLAKE.CORTEX.EMBED_TEXT_768('e5-base-v2', question)
                )
                LIMIT 1
            ),
            '\n\nQuestion: ',
            question
        )
    )
$$;

-- Step 4: Ask a question!
SELECT ask_my_kb('What was our revenue in Q4 2023?');
```

This example demonstrates a minimal, serverless RAG pipeline built entirely within Snowflake. It retrieves only the single closest chunk (LIMIT 1) and assumes raw_documents already contains pre-chunked text; production systems typically retrieve several chunks. The same pattern can be orchestrated by frameworks such as LangChain or LlamaIndex, using Snowflake as the vector store and generation engine, which simplifies the tech stack significantly.
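Filling raw_documents means splitting each source document into chunks first. A common approach is fixed-size chunking with overlap, so that text cut at one boundary still appears intact in the neighboring chunk. The plain-Python sketch below splits on whitespace-separated words; the chunk size, overlap, and sample document are assumptions to tune, and real pipelines often split on sentences or tokens instead.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into chunks of at most `chunk_size` words, where consecutive
    chunks share `overlap` words. Sizes are illustrative assumptions."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


# Each (doc_id, chunk_text) pair would become one row of raw_documents
doc = " ".join(f"w{i}" for i in range(10))
rows = [(1, c) for c in chunk_words(doc, chunk_size=4, overlap=1)]
print(rows)
# [(1, 'w0 w1 w2 w3'), (1, 'w3 w4 w5 w6'), (1, 'w6 w7 w8 w9')]
```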

Section 4: Best Practices, Optimization, and Cost Management

While Snowflake Cortex is incredibly powerful and easy to use, adopting it at scale requires attention to best practices, performance, and cost. Following these guidelines will ensure you get the most value from the service.

Development and Implementation Tips

- Prompt Engineering is Key: The quality of your output from functions like `COMPLETE` is highly dependent on the quality of your prompt. Be clear, specific, and provide examples (few-shot prompting) within the prompt for better results.

- Choose the Right Model: Cortex offers multiple models. For simple tasks, a smaller, faster model like `gemma-7b` might be sufficient and more cost-effective. For complex reasoning, a larger model like `mixtral-8x7b` is a better choice.

- Batch Processing: When applying a Cortex function to millions of rows, avoid row-by-row execution. Let Snowflake’s query optimizer handle parallelization by applying the function in a single SQL statement.

- Leverage UDFs for Reusability: For complex logic or prompts, encapsulate them in a SQL UDF (like `ask_my_kb` above) or a shared Python function. This promotes code reuse, consistency, and easier maintenance.
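Few-shot prompting just means placing a handful of worked input/output pairs ahead of the real input. The helper below assembles such a prompt as a string you could pass to `COMPLETE`; the instruction text, example pairs, and `Input:`/`Output:` layout are hypothetical choices for illustration, not a Cortex requirement.

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Build a few-shot prompt: instruction, worked examples, then the real input."""
    parts = [instruction.strip(), ""]
    for example_input, example_output in examples:
        parts += [f"Input: {example_input}", f"Output: {example_output}", ""]
    # Leave the final Output empty so the model completes it
    parts += [f"Input: {query}", "Output:"]
    return "\n".join(parts)


prompt = few_shot_prompt(
    "Classify the feature request as UI/UX, Performance, Reporting, Integration, Security, or Other.",
    [
        ("The dashboard takes a minute to load.", "Performance"),
        ("Let us export charts to PDF.", "Reporting"),
    ],
    "Add single sign-on with our identity provider.",
)
print(prompt)
```

Keeping the examples in code (or in a table) rather than pasting them into each query makes it easy to version and reuse them across teams.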

Cost Management and Monitoring

Cortex functions consume Snowflake credits, and costs can add up quickly if not monitored. The cost depends on the model used and the number of tokens processed; for functions that generate text, such as `COMPLETE`, both input and output tokens are billed.
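Because billing scales with tokens, a back-of-the-envelope estimate before a large backfill is worthwhile. The sketch below multiplies estimated tokens by a per-million-token credit rate. The rate used here is a made-up placeholder (look up the real value for your model in Snowflake's consumption table), the roughly four-characters-per-token ratio is a common heuristic for English text rather than Cortex's actual tokenizer, and `bill_output_tokens` should follow the documented billing rule for the function you call.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate (heuristic; real tokenizers vary by model)."""
    if not text:
        return 0
    return max(1, round(len(text) / chars_per_token))


def estimate_credits(
    rows: int,
    avg_input_tokens: int,
    avg_output_tokens: int,
    credits_per_million_tokens: float,
    bill_output_tokens: bool = True,
) -> float:
    """Estimate credits for applying one Cortex function to `rows` rows.

    credits_per_million_tokens is a placeholder: take it from Snowflake's
    consumption table for the model you use.
    """
    per_row = avg_input_tokens + (avg_output_tokens if bill_output_tokens else 0)
    return rows * per_row * credits_per_million_tokens / 1_000_000


# Hypothetical job: 1M reviews, ~150 input and ~50 output tokens each, placeholder rate 0.5
print(estimate_credits(1_000_000, 150, 50, credits_per_million_tokens=0.5))  # 100.0
```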

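- Monitor Usage: Regularly check the `SNOWFLAKE.ACCOUNT_USAGE.QUERY_HISTORY` view, filtering for queries that call Cortex functions, to understand consumption patterns and identify costly operations. The `SNOWFLAKE.ACCOUNT_USAGE.CORTEX_FUNCTIONS_USAGE_HISTORY` view also reports the tokens and credits consumed per function and model.

- Set Resource Monitors: Use Snowflake’s built-in resource monitors to set credit quotas on warehouses that are heavily used for AI workloads. Resource monitors track warehouse credits rather than the token-based charges of Cortex functions themselves, but suspending the warehouse when a threshold is reached prevents new queries, and therefore new Cortex calls, from running on it.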

- Cache Results: If you frequently ask the same questions or process the same text, consider materializing the results of Cortex functions in a table to avoid re-computing them.
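Materializing results works because identical inputs can be keyed deterministically. The Python sketch below shows the idea: it hashes the model name and prompt into a cache key and only calls the model on a miss. `call_model` is a hypothetical stand-in for whatever issues the Cortex call (a fake echo function is used here), and in Snowflake the in-memory dict would be a results table keyed by a hash of the same inputs. Note that reusing a cached completion also means accepting the first answer the model gave for that prompt.

```python
import hashlib
from typing import Callable


def cache_key(model: str, prompt: str) -> str:
    """Deterministic key for a (model, prompt) pair.

    The NUL separator keeps ("a", "bc") and ("ab", "c") from colliding.
    """
    return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()


class CompletionCache:
    """Reuse stored completions instead of paying for repeat calls."""

    def __init__(self, call_model: Callable[[str, str], str]):
        self._call_model = call_model  # hypothetical function that invokes the LLM
        self._store: dict[str, str] = {}
        self.misses = 0

    def complete(self, model: str, prompt: str) -> str:
        key = cache_key(model, prompt)
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._call_model(model, prompt)
        return self._store[key]


# Fake model for demonstration: echoes the prompt in upper case
cache = CompletionCache(lambda model, prompt: prompt.upper())
cache.complete("mixtral-8x7b", "summarize ticket 42")
cache.complete("mixtral-8x7b", "summarize ticket 42")  # served from the cache
print(cache.misses)  # 1
```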

By staying mindful of these considerations, you can build scalable, cost-effective AI applications that harness the full potential of the Snowflake Data Cloud.

Conclusion: The Future of AI is Integrated

Snowflake Cortex represents a significant step forward in making advanced AI and ML accessible and practical for the enterprise. By embedding these capabilities directly into the data layer, it eliminates friction, enhances security, and empowers a broader range of users to build data-driven applications. The ability to perform complex NLP, predictive analytics, and even build sophisticated RAG systems with simple SQL and Python commands is a testament to the power of this integrated approach.

As the AI landscape continues to evolve, with rapid advances from companies such as NVIDIA and Google DeepMind, platforms that bring computation to the data will have a distinct advantage. Snowflake Cortex is a prime example of this trend, offering a robust, serverless, and scalable solution for turning data into actionable intelligence. For any organization invested in the Snowflake ecosystem, exploring Cortex is no longer just an option; it’s a strategic imperative for unlocking the next wave of innovation.