Unlocking Flexibility in Keras for R: A Deep Dive into the New Matrix Multiplication Update

Introduction: The Quiet Revolution in Developer Experience

In the fast-paced world of artificial intelligence, headlines are often dominated by groundbreaking model releases and major framework updates. We see a constant stream of Keras News, TensorFlow News, and PyTorch News, alongside exciting developments from research labs like Google DeepMind News and Meta AI News. While these large-scale innovations shape the future of the field, the smaller, more subtle updates to our favorite tools often have the most immediate impact on our daily productivity and code clarity. These quality-of-life improvements reduce friction, eliminate boilerplate, and make complex operations feel more natural and intuitive.

One such significant yet understated enhancement has recently arrived for the R community using the Keras package. A new update has changed how the familiar R matrix multiplication operator, %*%, interacts with TensorFlow tensors. It now dispatches to a more flexible, broadcasting-aware backend operation, fundamentally simplifying how developers handle batched computations and complex tensor manipulations. This article provides a comprehensive technical deep dive into this update, exploring what changed, why it matters, and how you can leverage it to write cleaner, more powerful, and more idiomatic R code for your deep learning projects.

Section 1: The Bedrock of Neural Networks: Matrix Multiplication

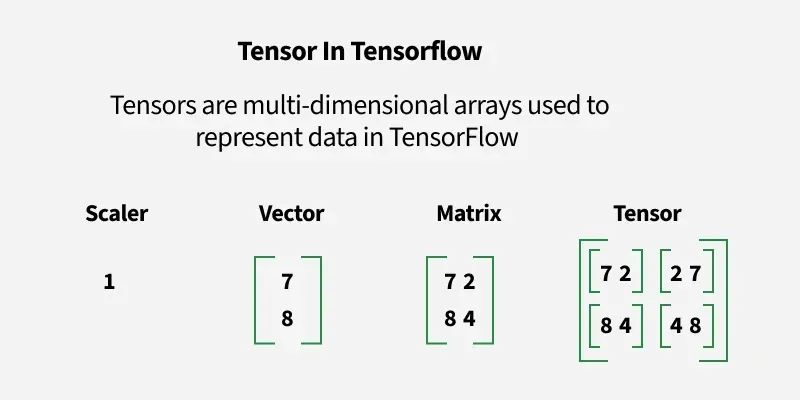

At the heart of nearly every modern neural network lies a fundamental mathematical operation: matrix multiplication. From the simple weighted sums in a dense layer to the complex self-attention mechanisms in Transformer architectures (a cornerstone of topics in Hugging Face Transformers News), matrix multiplication is the computational workhorse. In the R programming language, data scientists have a long-established and idiomatic operator for this: %*%.

Traditionally, matrix multiplication has strict shape requirements. To multiply matrix A by matrix B (A %*% B), the number of columns in A must equal the number of rows in B. For instance, a matrix of shape (m, k) can be multiplied by a matrix of shape (k, n), resulting in an output matrix of shape (m, n). Any deviation from this rule would result in an error.

When using Keras and TensorFlow in R, we are no longer working with standard R matrices but with TensorFlow tensors. While these objects can be manipulated in R, the underlying operations are executed by the highly optimized TensorFlow backend. Previously, when using %*% with TensorFlow tensors, the operator was often mapped to TensorFlow’s tf$matmul(), which largely adheres to these strict, classical shape constraints.

The Challenge of Batched Data

This strictness becomes a hurdle in deep learning, where we almost always work with batches of data to process multiple samples simultaneously for efficiency. For example, instead of a single input vector of shape (1, k), we might have a batch of 32 inputs, resulting in a tensor of shape (32, 1, k). Multiplying this batch with a single weight matrix of shape (k, n) is a common requirement. Under the old, stricter rules, this operation would fail due to incompatible dimensions, forcing developers to write cumbersome code involving loops or manual tensor tiling/reshaping to make the shapes conform.

# Load necessary libraries

library(keras)

library(tensorflow)

# Ensure we are using TensorFlow backend

use_implementation("tensorflow")

# Define a batch of 3 matrices, each 2x3

# Shape: (3, 2, 3)

batch_of_matrices <- tf$constant(array(1:18, dim = c(3, 2, 3)), dtype = "float32")

# Define a single matrix to multiply with each matrix in the batch

# Shape: (3, 4)

single_matrix <- tf$constant(array(1:12, dim = c(3, 4)), dtype = "float32")

# In older versions of Keras, this would likely fail or require a different approach

# because the inner dimensions (3 and 3) match, but the batching is not

# automatically handled by the standard matmul logic.

# The expected behavior is to multiply each 2x3 matrix in the batch

# by the 3x4 matrix.

# We will see in the next section how the new update makes this seamless.

print(dim(batch_of_matrices))

print(dim(single_matrix))This example highlights the friction point. The intent is clear—apply the same transformation to every item in the batch—but the tooling required extra steps to express it. This is precisely the problem the latest Keras update solves.

Section 2: The Update Explained: Broadcasting with `op_matmul`

The core of the recent Keras for R update is a change in the dispatch mechanism for the %*% operator when applied to TensorFlow tensors. It now dispatches to op_matmul(), which is a Keras backend function that typically maps to TensorFlow’s more powerful and flexible `tf.linalg.matmul` or a similar operation. The key difference between the older tf$matmul() and this new backend operation is its intelligent support for broadcasting.

What is Broadcasting?

Broadcasting is a powerful mechanism that allows operations on tensors of different shapes without requiring the developer to manually expand the smaller tensor. The backend automatically “stretches” or “duplicates” the smaller tensor along the necessary dimensions to make the shapes compatible. In the context of matrix multiplication, this most commonly applies to the batch dimensions.

With broadcasting-enabled matrix multiplication, the operation understands the concept of batches. When you multiply a tensor of shape (batch_size, m, k) with a tensor of shape (k, n), the backend effectively broadcasts the second matrix, treating it as if it were a batch of identical matrices of shape (batch_size, k, n). The multiplication is then performed element-wise across the batch, resulting in a final output tensor of shape (batch_size, m, n). This is exactly the behavior needed for efficient deep learning computations.

A Practical “Before and After” Demonstration

Let’s revisit our previous code example and see how it behaves with the updated Keras package. The new %*% operator can now handle this batched operation directly and intuitively.

# Load necessary libraries

library(keras)

library(tensorflow)

# Ensure we are using TensorFlow backend

use_implementation("tensorflow")

# Define a batch of 3 matrices, each 2x3

# Shape: (3, 2, 3)

batch_of_matrices <- tf$constant(array(1:18, dim = c(3, 2, 3)), dtype = "float32")

# Define a single matrix to multiply with each matrix in the batch

# Shape: (3, 4)

single_matrix <- tf$constant(array(1:12, dim = c(3, 4)), dtype = "float32")

# With the new Keras update, this operation now works seamlessly!

# The `%*%` operator dispatches to a broadcasting-aware matmul.

# It understands that `single_matrix` should be multiplied with each of the

# 3 matrices in `batch_of_matrices`.

result <- batch_of_matrices %*% single_matrix

# Let's check the output shape

# Expected output shape: (3, 2, 4)

# (batch_dim, m, k) %*% (k, n) -> (batch_dim, m, n)

# (3, 2, 3) %*% (3, 4) -> (3, 2, 4)

cat("Shape of the input batch:", dim(batch_of_matrices), "\n")

cat("Shape of the single matrix:", dim(single_matrix), "\n")

cat("Shape of the result:", dim(result), "\n")

# Print the result to see the computed values

print(result)As the example demonstrates, no loops, reshaping, or manual tiling is required. The code is clean, concise, and perfectly reflects the mathematical intent. This seemingly small change significantly enhances the developer experience, bringing the behavior of tensor operations in R closer to what users of Python frameworks like TensorFlow and PyTorch expect. This alignment is crucial for an ecosystem where cross-platform collaboration and knowledge sharing are common, often discussed in forums like Kaggle News or implemented on platforms like Google Colab and AWS SageMaker.

Section 3: Advanced Applications in Modern Architectures

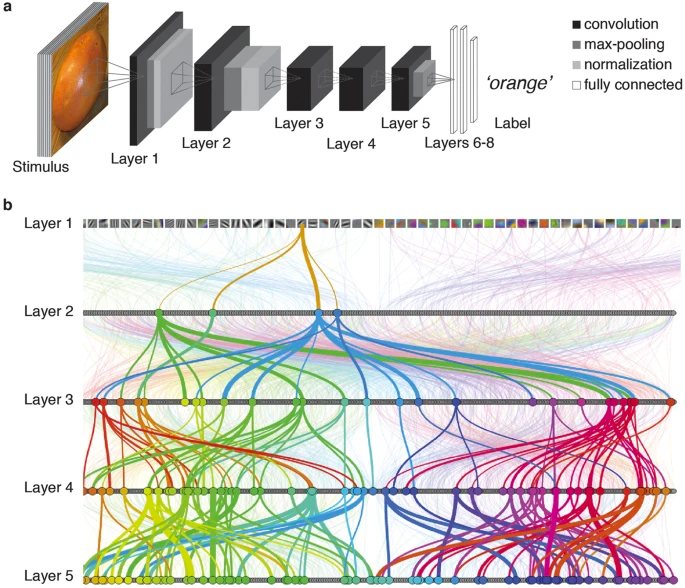

The benefits of this update extend beyond simple batch processing. The enhanced flexibility of the %*% operator streamlines the implementation of more advanced neural network components, particularly those found in state-of-the-art models.

Simplifying Custom Keras Layers

When building custom layers in Keras, you define the forward pass logic in the call method. This is where the improved matrix multiplication becomes incredibly valuable. Imagine creating a custom dense layer that needs to process a sequence of inputs (e.g., a time series or sentence embeddings), which would have a shape like (batch_size, timesteps, features). Applying a dense transformation to each timestep requires a batched matrix multiplication.

# Load Keras

library(keras)

# Define a custom Keras layer

CustomTimeDistributedDense <- new_custom_layer(

"CustomTimeDistributedDense",

initialize = function(units) {

super()$initialize()

self$units <- units

},

build = function(input_shape) {

# The last dimension of the input is the feature dimension

last_dim <- tail(input_shape, 1)

# Create the weight matrix for the dense transformation

self$w <- self$add_weight(

shape = list(last_dim, self$units),

initializer = "random_normal",

trainable = TRUE,

name = "kernel"

)

# Create the bias vector

self$b <- self$add_weight(

shape = list(self$units),

initializer = "zeros",

trainable = TRUE,

name = "bias"

)

},

call = function(inputs) {

# `inputs` has shape (batch_size, timesteps, features)

# `self$w` has shape (features, units)

# Thanks to the update, we can use `%*%` directly!

# The backend broadcasts the weight matrix across the batch and timesteps.

output <- inputs %*% self$w + self$b

# Apply an activation function

keras$activations$relu(output)

}

)

# --- Test the custom layer ---

# Create a sample input tensor: 32 samples, 10 timesteps, 16 features

sample_input <- tf$random$normal(shape = c(32, 10, 16))

# Instantiate our custom layer with 8 output units

custom_layer <- CustomTimeDistributedDense(units = 8)

# Pass the input through the layer

output <- custom_layer(sample_input)

# The output shape should be (32, 10, 8)

cat("Input Shape:", dim(sample_input), "\n")

cat("Output Shape:", dim(output), "\n")In this code, the line output <- inputs %*% self$w + self$b is the star of the show. The %*% operator elegantly handles the multiplication of the 3D input tensor with the 2D weight matrix, applying the same transformation at each of the 10 timesteps for all 32 samples in the batch. This makes the code for custom layers cleaner and more readable.

Relevance to Attention Mechanisms

This update is also highly relevant to implementing attention mechanisms, the core component of Transformer models. The famous attention formula, Attention(Q, K, V) = softmax( (Q K^T) / sqrt(d_k) ) V, is built entirely on matrix multiplications. In a real implementation, the Query (Q), Key (K), and Value (V) tensors are not single matrices but batches of matrices, often with additional dimensions for multiple attention heads, e.g., (batch_size, num_heads, sequence_length, d_k). Being able to perform these batched matrix multiplications using the idiomatic %*% operator significantly lowers the barrier to experimenting with and implementing such advanced architectures directly in R with Keras.

Section 4: Best Practices and Optimization Considerations

While the new, more flexible %*% operator is a powerful convenience, it's important to adopt best practices to leverage it effectively and avoid potential pitfalls.

1. Always Be Mindful of Shapes

The convenience of broadcasting can sometimes mask shape-related bugs. An operation might execute without error but produce an output with an unintended shape due to unexpected broadcasting rules. Always be diligent about checking the dimensions of your tensors before and after multiplication.

# Good practice: Assert or print shapes to verify your assumptions

input_tensor <- tf$random$normal(shape = c(32, 50, 128))

weight_matrix <- tf$random$normal(shape = c(128, 64))

# Before multiplying, confirm your understanding of the shapes

stopifnot(tail(dim(input_tensor), 1) == head(dim(weight_matrix), 1))

result <- input_tensor %*% weight_matrix

# After multiplying, verify the output shape is as expected

cat("Expected output shape: (32, 50, 64)\n")

cat("Actual output shape: ", dim(result), "\n")

stopifnot(all(dim(result) == c(32, 50, 64)))2. Embrace Idiomatic R

The primary benefit of this update is allowing you to write more natural, "R-like" code. Lean into this. Prefer %*% for matrix multiplication over more verbose calls like tf$linalg$matmul(a, b) unless you need access to specific arguments of the underlying function (e.g., adjoint_a=TRUE). This improves code readability for you and other R developers.

3. Trust the Backend for Performance

The underlying op_matmul is a highly optimized operation that executes on the TensorFlow backend, leveraging accelerated hardware like GPUs or TPUs if available. By using %*%, you are tapping directly into this performance. Avoid implementing matrix multiplication in R-level loops, as this will be orders of magnitude slower. The journey from high-level code to optimized execution is a key theme in tools across the AI landscape, from inference servers like Triton Inference Server to optimization frameworks like TensorRT News and OpenVINO News.

4. Know When to Use Other Tools

For very complex tensor contractions that go beyond batched matrix multiplication, %*% might not be the most explicit tool. For these scenarios, consider using TensorFlow's `tf$einsum`, which provides a powerful and unambiguous mini-language for specifying tensor operations. Using the right tool for the job ensures both correctness and clarity.

Conclusion: A Small Change with a Big Impact

The recent update to the Keras for R package, which realigns the %*% operator to a broadcasting-aware backend, is a perfect example of a developer-centric improvement that yields significant benefits. By making batched matrix multiplication more intuitive and syntactically clean, this change lowers the cognitive overhead for developers, reduces the likelihood of bugs from manual reshaping, and makes R a more expressive and powerful environment for modern deep learning.

This enhancement allows R users to more easily implement complex architectures, from custom layers to attention mechanisms, using idiomatic code they are already familiar with. As the AI ecosystem continues its rapid evolution, with constant news from platforms like Azure AI, Vertex AI, and MLOps tools like MLflow News and Weights & Biases News, it is these thoughtful improvements to the core developer experience that ensure our tools remain productive, powerful, and enjoyable to use. For R developers in the deep learning space, this is a welcome step forward, making the path from idea to implementation just a little bit smoother.