JAX: Unifying High-Performance Computing and Machine Learning for the Next Generation of AI

In the rapidly evolving landscape of artificial intelligence, the tools we use define the boundaries of what’s possible. While frameworks like TensorFlow and PyTorch dominate the mainstream, a powerful contender, JAX, has steadily gained prominence, especially within the research community and at institutions like Google DeepMind. JAX isn’t just another deep learning library; it’s a fundamental shift in how we approach high-performance numerical computing. Its power lies in a profound act of unification: combining the familiar, intuitive syntax of NumPy with a suite of composable function transformations for automatic differentiation, JIT compilation, and advanced vectorization.

This synergy creates a platform that is both remarkably flexible and blazingly fast, offering researchers and engineers the ability to design novel architectures and scale them to massive datasets with unprecedented ease. As discussions around JAX News intensify, it’s clear that this library is more than an academic curiosity; it’s a critical tool shaping the future of models discussed in OpenAI News and Anthropic News. This article provides a comprehensive technical dive into JAX, exploring its core pillars, demonstrating its practical application by building a neural network, and covering advanced techniques and best practices for harnessing its full potential.

The Core Pillars of JAX: A Powerful Union

JAX’s design philosophy is centered on augmenting a well-established standard—NumPy—with modern compiler and machine learning capabilities. This approach lowers the barrier to entry while providing a direct path to extreme performance on accelerators like GPUs and TPUs. This unification is built on three key function transformations.

Familiar Foundations with jax.numpy

At its heart, JAX offers a near-complete reimplementation of the NumPy API, accessible through jax.numpy (commonly aliased as jnp). This means that anyone with experience in the scientific Python ecosystem can be productive with JAX almost immediately. You can create arrays, perform mathematical operations, and manipulate tensors using the same function names and conventions you already know. This seamless transition is a significant advantage, allowing developers to leverage existing knowledge without a steep learning curve.

import jax

import jax.numpy as jnp

# Use a pseudorandom number generator key for reproducibility

key = jax.random.PRNGKey(0)

# Create JAX arrays using familiar NumPy syntax

x = jnp.arange(10.0)

W = jax.random.normal(key, (10, 5))

b = jnp.ones(5)

# Perform standard linear algebra operations

y = jnp.dot(x, W) + b

print(y)

# Output: [2.8123524 2.4243574 5.321394 0.78532696 3.484223 ]Automatic Differentiation with grad

Here is where JAX begins to diverge powerfully from NumPy. JAX introduces jax.grad, a function transformation that can automatically differentiate native Python and JAX code. You can take any function that returns a scalar value and, with grad, create a new function that computes the gradient of the original function with respect to its arguments. This is the cornerstone of modern machine learning, enabling gradient-based optimization for training complex models. The latest PyTorch News and TensorFlow News often highlight new features in their respective autodiff engines, but JAX’s functional approach offers unique elegance and power.

import jax

import jax.numpy as jnp

# Define a simple polynomial function

def predict(params, x):

return params['w'] * x**2 + params['b']

def loss_fn(params, x, y_true):

y_pred = predict(params, x)

return jnp.mean((y_pred - y_true)**2)

# Create a function that computes the gradient of the loss

# argnums=0 specifies that we want the gradient with respect to the first argument (params)

grad_fn = jax.grad(loss_fn, argnums=0)

# Initialize parameters and create dummy data

params = {'w': 2.0, 'b': 1.0}

x_data = jnp.array([1.0, 2.0, 3.0])

y_data = jnp.array([3.0, 9.0, 19.0]) # Ideal is w=2, b=1

# Calculate the gradients

gradients = grad_fn(params, x_data, y_data)

print(gradients)

# Output: {'b': Array(0., dtype=float32), 'w': Array(0., dtype=float32)}

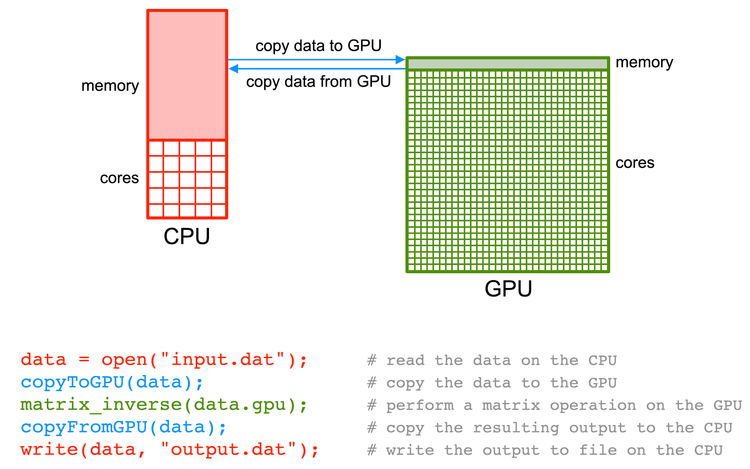

# Gradients are zero because our initial params perfectly fit the data.Just-In-Time (JIT) Compilation with jit

The final core pillar is jax.jit, which enables just-in-time compilation. By applying the @jit decorator or wrapping a function with jit(), you instruct JAX to use Google’s XLA (Accelerated Linear Algebra) compiler to translate your Python function into highly optimized machine code kernels that run directly on GPUs or TPUs. This process can lead to dramatic speedups by fusing operations, minimizing memory transfers, and taking full advantage of the underlying hardware. This performance is crucial for training large models, a constant theme in NVIDIA AI News and a key reason for JAX’s adoption in demanding environments like those found on Google Colab News and Vertex AI News.

Building a Neural Network: JAX in Practice

To truly understand JAX, we must see how these pillars work together. Building a simple neural network from scratch reveals JAX’s functional programming paradigm, a key distinction from the object-oriented approach of frameworks like PyTorch or Keras.

The Functional Programming Paradigm

In JAX, everything is a function. Models are functions that transform input data and parameters into predictions. Training steps are functions that take the current model parameters and a batch of data and return updated parameters. This “pure function” approach means that functions do not have side effects; they don’t modify global state. All state, such as model weights, must be explicitly passed into and out of functions. This immutability can seem verbose at first, but it makes code easier to reason about, debug, and parallelize. It also aligns well with the principles of MLOps platforms like MLflow News or Weights & Biases News, where reproducible experiments are paramount.

A Simple Multi-Layer Perceptron (MLP)

Let’s build a simple MLP to see this in action. We will define the model’s forward pass, the loss function, and an update step that uses gradients to optimize the parameters. For more complex models, libraries like Flax (from Google) and Haiku (from DeepMind) provide helpful abstractions on top of JAX, similar to the role Keras News plays for TensorFlow.

import jax

import jax.numpy as jnp

from jax import grad, jit, random

# 1. Initialize network parameters

def init_mlp_params(layer_widths, parent_key):

params = []

keys = random.split(parent_key, len(layer_widths) - 1)

for in_dim, out_dim, key in zip(layer_widths[:-1], layer_widths[1:], keys):

w_key, b_key = random.split(key)

# Glorot/Xavier initialization

w = random.normal(w_key, (out_dim, in_dim)) * jnp.sqrt(2.0 / in_dim)

b = jnp.zeros(out_dim)

params.append({'w': w, 'b': b})

return params

# 2. Define the model's forward pass

def predict(params, x):

# Apply layers with ReLU activation, no activation on final layer

activations = x

for layer in params[:-1]:

outputs = jnp.dot(layer['w'], activations) + layer['b']

activations = jax.nn.relu(outputs)

final_layer = params[-1]

logits = jnp.dot(final_layer['w'], activations) + final_layer['b']

return logits

# 3. Define the loss function

def loss_fn(params, x_batch, y_batch):

preds = predict(params, x_batch)

return -jnp.mean(preds * y_batch) # Example: simple cross-entropy for one-hot

# 4. Define the update step

@jit

def update(params, x, y, learning_rate=0.01):

grads = grad(loss_fn)(params, x, y)

# This is a simple SGD update. Note: we must return the new params.

# JAX arrays are immutable, so we can't update in place.

return [

{'w': p['w'] - learning_rate * g['w'], 'b': p['b'] - learning_rate * g['b']}

for p, g in zip(params, grads)

]

# --- Example Usage ---

key = random.PRNGKey(42)

layer_widths = [784, 512, 256, 10] # For an MNIST-like problem

mlp_params = init_mlp_params(layer_widths, key)

# Create dummy data

dummy_x = jnp.ones((784, 1))

dummy_y = jnp.zeros((10, 1))

# Perform one update step

new_params = update(mlp_params, dummy_x, dummy_y)

print("First layer weights updated:", not jnp.allclose(mlp_params[0]['w'], new_params[0]['w']))

# Output: First layer weights updated: TrueAdvanced Transformations for Scalability and Performance

Beyond grad and jit, JAX offers more advanced transformations that are essential for scaling up to the large models and datasets that are common topics in Hugging Face Transformers News and Meta AI News.

Automatic Vectorization with vmap

One of JAX’s most powerful features is jax.vmap (vectorizing map). It allows you to transform a function written for a single data point into one that can process an entire batch of data in parallel, without writing any loops. You simply write the logic for one example, and vmap handles the batching dimension for you. This leads to cleaner code and lets JAX’s compiler generate highly efficient, vectorized kernels.

import jax

import jax.numpy as jnp

# Original predict function processes one input vector (e.g., shape [784])

# Let's assume a simple linear model for clarity

def predict_single(W, b, x):

return jnp.dot(W, x) + b

# Create a batched version of the predict function using vmap

# in_axes=(None, None, 0) means:

# - Don't map over W (it's shared)

# - Don't map over b (it's shared)

# - Map over the first axis (axis 0) of x (the batch dimension)

batched_predict = jax.vmap(predict_single, in_axes=(None, None, 0))

# Example usage

key = jax.random.PRNGKey(0)

W = jax.random.normal(key, (10, 784))

b = jnp.ones(10)

# A batch of 128 images

image_batch = jnp.ones((128, 784))

# Run the batched prediction

# The input `image_batch` has shape (128, 784)

# The output `predictions` will have shape (128, 10)

predictions = batched_predict(W, b, image_batch)

print("Input batch shape:", image_batch.shape)

print("Output predictions shape:", predictions.shape)Parallelization with pmap

For scaling across multiple devices (e.g., 8 GPUs on a single machine or multiple TPU cores), JAX provides jax.pmap (parallel map). It enables the Single Program, Multiple Data (SPMD) programming model, where the same code is run on multiple devices, but each device operates on a different slice of the data. This is the key to data parallelism and is fundamental to training foundation models. Managing distributed training is a complex topic, often involving tools like Ray News or DeepSpeed News in the PyTorch world, but JAX provides a powerful, low-level primitive to build such systems.

Managing State and Randomness

In a pure functional setting, randomness requires careful handling. JAX requires you to explicitly manage pseudorandom number generator (PRNG) state. You create a main `PRNGKey` and must split it to generate new, independent sub-keys for every random operation. This ensures that your code is perfectly reproducible, a critical feature for scientific research and debugging. While it can feel cumbersome initially, this explicit approach eliminates a common source of non-determinism in machine learning workflows.

The JAX Ecosystem and Best Practices

While JAX provides the core engine, a rich ecosystem is growing around it to support the entire machine learning lifecycle, from experimentation to deployment.

The Broader Ecosystem

JAX is the foundation for high-level libraries like Flax and Haiku, which simplify model building. For inference, while the path to production can be more complex than for PyTorch models, options exist. JAX models can be served via servers like the Triton Inference Server News by first converting them to a standard format like StableHLO or ONNX. Experiment tracking is seamless with integrations for Comet ML News and Weights & Biases. In the broader AI landscape, JAX is often used to prototype novel architectures that might later be integrated into complex pipelines built with tools like LangChain News or deployed on cloud platforms such as AWS SageMaker News and Azure Machine Learning News.

Optimization and Common Pitfalls

- JIT Scope: Apply

@jitto large, computationally-intensive functions (like your entire training update step), not small, trivial ones. The overhead of compilation can outweigh the benefits for tiny functions. - Python Control Flow: Avoid using native Python

if/elsestatements inside a JIT-compiled function if the condition depends on the value of a JAX array. The function is compiled based on the *shapes* of the inputs, not their values. Use JAX’s structured control flow operators likejax.lax.condorjax.lax.scaninstead. - Tracing vs. Execution: Understand that JAX traces your Python code once to generate the XLA graph. A common error is placing a `print()` statement inside a jitted function and expecting it to print on every call; it will only print during the initial trace.

- Data Types: Be mindful of data types. By default, JAX often uses 64-bit precision, which can be slower on GPUs. Explicitly use

jnp.float32where appropriate for better performance.

Conclusion

JAX represents a powerful unification of ideas: the simplicity of NumPy’s API, the necessity of automatic differentiation, and the raw performance of modern compilers and hardware accelerators. Its functional, composable design provides a uniquely flexible and scalable platform for machine learning research and high-performance computing. By embracing explicit state management and function transformations like jit, grad, and vmap, developers can build systems that are not only fast but also reproducible and easier to scale across multiple devices.

As the AI community continues to push the boundaries of model size and complexity, the performance and scalability offered by JAX will become increasingly vital. Whether you are a researcher designing a novel architecture, an engineer optimizing a training pipeline, or simply a developer curious about the future of numerical computing, exploring JAX offers a glimpse into a more powerful and efficient paradigm for AI development. The ongoing stream of innovations highlighted in JAX News confirms that it is a critical tool for anyone serious about building the next generation of artificial intelligence.