From Pixels to Policy: How Embodied AI is Learning to Replicate Tasks from Video

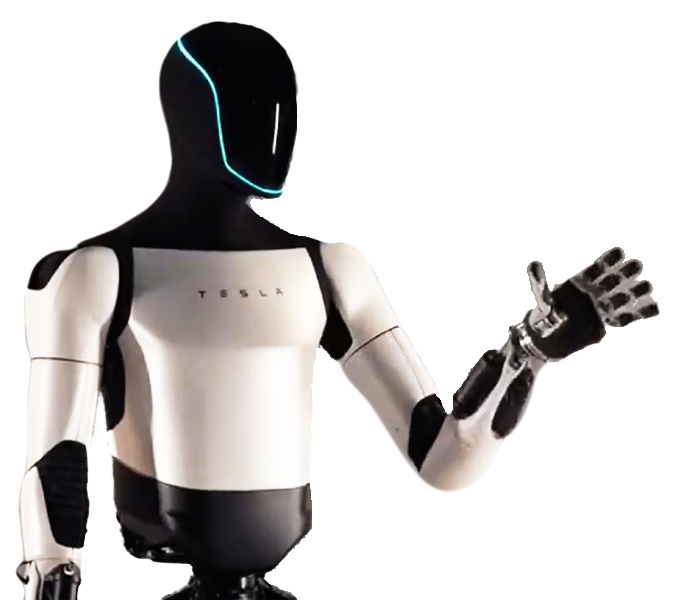

The field of artificial intelligence is witnessing a monumental shift, moving beyond digital text and images into the physical world. Embodied AI, the concept of intelligent agents that can perceive, reason, and act within a physical environment, is no longer a distant dream. A key catalyst for this revolution is the ability of AI models to learn complex tasks simply by observing them. Imagine a robot watching a YouTube tutorial on assembling furniture and then replicating the process flawlessly. This “watch and learn” paradigm is rapidly becoming a reality, fueled by breakthroughs in computer vision, large-scale foundation models, and accessible cloud computing platforms. This convergence is not just a topic of academic curiosity; it represents a fundamental change in how we approach robotics, automation, and human-AI interaction.

Recent developments, highlighted in the latest Google DeepMind News and Meta AI News, showcase models that can interpret unstructured video data and translate it into a sequence of executable actions for a robot. This process bypasses the need for laborious, hand-coded instructions or millions of trial-and-error cycles in reinforcement learning. Instead, it taps into the largest and most diverse dataset available: the internet’s vast repository of video content. In this article, we will delve into the technical underpinnings of this technology, exploring the core concepts, implementation pipelines, advanced techniques, and the critical role of platforms that are making this future accessible, with a particular focus on insights relevant to the latest Replicate News and the broader MLOps ecosystem.

The Core Concepts: Translating Video into Action

At its heart, teaching a robot to learn from video is a problem of translation—converting a sequence of pixels into a sequence of physical motor commands. This process can be broken down into three fundamental stages: video understanding, state representation, and policy learning. Each stage leverages state-of-the-art models and techniques from the wider AI landscape.

1. Video Understanding: The Eyes of the Agent

Before a robot can act, it must first perceive and comprehend the content of a video. This is far more complex than static image recognition. The model must understand temporal dynamics, object permanence, causal relationships, and human intent. Modern approaches utilize powerful architectures like Vision Transformers (ViT) and their video-centric variants (e.g., VideoMAE, TimeSformer). These models, often found in libraries discussed in Hugging Face Transformers News, excel at capturing spatio-temporal features from a sequence of video frames. They process the video and output a rich, high-dimensional embedding—a numerical representation that encodes the essential information about the actions being performed.

# Example: Extracting video features using Hugging Face Transformers

# This demonstrates the first step: understanding the video content.

import torch

from transformers import VideoMAEImageProcessor, VideoMAEForVideoClassification

import numpy as np

import av # for video decoding

# Load a pre-trained video understanding model and processor

processor = VideoMAEImageProcessor.from_pretrained("MCG-NJU/videomae-base")

model = VideoMAEForVideoClassification.from_pretrained("MCG-NJU/videomae-base")

# Helper function to read video frames

def read_video_pyav(container, indices):

frames = []

container.seek(0)

for i, frame in enumerate(container.decode(video=0)):

if i in indices:

frames.append(frame.to_ndarray(format="rgb24"))

return np.stack(frames)

# Assume 'video_path' is the path to a video file (e.g., "assembly_task.mp4")

# For this example, we'll generate a dummy video tensor

num_frames = 16

dummy_video_frames = list(np.random.randn(num_frames, 3, 224, 224))

# Process the video frames and get model inputs

inputs = processor(dummy_video_frames, return_tensors="pt")

# Forward pass to get the video embeddings (features)

# We can access the hidden states before the classification head

with torch.no_grad():

outputs = model(**inputs, output_hidden_states=True)

# The last hidden state serves as a powerful embedding of the video's content

video_embedding = outputs.hidden_states[-1].mean(dim=1) # Average pooling over frames

print("Shape of the video embedding:", video_embedding.shape)

# Expected Output: Shape of the video embedding: torch.Size([1, 768])2. State Representation and Policy Learning

The video embedding is the “what,” and the robot’s actions are the “how.” The bridge between them is the policy network. This network, typically built using frameworks like PyTorch News or TensorFlow News, learns a mapping (a “policy”) from a given state to a specific action. In imitation learning, the “state” is derived from the video embedding, and the model is trained to predict the actions that a human took in the video. This is a form of supervised learning where the model minimizes the difference between its predicted actions and the ground-truth actions from a dataset of demonstrations. This approach is significantly more data-efficient than traditional reinforcement learning for many manipulation tasks.

Building the “Watch and Learn” Implementation Pipeline

Creating a system that can learn from video involves a complex pipeline that spans data processing, model architecture, and robotics integration. The challenge lies in aligning the visual domain of online videos with the physical domain of a robot’s actuators.

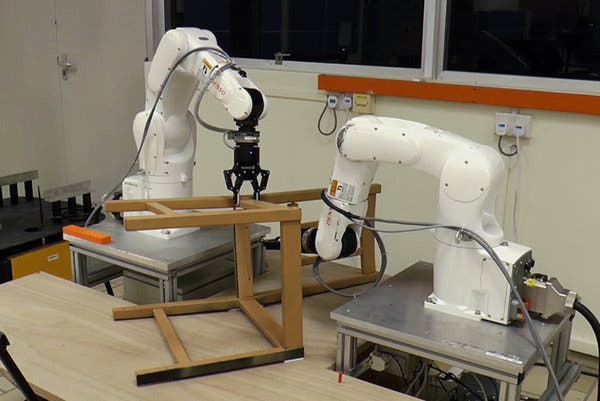

Data Collection and Simulation

The biggest hurdle is the “correspondence problem”: how to map actions seen in a 2D video (e.g., a human hand turning a screwdriver) to the specific joint angles and gripper forces of a robot. One solution is to collect paired data: videos of a robot being teleoperated to perform a task, paired with the exact motor commands sent during the operation. However, this is expensive and time-consuming. A more scalable approach, often highlighted in NVIDIA AI News, is to use hyper-realistic simulators like NVIDIA Isaac Sim. In a simulator, we can generate vast amounts of perfectly-labeled data, capturing video frames and corresponding robot actions simultaneously. Techniques like domain randomization (varying textures, lighting, and object positions) are then used to help the model generalize from simulation to the real world (sim-to-real transfer).

Model Architecture for Imitation

A common architectural pattern for this task is an encoder-decoder model, often based on the Transformer architecture.

- Encoder: A pre-trained vision model (as described above) processes the video frames and produces a sequence of embeddings.

- Decoder: A Transformer decoder or an RNN takes these embeddings as input and autoregressively generates a sequence of actions. Each predicted action might be a 7-dimensional vector representing the desired change in the robot arm’s end-effector position (x, y, z) and orientation (quaternion), plus a value for the gripper state (open/close).

This architecture allows the model to learn the temporal dependencies of a task—for example, that it must first grasp the screwdriver before it can turn the screw.

# Conceptual PyTorch code for a simple policy network

# This model takes a video embedding and outputs a sequence of actions.

import torch

import torch.nn as nn

class VideoToActionPolicy(nn.Module):

def __init__(self, embedding_dim, action_dim, num_decoder_layers, nhead):

super(VideoToActionPolicy, self).__init__()

self.embedding_dim = embedding_dim

self.action_dim = action_dim

# A linear layer to project video embedding to the model's dimension

self.input_proj = nn.Linear(embedding_dim, 512)

# A standard Transformer Decoder

decoder_layer = nn.TransformerDecoderLayer(d_model=512, nhead=nhead)

self.transformer_decoder = nn.TransformerDecoder(decoder_layer, num_layers=num_decoder_layers)

# The output layer that predicts the action

self.action_head = nn.Linear(512, action_dim)

# A learnable token to start the action generation sequence

self.tgt_token = nn.Parameter(torch.randn(1, 1, 512))

def forward(self, video_embedding, max_actions=50):

# video_embedding shape: (batch_size, embedding_dim)

# Project the video embedding to serve as the memory for the decoder

memory = self.input_proj(video_embedding).unsqueeze(0) # Shape: (1, batch_size, 512)

# Start with the initial target token

tgt = self.tgt_token.repeat(1, video_embedding.size(0), 1) # Shape: (1, batch_size, 512)

actions = []

for _ in range(max_actions):

output = self.transformer_decoder(tgt, memory)

# Get the output from the last token in the sequence

last_output = output[-1, :, :]

action = self.action_head(last_output)

actions.append(action)

# This part is simplified; in a real scenario, the predicted action

# would be embedded and fed back into the next step of the decoder.

# For simplicity, we just generate a fixed number of actions here.

return torch.stack(actions, dim=1) # Shape: (batch_size, max_actions, action_dim)

# --- Usage ---

# embedding_dim = 768 (from VideoMAE)

# action_dim = 7 (e.g., x,y,z position, quaternion orientation, gripper)

policy_model = VideoToActionPolicy(embedding_dim=768, action_dim=7, num_decoder_layers=4, nhead=8)

dummy_embedding = torch.randn(1, 768)

predicted_actions = policy_model(dummy_embedding)

print("Shape of predicted actions:", predicted_actions.shape)

# Expected Output: Shape of predicted actions: torch.Size([1, 50, 7])Advanced Techniques and The Role of Foundation Models

The latest breakthroughs are moving beyond simple imitation and toward more generalized, flexible robotic agents. This is where large-scale foundation models, similar to those discussed in OpenAI News and Anthropic News, are making a significant impact.

One-Shot and Language-Conditioned Learning

Instead of training a model from scratch for every new task, researchers are fine-tuning massive, pre-trained multimodal models. A model like Google’s RT-2 (Robotic Transformer 2) is a Vision-Language-Action (VLA) model. It’s pre-trained on vast web-scale text and image data, which gives it a general understanding of objects, concepts, and semantics. By fine-tuning this model on a relatively small dataset of robotic data, it can exhibit emergent capabilities. It can reason about novel tasks and generalize from just one or a few demonstrations (one-shot or few-shot learning). Furthermore, you can condition its behavior with natural language, instructing it to “pick up the apple” instead of the “red ball,” and it will understand the semantic difference and act accordingly.

Democratizing Access with Cloud Platforms

Training and deploying these massive models requires immense computational power, often beyond the reach of individual developers or smaller companies. This is where cloud ML platforms become essential. Services like AWS SageMaker and Azure Machine Learning provide the raw infrastructure, but newer platforms are focusing on developer experience and ease of use. As per recent Replicate News, their platform allows developers to run and fine-tune state-of-the-art models via a simple API call, abstracting away the complexities of GPU management, dependency handling, and model scaling. This is crucial for robotics, where a developer might want to call a powerful vision model to interpret a scene without having to manage the inference server themselves.

# Example: Using the Replicate Python client to run a video model

# This shows how a complex, pre-trained model can be accessed via a simple API,

# accelerating development for robotics applications.

import replicate

import os

# Make sure to set your Replicate API token in your environment variables

# os.environ["REPLICATE_API_TOKEN"] = "your_api_token"

# Let's use a video-to-text model as an example of processing video input.

# A robotics pipeline could use a similar model to get a description of a task

# before attempting it.

print("Running a video-to-text model on Replicate...")

output = replicate.run(

"nateraw/video-llava:a494250c04691c45d2a5a58433634562a6b0100754585690b347e012f45211f4",

input={

"video_path": "https://replicate.delivery/pbxt/IV2YvjQ0W2a0xryG2i9S3LLkA4ANJQA3t9rCC4xaK6A5gGEiA/video.mp4",

"prompt": "What is the person in the video doing? Describe the steps."

}

)

# The 'output' is a generator that yields the text as it's generated.

print("Model Output:")

description = ""

for item in output:

description += item

print(item, end="")

print("\n\nThis text description could be used as input for a language-conditioned policy.")Best Practices, Challenges, and Optimization

While the “watch and learn” paradigm is powerful, several significant challenges and considerations remain on the path to widespread adoption.

1. Bridging the Sim-to-Real Gap

Models trained exclusively in simulation often fail in the real world due to subtle differences in physics, lighting, and sensor noise. Best practices involve extensive domain randomization in the simulator and fine-tuning the model on a smaller, real-world dataset. Experiment tracking tools, as seen in Weights & Biases News or MLflow News, are critical for managing these complex sim-to-real experiments.

2. The Unstructured Data Problem

The ultimate goal is to learn from any video, like those on YouTube. However, these videos lack paired action data. This is an active area of research. Some approaches involve using human pose estimation to infer human actions, while others use self-supervised learning techniques to build powerful representations from unlabeled video before fine-tuning on a smaller set of labeled robotic data. Storing and querying the embeddings from these vast video datasets could leverage vector databases, a topic often covered in Pinecone News or Milvus News.

3. Safety and Robustness

A robot that misinterprets a video can be dangerous. Building safety constraints, anomaly detection, and robust error handling is paramount. The policy network must be reliable and predictable, especially when encountering situations not seen during training. This is a critical aspect of moving from lab research to real-world deployment.

4. Inference Optimization

For a robot to act in real-time, the model must run with extremely low latency. This requires significant optimization. Tools like NVIDIA’s TensorRT News and the open standard ONNX News are used to compile and accelerate trained models for deployment on the robot’s onboard compute hardware. Efficient inference servers like the Triton Inference Server News also play a key role in deploying these models at scale.

Conclusion: The Dawn of Embodied Intelligence

The ability of AI to learn physical tasks by watching videos represents a profound leap forward for robotics and automation. We’ve moved from a world of explicitly programmed instructions to one of implicit learning and generalization. This paradigm shift is enabled by the convergence of powerful vision-language models, scalable simulation environments, and cloud platforms like Replicate that democratize access to cutting-edge AI. While significant challenges like the sim-to-real gap and data correspondence remain, the pace of innovation is staggering.

For developers and engineers, the next steps involve exploring multimodal model architectures, leveraging open-source robotics frameworks, and experimenting with platforms that simplify the MLOps lifecycle. As foundation models continue to grow in capability and robotic hardware becomes more accessible, the agents that populate our factories, homes, and hospitals will increasingly be those that learned not from a programmer’s code, but from observing us.