Unlocking 6x Faster Model Training: A Deep Dive into DeepSpeed ZeRO-Offload++

The relentless growth in the scale of AI models, a trend visible in announcements from labs such as OpenAI and Meta AI, has pushed GPU memory to its limits. Training models with billions, or even trillions, of parameters is no longer a theoretical exercise but a practical challenge for research labs and enterprises alike. The primary bottleneck is often not raw computational power, but the finite VRAM available on a single GPU. While distributed training across multiple GPUs is a standard solution, it introduces communication overhead and significant infrastructure costs. In this landscape, innovations that optimize memory usage are not just beneficial; they are essential for progress.

Microsoft’s DeepSpeed library has consistently been at the forefront of this effort, and ZeRO-Offload++ marks a notable change in how large-scale training uses the host machine. Moving beyond simple offloading, the technique establishes a collaborative “Twin-Flow” architecture between the CPU and GPU. By partitioning work between the two processors and running it in parallel, ZeRO-Offload++ is reported to reach up to 6x higher training throughput than the original ZeRO-Offload, without reducing numerical precision. This article provides a technical exploration of ZeRO-Offload++, detailing its core concepts, practical implementation, and best practices for maximizing its performance in your own projects, particularly within the popular PyTorch and Hugging Face Transformers ecosystems.

The Evolution of ZeRO: From Offload to a Collaborative Twin-Flow

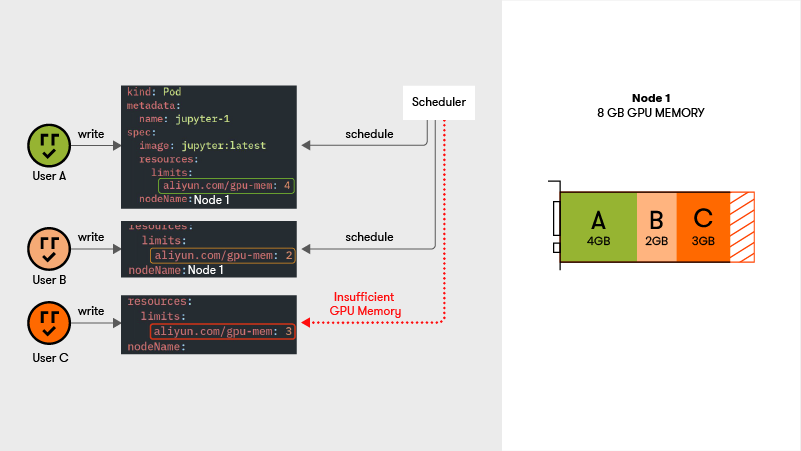

To fully appreciate the ingenuity of ZeRO-Offload++, it’s crucial to understand its foundation. The Zero Redundancy Optimizer (ZeRO) family of technologies was designed to eliminate memory redundancy in data-parallel training, where each GPU traditionally holds a full copy of the model parameters, gradients, and optimizer states.

From ZeRO to ZeRO-Offload

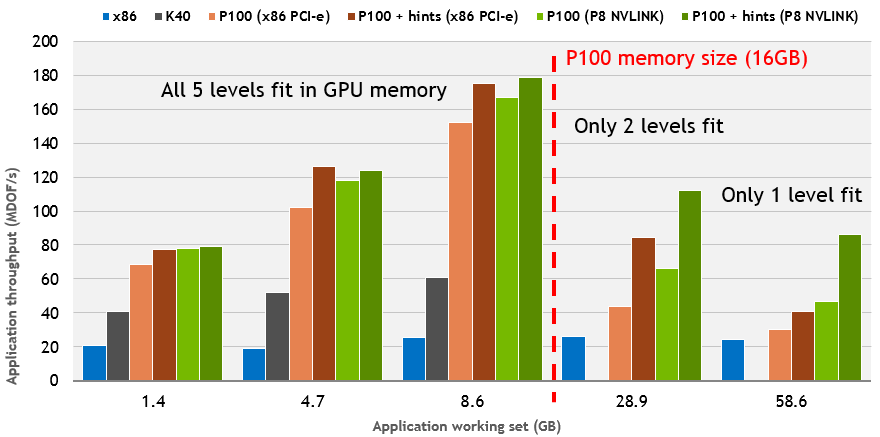

ZeRO addressed this by partitioning these components across the available GPUs. ZeRO-Offload took this a step further by recognizing that the CPU’s main memory, while slower than GPU VRAM, is significantly larger. It allowed developers to offload the optimizer states and gradients—which consume a vast amount of memory but are used less frequently than model parameters—to the host CPU RAM. This freed up precious VRAM to accommodate larger models or bigger batch sizes. However, this came at the cost of data transfer latency over the PCIe bus, which could sometimes become a new bottleneck.
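To make the memory argument concrete, here is a rough back-of-the-envelope sketch. It assumes mixed-precision training with Adam, where each parameter costs about 2 bytes for the FP16 weight, 2 bytes for the FP16 gradient, and 12 bytes of FP32 optimizer state (master weight, momentum, variance). The function name and the 1.5B-parameter example are illustrative only, and activations, buffers, and fragmentation are ignored.

```python
# Rough per-parameter memory accounting for mixed-precision Adam training.
BYTES_FP16_PARAMS = 2   # fp16 working copy of the weights
BYTES_FP16_GRADS = 2    # fp16 gradients
BYTES_OPTIMIZER = 12    # fp32 master weights + Adam momentum + Adam variance


def model_state_gib(num_params: float,
                    offload_optimizer: bool = False,
                    offload_grads: bool = False) -> dict:
    """Estimate GPU and CPU memory (GiB) used by model states only."""
    gpu_bytes = BYTES_FP16_PARAMS  # the fp16 weights stay on the GPU here
    cpu_bytes = 0

    if offload_optimizer:
        cpu_bytes += BYTES_OPTIMIZER
    else:
        gpu_bytes += BYTES_OPTIMIZER

    if offload_grads:
        cpu_bytes += BYTES_FP16_GRADS
    else:
        gpu_bytes += BYTES_FP16_GRADS

    gib = 1024 ** 3
    return {
        "gpu_gib": num_params * gpu_bytes / gib,
        "cpu_gib": num_params * cpu_bytes / gib,
    }


if __name__ == "__main__":
    n = 1.5e9  # hypothetical 1.5B-parameter model
    print("no offload:  ", model_state_gib(n))
    print("with offload:", model_state_gib(n, offload_optimizer=True,
                                           offload_grads=True))
```

Under these assumptions, offloading moves 14 of the 16 bytes per parameter to host RAM, which is why the technique frees so much VRAM and also why the PCIe link becomes the next thing to watch.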

Introducing the “Twin-Flow” Architecture

ZeRO-Offload++ fundamentally re-architects this process. Instead of a sequential “compute on GPU, then transfer to CPU” workflow, it creates two parallel, asynchronous data flows:

- GPU Compute Flow: This stream is dedicated to what GPUs do best: massively parallel computation. It handles the forward and backward propagation through the model layers, operating on the parameters that reside in high-speed VRAM.

- CPU Update Flow: This stream leverages the CPU’s large memory capacity and powerful cores. It receives gradients from the GPU and performs the parameter updates using the optimizer states stored in CPU RAM.

The strength of the Twin-Flow system lies in its ability to overlap these operations. While the GPU is performing the backward pass for layer L, the CPU is concurrently updating the parameters for an already-computed layer L+k (the backward pass visits layers from last to first, so higher-indexed layers finish earlier). This scheduling hides much of the latency of the CPU-GPU data transfers, so that neither processor sits idle waiting for the other. The result is a system where the CPU is no longer just a task scheduler but an active, collaborative partner in the training process, a principle that is gaining traction across the AI hardware landscape.
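The overlap idea can be illustrated with a toy producer/consumer simulation. This is not DeepSpeed's implementation: the "GPU" and "CPU" are ordinary Python threads, `time.sleep` stands in for real work, and the layer count and timings are made-up values chosen only to show the scheduling pattern.

```python
import queue
import threading
import time

NUM_LAYERS = 6
BACKWARD_SECONDS = 0.02  # simulated GPU time per layer (made-up value)
UPDATE_SECONDS = 0.02    # simulated CPU optimizer time per layer (made-up value)


def gpu_backward(grad_queue: queue.Queue) -> None:
    """Producer: walk the layers last-to-first, emitting one gradient per layer."""
    for layer in reversed(range(NUM_LAYERS)):
        time.sleep(BACKWARD_SECONDS)  # stand-in for the backward kernel
        grad_queue.put(layer)         # stand-in for the GPU -> CPU copy
    grad_queue.put(None)              # sentinel: backward pass finished


def cpu_update(grad_queue: queue.Queue, updated: list) -> None:
    """Consumer: run the optimizer step for each layer as its gradient arrives."""
    while True:
        layer = grad_queue.get()
        if layer is None:
            break
        time.sleep(UPDATE_SECONDS)    # stand-in for the CPU optimizer update
        updated.append(layer)


def run_overlapped() -> tuple:
    """Run both flows concurrently; return the update order and elapsed time."""
    grad_queue = queue.Queue()
    updated = []
    start = time.perf_counter()
    consumer = threading.Thread(target=cpu_update, args=(grad_queue, updated))
    consumer.start()
    gpu_backward(grad_queue)
    consumer.join()
    return updated, time.perf_counter() - start


if __name__ == "__main__":
    order, elapsed = run_overlapped()
    sequential = NUM_LAYERS * (BACKWARD_SECONDS + UPDATE_SECONDS)
    print("update order:", order)
    print(f"overlapped: {elapsed:.2f}s, sequential estimate: {sequential:.2f}s")
```

Because the consumer starts updating the last layer while the producer is still working on earlier ones, the total time approaches the longer of the two flows plus one extra step, rather than their sum.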

Enabling this powerful feature is surprisingly straightforward. It’s controlled through the DeepSpeed configuration file, where you specify the offload targets for both parameters and optimizer states.

```json
{
  "train_batch_size": 16,
  "steps_per_print": 2000,
  "optimizer": {
    "type": "AdamW",
    "params": {
      "lr": 0.001,
      "betas": [0.9, 0.999],
      "eps": 1e-8,
      "weight_decay": 0.01
    }
  },
  "zero_optimization": {
    "stage": 3,
    "offload_param": {
      "device": "cpu",
      "pin_memory": true
    },
    "offload_optimizer": {
      "device": "cpu",
      "pin_memory": true
    },
    "overlap_comm": true,
    "contiguous_gradients": true,
    "stage3_prefetch_bucket_size": "auto",
    "stage3_param_persistence_threshold": "auto"
  },
  "fp16": {
    "enabled": true
  }
}
```

A Practical Guide to Implementing ZeRO-Offload++

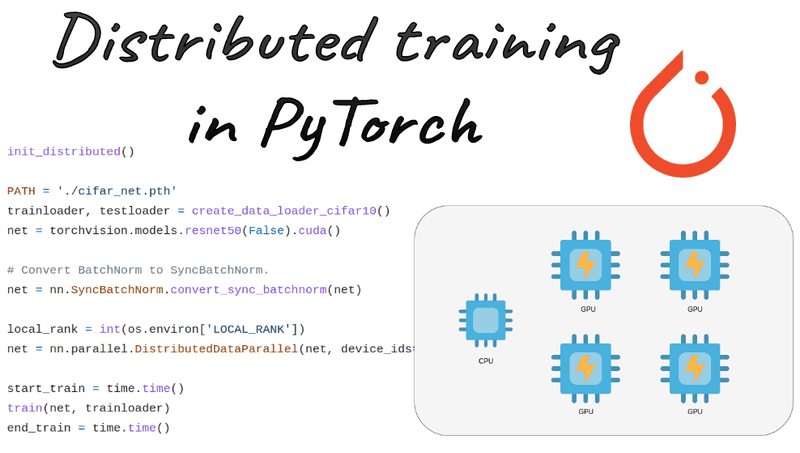

Integrating DeepSpeed into an existing PyTorch training script requires minimal code changes, making it accessible even for complex projects. The primary modifications involve initializing DeepSpeed and replacing standard PyTorch calls with their DeepSpeed equivalents.

Standard PyTorch vs. DeepSpeed-Powered Training

Let’s consider a typical training scenario using a model from the Hugging Face Hub. Your standard training loop involves creating the model and optimizer, then iterating through your data, performing forward/backward passes, and stepping the optimizer.

To enable DeepSpeed, you first create a configuration file (like the JSON example above) and then use the deepspeed.initialize function to wrap your model and optimizer. This function handles all the complex backend setup for distributed training, partitioning, and offloading based on your configuration.
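If you prefer to generate the configuration from code, a minimal sketch might look like the following. The helper names `build_config` and `write_config` are hypothetical. The `ratio` key under `offload_optimizer` is an assumption based on the DeepSpeed configuration documentation, where it is described as the fraction of optimizer states offloaded to the CPU under ZeRO Stage 3; check that your installed version supports it before relying on it.

```python
import json
from pathlib import Path


def build_config(offload_ratio: float = 1.0) -> dict:
    """Build a DeepSpeed config dict mirroring the article's JSON example."""
    return {
        "train_batch_size": 16,
        "optimizer": {
            "type": "AdamW",
            "params": {"lr": 0.001, "betas": [0.9, 0.999],
                       "eps": 1e-8, "weight_decay": 0.01},
        },
        "zero_optimization": {
            "stage": 3,
            "offload_param": {"device": "cpu", "pin_memory": True},
            "offload_optimizer": {
                "device": "cpu",
                "pin_memory": True,
                # Assumption: "ratio" is the share of optimizer states kept on
                # the CPU (1.0 = all of them). Verify against your DeepSpeed
                # version's documentation.
                "ratio": offload_ratio,
            },
            "overlap_comm": True,
            "contiguous_gradients": True,
        },
        "fp16": {"enabled": True},
    }


def write_config(path: str, offload_ratio: float = 1.0) -> Path:
    """Serialize the config to a JSON file and return its path."""
    target = Path(path)
    target.write_text(json.dumps(build_config(offload_ratio), indent=2))
    return target


if __name__ == "__main__":
    # Print the config; call write_config("deepspeed_config.json") to save it.
    print(json.dumps(build_config(offload_ratio=0.3), indent=2))
```

A script that calls `deepspeed.add_config_arguments` can then be launched with something like `deepspeed train.py --deepspeed --deepspeed_config deepspeed_config.json`, where `train.py` is a placeholder for your own script.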

Activating ZeRO-Offload++ with `deepspeed.initialize`

The code modifications are focused on the training script’s setup and loop. DeepSpeed’s API is designed to be a lightweight wrapper that enhances, rather than replaces, your existing PyTorch logic. This seamless integration is a key reason for its popularity.

Here is a practical example demonstrating the transformation of a standard PyTorch training script to one powered by DeepSpeed with ZeRO-Offload++.

```python
import argparse

import deepspeed
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# 1. Add DeepSpeed command-line arguments
# (the DeepSpeed runner will provide these)
parser = argparse.ArgumentParser()
parser.add_argument("--local_rank", type=int, default=-1,
                    help="local rank passed from distributed launcher")
parser = deepspeed.add_config_arguments(parser)
args = parser.parse_args()

# 2. Initialize the model and tokenizer
model_name = "gpt2-large"
model = AutoModelForCausalLM.from_pretrained(model_name)
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Dummy input data
input_ids = torch.randint(0, tokenizer.vocab_size, (4, 512))

# 3. Initialize the DeepSpeed engine
# The 'deepspeed_config.json' file contains the ZeRO-Offload++ settings
model_engine, optimizer, _, _ = deepspeed.initialize(
    args=args,
    model=model,
    model_parameters=model.parameters(),
)

# Move inputs to the correct device
input_ids = input_ids.to(model_engine.device)

# 4. Modified training loop
for step in range(10):
    # Forward pass
    outputs = model_engine(input_ids, labels=input_ids)
    loss = outputs.loss
    print(f"Step {step}, Loss: {loss.item()}")

    # Backward pass - use the model engine
    model_engine.backward(loss)

    # Optimizer step - use the model engine
    model_engine.step()
```

Notice the key changes: the model is wrapped by deepspeed.initialize, which returns a model engine, and the standard loss.backward() and optimizer.step() calls are replaced by model_engine.backward(loss) and model_engine.step(). This simple change unlocks the backend optimizations, and the performance data can be logged with tools like MLflow or Weights & Biases for analysis.

Advanced Optimizations and Performance Tuning

While the default ZeRO-Offload++ configuration provides significant gains, you can extract even more performance by tuning its parameters and combining it with other DeepSpeed features. This level of control is crucial for production environments on platforms like Azure Machine Learning or AWS SageMaker.

Fine-Tuning the Twin-Flow

The performance of the Twin-Flow architecture depends on a delicate balance between CPU computation, GPU computation, and the data transfer bandwidth of the PCIe bus. The DeepSpeed configuration file offers several knobs to tune this balance:

- `pin_memory`: Setting this to `true` for `offload_param` and `offload_optimizer` is highly recommended. It instructs the CUDA driver to use “pinned” (or page-locked) host memory, which enables much faster, asynchronous data transfers between the CPU and GPU.

- `overlap_comm`: This boolean flag explicitly enables the communication-computation overlap that is central to the Twin-Flow design. It should almost always be set to `true` when using offloading.

- `stage3_prefetch_bucket_size`: This controls the size of the buckets used to prefetch parameters from the CPU to the GPU just before they are needed for the forward/backward pass. Setting it to “auto” is a good starting point, but manual tuning can sometimes yield better performance based on your specific model architecture and hardware.

Synergy with Mixed Precision and Activation Checkpointing

ZeRO-Offload++ is not an isolated feature; its true power is realized when combined with other memory-saving techniques. Using it alongside FP16/BF16 mixed-precision training and activation checkpointing creates a formidable optimization stack.

- Mixed Precision: Stores working weights, gradients, and activations in a 16-bit format, roughly halving their footprint relative to FP32 (the optimizer still keeps FP32 master weights). It also speeds up computation on modern GPUs with Tensor Cores.

- Activation Checkpointing: Instead of storing all activations in VRAM during the forward pass for use in the backward pass, this technique keeps only a subset (the checkpoints) and recomputes the rest on the fly during the backward pass. This trades a modest amount of extra computation for a large reduction in memory usage.
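The trade-off behind these two techniques can be sketched with a simple estimate. The model below is a deliberate simplification: it assumes every layer produces the same number of activation values, that one checkpoint is kept per fixed-size segment of layers, and that one segment at a time is recomputed during the backward pass. The function names and the per-layer activation count are hypothetical.

```python
import math
from typing import Optional


def layers_held_at_peak(num_layers: int, segment: Optional[int] = None) -> int:
    """Layers' worth of activations resident at peak.

    Without checkpointing (segment=None) every layer's activations are kept.
    With checkpointing, one checkpoint per segment is kept for the whole pass,
    plus the activations of the single segment currently being recomputed.
    """
    if segment is None:
        return num_layers
    checkpoints = math.ceil(num_layers / segment)
    return checkpoints + segment


def activation_gib(layers_held: int, values_per_layer: float,
                   bytes_per_value: int) -> float:
    """Convert a layer count into GiB for a given numeric precision."""
    return layers_held * values_per_layer * bytes_per_value / 1024 ** 3


if __name__ == "__main__":
    layers = 48
    values_per_layer = 5e8              # hypothetical, for one micro-batch
    segment = round(math.sqrt(layers))  # a common heuristic: ~sqrt(N) layers

    baseline = activation_gib(layers_held_at_peak(layers), values_per_layer, 4)
    fp16_only = activation_gib(layers_held_at_peak(layers), values_per_layer, 2)
    combined = activation_gib(layers_held_at_peak(layers, segment),
                              values_per_layer, 2)
    print(f"fp32, no checkpointing: {baseline:.1f} GiB")
    print(f"fp16, no checkpointing: {fp16_only:.1f} GiB")
    print(f"fp16 + checkpointing:   {combined:.1f} GiB")
```

In this simplified model the price of checkpointing is roughly one additional forward pass per training step, since each segment is recomputed once.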

By combining these, you can train models far larger than what would otherwise fit in a single GPU’s VRAM. This combined approach is essential for tackling the next generation of models from labs such as Google DeepMind and Mistral AI.

```json
{
  "train_micro_batch_size_per_gpu": 4,
  "gradient_accumulation_steps": 8,
  "steps_per_print": 100,
  "zero_optimization": {
    "stage": 3,
    "offload_param": {
      "device": "cpu",
      "pin_memory": true
    },
    "offload_optimizer": {
      "device": "cpu",
      "pin_memory": true
    },
    "overlap_comm": true,
    "contiguous_gradients": true,
    "reduce_bucket_size": "auto",
    "stage3_prefetch_bucket_size": "auto",
    "stage3_param_persistence_threshold": "auto",
    "sub_group_size": 1e9
  },
  "fp16": {
    "enabled": true,
    "loss_scale": 0,
    "loss_scale_window": 1000,
    "hysteresis": 2,
    "min_loss_scale": 1
  },
  "activation_checkpointing": {
    "partition_activations": true,
    "cpu_checkpointing": true,
    "contiguous_memory_optimization": false,
    "synchronize_checkpoint_boundary": false
  },
  "wall_clock_breakdown": false
}
```

Best Practices, Pitfalls, and System Considerations

To fully harness the power of ZeRO-Offload++, it’s important to consider the entire system, not just the software configuration. The hardware and environment play a critical role in its effectiveness.

Maximizing Throughput: A Checklist for Success

- High-Performance CPU: Since the CPU is now an active participant, a slow or underpowered CPU will become the new bottleneck. Systems with high core counts and high clock speeds will see the best results.

- PCIe Bandwidth: The communication between the CPU and GPU happens over the PCIe bus. A system with PCIe Gen4 or Gen5 offers significantly higher bandwidth than older generations, which shortens the time each transfer takes.

- Sufficient RAM: Ensure your system has enough main memory to hold the offloaded parameters and optimizer states. For large models, this can easily exceed 100-200 GB of RAM.

- Profile Your Workloads: Use the DeepSpeed profiler or NVIDIA Nsight to understand where time is being spent. This will help you identify whether your bottleneck is CPU compute, GPU compute, or I/O, allowing for more targeted tuning.
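As a quick sanity check on the PCIe item in this checklist, the sketch below estimates how long it takes to move one full set of FP16 gradients across the bus. The bandwidth figures are approximate theoretical x16 values per direction, the `efficiency` factor of 0.8 is a placeholder assumption rather than a measured number, and the 7B-parameter model is only an example.

```python
# Approximate theoretical PCIe x16 bandwidth per direction, in GB/s.
PCIE_X16_GBPS = {"gen3": 16.0, "gen4": 32.0, "gen5": 64.0}


def transfer_seconds(num_params: float, bytes_per_value: int,
                     generation: str, efficiency: float = 0.8) -> float:
    """Estimate the time to copy num_params values across the PCIe bus.

    `efficiency` scales the theoretical bandwidth down to something closer to
    what real transfers achieve; 0.8 is an assumed placeholder.
    """
    bandwidth = PCIE_X16_GBPS[generation] * 1e9 * efficiency
    return num_params * bytes_per_value / bandwidth


if __name__ == "__main__":
    params = 7e9  # hypothetical 7B-parameter model
    for gen in ("gen3", "gen4", "gen5"):
        seconds = transfer_seconds(params, 2, gen)
        print(f"{gen}: ~{seconds:.2f}s to move fp16 gradients once")
```

If the estimate for your hardware is a large fraction of your step time, transfers are unlikely to be fully hidden by overlap, and profiling should confirm whether the bus is the bottleneck.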

Common Pitfalls to Avoid

- Ignoring the CPU: A common mistake is pairing a top-of-the-line GPU with a budget CPU. With ZeRO-Offload++, the CPU’s performance is directly tied to overall training throughput.

- Misconfigured Environment: Using outdated drivers, CUDA versions, or communication libraries (like NCCL) can lead to suboptimal performance or even runtime errors. Always work within the recommended software stack.

- Over-Optimization: Starting with a highly complex configuration can make debugging difficult. It’s best to start with a simple ZeRO-Offload++ setup, verify it works, and then incrementally add features like mixed precision and activation checkpointing.

While platforms like Google Colab offer a great entry point, achieving the headline 6x speedup requires a well-balanced, server-grade machine, typically found in dedicated cloud environments like Vertex AI or Azure AI.

Conclusion: A New Era of Training Efficiency

DeepSpeed ZeRO-Offload++ is more than just an incremental improvement; it’s a fundamental rethinking of the roles of the CPU and GPU in deep learning. By creating a synergistic Twin-Flow architecture, it masterfully hides data transfer latency and transforms the CPU into a powerful computational partner. This unlocks unprecedented training throughput, enabling teams to iterate faster, train larger models, and explore new architectures on existing hardware.

The key takeaways are clear: developers can achieve significant performance gains with minimal code changes, all without sacrificing model accuracy. As models continue to grow, driven by work across the AI community from Anthropic to Cohere, techniques like ZeRO-Offload++ will be indispensable. For anyone working on large-scale model training within the PyTorch ecosystem, exploring and adopting this technology is no longer just an option; it is a competitive necessity for staying at the cutting edge of artificial intelligence.