Inverting the Design Paradigm: Goal-Driven Generation with AI

The traditional design process is a forward-facing endeavor. An engineer, artist, or developer creates a candidate solution, which is then tested against a set of performance criteria. This iterative cycle of “design-then-evaluate” has powered innovation for centuries. However, recent advancements in artificial intelligence, particularly from research hubs like the MIT-IBM Watson AI Lab, are flipping this model on its head. Welcome to the era of inverse design, or goal-driven generation, where we define the desired outcome first and task AI with discovering the optimal design to achieve it. This paradigm shift is not just an academic curiosity; it’s a powerful, practical approach that is reshaping industries from materials science and drug discovery to software engineering and creative arts.

Instead of asking, “What does this design do?” we now ask, “What design achieves this specific goal?” This inversion is made possible by the convergence of powerful generative models, sophisticated optimization algorithms, and scalable cloud computing. This article explores the technical underpinnings of the approach and offers a roadmap for implementing goal-driven generation in your own projects. We cover the core concepts, implementation strategies using modern AI frameworks, advanced techniques, and the best practices and tooling ecosystem that support them, with practical code examples along the way.

Understanding the Core Concepts of Inverse Design

At its heart, inverse design reframes a problem from evaluation to generation. The traditional “forward” model is a function f(x) = y, where x is the set of design parameters and y is the performance outcome; we spend our time tweaking x to get a desirable y. The inverse problem seeks a function g(y) = x: we provide the desired outcome y, and the function returns design parameters x. This is often an ill-posed problem. Multiple designs x can lead to the same outcome y, and some outcomes may not be achievable by any design at all. AI provides the tools to navigate this complex, multi-modal landscape.
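To see the non-uniqueness concretely, here is a minimal sketch using a noise-free weighted-average pH model. That model is an assumption made for illustration, similar in spirit to the toy simulator introduced in the next section. Because it depends only on the ratio of base to total volume, infinitely many designs x map to the same outcome y, and targets outside the model's range have no solution. The helper names `forward_ph` and `inverse_ph` are hypothetical.

```python
def forward_ph(vol_acid, vol_base):
    """Hypothetical noise-free forward model f(x) = y: weighted average of pH 2 and pH 12."""
    return (vol_acid * 2.0 + vol_base * 12.0) / (vol_acid + vol_base)

def inverse_ph(target_ph, total_volume):
    """One branch of the inverse g(y) = x: fix the total volume, then solve for the split.

    Returns None when the target lies outside the achievable range [2, 12].
    """
    if not 2.0 <= target_ph <= 12.0:
        return None  # No design achieves this outcome
    base_fraction = (target_ph - 2.0) / 10.0
    vol_base = base_fraction * total_volume
    return total_volume - vol_base, vol_base

# Two different designs, one outcome: the inverse is not unique.
print(forward_ph(20, 80), forward_ph(10, 40))  # both 10.0
# Each choice of total volume yields a different valid design for the same target.
print(inverse_ph(10.0, 100), inverse_ph(10.0, 50))
print(inverse_ph(13.0, 100))  # None: the target is unreachable
```

Fixing the total volume is one way of choosing a single answer among many; in practice, extra objectives or constraints (cost, available volume) play that role.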

From Forward Simulation to Goal-Driven Optimization

Let’s start with a forward model. Imagine we’re designing a chemical mixture whose final pH is determined by the volumes of two solutions, Acid (A) and Base (B). The forward model is a simple simulation:

```python
import numpy as np

def simulate_ph(vol_acid, vol_base):
    """
    A simplified forward model simulating the pH of a mixture.
    This is a placeholder for a real, complex simulation.
    """
    # Assume Acid has pH of 2, Base has pH of 12
    # This is a highly simplified, non-physical model for illustration
    if vol_acid + vol_base == 0:
        return 7.0  # Neutral
    # A simple weighted average for demonstration
    ph = (vol_acid * 2.0 + vol_base * 12.0) / (vol_acid + vol_base)
    # Add a small deterministic non-linear term to make the landscape more interesting
    ph += np.sin(vol_acid - vol_base) * 0.1
    return ph

# Forward problem: What is the pH for a given design?
design_x = {'vol_acid': 50, 'vol_base': 50}
outcome_y = simulate_ph(**design_x)
print(f"Forward Model: For design {design_x}, the pH is {outcome_y:.2f}")
```

The inverse problem is: “What volumes of Acid and Base do I need to achieve a target pH of 8.5?” We can solve this with an optimizer. This is the simplest form of inverse design: framing it as an optimization task.

```python
from scipy.optimize import minimize

def objective_function(params, target_ph):
    """
    The function we want to minimize: the squared difference
    between simulated and target pH.
    """
    vol_acid, vol_base = params
    # Penalty for negative volumes (a safeguard; the bounds below already prevent this)
    if vol_acid < 0 or vol_base < 0:
        return 1e9  # A large number as a penalty
    simulated_ph = simulate_ph(vol_acid, vol_base)
    return (simulated_ph - target_ph) ** 2  # Squared error

# Inverse problem: Find the design that results in a target pH
target_ph = 8.5
initial_guess = [50, 50]  # Start with a reasonable guess
bounds = [(0, 100), (0, 100)]  # Constrain the search space (mL)

# With bounds and no explicit method, SciPy selects L-BFGS-B
result = minimize(objective_function, initial_guess, args=(target_ph,), bounds=bounds)

if result.success:
    optimal_design = {'vol_acid': result.x[0], 'vol_base': result.x[1]}
    final_ph = simulate_ph(**optimal_design)
    print(f"Inverse Solution: To achieve pH ~{target_ph}, use design:")
    print(f" - Acid Volume: {optimal_design['vol_acid']:.2f} mL")
    print(f" - Base Volume: {optimal_design['vol_base']:.2f} mL")
    print(f"Resulting pH: {final_ph:.2f}")
else:
    print("Optimization failed:", result.message)
```

This simple example demonstrates the fundamental shift. While effective, this approach can be slow if `simulate_ph` is computationally expensive (e.g., a full CFD simulation): without an analytic gradient, the optimizer estimates gradients by finite differences, so every step costs several additional simulator calls. This is where deep learning and generative models, built with frameworks such as PyTorch and TensorFlow, come into play.

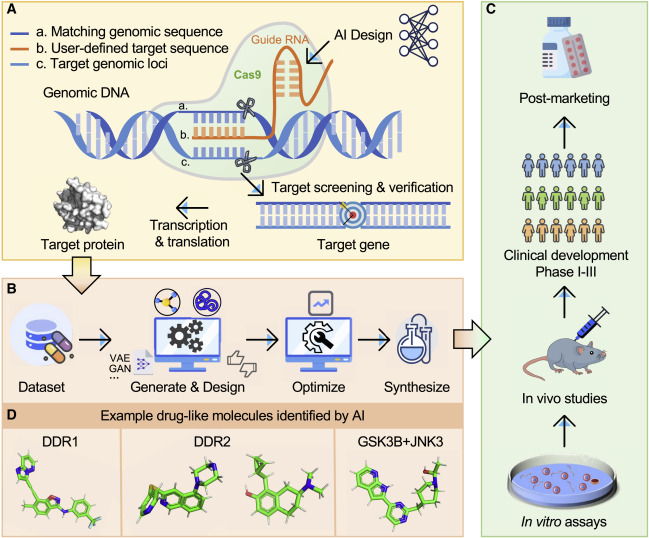

Implementation with Modern Generative Models

Generative models learn the underlying distribution of a dataset, enabling them to create new, similar data points. In inverse design, we can use them to learn the distribution of valid and high-performing designs. Instead of searching the entire parameter space, we can sample from a learned “design manifold,” dramatically accelerating the discovery process.
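As a minimal illustration of the "learn the distribution, then sample" idea, the sketch below uses the simplest possible generative model, a multivariate Gaussian fitted with NumPy, in place of a deep network. The dataset of "known good" designs is synthetic and hypothetical: acid/base volume pairs whose total is near 100 mL. New candidates are drawn from the fitted distribution, scored with a hypothetical noise-free pH model, and the best one is kept.

```python
import numpy as np

rng = np.random.default_rng(0)

def ph_model(designs):
    """Hypothetical noise-free pH model for an array of (vol_acid, vol_base) rows."""
    acid, base = designs[:, 0], designs[:, 1]
    return (acid * 2.0 + base * 12.0) / (acid + base)

# Hypothetical dataset of existing, valid designs: total volume near 100 mL.
acid = rng.uniform(20, 60, size=500)
base = 100 - acid + rng.normal(0, 2, size=500)
dataset = np.column_stack([acid, base])

# "Train" the generative model: estimate the mean and covariance of the designs.
mean = dataset.mean(axis=0)
cov = np.cov(dataset, rowvar=False)

# Generate: sample candidates from the learned distribution instead of a raw grid.
candidates = rng.multivariate_normal(mean, cov, size=1000)
candidates = candidates[(candidates > 0).all(axis=1)]  # Discard non-physical samples

# Goal-driven selection: keep the candidate closest to the target pH.
target_ph = 8.5
errors = np.abs(ph_model(candidates) - target_ph)
best = candidates[np.argmin(errors)]
print(f"Best sampled design: acid={best[0]:.1f} mL, base={best[1]:.1f} mL, "
      f"pH={ph_model(best[None, :])[0]:.2f}")
```

Because the fitted covariance encodes the strong acid/base correlation in the data, samples stay close to the "total near 100 mL" manifold rather than wandering over the whole parameter square.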

Using a Variational Autoencoder (VAE) for Design Generation

A VAE is a type of generative model that learns a compressed, continuous latent representation of the data. We can train a VAE on a dataset of existing designs (e.g., molecular structures, airfoil shapes). Once trained, the decoder part of the VAE acts as our generative function, and we can search the lower-dimensional latent space for points that decode into designs with our desired properties.
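In symbols, using standard VAE notation that the text does not define explicitly (encoder q_φ(z | x), decoder p_θ(x | z) with mean output D_θ(z), prior p(z) = N(0, I)), training maximizes the evidence lower bound (ELBO). Goal-driven generation then searches the latent space for a code z whose decoded design scores close to the target y*. Here P stands for whatever performance evaluator you use, and the squared-error form mirrors the loss in the PyTorch example that follows.

$$
\mathcal{L}(\theta,\phi;x) = \mathbb{E}_{q_\phi(z\mid x)}\left[\log p_\theta(x\mid z)\right] - D_{\mathrm{KL}}\left(q_\phi(z\mid x)\,\|\,p(z)\right)
$$

$$
z^\star = \arg\min_{z} \left(P\left(D_\theta(z)\right) - y^\star\right)^2, \qquad x^\star = D_\theta(z^\star)
$$

The KL term pulls the encoded designs toward the prior, which is what makes the latent space smooth enough to search.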

Here’s a conceptual example using PyTorch to define a VAE decoder. We assume the VAE has already been trained on a dataset of 2D shapes represented by a vector of points.

```python
import torch
import torch.nn as nn

# Assume a pre-trained VAE on a dataset of 2D shapes
# Each shape is represented by a flattened vector of size 128 (e.g., 64 x,y coordinates)
latent_dim = 16
design_dim = 128

class Decoder(nn.Module):
    def __init__(self, latent_dim, hidden_dim, output_dim):
        super().__init__()
        self.fc1 = nn.Linear(latent_dim, hidden_dim)
        self.fc2 = nn.Linear(hidden_dim, hidden_dim)
        self.fc3 = nn.Linear(hidden_dim, output_dim)
        self.relu = nn.ReLU()

    def forward(self, z):
        h = self.relu(self.fc1(z))
        h = self.relu(self.fc2(h))
        return torch.sigmoid(self.fc3(h))  # Output normalized coordinates

# --- Goal-Driven Generation ---
# 1. Load the pre-trained decoder
decoder = Decoder(latent_dim, 64, design_dim)
# decoder.load_state_dict(torch.load('decoder_weights.pth'))  # In a real scenario
decoder.eval()
decoder.requires_grad_(False)  # Freeze the weights: only the latent vector z is optimized

# 2. Define a differentiable performance evaluator
def evaluate_design_performance(design_vector):
    # Gradient-based latent search needs a differentiable evaluator, such as a
    # neural surrogate of an aerodynamics simulator. A true black-box simulator
    # would require a gradient-free optimizer instead.
    # For this example, a dummy function that favors designs with a larger
    # average coordinate value:
    return torch.mean(design_vector)

# 3. Optimize in the latent space
target_performance = 0.8
# Start with a random point in the latent space
z = torch.randn(1, latent_dim, requires_grad=True)
optimizer = torch.optim.Adam([z], lr=0.01)

print("Optimizing in latent space to find a design with high performance...")
for i in range(200):
    optimizer.zero_grad()
    generated_design = decoder(z)
    performance = evaluate_design_performance(generated_design)
    # Loss is the squared difference between current and target performance
    loss = (performance - target_performance) ** 2
    loss.backward()
    optimizer.step()
    if i % 20 == 0:
        print(f"Step {i}, Loss: {loss.item():.4f}, Performance: {performance.item():.4f}")

with torch.no_grad():
    final_design = decoder(z)
print(f"\nOptimization complete. Final design performance: "
      f"{evaluate_design_performance(final_design).item():.4f}")
```

This approach can be very effective. The optimization runs over a 16-dimensional latent vector instead of the 128-dimensional design vector, and as long as z stays in regions the VAE saw during training, decoded designs tend to resemble valid training examples. This technique is being explored heavily in drug discovery and materials science, often accelerated by NVIDIA GPUs and managed with platforms like AWS SageMaker.

Advanced Techniques: Combining Generative AI and Reinforcement Learning

For more complex design spaces where the relationship between parameters and outcomes is highly non-linear, we can employ even more advanced techniques. Combining generative models with reinforcement learning (RL) or leveraging Large Language Models (LLMs) for structured design generation opens up new frontiers.

Reinforcement Learning for Design Policy

In an RL setup, a generative model acts as the “agent.” The “action” is to generate a design or modify an existing one. The “environment” is the simulation or evaluation function, which returns a “reward” based on the design’s performance. The agent’s goal is to learn a policy that generates designs maximizing the cumulative reward.

This is particularly useful for sequential design problems, like generating a complex molecule bond-by-bond or designing a multi-step manufacturing process. While a full RL code example is extensive, the conceptual loop is key. Frameworks like Ray and libraries released by Google DeepMind are pivotal for scaling such complex RL workloads.
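The conceptual loop itself fits in a few lines. The toy below is an assumption-laden illustration, not a production RL setup. The "design" is a sequence of 10 binary choices made one step at a time, the policy is a vector of independent per-step logits, the reward function is hypothetical (it rewards matching a hidden target pattern), and the policy is updated with the basic REINFORCE policy-gradient rule using a running-average baseline.

```python
import numpy as np

rng = np.random.default_rng(42)
n_steps = 10                                       # Length of the design sequence
target = np.array([1, 0, 1, 1, 0, 0, 1, 0, 1, 1])  # Hypothetical hidden "ideal" design
logits = np.zeros(n_steps)                         # Policy parameters: one logit per step
learning_rate, baseline = 0.1, 0.0

def reward(design):
    """Hypothetical environment: fraction of steps that match the target pattern."""
    return float(np.mean(design == target))

for episode in range(2000):
    probs = 1.0 / (1.0 + np.exp(-logits))               # P(choose 1) at each step
    design = (rng.random(n_steps) < probs).astype(int)  # Agent acts step by step
    r = reward(design)                                  # Environment returns a reward
    # REINFORCE: the gradient of log Bernoulli(design | probs) w.r.t. logits is (design - probs)
    logits += learning_rate * (r - baseline) * (design - probs)
    baseline = 0.9 * baseline + 0.1 * r                 # Running-average baseline reduces variance

greedy_design = (logits > 0).astype(int)  # Most likely design under the learned policy
print("Learned design:", greedy_design, "reward:", reward(greedy_design))
```

In a real application the policy would be a neural network conditioned on the partial design, and the reward would come from a simulator or surrogate; the sample, score, and reinforce structure stays the same.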

Leveraging LLMs for Code and Configuration Generation

Recent models from OpenAI, Anthropic, and Mistral AI have shown that LLMs excel at generating structured output, including code, configuration files, and domain-specific description languages. We can use this for inverse design by giving the LLM a set of goals and constraints in natural language.

Imagine designing a web page. The goal is “a landing page for a new SaaS product that maximizes user sign-ups, with a blue and white color scheme and a prominent call-to-action button.” Using a framework like LangChain or the Hugging Face ecosystem, we can prompt an LLM to generate the HTML and CSS code.

```python
# Set to True to call a real text-generation model from the Hugging Face Hub.
# Mistral-7B-Instruct is a multi-gigabyte download and needs substantial GPU memory;
# in a real application you might use a hosted API or a smaller fine-tuned model.
USE_LLM = False
_generator = None

def generate_html_design(prompt):
    global _generator
    # Prompt in Mistral's [INST] ... [/INST] instruction format
    full_prompt = f"""[INST] You are an expert web designer. Your task is to generate the complete HTML and CSS for a web page based on the following requirements. The code should be self-contained in a single HTML file.

Requirements: {prompt}

Provide only the HTML code. [/INST]"""

    if USE_LLM:
        if _generator is None:
            from transformers import pipeline  # Load the model only when it is needed
            _generator = pipeline('text-generation', model='mistralai/Mistral-7B-Instruct-v0.2')
        # return_full_text=False strips the prompt from the returned text
        response = _generator(full_prompt, max_new_tokens=1024, return_full_text=False)
        return response[0]['generated_text']

    # For demonstration, return a hardcoded example of what the LLM might produce.
    return """<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>SaaS Product</title>
  <style>
    body { font-family: sans-serif; background-color: #f0f8ff; color: #333; }
    .container { max-width: 800px; margin: 40px auto; padding: 20px; text-align: center; }
    .cta-button { background-color: #007bff; color: white; padding: 15px 30px; border: none; border-radius: 5px; font-size: 1.2em; cursor: pointer; }
  </style>
</head>
<body>
  <div class="container">
    <h1>Our Revolutionary SaaS Product</h1>
    <p>Solve all your problems with our innovative solution.</p>
    <button class="cta-button">Sign Up Now</button>
  </div>
</body>
</html>
"""

# Inverse problem: Define the goal in natural language
design_goal = "A simple, clean landing page for a SaaS product. It must have a blue and white color scheme and a prominent call-to-action button for sign-ups."

# Generate the design
generated_code = generate_html_design(design_goal)
print("--- Generated HTML Code ---")
print(generated_code)
```

This approach, powered by the Hugging Face Transformers library, allows design at a much higher level of abstraction and lowers the barrier to creating complex artifacts. Note, however, that the LLM only proposes a candidate: it has no way of knowing whether the page actually maximizes sign-ups. A goal-driven loop still needs an evaluation step, such as automated requirement checks, user testing, or A/B experiments, whose results feed back into the next prompt.
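One way to close that loop is to check each generated page against the stated requirements before accepting it. The sketch below is a hypothetical, deliberately simple checker built on Python's standard-library `html.parser`. It verifies that the page contains a button with sign-up wording and that a blue color appears in the page's CSS, approximating "blue" as any `#rrggbb` color whose blue channel dominates. These heuristics, and the names `RequirementChecker` and `check_requirements`, are assumptions for illustration; a real pipeline would add rendering, accessibility checks, or user testing.

```python
import re
from html.parser import HTMLParser

class RequirementChecker(HTMLParser):
    """Hypothetical checker: collects button labels and the text of <style> blocks."""

    def __init__(self):
        super().__init__()
        self.in_button = False
        self.in_style = False
        self.button_labels = []
        self.css = []

    def handle_starttag(self, tag, attrs):
        if tag == "button":
            self.in_button = True
            self.button_labels.append("")
        elif tag == "style":
            self.in_style = True

    def handle_endtag(self, tag):
        if tag == "button":
            self.in_button = False
        elif tag == "style":
            self.in_style = False

    def handle_data(self, data):
        if self.in_button:
            self.button_labels[-1] += data
        if self.in_style:
            self.css.append(data)

def is_blue(hex_color):
    """Heuristic: a #rrggbb color counts as blue if its blue channel dominates."""
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (1, 3, 5))
    return b > r and b > g

def check_requirements(html):
    checker = RequirementChecker()
    checker.feed(html)
    has_cta = any("sign up" in label.lower() for label in checker.button_labels)
    colors = re.findall(r"#[0-9a-fA-F]{6}\b", " ".join(checker.css))
    has_blue = any(is_blue(c) for c in colors)
    return {"call_to_action": has_cta, "blue_scheme": has_blue}

sample = """<html><head><style>.cta-button { background-color: #007bff; }</style></head>
<body><button class="cta-button">Sign Up Now</button></body></html>"""
print(check_requirements(sample))  # {'call_to_action': True, 'blue_scheme': True}
```

Any failed check can be turned into feedback text (for example, "the page has no sign-up button") and appended to the next prompt.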

Best Practices and the Tooling Ecosystem

Successfully implementing an inverse design pipeline requires more than just a model; it demands a robust MLOps strategy and careful consideration of the problem setup.

Defining the Objective and Constraints

The success of any goal-driven approach hinges on a well-defined objective function that accurately captures the desired performance metric. It is equally important to define constraints that keep generated designs physically realizable, cost-effective, or compliant with regulations. Tools like Optuna, or the tooling within Azure AI and Vertex AI, provide sophisticated ways to handle multi-objective optimization and complex constraints.
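To make the multi-objective idea concrete, here is a dependency-free sketch of the core concept such tools automate: discard designs that violate the hard constraints, then keep the Pareto front, the designs that no other design beats on every objective at once. The two objectives (pH error and a hypothetical material cost in which base costs twice as much per mL as acid) and the 100 mL capacity constraint are assumptions for illustration, and random sampling stands in for a real optimizer's search.

```python
import random

def ph(acid, base):
    """Hypothetical noise-free pH model (weighted average of pH 2 and pH 12)."""
    return (acid * 2.0 + base * 12.0) / (acid + base)

def objectives(design, target_ph=8.5):
    acid, base = design
    ph_error = abs(ph(acid, base) - target_ph)
    cost = 1.0 * acid + 2.0 * base  # Hypothetical cost per mL
    return ph_error, cost           # Both objectives are minimized

def feasible(design, capacity=100.0):
    acid, base = design
    return acid > 0 and base > 0 and acid + base <= capacity  # Hard constraint

def dominates(a, b):
    """a dominates b if it is no worse on every objective and strictly better on one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

random.seed(0)
samples = [(random.uniform(0, 100), random.uniform(0, 100)) for _ in range(1000)]
candidates = [d for d in samples if feasible(d)]
scores = {d: objectives(d) for d in candidates}
pareto = [d for d in candidates
          if not any(dominates(scores[o], scores[d]) for o in candidates if o != d)]

# Show the cheapest few trade-offs on the front
for acid, base in sorted(pareto, key=lambda d: scores[d][1])[:5]:
    err, cost = scores[(acid, base)]
    print(f"acid={acid:5.1f} mL  base={base:5.1f} mL  pH error={err:.3f}  cost={cost:6.1f}")
```

The front is a menu of trade-offs rather than a single answer; picking a point on it is a decision the objective function alone cannot make.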

Managing Experiments and Data

Inverse design is an iterative, experiment-heavy process, so tracking which generative model architectures, hyperparameters, and optimization strategies work best is critical. This is where MLOps platforms are invaluable: tools such as MLflow, Weights & Biases, and Comet ML let you log every experiment, compare results, and version your models and datasets, ensuring reproducibility and collaboration.

The Importance of a Fast and Accurate Simulator

The optimization loop is only as fast as its slowest component, which is often the performance evaluation function (the “simulator”). If each evaluation takes hours, a loop that needs thousands of evaluations is impractical. A key strategy is to train a surrogate model, a neural network that learns to approximate the simulator’s output from a limited set of simulation runs. This AI-based proxy can provide near-instantaneous performance predictions, dramatically speeding up the design loop, although promising designs should still be verified with the real simulator. This is an active area of research, with progress often showcased in Kaggle competitions.
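The surrogate workflow can be sketched with a cheap stand-in for a neural network. This is an illustration under stated assumptions: the "expensive" simulator is the toy pH model from earlier, including its sinusoidal term; total volume is fixed at 100 mL so the design reduces to a single variable (the base volume); and a cubic polynomial fitted with NumPy plays the role of the learned surrogate. The expensive model is called only at a dozen training points and once more to verify the answer, while the dense search runs entirely on the surrogate.

```python
import numpy as np

calls = 0  # Counts how often the "expensive" simulator runs

def expensive_simulator(vol_base, total=100.0):
    """Toy stand-in for a slow simulator (same form as the earlier pH model)."""
    global calls
    calls += 1
    vol_acid = total - vol_base
    ph = (vol_acid * 2.0 + vol_base * 12.0) / total
    return ph + np.sin(vol_acid - vol_base) * 0.1

# 1. Sample the expensive simulator at a few design points.
train_x = np.linspace(0, 100, 12)
train_y = np.array([expensive_simulator(x) for x in train_x])

# 2. Fit the surrogate (a polynomial here; a neural network in practice).
surrogate = np.polynomial.Polynomial.fit(train_x, train_y, deg=3)

# 3. Search the cheap surrogate densely for the target.
target_ph = 8.5
grid = np.linspace(0, 100, 10_001)
best_x = grid[np.argmin(np.abs(surrogate(grid) - target_ph))]

# 4. Verify the proposed design with a single call to the real simulator.
true_ph = expensive_simulator(best_x)
print(f"Proposed base volume: {best_x:.2f} mL, true pH: {true_ph:.2f}, simulator calls: {calls}")
```

The surrogate captures the overall trend but not the small, rapidly oscillating term, which is exactly why the final verification call matters: the gap between the surrogate's prediction and the true result tells you whether to add training points and refit.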

Deployment and Inference

Once a generative model is trained, deploying it for real-time design generation requires optimization. Toolkits such as NVIDIA’s TensorRT, ONNX, and OpenVINO convert trained models into efficient formats for fast inference on edge devices or in the cloud, and NVIDIA’s Triton Inference Server serves them at scale.

Conclusion: The Future is Generative

The shift from forward evaluation to inverse, goal-driven generation represents a fundamental change in how we approach complex design problems. By clearly defining our desired outcomes, we can unleash the creative and problem-solving power of AI to explore vast design spaces and uncover novel solutions that might elude human intuition. This paradigm, championed by research from institutions like the MIT-IBM Watson AI Lab, is no longer theoretical. With the maturation of generative models, powerful optimization libraries, and a robust MLOps ecosystem—from PyTorch and TensorFlow for model building to LangChain for LLM orchestration and MLflow for management—the tools are readily available.

The next steps for engineers, data scientists, and developers are clear. Begin by identifying problems that can be reframed with an inverse perspective. Start with simpler optimization-based approaches and gradually incorporate more sophisticated generative models as needed. By embracing this new way of thinking, we can accelerate innovation, automate complex creative tasks, and solve some of the world’s most challenging design problems.