Supercharge GitHub Copilot: Integrating Open-Source LLMs from Hugging Face into VS Code

The world of AI-assisted software development is undergoing a profound transformation. For years, developers have increasingly relied on tools like GitHub Copilot, powered primarily by proprietary models from providers such as OpenAI. While effective, this approach has largely meant a one-size-fits-all model. The latest developments are breaking that paradigm: a new integration lets developers connect GitHub Copilot Chat in Visual Studio Code to a wide range of open-source Large Language Models (LLMs) hosted on Hugging Face and other platforms. This is significant news for the Hugging Face ecosystem, giving developers far more choice, control, and customization over their AI coding assistants.

This article provides a comprehensive technical guide to this capability. We will explore the core concepts, walk through practical implementation steps for both managed endpoints and local models, discuss advanced techniques, and cover best practices for optimizing your development workflow. By the end, you’ll be equipped to replace or augment the default Copilot experience with specialized models tailored to your needs, whether for enhanced privacy, domain-specific knowledge, or simply exploring the cutting edge of open-source AI. This shift reflects a broader industry trend toward open models, exemplified by Meta AI’s Llama family and Mistral AI’s open-weight releases.

Understanding the Core Integration: A New Era for AI Code Assistants

At its heart, this integration is about redirecting the API calls that GitHub Copilot Chat makes. Instead of sending your prompts and code context to GitHub’s default model service, you configure VS Code to send them to a different, OpenAI-compatible API endpoint. This endpoint can be a managed service like Hugging Face Inference Endpoints or a model you are running locally on your own machine. This seemingly simple change has profound implications for developers and organizations.

Why Use a Custom LLM with Copilot?

- Model Specialization: The Hugging Face Hub contains thousands of models. You can choose a model specifically fine-tuned for your programming language (e.g., Python, Rust), a particular domain (e.g., data science, web development), or a specific task (e.g., code generation, debugging, documentation).

- Enhanced Privacy and Security: For organizations with strict data privacy policies, sending proprietary code to a third-party service is a non-starter. By using a self-hosted model via tools like Ollama or a private endpoint on AWS SageMaker or Azure Machine Learning, code context remains within your control.

- Cost Control and Performance: Managed endpoints are typically billed for instance uptime rather than per token, which can make costs more predictable, and sometimes lower, for teams with heavy usage. Running models locally removes per-request service fees entirely, though it requires capable hardware.

- Access to the Latest Innovations: The open-source AI community moves at a blistering pace. This integration lets you experiment with new models as soon as they are released, keeping you on the cutting edge as new architectures emerge.

The key to making this work is the OpenAI API compatibility layer. Many model serving tools, including Hugging Face Inference Endpoints and local servers like vLLM, can expose an endpoint that mimics the OpenAI API structure. The GitHub Copilot extension is built to communicate with such endpoints, making the switch seamless from a user-experience perspective.
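To make the compatibility layer concrete, here is a minimal sketch of the request shape an OpenAI-compatible chat endpoint expects: an HTTP POST whose JSON body carries a `model` name and a list of `messages`, plus an optional bearer token for managed endpoints. It uses only Python's standard library and builds the request without sending it. `build_chat_request` is a hypothetical helper name, and which optional fields a given server accepts beyond `model` and `messages` varies by implementation.

```python
import json
import urllib.request


def build_chat_request(url, model, messages, token=None):
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {"model": model, "messages": messages}
    headers = {"Content-Type": "application/json"}
    if token:
        # Managed endpoints usually expect a bearer token; default local servers often need none.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


req = build_chat_request(
    "http://localhost:11434/v1/chat/completions",
    "mistral",
    [{"role": "user", "content": "Explain Python list comprehensions."}],
)
print(req.get_method(), req.full_url)
```

Because every compatible server accepts this same shape, swapping models mostly comes down to changing the URL, the model name, and the credentials.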

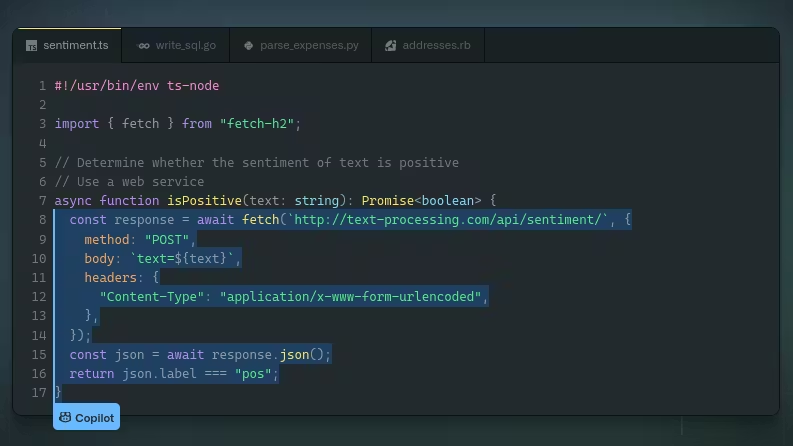

Initial Configuration in VS Code

The entire process begins by modifying your VS Code settings.json file. You need to tell the Copilot extension the URL of your model’s endpoint and, if necessary, provide an authorization header. This simple JSON configuration is the gateway to unlocking a world of new models.

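```jsonc
{
  "github.copilot.advanced": {
    "chat.model": "huggingface/codellama-70b", // A descriptive name for your model
    "chat.url": "https://<your-endpoint>.endpoints.huggingface.cloud/v1/chat/completions",
    "chat.headers": {
      "Authorization": "Bearer hf_YOUR_HUGGING_FACE_TOKEN"
    }
  }
}
```

In this example, we’re pointing Copilot to a hypothetical Hugging Face Inference Endpoint for Code Llama 70B. The chat.model field is an identifier you choose, while chat.url (the endpoint’s OpenAI-compatible chat route) and chat.headers carry the connection details. Treat the exact setting keys as illustrative and verify them against the documentation for your version of the Copilot extension, since options for custom models may differ between releases. Keep the token in your user settings rather than in a workspace settings.json that is committed to version control.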

Practical Implementation with Hugging Face Inference Endpoints

Using a managed service like Hugging Face Inference Endpoints is the easiest and most reliable way to get started. It abstracts away the complexities of GPU management, environment setup, and model optimization, allowing you to focus on integration and usage. This approach is similar in spirit to managed services like Amazon Bedrock or Google’s Vertex AI, but with a focus on the open-source Hugging Face ecosystem.

Step 1: Choose and Deploy a Model

First, navigate to the Hugging Face Hub and choose a model suitable for coding tasks. Look for instruction-tuned models, as they are designed to follow commands given in a chat format. Excellent choices include:

- mistralai/Mixtral-8x7B-Instruct-v0.1
- codellama/CodeLlama-70b-Instruct-hf
- google/gemma-7b-it

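Once you’ve selected a model, click the “Deploy” button on the model page and choose “Inference Endpoints.” You’ll need to select your cloud provider, region, and instance type (GPU). Size the GPU to the model: a 7B model in 16-bit precision needs roughly 14 GB for its weights and fits on a single 24 GB card such as an NVIDIA A10G, while a 70B model needs roughly 140 GB and therefore multiple high-memory GPUs such as A100s or H100s, or a quantized variant. After configuring, deploy the endpoint. It may take several minutes to provision and become active.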
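Choosing an instance type starts with a back-of-the-envelope estimate: the memory needed for a model's weights is roughly its parameter count times the bytes stored per parameter. The sketch below is a simplification that counts weights only; the KV cache, activations, and framework overhead add more on top, so treat its output as a lower bound. `weight_memory_gb` is a hypothetical helper name.

```python
def weight_memory_gb(num_params_billion, bits_per_param=16):
    """Rough memory for model weights alone, in GB (1 GB = 10**9 bytes).

    Ignores the KV cache, activations, and runtime overhead, so real
    requirements are higher.
    """
    # params (in billions) * bytes per param = gigabytes
    return num_params_billion * bits_per_param / 8


for params in (7, 70):
    for bits in (16, 8, 4):
        print(f"{params}B model at {bits}-bit: ~{weight_memory_gb(params, bits):g} GB")
```

For example, a 70B model works out to about 140 GB at 16-bit and about 35 GB at 4-bit, which is why quantization matters so much for smaller GPUs.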

Step 2: Verify the Endpoint Programmatically

Before configuring VS Code, it’s a good practice to ensure your endpoint is working correctly. You can do this with a simple Python script using the huggingface_hub library. This script helps you programmatically find your endpoint’s URL, which you’ll need for the next step.

```python
from huggingface_hub import list_inference_endpoints

# Authenticate first via `huggingface-cli login`,
# or set the HF_TOKEN environment variable.

# List all Inference Endpoints in your namespace (in any status)
endpoints = list_inference_endpoints()

# Replace 'your-endpoint-name' with the actual name from the HF UI
target_endpoint_name = "your-endpoint-name"
my_endpoint = next((ep for ep in endpoints if ep.name == target_endpoint_name), None)

if my_endpoint is None:
    print(f"Endpoint '{target_endpoint_name}' not found.")
elif my_endpoint.status != "running":
    print(f"Endpoint found but not ready yet (status: {my_endpoint.status}).")
else:
    print(f"Endpoint found: {my_endpoint.name}")
    print(f"Status: {my_endpoint.status}")
    print(f"URL: {my_endpoint.url}")
    # This is the endpoint's base URL. For endpoints served with Text Generation
    # Inference, the OpenAI-compatible chat route is typically
    # <base URL>/v1/chat/completions; check the endpoint's documentation to confirm.
```

This script confirms whether your endpoint exists and is running, and prints the base URL you’ll build your VS Code configuration from. This programmatic approach is a core principle in MLOps, where tools like MLflow and ClearML help automate and track deployments.
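Whether a client should be given an endpoint's base URL or its full chat route is a common source of confusion; the Ollama configuration later in this article uses the full `/v1/chat/completions` route. The sketch below derives that route from whatever URL you have, assuming the server follows the usual OpenAI-compatible layout (`<base>/v1/chat/completions`), as Text Generation Inference and Ollama typically do. `chat_completions_url` is a hypothetical helper name.

```python
from urllib.parse import urlsplit, urlunsplit


def chat_completions_url(endpoint_url):
    """Normalize a base URL (or an already-complete route) to <base>/v1/chat/completions.

    Assumes the server uses the conventional OpenAI-compatible path layout.
    """
    parts = urlsplit(endpoint_url)
    path = parts.path.rstrip("/")
    if not path.endswith("/v1/chat/completions"):
        # Append only the missing suffix, so ".../v1" and "..." both work.
        path += "/chat/completions" if path.endswith("/v1") else "/v1/chat/completions"
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))


print(chat_completions_url("https://abc.endpoints.huggingface.cloud"))
print(chat_completions_url("http://localhost:11434/v1/"))
```

Making the function idempotent means you can safely pass it either form of URL.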

Advanced Integration: Running LLMs Locally with Ollama

For ultimate control, privacy, and cost savings (hardware permitting), running your coding assistant locally is the gold standard. Tools like Ollama have made this process incredibly accessible. Ollama bundles model weights, configuration, and a GPU-accelerated inference server into a single, easy-to-use package. Crucially, it also provides an OpenAI-compatible API endpoint out of the box.

Step 1: Install and Run a Model with Ollama

First, download and install Ollama from its official website. Then, from your terminal, you can pull and run a model with a single command. Let’s use Mistral, a popular and powerful model:

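```shell
# Pull the Mistral 7B Instruct model
ollama pull mistral

# Chat with the model interactively. This talks to the local Ollama server,
# which normally runs in the background after installation; if it is not
# running, start it in another terminal with `ollama serve`.
ollama run mistral
```

By default, the Ollama server listens on http://localhost:11434, and you can interact with it via its REST API.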
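A quick way to confirm the server is up is to query Ollama's `/api/tags` route, which lists the models installed locally. The sketch below uses only the standard library; the helper names are hypothetical, and it assumes the default address `http://localhost:11434`.

```python
import json
import urllib.request


def parse_model_names(tags_payload):
    """Extract model names from an Ollama /api/tags JSON response."""
    return [model["name"] for model in tags_payload.get("models", [])]


def list_local_models(base_url="http://localhost:11434", timeout=2.0):
    """Return the names of locally installed models, or None if the request fails."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return parse_model_names(json.load(resp))
    except OSError:  # urllib.error.URLError and socket timeouts are OSError subclasses
        return None


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print("Ollama server not reachable; start it with `ollama serve`.")
    else:
        print("Installed models:", models)
```

If `mistral` appears in the list (typically as `mistral:latest`), the server is ready for the next step.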

Step 2: Test the Local OpenAI-Compatible Endpoint

Before connecting Copilot, verify the local API is working. Ollama exposes an OpenAI-compatible endpoint at /v1/chat/completions. You can test it using a cURL command.

```shell
curl http://localhost:11434/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "mistral",
    "messages": [
      {"role": "system", "content": "You are a helpful Python coding assistant."},
      {"role": "user", "content": "Write a Python function to calculate the factorial of a number."}
    ]
  }'
```

If you receive a JSON response containing a Python function, your local server is ready. This ability to self-host is a game-changer, and its performance depends heavily on your local GPU. Optimization stacks such as NVIDIA TensorRT-LLM can push throughput further for dedicated serving, although Ollama does not require them.
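The same check can be run from Python. This sketch mirrors the cURL request with only the standard library and pulls the reply out of `choices[0].message.content`, where OpenAI-compatible servers place the assistant's text. `ask_local_model` and `extract_reply` are hypothetical helper names, and the URL and model name assume the default Ollama setup described above.

```python
import json
import urllib.request


def extract_reply(response_json):
    """Return the assistant text from an OpenAI-style chat completions response."""
    return response_json["choices"][0]["message"]["content"]


def ask_local_model(prompt, model="mistral",
                    url="http://localhost:11434/v1/chat/completions"):
    """Send one prompt to an OpenAI-compatible endpoint and return the reply text."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful Python coding assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),  # a request with data is sent as POST
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        return extract_reply(json.load(resp))


if __name__ == "__main__":
    try:
        print(ask_local_model("Write a Python function to calculate the factorial of a number."))
    except OSError as err:
        print(f"Could not reach the local server: {err}")
```

The generous timeout allows for the first request, which may be slow while the model loads.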

Step 3: Configure VS Code for Ollama

Finally, update your settings.json to point to your local Ollama server. Note that no authorization header is needed for a default local setup.

```jsonc
{
  "github.copilot.advanced": {
    "chat.model": "ollama/mistral", // Use a descriptive name
    "chat.url": "http://localhost:11434/v1/chat/completions"
    // No headers needed for default local Ollama
  }
}
```

After saving the file and reloading VS Code, GitHub Copilot Chat should use the Mistral model running directly on your machine, so model inference happens locally. Before assuming that no code ever leaves your computer, check the documentation for your Copilot version to see whether any other features still send requests to GitHub’s services.

Best Practices and The Broader Ecosystem

Successfully integrating a custom LLM into your workflow goes beyond the initial setup. Following best practices will ensure you get the most out of this powerful combination.

Model Selection and Prompt Engineering

Choose the Right Tool for the Job: Don’t just pick the largest model. A smaller, domain-specific model (e.g., a fine-tuned Code Llama) may outperform a larger, general-purpose model (like Mixtral) on coding tasks and will have lower latency. Keep an eye on public leaderboards and benchmarks for new code-centric models.

Master Your Prompts: The model has changed, but the principles of effective prompting have not. Be specific in your requests. Use Copilot Chat’s features like @workspace to provide broad context or select specific code snippets to focus the model’s attention. A well-crafted prompt is the difference between a generic answer and a perfect code suggestion.
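The difference between a vague and a specific request is easy to see at the message level. The sketch below composes OpenAI-style messages that pin the model to a selected snippet and state the goal explicitly. `build_messages` is a hypothetical helper for illustration only: Copilot Chat assembles its own prompts internally, and this does not reproduce that format.

```python
def build_messages(question, selected_code=None, language="python"):
    """Compose an OpenAI-style message list that focuses the model on a code selection.

    Hypothetical helper: it illustrates the principle of explicit, focused
    context, not Copilot Chat's actual internal prompt format.
    """
    messages = [{
        "role": "system",
        "content": "You are a concise coding assistant. Base your answer on the code provided.",
    }]
    user_content = question
    if selected_code:
        fence = "`" * 3  # Markdown code fence around the selected snippet
        user_content += f"\n\n{fence}{language}\n{selected_code}\n{fence}"
    messages.append({"role": "user", "content": user_content})
    return messages


vague = build_messages("Fix this.")
specific = build_messages(
    "This runs slowly on large lists. Rewrite it in linear time without changing the output.",
    selected_code="result = [x for x in items if items.count(x) == 1]",
)
```

The specific version gives the model the code, the problem, and the constraint, which is exactly what selecting code in Copilot Chat is meant to supply.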

Performance and Optimization

Local Hardware Matters: If running models locally, GPU memory (VRAM) is usually the bottleneck, and a GPU with more VRAM can run larger models. Use tools like vLLM or leverage quantization (e.g., GGUF models with Ollama) to run larger models more efficiently on consumer hardware. Updates to DeepSpeed and ONNX Runtime also often bring performance improvements for local inference.

Latency vs. Quality: Be aware of the trade-off. A massive 70B parameter model on a managed endpoint might have higher latency than a 7B model running locally. Experiment to find the sweet spot between response speed and suggestion quality that works for you.
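Finding that sweet spot is easier with numbers than impressions. The sketch below times any zero-argument callable and reports the median, discarding warm-up calls because the first request to a local server may include model loading time. `median_latency` is a hypothetical helper; in practice `call` would be a function that sends one representative prompt to the endpoint you are evaluating.

```python
import statistics
import time


def median_latency(call, runs=5, warmup=1):
    """Return the median wall-clock time, in seconds, of a zero-argument callable.

    Warm-up calls are executed but not timed.
    """
    for _ in range(warmup):
        call()
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


if __name__ == "__main__":
    # Stand-in workload; replace with a function that sends one prompt to an endpoint.
    print(f"{median_latency(lambda: time.sleep(0.05), runs=3):.3f} s")
```

Using the median rather than the mean keeps one slow outlier from skewing the comparison between a local model and a managed endpoint.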

Connecting to the AI Ecosystem

This integration is not an isolated feature; it’s a gateway to a wider ecosystem. Imagine building a custom VS Code extension that uses Copilot Chat (powered by your custom model) as part of a more complex workflow orchestrated by LangChain or LlamaIndex. For example, you could create a command that uses the @workspace context to perform Retrieval-Augmented Generation (RAG) against your entire codebase, using a vector database like Chroma or Pinecone to find relevant code snippets before generating an answer. Tracking the performance of these complex chains is where tools such as LangSmith and Weights & Biases become invaluable.
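The retrieval step of RAG can be sketched in a few lines. As a deliberate simplification, this example swaps learned embeddings and a vector database such as Chroma or Pinecone for bag-of-words cosine similarity, then prepends the best-matching snippets to the question. All function names are hypothetical, and a real pipeline would use an embedding model for much better matching.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase alphanumeric runs; underscores split snake_case names into parts."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, snippets, k=2):
    """Return up to k snippets with nonzero similarity to the query, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(s))), s) for s in snippets]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for score, s in scored[:k] if score > 0]


def build_rag_prompt(query, snippets, k=2):
    """Prepend retrieved snippets to the question before sending it to the model."""
    context = "\n\n".join(retrieve(query, snippets, k))
    return f"Relevant code from the repository:\n\n{context}\n\nQuestion: {query}"


repo = [
    "def load_config(path): return json.load(open(path))",
    "def render_page(template): return template.format()",
]
print(build_rag_prompt("where do we load the config file", repo))
```

The resulting prompt can be sent to any of the OpenAI-compatible endpoints discussed in this article.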

Conclusion: The Future of Development is Open and Customizable

The integration of Hugging Face’s open-source models into GitHub Copilot Chat is more than just a new feature; it’s a fundamental shift in how developers interact with AI. It moves us from a world of monolithic, proprietary systems to an open, flexible, and collaborative ecosystem. Developers now have the power to choose the perfect AI partner for their specific needs, balancing performance, privacy, and cutting-edge capabilities.

Whether you opt for the convenience of a managed Inference Endpoint or the privacy and control of a locally-run Ollama model, the steps outlined in this article provide a clear path forward. The key takeaway is empowerment. You are no longer just a consumer of an AI service; you are an active participant, able to select, configure, and fine-tune your tools. As the worlds of open-source AI and developer tools continue to merge, this integration stands as a landmark achievement, heralding a future where our AI assistants are as unique and specialized as the software we build with them.