Navigating the AI Acceleration: How Fast.ai Empowers Developers in a Rapidly Evolving Landscape

The field of artificial intelligence is advancing at a breathtaking pace. Keeping up with the latest breakthroughs from research labs like Google DeepMind and OpenAI can feel like a full-time job. New models, architectures, and techniques are announced almost weekly, creating a landscape of constant change. For developers and data scientists, this acceleration presents both a challenge and an opportunity. The challenge is staying current and avoiding technical debt; the opportunity is leveraging these state-of-the-art tools to build more powerful and innovative applications than ever before.

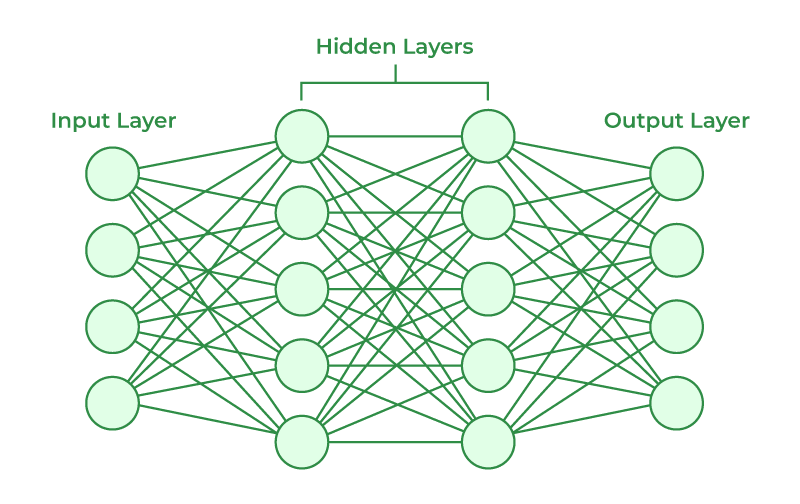

In this dynamic environment, the choice of framework is critical. While low-level libraries like PyTorch and TensorFlow provide ultimate flexibility, they often require significant boilerplate code and a deep understanding of implementation details. This is where high-level libraries shine. Among them, Fast.ai has carved out a unique niche. Built on top of PyTorch, Fast.ai is not just a library but a philosophy—one that prioritizes developer productivity, accessibility, and the practical application of cutting-edge deep learning techniques. This article explores how Fast.ai serves as a powerful ally for developers looking to navigate the rapid currents of modern AI, enabling them to go from idea to state-of-the-art results with remarkable efficiency.

The Core Philosophy: Simplicity Meets State-of-the-Art

The fundamental promise of Fast.ai is to make complex deep learning techniques accessible without sacrificing performance. It achieves this through a carefully designed layered API, sensible defaults, and a tight integration with the PyTorch ecosystem. This approach allows beginners to get started quickly while giving experts the tools to customize and extend functionality as needed.

Sensible Defaults and the DataBlock API

One of the most significant hurdles in any machine learning project is data preparation. Getting data into the right format for a model can be a tedious and error-prone process. The Fast.ai library abstracts this complexity away with its powerful DataBlock API. This declarative API lets you describe the components of your dataset—what your inputs are, what your targets are, how to split the data, and what transformations to apply—in a clear and readable way.

The library then handles the intricate process of assembling DataLoaders, which feed data to the model in batches. This, combined with sensible defaults for hyperparameters and model architectures, means you can often train a highly effective baseline model with just a few lines of code.
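The sketch below makes the declarative idea concrete. It needs no dependencies and walks through the steps a DataBlock describes: collect items, split them, derive a label from each item, and group the results into batches. It is an illustration, not fastai's implementation. The helper names are hypothetical. fastai's RandomSplitter shuffles with PyTorch, so the exact validation items for a given seed would differ.

```python
import random
import re

def random_splitter(items, valid_pct=0.2, seed=42):
    """Shuffle indices reproducibly and hold out valid_pct of them for validation."""
    idxs = list(range(len(items)))
    random.Random(seed).shuffle(idxs)
    cut = int(valid_pct * len(items))
    return idxs[cut:], idxs[:cut]  # (train indices, validation indices)

def regex_label(name, pattern=r"(.+)_\d+\.jpg$"):
    """Derive the target from a file name such as 'great_pyrenees_3.jpg'."""
    match = re.match(pattern, name)
    if match is None:
        raise ValueError(f"no label found in {name!r}")
    return match.group(1)

def to_batches(pairs, bs):
    """Group (input, target) pairs into batches of at most bs items."""
    return [pairs[i:i + bs] for i in range(0, len(pairs), bs)]

def build_loaders(filenames, bs=2, valid_pct=0.2, seed=42):
    """Hypothetical helper: a description of the data in, batched (train, valid) data out."""
    items = list(filenames)  # plays the role of get_items
    train_idx, valid_idx = random_splitter(items, valid_pct, seed)  # splitter

    def pairs(idxs):
        return [(items[i], regex_label(items[i])) for i in idxs]  # get_y

    return to_batches(pairs(train_idx), bs), to_batches(pairs(valid_idx), bs)

files = ["Abyssinian_1.jpg", "Abyssinian_2.jpg", "beagle_10.jpg",
         "beagle_11.jpg", "great_pyrenees_3.jpg"]
train, valid = build_loaders(files)
```

Each step lives in its own small function. Changing how labels are derived therefore touches only one piece, and that separation is what the DataBlock API expresses declaratively.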

As a concrete case, training an accurate image classifier on a standard benchmark such as the Oxford-IIIT Pet dataset (PETS) takes only a handful of lines. This brevity is a cornerstone of the library's design: the interface stays simple while powerful features work in the background.

```python
# Import necessary components from the fastai library
from fastai.vision.all import *

# Download and extract the Oxford-IIIT Pet dataset
path = untar_data(URLs.PETS)

# Use the DataBlock API to define the data pipeline
# 1. Input: Images (ImageBlock)
# 2. Target: Categories from a regex pattern (CategoryBlock)
# 3. Get Items: Find all image files
# 4. Splitter: 20% of data for validation
# 5. Get Y: Extract the breed label from the filename
# 6. Item Transforms: Resize each image to 460px before batching
# 7. Batch Transforms: Augment each batch and crop it to 224px (on the GPU when one is available)
dls = DataBlock(
    blocks=(ImageBlock, CategoryBlock),
    get_items=get_image_files,
    splitter=RandomSplitter(valid_pct=0.2, seed=42),
    get_y=using_attr(RegexLabeller(r'([^/]+)_\d+\.jpg$'), 'name'),
    item_tfms=Resize(460),
    batch_tfms=aug_transforms(size=224, min_scale=0.75)
).dataloaders(path/"images")

# Create a convolutional neural network learner
# Uses a pretrained resnet34 architecture and tracks the error rate as the metric
learn = vision_learner(dls, resnet34, metrics=error_rate)

# Fine-tune the model for 2 epochs
learn.fine_tune(2)
```

This single block of code handles data downloading, processing, augmentation, model creation, and training. It reflects the library's focus on abstracting away repetitive boilerplate so the developer can concentrate on the experiment itself. That efficiency matters when PyTorch or NVIDIA announces a new optimization that you want to test immediately.
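The pets example sizes images in two stages: Resize(460) per item, then aug_transforms(size=224, min_scale=0.75) per batch. The fastai course calls this presizing. Every image is first brought to a uniform, larger size. The batch augmentation then includes a random crop that covers at least min_scale of the image area and is resized to 224 pixels. The sketch below shows only the crop-selection step in plain Python. Its torchvision-style sampling (uniform area fraction, log-uniform aspect ratio) is an assumption, fastai's GPU implementation differs in its details, and the function name is hypothetical.

```python
import math
import random

def random_resized_crop_box(width, height, min_scale=0.75, ratio=(3 / 4, 4 / 3), rng=None, tries=10):
    """Choose a crop (left, top, w, h) covering between min_scale and 100% of the image area.

    Assumed sampling: area fraction uniform in [min_scale, 1], aspect ratio log-uniform
    in `ratio`. Falls back to a centred square crop if no sample fits the image.
    """
    rng = rng or random.Random()
    area = width * height
    for _ in range(tries):
        target_area = area * rng.uniform(min_scale, 1.0)
        aspect = math.exp(rng.uniform(math.log(ratio[0]), math.log(ratio[1])))
        w = int(round(math.sqrt(target_area * aspect)))
        h = int(round(math.sqrt(target_area / aspect)))
        if 0 < w <= width and 0 < h <= height:
            return rng.randint(0, width - w), rng.randint(0, height - h), w, h
    side = min(width, height)
    return (width - side) // 2, (height - side) // 2, side, side

# Presizing: the item transform produced a 460x460 image; the batch step picks a
# random crop like this one and rescales it to the final 224x224 size
rng = random.Random(0)
left, top, w, h = random_resized_crop_box(460, 460, min_scale=0.75, rng=rng)
scale_x, scale_y = 224 / w, 224 / h  # resize factors from the crop to the final size
```

The larger intermediate size leaves room for the crop (and other augmentations) to move around without the need to fill in empty borders. The final downscale to 224 then happens once per batch.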

Integrating with the Broader AI Ecosystem

A modern framework cannot exist in a vacuum. The AI landscape is a rich ecosystem of specialized tools, from model hubs to experiment trackers. A key strength of Fast.ai is that it is built on plain PyTorch, so it interoperates with other popular libraries, most notably Hugging Face Transformers. This interoperability ensures that developers using Fast.ai are never siloed and can leverage the best tools for the job.

Fine-Tuning Transformers with a Fast.ai Learner

The rise of large language models (LLMs) has been a dominant theme in recent announcements from OpenAI and Mistral AI. The Hugging Face Transformers library has become the de facto standard for working with these models. A Fast.ai Learner can train any PyTorch model, so a small amount of glue code is enough to combine Hugging Face's models and tokenizers with Fast.ai's streamlined training loop and rich callback system.

Below is an example of how to fine-tune a DistilBERT model from Hugging Face for sentiment analysis on the IMDB dataset using a Fast.ai Learner. This workflow is perfect for tasks where you need a custom-tuned model for a specific domain, which can then be used in larger systems built with tools like LangChain or LlamaIndex.

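```python
# Make sure you have the necessary libraries installed
# !pip install "fastai>=2.7.0" "transformers>=4.24.0" "datasets"

from fastai.text.all import *
import torch
from torch import nn
from torch.utils.data import DataLoader as TorchDataLoader  # avoid clashing with fastai's DataLoader
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForSequenceClassification

# Load the IMDB dataset from Hugging Face Datasets
imdb = load_dataset('imdb')

# Load a pretrained tokenizer and model from the Hugging Face Hub
model_name = "distilbert-base-uncased"
tokenizer = AutoTokenizer.from_pretrained(model_name)
hf_model = AutoModelForSequenceClassification.from_pretrained(model_name, num_labels=2)

# Tokenize the reviews, padding/truncating every example to the same length
def tokenize_function(examples):
    return tokenizer(examples["text"], padding="max_length", truncation=True)

tokenized_datasets = imdb.map(tokenize_function, batched=True)
tokenized_datasets.set_format(type="torch", columns=["input_ids", "attention_mask", "label"])

# fastai's Learner expects each batch as a tuple (inputs..., target) rather than a dict,
# so stack each field and move it to the training device
device = default_device()
def collate_to_tuple(items):
    return tuple(torch.stack([item[k] for item in items]).to(device)
                 for k in ("input_ids", "attention_mask", "label"))

train_dl = TorchDataLoader(tokenized_datasets["train"], batch_size=16, shuffle=True, collate_fn=collate_to_tuple)
valid_dl = TorchDataLoader(tokenized_datasets["test"], batch_size=16, collate_fn=collate_to_tuple)

# fastai's DataLoaders can wrap plain PyTorch DataLoaders
dls = DataLoaders(train_dl, valid_dl)

# Hugging Face models return an output object; fastai's loss and metrics need raw logits
class HFClassifier(nn.Module):
    def __init__(self, model):
        super().__init__()
        self.model = model

    def forward(self, input_ids, attention_mask):
        return self.model(input_ids=input_ids, attention_mask=attention_mask).logits

# Create a Learner, combining the Hugging Face model with fastai's training capabilities
learn = Learner(dls,
                HFClassifier(hf_model).to(device),
                loss_func=CrossEntropyLossFlat(),
                metrics=accuracy,
                cbs=[ShowGraphCallback()]).to_fp16()  # mixed precision assumes a CUDA GPU

# Fine-tune the model
learn.fit_one_cycle(1, 2e-5)
```

This integration is powerful. You get the vast model selection of the Hugging Face Hub, the robust data handling of the Datasets library, and Fast.ai's high-level, callback-driven training loop. You can also add callbacks for experiment tracking with tools like Weights & Biases or MLflow, making your workflow both fast and reproducible.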
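Most of the work in connecting the two libraries involves batch conventions. Hugging Face Datasets yields one dict per example. A fastai Learner works with batches as tuples. By default (when the DataLoaders do not declare n_inp), it treats every element except the last as a model input and the last as the target. The plain-Python sketch below shows both conversions on lists instead of tensors. The function names are hypothetical and the token IDs are placeholders.

```python
def collate_to_tuple(examples, keys=("input_ids", "attention_mask", "label")):
    """Turn a list of per-example dicts into a tuple with one column (list) per key."""
    return tuple([example[key] for example in examples] for key in keys)

def split_batch(batch, n_inp=None):
    """Split a batch tuple into (inputs, targets).

    Without an explicit n_inp, every element except the last is treated as an input.
    """
    if n_inp is None:
        n_inp = 1 if len(batch) == 1 else len(batch) - 1
    return batch[:n_inp], batch[n_inp:]

# Two tokenized examples with placeholder token IDs; extra columns such as "text" are dropped
examples = [
    {"input_ids": [101, 11, 102], "attention_mask": [1, 1, 1], "label": 1, "text": "great film"},
    {"input_ids": [101, 12, 102], "attention_mask": [1, 1, 1], "label": 0, "text": "dull plot"},
]
batch = collate_to_tuple(examples)
xb, yb = split_batch(batch)
# xb holds (input_ids, attention_mask), which fastai passes to the model as model(*xb);
# yb holds (labels,), which go to the loss function alongside the predictions
```

That split explains why the model wrapper in a real integration takes input_ids and attention_mask as separate positional arguments.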

Advanced Techniques Made Accessible

Fast.ai’s mission extends beyond simplifying basic training loops. It also democratizes advanced techniques that were once the domain of seasoned researchers. Features like the learning rate finder, discriminative learning rates, and a flexible callback system empower developers to achieve state-of-the-art results without needing to implement complex algorithms from scratch.
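Discriminative learning rates give smaller rates to the earlier, more general layers of a pretrained network and larger rates to later layers and the new head. In fastai you request this by passing a slice, for example learn.fit_one_cycle(3, slice(1e-5, 1e-3)). The sketch below expands such a slice into one rate per parameter group. It re-implements, as an assumption, the geometric spacing of fastai's even_mults helper and the slice(hi) rule (hi for the last group, hi/10 for the others). lrs_for_groups is a hypothetical name.

```python
def even_mults(start, stop, n):
    """n values evenly spaced on a log scale from start to stop, inclusive."""
    if n == 1:
        return [stop]
    step = (stop / start) ** (1 / (n - 1))
    return [start * step ** i for i in range(n)]

def lrs_for_groups(lr, n_groups):
    """Expand a learning-rate spec into one rate per parameter group.

    lr may be a float (same rate everywhere) or a slice: slice(lo, hi) spreads rates
    geometrically from lo to hi; slice(hi) gives hi to the last group and hi/10 to the rest.
    """
    if isinstance(lr, slice):
        if lr.start:
            return even_mults(lr.start, lr.stop, n_groups)
        return [lr.stop / 10] * (n_groups - 1) + [lr.stop]
    return [lr] * n_groups

# Three groups (two for the pretrained body, one for the new head): earliest layers learn slowest
print(lrs_for_groups(slice(1e-5, 1e-3), 3))
```

Geometric spacing means each group's rate is a constant multiple of the previous group's rate (here, 10x), which suits rates that span orders of magnitude.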

The Learning Rate Finder and Custom Callbacks

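Choosing the right learning rate is one of the most critical decisions in training a neural network. A rate that’s too high can cause the model to diverge, while one that’s too low can lead to painfully slow convergence. Fast.ai popularized the “Learning Rate Finder,” a learning rate range test. It trains briefly while raising the learning rate exponentially from one mini-batch to the next and records the loss. You then read a good learning rate off the resulting plot: a value where the loss is still falling steeply, well before it blows up. In code, this takes a single call to learn.lr_find().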
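The range test itself is simple enough to sketch without a framework. The version below runs plain SGD on a one-parameter toy loss and raises the learning rate exponentially at each step. It stops once the loss diverges, then applies one common heuristic to pick a value: a tenth of the rate at the minimum loss. This is an illustration under those assumptions. fastai's lr_find works on real mini-batches, smooths the recorded loss, restores the model's weights afterwards, and offers its own suggestion methods.

```python
import math

def lr_range_test(loss_fn, grad_fn, w, start_lr=1e-7, end_lr=10.0, num_it=100, diverge_factor=4.0):
    """Take SGD steps while raising the learning rate exponentially; return (lr, loss) pairs.

    Stops early once the loss exceeds `diverge_factor` times the best loss seen so far.
    """
    history, best = [], math.inf
    for i in range(num_it):
        lr = start_lr * (end_lr / start_lr) ** (i / (num_it - 1))
        loss = loss_fn(w)
        history.append((lr, loss))
        best = min(best, loss)
        if loss > diverge_factor * best:
            break  # the loss has blown up, so even larger rates are useless
        w = w - lr * grad_fn(w)  # one SGD step at the current rate
    return history

def suggest_lr(history):
    """One common heuristic: a tenth of the learning rate at which the loss was lowest."""
    lr_at_min, _ = min(history, key=lambda pair: pair[1])
    return lr_at_min / 10

# Toy problem: minimise (w - 3)^2 starting from w = 0
history = lr_range_test(lambda w: (w - 3) ** 2, lambda w: 2 * (w - 3), w=0.0)
print(f"tried {len(history)} rates, suggested lr = {suggest_lr(history):.3g}")
```

On this toy loss, the single-step optimum is lr = 0.5, and any rate above 1 makes SGD diverge. The recorded loss therefore bottoms out near lr = 1 and then climbs, and the heuristic returns a rate around 0.1, safely below that minimum.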

The true power of Fast.ai for advanced users lies in its callback system. Callbacks are small pieces of code that can be injected at various points in the training loop (e.g., at the beginning of an epoch, after a batch, etc.) to perform custom actions. This is how Fast.ai implements most of its own features, and it provides an elegant way for users to extend functionality.
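At its core, a callback system is a loop that announces named events and lets registered objects react to them. The toy sketch below uses the same event names as fastai (before_fit, before_epoch, before_batch, after_batch, after_epoch, after_fit) and sorts callbacks by an order attribute, but it is not fastai's Learner. All class names are hypothetical, and the "training step" is a stand-in number.

```python
class Callback:
    """Base class: subclasses implement only the events they care about."""
    order = 0  # callbacks run in ascending order

    def __call__(self, event, trainer):
        handler = getattr(self, event, None)
        if handler is not None:
            handler(trainer)

class Trainer:
    """A toy training loop that announces named events at fixed points."""

    def __init__(self, n_epochs, n_batches, cbs=()):
        self.n_epochs, self.n_batches = n_epochs, n_batches
        self.cbs = sorted(cbs, key=lambda cb: cb.order)
        self.epoch, self.iter, self.loss = 0, 0, None

    def _event(self, name):
        for cb in self.cbs:
            cb(name, self)

    def fit(self):
        self._event("before_fit")
        for self.epoch in range(self.n_epochs):
            self._event("before_epoch")
            for self.iter in range(self.n_batches):
                self._event("before_batch")
                # Stand-in for the forward pass, loss, backward pass and optimizer step
                self.loss = 1.0 / (1 + self.epoch * self.n_batches + self.iter)
                self._event("after_batch")
            self._event("after_epoch")
        self._event("after_fit")

class EventLogger(Callback):
    def __init__(self):
        self.events = []
    def before_fit(self, trainer): self.events.append("before_fit")
    def after_epoch(self, trainer): self.events.append(f"after_epoch {trainer.epoch}")
    def after_fit(self, trainer): self.events.append("after_fit")

class BatchCounter(Callback):
    def __init__(self):
        self.count = 0
    def after_batch(self, trainer): self.count += 1

logger, counter = EventLogger(), BatchCounter()
Trainer(n_epochs=2, n_batches=3, cbs=[logger, counter]).fit()
```

The loop never needs to know what the callbacks do. Adding a behavior means writing a new class, not editing the loop.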

For instance, you could write a custom callback to log model predictions to a platform like Comet ML or to monitor for early signs of overfitting. Here’s a simple example of a custom callback that prints the validation loss and error rate at the end of each epoch.

```python
# Continuing from the first vision example
from fastai.vision.all import *
from fastai.callback.core import Callback

# Define a simple custom callback
class CustomLoggerCallback(Callback):
    # Run after the Recorder so final_record already holds this epoch's values
    order = Recorder.order + 1

    def after_epoch(self):
        # final_record is [train_loss, valid_loss, *metrics]
        val_loss = self.learn.recorder.final_record[1]
        err_rate = self.learn.recorder.final_record[2]
        print(f"\n--- End of Epoch {self.learn.epoch} ---")
        print(f"Validation Loss: {val_loss:.4f}")
        print(f"Error Rate: {err_rate:.4f}")
        print("--------------------------\n")

# Re-create the learner
path = untar_data(URLs.PETS)
dls = ImageDataLoaders.from_name_re(
    path, get_image_files(path/"images"), pat=r'([^/]+)_\d+\.jpg$',
    valid_pct=0.2, seed=42, item_tfms=Resize(224))
learn = vision_learner(dls, resnet34, metrics=error_rate)

# Train the model with the custom callback
learn.fine_tune(2, cbs=[CustomLoggerCallback()])
```

This modular system makes it easy to add complex behaviors such as advanced logging, model checkpointing, or even dynamic hyperparameter adjustments during training. It’s a key reason why Fast.ai is more than just a beginner’s tool; it’s a powerful framework for rapid, iterative experimentation, which is essential for keeping pace with Kaggle competitions and other fast-moving benchmarks.
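fastai already provides callbacks for the checkpointing and early-stopping cases mentioned above: SaveModelCallback and EarlyStoppingCallback, both of which can monitor valid_loss. The framework-free sketch below shows the decision logic such callbacks implement. The class name and parameters are hypothetical, the loss values are made up, and this is not fastai's code.

```python
import math

class EarlyStoppingTracker:
    """Track a monitored value where lower is better, e.g. validation loss.

    Remembers the best epoch (where a checkpoint would be saved) and signals a stop
    after `patience` consecutive epochs without an improvement larger than `min_delta`.
    """

    def __init__(self, patience=2, min_delta=0.0):
        self.patience, self.min_delta = patience, min_delta
        self.best, self.best_epoch, self.wait = math.inf, None, 0

    def update(self, epoch, value):
        """Record one epoch's value and return True if training should stop."""
        if value < self.best - self.min_delta:
            # New best: this is where a checkpointing callback would save the weights
            self.best, self.best_epoch, self.wait = value, epoch, 0
        else:
            self.wait += 1
        return self.wait >= self.patience

# Made-up validation losses, one per epoch
valid_losses = [0.90, 0.70, 0.65, 0.66, 0.68, 0.60]
tracker = EarlyStoppingTracker(patience=2)
stopped_at = None
for epoch, loss in enumerate(valid_losses):
    if tracker.update(epoch, loss):
        stopped_at = epoch
        break
print(f"stopped at epoch {stopped_at}; best loss {tracker.best} at epoch {tracker.best_epoch}")
```

Here training stops at epoch 4 and never sees the lower loss at epoch 5. This illustrates the trade-off behind the patience setting: a small value saves compute but risks stopping too early.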

Best Practices for Prototyping and Production

To make the most of Fast.ai, it’s important to understand its ideal place in the MLOps lifecycle. It excels at rapid prototyping, establishing strong baselines, and iterating on ideas quickly. Here are some best practices for leveraging the library effectively.

When to Use Fast.ai

- Rapid Prototyping: When you have a new idea or dataset, Fast.ai lets you build and train a model quickly to see whether the approach is viable.

- Baseline Models: It’s an excellent tool for establishing a strong baseline performance metric against which more complex or custom models can be compared.

- Transfer Learning: The library’s defaults and helper functions are heavily optimized for transfer learning, which is the most common paradigm in vision and NLP today.

- Democratizing AI: It’s a fantastic teaching tool and a great way to empower teams with varied skill levels to build deep learning models.

Path to Production

While Fast.ai is a development-first framework, models trained with it are fundamentally PyTorch models and can be deployed in any environment that supports PyTorch. A common workflow is to export the trained model to a more universal format like ONNX (Open Neural Network Exchange). An ONNX model can then be optimized with tools like NVIDIA’s TensorRT and served via high-performance inference servers like Triton Inference Server.

You can export a Fast.ai learner with the learn.export() method. It pickles the whole Learner (model architecture, weights, and the data-processing pipeline needed at inference time) to a file, export.pkl by default. For deployment, load it back with load_learner() and call predict() on new inputs. For quick interactive demos, you can easily integrate your exported model with tools like Gradio or Streamlit, providing a user-friendly interface for stakeholders to test the model’s capabilities.

This workflow allows you to benefit from Fast.ai’s rapid development speed while still leveraging robust, production-grade deployment tools available on platforms like AWS SageMaker, Azure Machine Learning, or Vertex AI.

Conclusion: Staying Ahead in the AI Race

The pace of AI innovation shows no signs of slowing down. As models become larger and more capable, the tools we use to harness their power must also evolve. Fast.ai represents a crucial layer in the modern AI stack—an abstraction that manages complexity and empowers developers to focus on creating value rather than wrestling with boilerplate code.

By providing a simple yet powerful API, integrating seamlessly with the broader ecosystem of tools like Hugging Face and PyTorch, and making advanced techniques accessible, Fast.ai helps level the playing field. It allows individuals and small teams to experiment with state-of-the-art methods that were once the exclusive domain of large, well-funded research labs. Whether you are building your first image classifier or fine-tuning a sophisticated language model, Fast.ai is an indispensable tool for staying productive, innovative, and ahead of the curve in this exciting and rapidly accelerating field.