Scaling Python to Petabytes: A Deep Dive into Dask for Multi-GPU High-Performance Computing

The Challenge of Scale in Modern Data Science

In the age of big data, Python’s ease of use and rich ecosystem have made it the lingua franca of data science and machine learning. Libraries like Pandas and NumPy are staples in any data analyst’s toolkit, offering powerful and intuitive APIs for data manipulation. However, these tools were primarily designed for in-memory computation on a single machine. When datasets grow from megabytes to gigabytes or even terabytes, they hit a hard wall: the physical limits of RAM. This bottleneck forces developers into complex, often inefficient workarounds, hindering productivity and slowing down the pace of discovery. The latest Dask News highlights a powerful solution to this pervasive problem.

Dask emerges as a flexible, open-source library for parallel computing in Python, designed to natively scale the familiar APIs of NumPy, Pandas, and scikit-learn. It allows you to work with larger-than-memory datasets on a single machine or scale out computations across a cluster of hundreds of nodes. Unlike more rigid systems, Dask integrates seamlessly into the existing Python ecosystem, making it a natural extension for developers already comfortable with the PyData stack. As the demand for processing massive datasets for everything from scientific simulation to training large language models grows, Dask’s role becomes increasingly critical, positioning it as a key enabler alongside frameworks discussed in Ray News and Apache Spark MLlib News.

The Core of Dask: Lazy Evaluation and Parallel Collections

To understand Dask’s power, one must first grasp its two fundamental components: dynamic task scheduling and parallel collections. Dask cleverly extends popular Python libraries by breaking down large computations into many smaller, manageable tasks, which can then be executed in parallel.

Parallel Collections: Familiar APIs for Distributed Data

Dask provides data structures that mirror the APIs of their single-machine counterparts, making the transition intuitive for developers. The three primary collections are:

- Dask DataFrame: A large parallel DataFrame composed of many smaller, in-memory Pandas DataFrames, partitioned along the index. This allows you to perform Pandas-like queries on datasets that don’t fit in RAM.

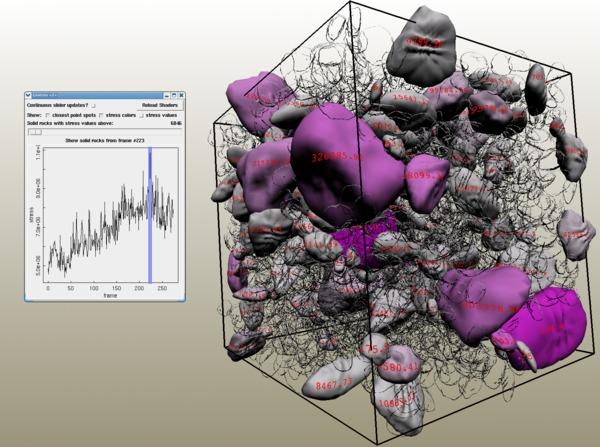

- Dask Array: A large parallel array composed of many smaller NumPy arrays, chunked along various dimensions. It enables scalable N-dimensional array computations for scientific and numerical applications.

- Dask Bag: A parallel collection for processing semi-structured or unstructured data, behaving like a parallel version of Python iterators or a list.

Lazy Evaluation and Task Graphs

The true magic of Dask lies in its “lazy” evaluation model. When you apply an operation to a Dask collection, it doesn’t execute immediately. Instead, Dask builds a task graph—a directed acyclic graph (DAG)—representing the steps required for the computation. Each node in the graph is a Python function, and the edges represent data dependencies. The computation is only triggered when you explicitly call the .compute() method. This approach allows Dask’s scheduler to analyze the entire workflow, optimize the execution plan, and efficiently distribute the tasks across available workers.

Let’s see this in action with a simple Dask DataFrame example. Imagine we have a multi-gigabyte CSV file of flight data that we cannot load into Pandas directly.

import dask.dataframe as dd

import pandas as pd

# Assume 'flights.csv' is a very large dataset

# Dask reads the file in chunks without loading it all into memory

# The 'blocksize' parameter can be tuned based on available RAM

ddf = dd.read_csv('flights.csv', blocksize='64MB')

# This operation builds a task graph but doesn't compute anything yet

# We want to find the average arrival delay per origin airport

avg_delay = ddf.groupby('Origin')['ArrDelay'].mean()

# The task graph for 'avg_delay' is now defined.

# We can visualize it (requires graphviz to be installed)

# avg_delay.visualize(filename='task_graph.png')

# Now, we trigger the computation. Dask reads the data, processes it in parallel,

# and returns a final Pandas Series with the result.

result = avg_delay.compute()

print(result.head())In this example, Dask intelligently partitions the CSV, applies the groupby and mean operations to each partition in parallel, and then combines the intermediate results to produce the final output. All of this happens without ever loading the entire dataset into memory.

Scaling Out with the Dask Distributed Scheduler

While Dask’s local schedulers (threaded and multiprocessing) are excellent for leveraging all the cores on a single machine, its true potential is unlocked with the distributed scheduler. This component allows Dask to coordinate computations across a cluster of multiple machines, providing both scalability and resilience.

Components of a Dask Cluster

A Dask distributed cluster consists of three main components:

- Scheduler: The central coordinator that manages the task graph, assigns tasks to workers, and tracks their progress.

- Worker: A process that connects to the scheduler, executes assigned tasks, and stores results in its local memory. You can have many workers spread across multiple machines.

– Client: The user-facing entry point within your Python session. You interact with the client to submit computations to the scheduler, which then orchestrates the work across the workers.

One of the most valuable features of the distributed scheduler is its diagnostic dashboard, a web-based interface that provides a real-time view of the cluster’s activity. It allows you to monitor CPU usage, memory consumption, task progress, and data transfer between workers, making it an indispensable tool for debugging and performance optimization.

Setting up a local cluster on your machine is straightforward and an excellent way to get started and utilize the dashboard.

from dask.distributed import Client, LocalCluster

import dask.array as da

# Set up a local cluster with 4 workers, each with 2 threads

# Dask will automatically try to use a sensible default if no args are passed

cluster = LocalCluster(n_workers=4, threads_per_worker=2, memory_limit='4GB')

# Connect a client to the local cluster

client = Client(cluster)

# The client provides a link to the diagnostic dashboard

print(f"Dask Dashboard: {client.dashboard_link}")

# Create a large random Dask array (e.g., 20GB)

# This operation is lazy and just creates the graph

x = da.random.random((20000, 20000), chunks=(2000, 2000))

# Perform a computation that involves communication between workers

# (e.g., transposing and then taking the mean)

result = (x + x.T).mean().compute()

print(f"Result: {result}")

# Cleanly shut down the client and cluster

client.close()

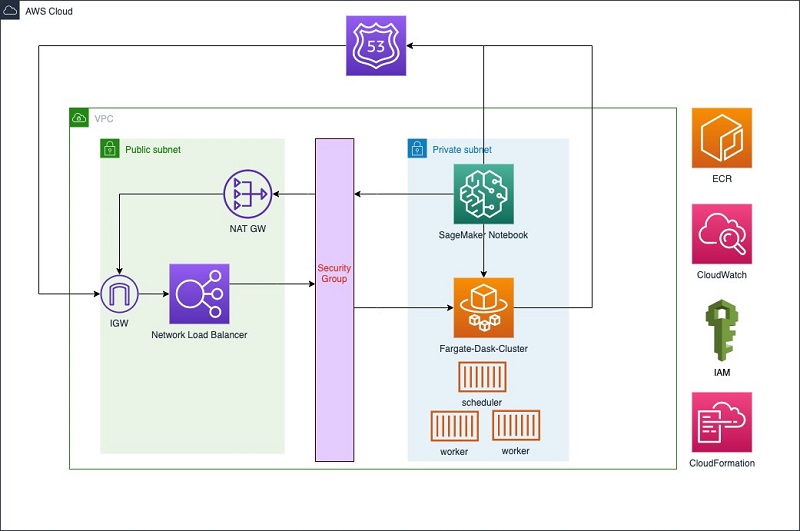

cluster.close()This simple setup demonstrates the core workflow. In a real-world scenario, you would deploy Dask on dedicated hardware, such as an HPC cluster using dask-jobqueue or a cloud environment like Kubernetes, connecting to platforms like AWS SageMaker News or Azure Machine Learning News for managed infrastructure.

Accelerating Data Science with Dask and Multiple GPUs

The parallel computing landscape has been revolutionized by GPUs, which offer massive speedups for data-intensive tasks. While often associated with deep learning frameworks discussed in PyTorch News and TensorFlow News, their application is much broader. The NVIDIA RAPIDS ecosystem provides a suite of libraries, including cuDF (GPU DataFrames) and cuML (GPU ML algorithms), that mirror the APIs of Pandas and Scikit-learn.

Orchestrating Multi-GPU Workflows

Dask is the perfect tool to scale RAPIDS from a single GPU to multiple GPUs, either within one machine or across a multi-node cluster. The dask-cuda library simplifies the process of creating a Dask cluster where each worker is assigned to a specific GPU. A dask_cudf DataFrame is simply a Dask DataFrame where each partition is a cuDF DataFrame residing in the memory of a specific GPU. This allows Dask to orchestrate GPU-to-GPU communication and parallelize computations across all available devices.

This integration is a game-changer for ETL (Extract, Transform, Load) and feature engineering pipelines, enabling data scientists to process terabytes of data entirely on GPUs at blistering speeds. This is a significant topic in the latest NVIDIA AI News, showcasing how hardware and software combine for unprecedented performance.

Here’s a practical example of setting up a multi-GPU Dask cluster and performing a GPU-accelerated merge operation.

import dask_cudf

import cudf

from dask_cuda import LocalCUDACluster

from dask.distributed import Client

# Set up a cluster where each Dask worker controls one GPU

# This will automatically detect the GPUs on your machine

cluster = LocalCUDACluster()

client = Client(cluster)

print(f"Client and cluster are ready. Dashboard: {client.dashboard_link}")

# Create two large Dask-cuDF DataFrames

# In a real scenario, you would read this from storage (e.g., Parquet files)

n_rows = 100_000_000

n_partitions = 10

ddf1 = dask_cudf.from_cudf(

cudf.DataFrame({

'id': range(n_rows),

'x': range(n_rows)

}),

npartitions=n_partitions

)

ddf2 = dask_cudf.from_cudf(

cudf.DataFrame({

'id': range(0, n_rows, 2), # Only even IDs

'y': range(n_rows // 2)

}),

npartitions=n_partitions // 2

)

# Perform a merge operation entirely on the GPUs

# Dask will handle shuffling data between GPUs as needed

merged_ddf = ddf1.merge(ddf2, on='id')

# Trigger the computation and get the length of the result

result_len = len(merged_ddf)

print(f"Length of merged DataFrame: {result_len}")

# Clean up

client.close()

cluster.close()Best Practices, Optimization, and the AI Ecosystem

To get the most out of Dask, it’s essential to follow best practices and understand its place in the broader AI and MLOps landscape. Effective Dask usage often involves careful consideration of data partitioning, workflow design, and performance monitoring.

Key Optimization Strategies

- Partitioning is Key: The size of your partitions (or chunks) is the most critical parameter to tune. Partitions should be small enough to fit comfortably in a worker’s memory but large enough that the overhead of the Dask scheduler doesn’t dominate the computation time. A good starting point is partitions of 100-250MB.

- Avoid Shuffling: Operations that require extensive data movement between workers, such as setting a new index (

set_index) on an unsorted column or merging on a column that isn’t the index, are very expensive. Whenever possible, structure your computations to minimize these “shuffles.” - Persist Intermediate Results: If you plan to reuse an intermediate result multiple times, use the

.persist()method. This tells Dask to compute the result and keep it distributed in the workers’ memory, avoiding redundant computation. - Use the Dashboard: The diagnostic dashboard is your best friend for performance tuning. Use it to identify long-running tasks, memory pressure, or imbalances in work distribution across your workers.

Dask is not an isolated tool; it’s a foundational component in many modern data pipelines. It’s frequently used for preprocessing data before feeding it into training workflows managed by tools like MLflow News or tracked with Weights & Biases News. For large-scale model training, Dask can prepare data that is then consumed by frameworks like PyTorch or TensorFlow, which themselves can be scaled using tools like DeepSpeed News. Furthermore, the data processed by Dask is often used to populate vector databases like those discussed in Pinecone News or Milvus News, which are crucial for retrieval-augmented generation (RAG) systems.

Conclusion: Your Next Step in Scalable Python

Dask provides a powerful and Python-native solution to one of the most significant challenges in data science: scale. By extending familiar APIs and employing a sophisticated lazy-evaluation task scheduler, it empowers developers to handle larger-than-memory datasets with ease. Its ability to seamlessly scale from a single laptop to a multi-node, multi-GPU supercomputing cluster makes it an indispensable tool for modern scientific and AI workloads.

The key takeaways are clear: Dask bridges the gap between the productivity of the PyData ecosystem and the performance demands of high-performance computing. Its integration with the RAPIDS ecosystem further solidifies its position as a go-to solution for GPU-accelerated data analytics. As your data challenges grow, Dask provides a scalable, flexible, and performant path forward. The next step is to explore the official Dask tutorials, set up a local cluster on your machine, and start applying these powerful parallel computing patterns to your own data problems.