Keras 3.11 Deep Dive: Unleashing INT4 Quantization and High-Performance Data Pipelines with Grain

The machine learning landscape is in a constant state of flux, with frameworks evolving at a breakneck pace to meet the demands of ever-larger models and more complex data pipelines. In this dynamic environment, Keras has consistently stood out for its user-friendly API and powerful capabilities. The evolution into Keras 3 marked a pivotal moment, transforming it into a truly multi-backend framework that runs on top of TensorFlow, PyTorch, and JAX. The latest release, Keras 3.11, continues this trajectory and strengthens Keras’s position as a go-to tool for both research and production. It introduces two significant features: `int4` quantization support across all backends and native integration of Google’s high-performance data loader, Grain.

These updates are not just incremental improvements; they represent a significant step forward in model efficiency and data handling scalability. `int4` quantization pushes the boundaries of model compression, making it possible to run large models on resource-constrained hardware, a topic of intense interest across the LLM community, including the Hugging Face Transformers ecosystem. Simultaneously, the integration of Grain addresses one of the most persistent bottlenecks in large-scale training: efficient data loading. This article provides a technical deep dive into these new features, complete with practical code examples, best practices, and an analysis of their impact on the broader ML ecosystem, from PyTorch to JAX.

Unlocking Extreme Efficiency: INT4 Quantization Across All Backends

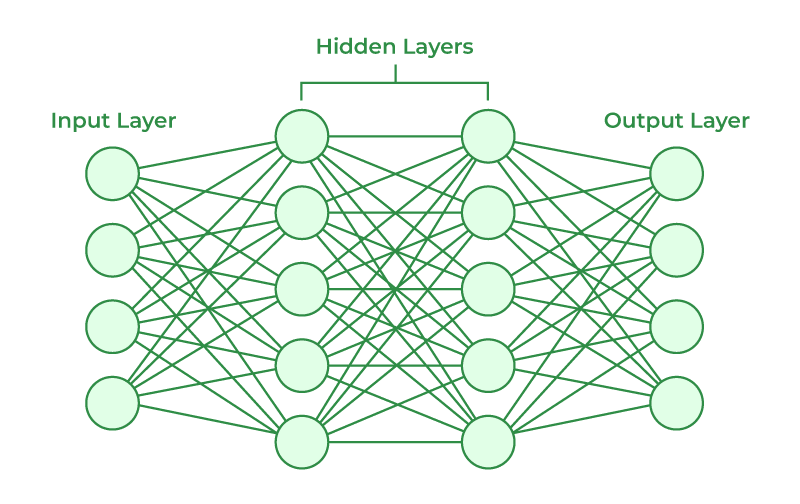

Model quantization is the process of reducing the numerical precision of a model’s weights and/or activations. Traditionally, models are trained using 32-bit floating-point numbers (FP32). Quantization converts these numbers to lower-precision formats, such as 8-bit integers (INT8) or, in this exciting new development, 4-bit integers (INT4). This reduction in precision yields substantial benefits for model deployment.

What is Quantization and Why INT4?

The primary advantages of quantization are:

- Reduced Model Size: Moving from FP32 to INT4 can reduce a model’s storage footprint by up to 8x. This is critical for deployment on edge devices with limited memory or for reducing download times for mobile applications.

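- Faster Inference: Smaller weights mean less data moved from memory, which is often the limiting factor for large models, and integer arithmetic can run faster than floating-point arithmetic on CPUs and accelerators with low-precision support, such as TPUs and GPUs with Tensor Cores. The actual latency and throughput gains depend on whether the backend and hardware provide optimized low-bit kernels.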

- Lower Power Consumption: Reduced memory access and simpler computations result in less power being consumed during inference, a crucial factor for battery-powered devices.
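
The "up to 8x" figure follows from simple arithmetic. The sketch below computes the storage needed for one `Dense` kernel in FP32 and in INT4. It assumes, purely for illustration, that INT4 values are packed two per byte and that each output channel carries one float32 scale factor; that scale overhead is why the ratio lands slightly below 8x. The helper name `kernel_storage_bytes` is hypothetical.

```python
def kernel_storage_bytes(in_dim: int, out_dim: int, bits: int, scale_bytes: int = 0) -> int:
    """Bytes needed to store a Dense kernel of shape (in_dim, out_dim).

    `bits` is the width of each weight; `scale_bytes` is extra per-output-channel
    metadata (assumed here: one float32 scale = 4 bytes) that low-bit formats need.
    """
    weight_bytes = (in_dim * out_dim * bits + 7) // 8  # round up to whole bytes
    return weight_bytes + out_dim * scale_bytes


# Example: the first hidden layer of an MNIST-sized MLP (784 inputs, 512 units).
fp32 = kernel_storage_bytes(784, 512, bits=32)
# Assumption: int4 values packed two per byte plus one float32 scale per output channel.
int4 = kernel_storage_bytes(784, 512, bits=4, scale_bytes=4)

print(f"FP32: {fp32:,} bytes")
print(f"INT4: {int4:,} bytes")
print(f"Compression: {fp32 / int4:.2f}x")
```

For this layer the kernel shrinks from about 1.6 MB to about 0.2 MB; the larger the layer relative to its number of output channels, the closer the ratio gets to the theoretical 8x.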

While INT8 has become a standard for model optimization, INT4 represents the next frontier. It offers even greater compression and potential speedups, making it possible to run models that were previously too large for a given hardware target. The key challenge with lower-bit quantization is maintaining model accuracy: INT4 can represent only 16 distinct values per weight, compared with 256 for INT8, so rounding errors are much larger. However, for many large, over-parameterized models, such as modern large language models, the slight loss in precision is often an acceptable trade-off for the massive gains in efficiency. Keras 3.11 now makes this technique accessible across all its supported backends.
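
To make the accuracy trade-off concrete, here is a simplified sketch of symmetric abs-max int4 quantization of a single weight vector, including the packing of two 4-bit codes into each byte. It is an illustration under stated assumptions (one scale for the whole vector, Python’s round-to-nearest with ties to even), not Keras’s internal implementation, which operates on whole tensors and may differ in its details. All function names are hypothetical.

```python
def quantize_int4(values):
    """Symmetric abs-max quantization of floats to signed int4 codes in [-8, 7]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 7 if max_abs > 0 else 1.0  # map the largest magnitude to 7
    codes = [max(-8, min(7, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int4(codes, scale):
    """Recover approximate float values from int4 codes."""
    return [c * scale for c in codes]


def pack_int4(codes):
    """Store two signed 4-bit codes per byte (low nibble first)."""
    if len(codes) % 2:
        codes = codes + [0]  # pad to an even length
    pairs = zip(codes[0::2], codes[1::2])
    return bytes((lo & 0x0F) | ((hi & 0x0F) << 4) for lo, hi in pairs)


def unpack_int4(packed, count):
    """Inverse of pack_int4: sign-extend each nibble back to an int in [-8, 7]."""
    codes = []
    for byte in packed:
        for nibble in (byte & 0x0F, byte >> 4):
            codes.append(nibble - 16 if nibble > 7 else nibble)
    return codes[:count]


weights = [1.4, -0.6, 0.25, -1.4, 0.0]
codes, scale = quantize_int4(weights)
packed = pack_int4(codes)
restored = dequantize_int4(unpack_int4(packed, len(codes)), scale)

print("codes:   ", codes)
print("packed:  ", len(packed), "bytes for", len(weights), "weights")
print("restored:", [round(v, 3) for v in restored])
```

Note how 0.25 comes back as roughly 0.2: each weight can be off by up to half a quantization step, which is the precision loss that large, redundant models tend to absorb better than small ones.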

A Practical Example: Quantizing a Model with Keras

Keras exposes quantization through a single method, `quantize()`, which you call on a built model, typically after training it in full precision. Let’s look at how to apply `int4` quantization to a simple sequential model.

```python
import keras
from keras import layers

# Build a regular float32 model; no quantization-specific layer arguments are needed
model = keras.Sequential([
    layers.Input(shape=(784,)),
    layers.Dense(512, activation="relu"),
    layers.Dense(256, activation="relu"),
    layers.Dense(10, activation="softmax"),
])

model.compile(
    optimizer="adam",
    loss="sparse_categorical_crossentropy",
    metrics=["accuracy"],
)

# ... train the model in full precision here, e.g. model.fit(x_train, y_train) ...

# Post-training quantization: convert the kernels of all supported layers
# (here, the three Dense layers) to int4. Unsupported layers are skipped.
model.quantize("int4")

# Print the model summary to inspect the quantized model
model.summary()

print("\nModel quantized to int4 successfully.")
```

In this example, the model is defined, compiled, and trained exactly as usual; a single call to `model.quantize("int4")` then converts the FP32 kernels of every supported layer to 4-bit integers with accompanying scale factors. Because quantization is an extra step applied to an existing model rather than a change to how layers are declared, developers don’t need to alter their model-building workflow, which makes advanced optimization techniques more accessible. This ease of use is a core tenet of Keras and is valuable for developers working in tools like Google Colab or on platforms like AWS SageMaker.

High-Performance Data Loading: Introducing Grain Support

As models grow, so do datasets. In many large-scale training scenarios, the GPU or TPU sits idle, waiting for the CPU to load and preprocess the next batch of data. This data loading bottleneck can severely limit training speed and hardware utilization, and it is also a problem that distributed data-processing frameworks such as Ray and Apache Spark aim to solve. Keras 3.11 tackles it head-on by integrating support for Grain.
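
The basic remedy for this bottleneck is to overlap data preparation with computation. The following minimal, standard-library sketch (the `prefetch` helper is hypothetical and not part of Grain or Keras) loads upcoming items on a background thread while the consumer works on the current one. Threads only help when loading is I/O-bound; for CPU-heavy preprocessing, loaders such as Grain use multiple worker processes instead.

```python
import queue
import threading


def prefetch(iterable, buffer_size=2):
    """Yield items from `iterable` while a background thread loads the next ones."""
    buffer = queue.Queue(maxsize=buffer_size)  # bounded: at most buffer_size items ahead
    done = object()  # sentinel marking the end of the stream
    errors = []

    def producer():
        try:
            for item in iterable:
                buffer.put(item)  # blocks when the consumer falls behind
        except Exception as exc:  # hand loader errors over to the consumer
            errors.append(exc)
        finally:
            buffer.put(done)

    # Daemon thread, so an abandoned generator does not keep the interpreter alive
    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buffer.get()
        if item is done:
            if errors:
                raise errors[0]
            return
        yield item


if __name__ == "__main__":
    import time

    def slow_batches(n):
        for i in range(n):
            time.sleep(0.01)  # stand-in for reading and decoding a batch
            yield i

    for batch in prefetch(slow_batches(5)):
        time.sleep(0.01)  # stand-in for a training step
        print("trained on batch", batch)
```

While the consumer "trains" on one batch, the producer is already loading the next, so neither side waits for the other as long as they run at similar speeds.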

The Data Bottleneck Problem and the Grain Solution

Grain is a high-performance, scalable, and deterministic data loading library developed by Google AI. It was designed specifically to feed data to large-scale models running on accelerators like TPUs and multiple GPUs. Key features of Grain include:

- Performance: Grain is optimized for high throughput and low latency. It uses multi-process data loading and prefetching so that the training pipeline is not starved for data.

- Determinism: It provides guarantees of determinism, meaning that given the same seed, it will always produce the exact same sequence of data. This is crucial for reproducibility in research and debugging.

- Scalability: It is designed for massive datasets that may not fit in memory and can efficiently shard data across multiple hosts and devices in a distributed training setup.
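
Grain’s own sampler implementation is more involved, but the following sketch, using only Python’s `random` module, illustrates the determinism and sharding properties described above: a seeded permutation that is identical on every run and on every host, and sharding that gives each host a disjoint, equal-sized slice (dropping the remainder, analogous to `drop_remainder=True`). The function name and seeding scheme are hypothetical.

```python
import random


def shard_indices(num_records, seed, epoch, shard_index, shard_count):
    """Return the record indices one shard should read in a given epoch.

    Every shard derives the same global permutation from (seed, epoch), then takes
    its own contiguous slice, so shards never overlap and reruns reproduce the order.
    """
    order = list(range(num_records))
    random.Random(seed * 1_000_003 + epoch).shuffle(order)  # deterministic per (seed, epoch)
    per_shard = num_records // shard_count  # drop the remainder so all shards are equal
    start = shard_index * per_shard
    return order[start:start + per_shard]


if __name__ == "__main__":
    for shard in range(2):
        indices = shard_indices(num_records=7, seed=42, epoch=0,
                                shard_index=shard, shard_count=2)
        print(f"shard {shard}:", indices)
```

Because no host needs to communicate with another to agree on the order, this style of sharding scales to many hosts while remaining reproducible.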

By supporting Grain directly in the `model.fit()` API, Keras allows users to seamlessly switch from standard data loaders like `tf.data.Dataset` to a more powerful, production-grade alternative without significant code changes. This is particularly relevant for users of cloud platforms like Vertex AI or Azure Machine Learning, where efficient resource utilization is paramount.

Integrating Grain with Keras `fit()`

Using Grain with Keras is straightforward. First, you define your data source and operations using the Grain API, and then you pass the resulting `grain.DataLoader` object directly to `model.fit()`. Let’s create a simple example using an in-memory dataset.

```python
import grain.python as grain
import numpy as np
import keras  # The backend is chosen via the KERAS_BACKEND environment variable


# 1. A map-style (indexable) data source: Grain only needs __len__ and __getitem__.
# In a real scenario, this could read records from files on disk.
class DummyDataSource:
    def __init__(self, features, labels):
        self._features = features
        self._labels = labels

    def __len__(self):
        return len(self._labels)

    def __getitem__(self, index):
        return {"features": self._features[index], "labels": self._labels[index]}


# 2. Define a transformation. Keras expects (inputs, targets) tuples.
class ToFeaturesAndLabels(grain.MapTransform):
    def map(self, element):
        # In a real pipeline, you would do your preprocessing here
        return element["features"], element["labels"]


if __name__ == "__main__":  # Guard the entry point, since worker processes may re-import this module
    num_examples = 1000
    features = np.random.rand(num_examples, 28 * 28).astype(np.float32)
    labels = np.random.randint(0, 10, size=(num_examples,)).astype(np.int32)

    # 3. Create the Sampler and DataLoader
    sampler = grain.IndexSampler(
        num_records=num_examples,
        shard_options=grain.ShardOptions(shard_index=0, shard_count=1, drop_remainder=True),
        shuffle=True,
        seed=42,  # Same seed -> same order on every run
        num_epochs=1,
    )
    data_loader = grain.DataLoader(
        data_source=DummyDataSource(features, labels),
        sampler=sampler,
        operations=[
            ToFeaturesAndLabels(),
            grain.Batch(batch_size=32, drop_remainder=True),  # Keras expects batches
        ],
        worker_count=2,  # Use multiple processes for loading
    )

    # 4. Create a simple model to train
    model = keras.Sequential([
        keras.layers.Input(shape=(784,)),
        keras.layers.Dense(128, activation="relu"),
        keras.layers.Dense(10, activation="softmax"),
    ])
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

    # 5. Pass the DataLoader directly to model.fit()
    print("Starting training with Grain DataLoader...")
    model.fit(data_loader, epochs=2)
    print("Training finished.")
```

This example demonstrates the complete pipeline: defining a data source, converting each record into an `(inputs, targets)` tuple, batching, and finally passing the `DataLoader` directly to `model.fit()`, with no backend-specific wrapper in between. Because Keras consumes the loader’s output as-is, batching must happen inside the Grain pipeline. The `worker_count` parameter of the `DataLoader` is key for performance, as it parallelizes data loading across processes.

Advanced Techniques and Multi-Backend Synergy

The true power of Keras 3 lies in its multi-backend architecture. The new quantization and data loading features are designed to be backend-agnostic, providing a consistent developer experience whether you are using TensorFlow, PyTorch, or JAX.

Cross-Backend Compatibility in Action

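Imagine you have developed a model and a data pipeline using the TensorFlow backend. Now, you want to experiment with JAX for the performance benefits of its JIT compilation. With Keras 3, this transition requires no changes to your model code: the backend is selected through the `KERAS_BACKEND` environment variable (or the Keras config file), which must be set before Keras is imported. The same Keras code for model definition, quantization, and training then works across backends. The quantization API is a good example of this philosophy.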

```python
import os

# Select the backend BEFORE importing Keras; it cannot be switched later in the
# same process. Run the script once per backend, for example:
#   KERAS_BACKEND=jax python this_script.py
#   KERAS_BACKEND=torch python this_script.py
os.environ.setdefault("KERAS_BACKEND", "tensorflow")  # Default to TensorFlow unless already set

import keras
from keras import layers


# --- Backend-agnostic code ---
def create_quantized_model():
    """This function is independent of the backend."""
    model = keras.Sequential([
        layers.Input(shape=(28, 28, 1)),
        layers.Conv2D(32, kernel_size=(3, 3), activation="relu"),
        layers.MaxPooling2D(pool_size=(2, 2)),
        layers.Conv2D(64, kernel_size=(3, 3), activation="relu"),
        layers.Flatten(),
        layers.Dense(10, activation="softmax"),
    ])
    # Apply int4 quantization to every layer that supports it (such as Dense);
    # layers without quantization support are skipped.
    model.quantize("int4")
    return model


# --- The same code runs unchanged on whichever backend was selected ---
print(f"--- Using {keras.backend.backend()} backend ---")
model = create_quantized_model()
model.summary()
```

This code snippet illustrates how the exact same model-building and quantization function produces a model on TensorFlow, JAX, or PyTorch; only the environment variable changes between runs. This portability is a massive productivity booster, allowing teams to leverage the best tools for the job without rewriting their core model logic. The unified approach also simplifies MLOps workflows, making it easier to track and compare experiments across backends with tools like MLflow or Weights & Biases.

Deploying Quantized Models

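Once a model is quantized, the next step is deployment. The reduced model size and faster inference make these models well suited to a variety of targets. Keras models can be exported with `model.export()`, which writes a TensorFlow SavedModel by default and, in recent Keras versions, can also produce formats such as ONNX; an ONNX model can then be optimized further by inference engines such as NVIDIA’s TensorRT or Intel’s OpenVINO. Before relying on this path, verify that the exported artifact preserves the int4 weights and that your target runtime supports them. This workflow is essential for deploying high-performance services with tools like Triton Inference Server or for running models on edge devices.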

Best Practices for Implementation and Optimization

Adopting new features like `int4` quantization and Grain requires careful consideration to maximize their benefits. Here are some best practices and tips.

When to Use INT4 Quantization

While powerful, `int4` quantization is not a silver bullet. It introduces a trade-off between performance and accuracy.

- Ideal Candidates: Large, over-parameterized models like Transformers (e.g., BERT, GPT variants) are excellent candidates. Their inherent redundancy means they can often tolerate significant precision loss with minimal impact on accuracy. This is why quantization is such a hot topic in the LLM community.

- Sensitive Models: Smaller, more compact models or models trained on tasks highly sensitive to small weight variations (e.g., some scientific or medical imaging models) may see a more significant accuracy drop.

- Evaluation is Key: Always rigorously benchmark the quantized model against the FP32 baseline on a hold-out test set. Measure not only accuracy but also latency and memory usage to quantify the real-world gains. Use experiment tracking tools such as Comet ML to log and compare these metrics.
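
A minimal sketch of such a benchmark, using only the standard library, is shown below. `median_latency_ms` times any prediction callable after a few warm-up calls, and `accept_quantized` is a hypothetical release gate that compares accuracies; the 1% default threshold is a placeholder to be chosen per application, not a recommendation from Keras.

```python
import statistics
import time


def median_latency_ms(predict_fn, batch, warmup=3, runs=20):
    """Median wall-clock time of predict_fn(batch), in milliseconds."""
    for _ in range(warmup):  # exclude one-time costs such as tracing or compilation
        predict_fn(batch)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        predict_fn(batch)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


def accept_quantized(baseline_acc, quantized_acc, max_drop=0.01):
    """Hypothetical release gate: accept the quantized model only if its accuracy
    is at most `max_drop` (absolute) below the FP32 baseline."""
    return (baseline_acc - quantized_acc) <= max_drop


if __name__ == "__main__":
    # Stand-in workload; replace with e.g. float_model.predict_on_batch
    print(f"{median_latency_ms(sum, list(range(100_000))):.3f} ms")
```

With Keras, `predict_fn` could be each model’s `predict_on_batch` method, and the accuracies could come from `model.evaluate()` on the same hold-out set, so that both models are measured under identical conditions.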

Optimizing Grain Data Pipelines

To get the most out of Grain, consider the following:

- Tune `worker_count`: The number of worker processes for data loading should be tuned based on the number of CPU cores available. A good starting point is the number of physical cores, but you should experiment to find the optimal value for your specific workload and hardware.

- Preprocessing in transformations: Perform your data augmentation and preprocessing inside Grain transformations (for example, `grain.MapTransform` subclasses passed in the `DataLoader`’s `operations` list). This ensures the work is done in parallel across the worker processes, preventing the main training process from becoming a bottleneck.

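- Use Sharding for Distributed Training: When training across multiple hosts, configure `grain.ShardOptions` so that each host (process) reads a unique, non-overlapping slice of the data, typically with `shard_index` set to the current process index and `shard_count` to the number of processes. Grain is designed to handle this seamlessly.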
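
Because the best `worker_count` depends on the workload and hardware, it is worth measuring rather than guessing. The hypothetical helper below times the first few batches from a loader built with each candidate worker count and returns the fastest. It assumes that `worker_count=0` means loading in the main process, as in Grain, and it counts logical cores via `os.cpu_count()`, which can exceed the number of physical cores.

```python
import os
import time


def choose_worker_count(make_loader, candidates=None, num_batches=50):
    """Measure batches/second for each candidate worker count and return the fastest.

    `make_loader(worker_count)` is a user-supplied factory that returns an iterable
    of batches (for example, a Grain DataLoader built with that worker_count).
    """
    if candidates is None:
        cores = os.cpu_count() or 1  # logical cores; physical cores may be fewer
        candidates = sorted({0, 1, max(1, cores // 2), cores})
    throughput = {}
    for workers in candidates:
        start = time.perf_counter()
        count = 0
        for _ in make_loader(workers):  # timing includes worker start-up cost
            count += 1
            if count == num_batches:
                break
        elapsed = max(time.perf_counter() - start, 1e-9)  # avoid division by zero
        throughput[workers] = count / elapsed
    best = max(throughput, key=throughput.get)
    return best, throughput
```

With Grain, `make_loader` might be `lambda w: grain.DataLoader(data_source=source, sampler=sampler, operations=ops, worker_count=w)`, where `source`, `sampler`, and `ops` are your own pipeline objects. Use enough batches that worker start-up does not dominate the measurement.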

Conclusion: The Road Ahead for Keras

The release of Keras 3.11 is a clear statement of intent. By focusing on production-critical features like `int4` quantization and high-performance data loading, Keras is evolving from a rapid prototyping tool into a comprehensive, end-to-end framework for building, training, and deploying machine learning models at scale. The multi-backend architecture ensures that these powerful features are not locked into a single ecosystem, giving developers the freedom to choose the best tools for their needs, whether it’s TensorFlow’s robust production environment, PyTorch’s research flexibility, or JAX’s raw performance.

For developers and MLOps engineers, these updates provide actionable tools to build more efficient and scalable AI systems. The ability to easily quantize models opens up new possibilities for on-device AI and reduces the operational costs of serving large models. The integration of Grain directly addresses a critical performance bottleneck, enabling faster iteration and more efficient use of expensive accelerator hardware. As the AI landscape continues to evolve, Keras is positioning itself not just as an API, but as a powerful, flexible, and indispensable platform for the next generation of artificial intelligence. The next step is for the community to start applying these features to real-world problems and pushing the boundaries of what’s possible.