Navigating the Data Labyrinth: Technical Strategies for Training Generative AI Models Responsibly

The rapid evolution of generative AI, particularly in text-to-video and advanced image synthesis, has captured the world’s imagination. Recent announcements from OpenAI and Stability AI highlight how capable these models have become. However, beneath the surface of these technological marvels lies a foundational and increasingly complex challenge: the sourcing and ethical use of vast quantities of training data. The quality, diversity, and provenance of this data directly shape a model’s performance, biases, and, crucially, its legal and ethical standing.

For developers, data scientists, and MLOps engineers, this is not just an abstract debate but a pressing technical problem. How do we build state-of-the-art models while navigating the intricate labyrinth of data rights? The answer lies in a multi-faceted technical strategy that combines intelligent data sourcing, the generation of synthetic datasets, robust MLOps practices for transparency, and a commitment to responsible development. This article delves into the practical, code-driven approaches that teams can implement to build powerful generative models while mitigating risk and fostering trust, in an ecosystem that labs such as Meta AI and Google DeepMind keep reshaping with each release.

The Foundation: Data Sourcing and Preprocessing Pipelines

Every great AI model is built on a foundation of data. The scale required for training modern foundation models is staggering, often involving petabytes of text, images, and video. The initial and most critical phase of any project is establishing a robust and defensible data sourcing and preprocessing pipeline. This involves navigating a spectrum of data sources and implementing technical safeguards.

The Spectrum of Data Sources

Training data for generative models typically comes from a variety of sources, each with its own technical and legal considerations:

- Public Domain and Open Licenses: Datasets explicitly licensed for commercial use and modification (e.g., Creative Commons licenses like CC0 or CC-BY) are the safest starting point. This includes government data, historical archives, and community-curated datasets.

- Licensed Commercial Datasets: Companies can purchase or license high-quality, curated datasets from providers like Getty Images or Shutterstock. While costly, this provides clear usage rights.

- Web-Scraped Data: The most common but also the most contentious source. Common Crawl is a popular example, containing a massive snapshot of the public web. The challenge here is filtering this raw data to respect `robots.txt` directives, remove copyrighted material, and filter out low-quality or harmful content.

- Proprietary Data: An organization’s internal data can be a powerful asset for fine-tuning models for specific tasks, assuming the necessary rights and user consents are in place.

Technical Implementation of a Filtering Scraper

When scraping data, it’s crucial to build in programmatic checks for permissions. This involves not only respecting `robots.txt` but also attempting to identify licensing information directly on the page. While not foolproof, it represents a due-diligence step in the data collection process. The following Python example uses `requests` and `BeautifulSoup` to demonstrate a basic scraping function with a placeholder for license filtering.

```python
import re

import requests
from bs4 import BeautifulSoup

# CC BY, CC BY-SA, and the CC0 public-domain dedication. Variants with
# NonCommercial or NoDerivatives terms (by-nc, by-nd, ...) do not match.
PERMISSIVE_CC_PATTERN = re.compile(
    r"creativecommons\.org/(?:licenses/(?:by|by-sa)/|publicdomain/zero/)"
)


def has_permissive_license(soup):
    """
    A simplified function to check for common permissive license indicators.
    In a real-world scenario, this would be far more sophisticated,
    potentially using NLP to understand license text.
    """
    # Check for Creative Commons links
    cc_links = soup.find_all('a', href=PERMISSIVE_CC_PATTERN)
    if cc_links:
        print("Found a Creative Commons license.")
        return True

    # Check for meta tags indicating license
    license_meta = soup.find('meta', attrs={'name': 'license'})
    if license_meta and PERMISSIVE_CC_PATTERN.search(license_meta.get('content', '').lower()):
        print("Found a license meta tag.")
        return True

    # Check for explicit text (very basic, and prone to false positives:
    # a page can mention "public domain" without being in it)
    body_text = soup.get_text().lower()
    if "public domain" in body_text or "cc-by" in body_text:
        print("Found permissive text indicators.")
        return True

    return False


def scrape_and_filter_url(url):
    """
    Scrapes a URL and processes it only if a permissive license is detected.
    """
    try:
        headers = {
            'User-Agent': 'MyResponsibleAI-Bot/1.0 (+http://my-project-url.com/bot-info)'
        }
        response = requests.get(url, headers=headers, timeout=10)
        response.raise_for_status()  # Raise an exception for bad status codes

        soup = BeautifulSoup(response.content, 'html.parser')

        if has_permissive_license(soup):
            print(f"Processing content from {url} as it appears to have a permissive license.")
            # ... further processing logic here ...
            # For example, extract image URLs and their alt text
            images = soup.find_all('img')
            for img in images:
                img_src = img.get('src')
                alt_text = img.get('alt', 'No description')
                if img_src:
                    print(f" - Found Image: {img_src}, Description: '{alt_text}'")
        else:
            print(f"Skipping {url} - No clear permissive license found.")

    except requests.exceptions.RequestException as e:
        print(f"Could not retrieve {url}: {e}")


if __name__ == "__main__":
    # Example usage: a URL that likely mentions licenses
    target_url = "https://en.wikipedia.org/wiki/Public_domain"
    scrape_and_filter_url(target_url)
```

This approach, when scaled up using frameworks like Apache Spark or Ray, forms the first line of defense in a responsible data acquisition strategy.
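The scraper above checks licenses but does not consult `robots.txt`, which the text names as a requirement. A minimal sketch of that check, using only the standard library's `urllib.robotparser`, might look like the following. The bot name and the helper names (`build_robots_parser`, `robots_url_for`, `is_allowed`) are assumptions made for illustration; in a real crawler the rules would be fetched from the URL returned by `robots_url_for` and cached per host.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

# Hypothetical bot name; it should match the User-Agent the scraper sends.
BOT_USER_AGENT = "MyResponsibleAI-Bot"


def build_robots_parser(robots_txt):
    """Build a parser from the raw text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def robots_url_for(url):
    """Return the robots.txt location for the site that hosts `url`."""
    parts = urlparse(url)
    return f"{parts.scheme}://{parts.netloc}/robots.txt"


def is_allowed(parser, url, user_agent=BOT_USER_AGENT):
    """True if the parsed rules permit `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)


# Offline demonstration with an inline robots.txt body (no network access).
sample_rules = "User-agent: *\nDisallow: /private/\n"
demo_parser = build_robots_parser(sample_rules)
for demo_url in (
    "https://example.com/gallery/cats.html",
    "https://example.com/private/drafts.html",
):
    print(demo_url, "->", is_allowed(demo_parser, demo_url))
```

Calling `is_allowed` before `requests.get` in `scrape_and_filter_url` would let the scraper skip disallowed pages without downloading them.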

Building Ethically: Synthetic Data and Licensed Alternatives

Relying solely on scraped data is becoming increasingly untenable. An effective alternative is the generation of synthetic data. This involves using a pre-existing model to create new, original data points that mimic the statistical properties of a real-world dataset. This approach offers greater control over content, can help mitigate bias, and sidesteps many copyright issues.

Generating Synthetic Training Prompts with Transformers

For training text-to-video models, one of the key assets is a high-quality dataset of video-description pairs. Instead of scraping potentially copyrighted descriptions, we can use a powerful language model to generate them. The Hugging Face Transformers library makes this accessible. We can prompt a model like Mistral or Llama to generate rich, descriptive scenarios that can then be used as training targets.

This example demonstrates how to use a model from the Hugging Face Hub to generate creative prompts for a video generation model. Instruction-tuned models, such as those published by Mistral AI or Cohere, are a natural fit for this kind of task.

```python
import torch
from transformers import pipeline

# Use a comparatively small model for demonstration.
# In production, you might use a larger model via an API or a powerful GPU.
# Make sure to install: pip install transformers torch accelerate
generator = pipeline(
    'text-generation',
    model='mistralai/Mistral-7B-Instruct-v0.1',
    device=0 if torch.cuda.is_available() else -1,
)


def generate_video_prompts(base_concept, num_prompts=3):
    """
    Generates a list of detailed, synthetic video prompts based on a simple concept.
    """
    prompts = []
    # Mistral's instruct models expect the request wrapped in [INST] ... [/INST].
    instruction = f"""[INST]
    Generate {num_prompts} highly descriptive, cinematic prompts for a text-to-video AI.
    Each prompt should be a single, detailed paragraph.
    Base the prompts on the concept: '{base_concept}'.
    Example format:
    A drone shot slowly pushing in on a lone lighthouse at sunset. Storm clouds gather on the horizon, and waves crash violently against the rocky shore. The lighthouse beam cuts through the twilight mist.
    [/INST]"""

    # return_full_text=False keeps the instruction (and its example) out of the
    # output; otherwise the parser below would pick up lines from the prompt itself.
    response = generator(
        instruction,
        max_new_tokens=300,
        num_return_sequences=1,
        return_full_text=False,
    )

    # The output needs parsing to separate the prompts
    generated_text = response[0]['generated_text']

    # Simple parsing based on newlines, a more robust parser might be needed
    potential_prompts = generated_text.split('\n')

    # Filter for non-empty, descriptive lines
    for p in potential_prompts:
        if len(p.strip()) > 50:  # Filter out short/empty lines
            prompts.append(p.strip())
        if len(prompts) >= num_prompts:
            break

    return prompts


# Generate prompts for a specific concept
concept = "a futuristic city at night"
synthetic_prompts = generate_video_prompts(concept, num_prompts=3)

print(f"--- Generated Synthetic Prompts for '{concept}' ---")
for i, prompt in enumerate(synthetic_prompts):
    print(f"{i+1}. {prompt}")
```

By creating a large corpus of these synthetic prompts, teams can build a unique and proprietary dataset for training or fine-tuning models without directly using scraped text that may be copyrighted. Frameworks like LangChain and LlamaIndex are well suited to orchestrating these generation and data augmentation pipelines.
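The newline-based parsing in the example above is acknowledged to be simplistic. Models often return numbered or bulleted lists and occasionally repeat themselves, so a slightly more defensive parser can help when building a large corpus. The sketch below is one possible approach, not a definitive one; `extract_prompts` and its `min_chars` threshold are hypothetical names chosen for illustration.

```python
import re

# Matches list markers such as "1.", "2)", "-", or "*" at the start of a line.
_LIST_MARKER = re.compile(r"^\s*(?:\d+[.)]|[-*])\s+")


def extract_prompts(generated_text, min_chars=50):
    """Split model output into prompts, dropping list markers and duplicates."""
    prompts = []
    seen = set()
    for line in generated_text.splitlines():
        text = _LIST_MARKER.sub("", line).strip()
        if len(text) < min_chars:
            continue  # Skip blank lines, headings, and short filler.
        # Case- and whitespace-insensitive key used only for deduplication.
        key = re.sub(r"\s+", " ", text).lower()
        if key in seen:
            continue
        seen.add(key)
        prompts.append(text)
    return prompts


# Offline demonstration with hand-written text standing in for model output.
sample_output = (
    "Here are three prompts:\n"
    "1. A slow aerial glide over a neon-lit megacity as rain streaks past the camera.\n"
    "2) A street-level tracking shot through a crowded night market under holograms.\n"
    "- A slow aerial glide over a neon-lit megacity as rain streaks past the camera.\n"
)
for prompt in extract_prompts(sample_output):
    print(prompt)
```

Exact-match deduplication only catches verbatim repeats; paraphrased duplicates would need an embedding-based comparison.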

Ensuring Transparency and Reproducibility with MLOps

As datasets become a complex mix of scraped, licensed, and synthetic data, tracking the origin and transformation of every single data point becomes paramount. This is where MLOps (Machine Learning Operations) practices are essential. Data provenance, a detailed record of where each piece of data came from and how it was transformed, is the cornerstone of building auditable and trustworthy AI systems.
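To make the idea of a provenance record concrete, here is a minimal sketch of a per-sample manifest entry. The `ProvenanceRecord` class, its field names, and the example URIs are all assumptions made for illustration rather than an established schema; the point is simply that each sample carries its source, license, and a content hash.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ProvenanceRecord:
    """One entry in a dataset manifest. Field names are illustrative."""
    sample_id: str
    source_type: str  # e.g. "licensed", "synthetic", or "web"
    source_uri: str
    license: str
    sha256: str


def make_record(sample_id, source_type, source_uri, license, content):
    """Create a record whose hash is computed from the raw sample bytes."""
    digest = hashlib.sha256(content).hexdigest()
    return ProvenanceRecord(sample_id, source_type, source_uri, license, digest)


def to_jsonl(records):
    """Serialize records as JSON Lines, one sample per line."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in records)


# Demonstration with placeholder content and hypothetical URIs.
records = [
    make_record("s-0001", "synthetic", "generator://prompts/v2", "internal", b"A neon city at night."),
    make_record("s-0002", "web", "https://example.com/page", "CC-BY-4.0", b"An openly licensed caption."),
]
print(to_jsonl(records))
```

A manifest like this can be stored next to the dataset and logged alongside each training run, so a question about any single sample can be answered from the record.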

Implementing Data Lineage with MLflow

Tools like MLflow, Weights & Biases, and Comet ML are designed to track experiments, including the data used. By logging dataset sources, versions, and preprocessing steps as parameters or artifacts, you create a durable record for each model you train. This is invaluable for debugging, for reproducing results, and for demonstrating compliance if questions about your training data arise.

Here is a conceptual example of how you might use MLflow to log data sources during a training script. The same pattern carries over to managed platforms such as AWS SageMaker and Azure Machine Learning.

```python
import hashlib

import mlflow


def get_dataset_hash(file_path):
    """Calculates the SHA256 hash of a dataset file for versioning."""
    sha256_hash = hashlib.sha256()
    with open(file_path, "rb") as f:
        for byte_block in iter(lambda: f.read(4096), b""):
            sha256_hash.update(byte_block)
    return sha256_hash.hexdigest()


# --- Configuration for our training data ---
licensed_data_path = "s3://my-bucket/licensed_data/shutterstock_q1_2024.csv"
synthetic_data_path = "s3://my-bucket/synthetic_data/generated_prompts_v2.csv"
web_data_path = "s3://my-bucket/filtered_web_data/common_crawl_subset_filtered.parquet"

# Start an MLflow run to track our experiment
with mlflow.start_run(run_name="Video_Model_Training_Run_v1.2") as run:
    print(f"Started MLflow run: {run.info.run_id}")

    # Log data sources and versions as parameters
    mlflow.log_param("licensed_data_source", licensed_data_path)
    mlflow.log_param("synthetic_data_source", synthetic_data_path)
    mlflow.log_param("web_data_source", web_data_path)

    # For local files, we could log a hash to ensure reproducibility
    # For this example, we'll just log the path as a proxy for version
    # licensed_data_hash = get_dataset_hash('local_licensed_data.csv')
    # mlflow.log_param("licensed_data_hash", licensed_data_hash)

    # Log preprocessing parameters
    mlflow.log_param("image_resolution", 512)
    mlflow.log_param("frames_per_video", 24)

    print("Logged data sources and parameters to MLflow.")

    # --- Training Loop Would Go Here ---
    # model = train_my_model(data_paths=[...])
    # mlflow.pytorch.log_model(model, "video_model")

    # Log metrics (placeholder values for illustration)
    mlflow.log_metric("validation_loss", 0.123)
    mlflow.log_metric("fid_score", 28.5)

print("MLflow run completed.")
# You can now view this run in the MLflow UI to see exactly what data was used.
```

Fine-Tuning on Curated Datasets

Another powerful strategy is to take a pre-trained open-source foundation model and fine-tune it on a smaller, high-quality, and fully licensed dataset. This approach, well covered in PyTorch and TensorFlow tutorials, significantly reduces data and compute requirements while allowing for specialization. Vector databases like Pinecone, Milvus, and Weaviate can be instrumental in curating this fine-tuning data by helping find and remove items semantically similar to known copyrighted works.
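The similarity filtering described above can be sketched without any particular vector database. The example below assumes that embeddings have already been computed by some model (not shown) and uses a brute-force cosine comparison in pure Python; `filter_near_matches` and the `0.9` threshold are hypothetical choices for illustration. A vector database performs the same comparison with an approximate index so that it scales to millions of items.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def filter_near_matches(candidates, blocklist_vectors, threshold=0.9):
    """Return IDs of candidates that stay below `threshold` against every blocklisted vector.

    `candidates` is an iterable of (item_id, embedding) pairs.
    """
    kept = []
    for item_id, vector in candidates:
        max_sim = max(
            (cosine_similarity(vector, blocked) for blocked in blocklist_vectors),
            default=0.0,
        )
        if max_sim < threshold:
            kept.append(item_id)
    return kept


# Toy two-dimensional embeddings standing in for real model outputs.
blocklist = [[1.0, 0.0]]
items = [("img-a", [1.0, 0.0]), ("img-b", [0.0, 1.0]), ("img-c", [0.99, 0.1])]
print(filter_near_matches(items, blocklist))
```

The right threshold depends on the embedding model and should be tuned against examples that reviewers have labeled by hand.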

Best Practices and Optimization for Responsible AI

Beyond specific code implementations, a culture of responsible AI development requires adopting a set of overarching best practices. These practices ensure that ethical considerations are woven into the entire model lifecycle, from conception to deployment.

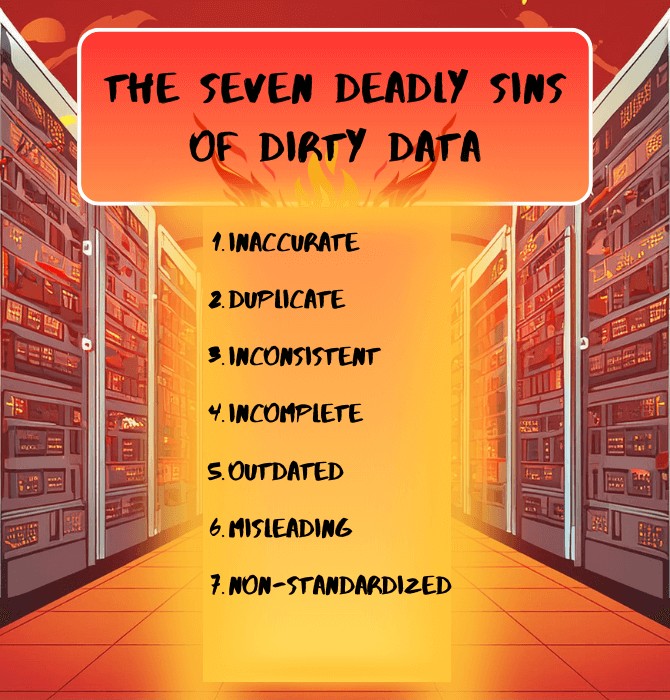

Data Filtering and Denoising

Raw datasets are invariably noisy. It’s critical to implement robust filtering steps to remove:

- Personally Identifiable Information (PII): Use NLP techniques and pattern matching to scrub names, addresses, and other sensitive information.

- Harmful or Toxic Content: Employ content moderation APIs or pre-trained classifier models to identify and remove unsafe text and images.

- Low-Quality Data: Filter out blurry images, garbled text, or irrelevant content that could degrade model performance.
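As a small illustration of the pattern-matching side of PII removal, the sketch below replaces email addresses and North American style phone numbers with placeholder tokens. The patterns and the `scrub_pii` name are assumptions for illustration and are far from exhaustive: names, postal addresses, and other phone formats need NER models or dedicated tooling.

```python
import re

EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")

# North American style numbers only; other formats need their own patterns.
PHONE_RE = re.compile(
    r"(?<!\d)(?:\+?1[\s.-]?)?(?:\(\d{3}\)|\d{3})[\s.-]?\d{3}[\s.-]?\d{4}(?!\d)"
)


def scrub_pii(text):
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


print(scrub_pii("Contact jane.doe@example.com or 555-123-4567."))
```

Replacing matches with typed tokens rather than deleting them keeps sentences grammatical, which tends to matter when the text is later used for training.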

Documentation and Model Cards

Transparency is key to building trust. Adopting practices like “Datasheets for Datasets” and “Model Cards” is crucial. A datasheet should meticulously document a dataset’s origins, collection methods, licensing, and known biases. A model card should similarly detail the trained model’s performance characteristics, limitations, and intended use cases. This documentation provides essential context for both internal stakeholders and external users, and several major AI labs, Anthropic among them, publish such documentation for their models.
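Keeping a datasheet as structured data next to the dataset makes it easier to version and review. The following is a minimal sketch under that assumption; the `Datasheet` class and its fields mirror the items listed above (origins, collection method, licensing, known biases) but are not a standard schema, and the example values are invented.

```python
from dataclasses import dataclass, field


@dataclass
class Datasheet:
    """Minimal datasheet; field names are illustrative, not a standard schema."""
    name: str
    sources: list
    collection_method: str
    licenses: list
    known_biases: list = field(default_factory=list)

    def render(self):
        """Render the datasheet as plain text for a repository or wiki page."""
        biases = self.known_biases or ["None documented yet"]
        lines = [
            f"Datasheet: {self.name}",
            f"Collection method: {self.collection_method}",
            "Sources:",
            *[f"  - {source}" for source in self.sources],
            "Licenses:",
            *[f"  - {lic}" for lic in self.licenses],
            "Known biases:",
            *[f"  - {bias}" for bias in biases],
        ]
        return "\n".join(lines)


# Example values are invented for demonstration.
sheet = Datasheet(
    name="video-captions-v2",
    sources=["licensed stock footage", "synthetic prompts"],
    collection_method="vendor delivery plus LLM generation",
    licenses=["commercial license", "internal"],
    known_biases=["over-represents urban scenes"],
)
print(sheet.render())
```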

Leveraging Cloud AI Platforms and Optimized Inference

Managing these complex data and training pipelines at scale is a significant engineering challenge. Cloud platforms like AWS SageMaker, Azure Machine Learning, and Google’s Vertex AI provide managed infrastructure and services that simplify MLOps. For deployment, optimizing models with tools like NVIDIA’s TensorRT or exporting them to an open format like ONNX is critical for efficient inference. Serving these optimized models on Triton Inference Server, or using an efficient engine like vLLM, helps ensure that the final product is both performant and scalable.

Conclusion: The Path Forward

The conversation around training data for generative AI is evolving at a breakneck pace. For developers and researchers, the challenge is to keep up not just with the latest model architectures but with the best practices for responsible data handling. The path forward is not about finding a single, perfect data source, but about building a resilient and multi-faceted technical strategy.

This involves implementing programmatic safeguards during data collection, embracing synthetic data generation to reduce reliance on web-scraped content, and leveraging robust MLOps tools like MLflow to ensure every model is auditable and reproducible. By combining these technical solutions with a commitment to transparency through thorough documentation, the AI community can continue to push the boundaries of what’s possible while building a more trustworthy and ethical technological future.