Unlocking SAP Data with DataRobot: A Technical Deep Dive into the New AI Application Suites

Introduction: The New Frontier of Enterprise AI in SAP Environments

In the world of enterprise resource planning (ERP), SAP stands as a titan, housing the central nervous system of countless global organizations. From supply chain logistics and financial transactions to human resources data, SAP systems are the definitive source of truth for critical business operations. However, extracting predictive value and actionable intelligence from this vast data repository has historically been a monumental task, often requiring specialized data science teams, lengthy development cycles, and deep expertise in machine learning frameworks like TensorFlow or PyTorch. This is a landscape ripe for disruption, and DataRobot’s recent announcement signals a significant shift. The new AI Application Suites, designed specifically for SAP users, aim to democratize access to advanced analytics and automated decision-making.

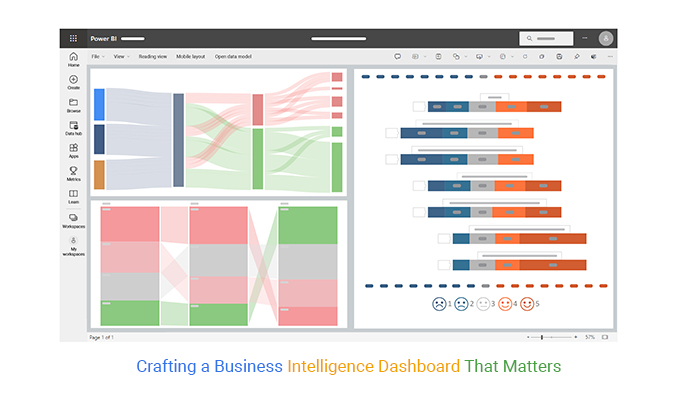

This initiative moves beyond traditional business intelligence (BI) dashboards, empowering business analysts and operations managers—the individuals closest to the data—to build, deploy, and manage sophisticated AI models directly within their workflows. By abstracting the complexities of model selection, feature engineering, and MLOps, these tools promise to dramatically accelerate the path from raw SAP data to tangible business outcomes. This article provides a comprehensive technical exploration of how these new capabilities work, offering practical code examples and best practices for developers and data professionals looking to leverage DataRobot to supercharge their SAP ecosystems. We will delve into the end-to-end workflow, from data integration to programmatic model deployment and monitoring, showcasing how this fusion of AutoML and enterprise data is setting a new standard for business innovation.

Section 1: Core Concepts – Connecting SAP Data to the AutoML Engine

The fundamental challenge in leveraging SAP data for AI is bridging the gap between the structured, transactional world of ERP and the flexible, iterative environment of machine learning. SAP data is often siloed, intricately structured, and hard to interpret without significant domain expertise. DataRobot’s approach simplifies this by providing a platform that can ingest this data and automate the entire modeling pipeline. The core of this process is DataRobot’s AutoML engine, which evaluates a large library of candidate modeling pipelines and trains the most promising ones for a given dataset and prediction problem.

Understanding the Data Connection and Project Setup

Before any modeling can begin, data must be extracted from the SAP system (e.g., SAP S/4HANA, SAP BW/4HANA, or legacy ECC systems) and made available to DataRobot. While direct connectors are often available, a common and flexible approach involves exporting the required data as flat files (like CSVs) or staging it in a cloud data warehouse like Snowflake or Google BigQuery, which DataRobot can then access. For this article, we will simulate this process using a CSV file representing a typical business problem: predicting customer churn based on sales and engagement data that would originate from an SAP Sales and Distribution (SD) module.
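Raw SAP extracts are usually transactional (one row per order), while the churn dataset used in this article has one row per customer. The sketch below shows one way that reshaping could be done with pandas before upload. The column names follow the SAP VBAK sales order header table (KUNNR, VBELN, ERDAT, NETWR), but the sample rows, the snapshot date, and the six-month window are assumptions made for illustration.

```python
import pandas as pd

# Hypothetical flat export of sales order headers. Column names follow the
# SAP VBAK table (KUNNR = customer, VBELN = order number, ERDAT = created on,
# NETWR = net value); a real extract may use different fields.
orders = pd.DataFrame({
    "KUNNR": [1001, 1001, 1002, 1003, 1003, 1003],
    "VBELN": [5001, 5002, 5003, 5004, 5005, 5006],
    "ERDAT": ["2024-01-10", "2024-05-20", "2024-02-01",
              "2024-04-15", "2024-05-30", "2024-06-10"],
    "NETWR": [120.0, 181.0, 89.99, 400.0, 450.0, 500.0],
})
orders["ERDAT"] = pd.to_datetime(orders["ERDAT"])

snapshot_date = pd.Timestamp("2024-06-30")            # date the features describe
window_start = snapshot_date - pd.DateOffset(months=6)
recent = orders[orders["ERDAT"] > window_start]

# Collapse order rows into one feature row per customer.
features = (
    recent.groupby("KUNNR")
    .agg(
        avg_order_value=("NETWR", "mean"),
        orders_last_6_months=("VBELN", "nunique"),
        last_order=("ERDAT", "max"),
    )
    .reset_index()
    .rename(columns={"KUNNR": "customer_id"})
)
features["days_since_last_order"] = (snapshot_date - features["last_order"]).dt.days
features = features.drop(columns="last_order")
print(features)
```

Customers with no orders inside the window drop out of this result, so a production pipeline would typically left-join the features onto the customer master data and fill the gaps explicitly.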

Programmatically, interaction with the platform is handled via the DataRobot Python client. This allows for seamless integration into existing data pipelines and CI/CD workflows. The first step is always to establish a connection and create a new project by uploading your dataset.

```python
import datarobot as dr
import pandas as pd

# --- Configuration ---
# It's best practice to use environment variables for credentials,
# for example DATAROBOT_API_TOKEN and DATAROBOT_ENDPOINT;
# dr.Client() will automatically pick them up. Explicit alternative:
# dr.Client(token='YOUR_API_TOKEN', endpoint='https://app.datarobot.com/api/v2')

# --- 1. Connect to DataRobot ---
print("Connecting to DataRobot...")
client = dr.Client()
print("Connection successful.")

# --- 2. Simulate SAP Data Export ---
# In a real-world scenario, this data would be an export from an SAP system.
# It might contain customer master data, sales order history, etc.
# Six rows keep the example readable; DataRobot needs far more rows than
# this before it can build models.
data = {
    'customer_id': [1001, 1002, 1003, 1004, 1005, 1006],
    'avg_order_value': [150.50, 89.99, 450.00, 210.25, 99.00, 320.75],
    'orders_last_6_months': [12, 3, 25, 8, 4, 18],
    'days_since_last_order': [15, 89, 5, 32, 120, 10],
    'support_tickets_opened': [1, 5, 2, 0, 8, 1],
    'churned': [0, 1, 0, 0, 1, 0],  # Our target variable
}
sap_customer_df = pd.DataFrame(data)
sap_customer_df.to_csv('sap_customer_churn.csv', index=False)
print("Simulated SAP data saved to sap_customer_churn.csv")

# --- 3. Create a Project in DataRobot ---
print("Uploading dataset and creating a new project...")
project = dr.Project.create(
    sourcedata='sap_customer_churn.csv',
    project_name='SAP Customer Churn Prediction',
)
print(f"Project '{project.project_name}' created successfully.")
print(f"Project ID: {project.id}")
print(f"Project URL: {project.get_uri()}")
```

This script demonstrates the foundational step of programmatic interaction. It establishes a connection, prepares a sample dataset, and initiates a project in DataRobot. This simple action kicks off an automated process in which DataRobot profiles the data, identifies variable types, and prepares for the modeling stage. Reducing manual data preparation overhead in this way is a recurring theme across AutoML tooling.
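Automated profiling does not remove the need for a quick sanity check before data leaves your environment. The sketch below is a minimal local check written with pandas only. The function name `pre_upload_report`, the `region` column, and the choice of checks are illustrative assumptions, not part of the DataRobot client.

```python
import pandas as pd

def pre_upload_report(df: pd.DataFrame, target: str) -> dict:
    """Summarize issues worth fixing before the dataset is uploaded."""
    if target not in df.columns:
        raise ValueError(f"target column '{target}' not found")
    return {
        "rows": len(df),
        "duplicate_rows": int(df.duplicated().sum()),
        "missing_by_column": df.isna().sum().to_dict(),
        # Columns with a single distinct value carry no signal.
        "constant_columns": [c for c in df.columns if df[c].nunique(dropna=False) <= 1],
        # Share of positives, assuming a 0/1 encoded target.
        "target_rate": float(df[target].mean()),
    }

sample = pd.DataFrame({
    "customer_id": [1001, 1002, 1003, 1004, 1005, 1006],
    "days_since_last_order": [15, 89, 5, 32, 120, 10],
    "region": ["EMEA"] * 6,          # hypothetical constant column
    "churned": [0, 1, 0, 0, 1, 0],
})
report = pre_upload_report(sample, target="churned")
print(report)
```

A very low or very high `target_rate`, or an identifier column that accidentally encodes the outcome, is much cheaper to catch at this stage than after a full modeling run.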

Section 2: The Automated Modeling Pipeline in Action

Once a project is created, the next step is to configure and run the AutoML process. This is where DataRobot’s core value proposition shines. Instead of a data scientist manually testing algorithms from libraries like scikit-learn, XGBoost, or even deep learning frameworks like Keras or PyTorch, DataRobot automates this exploration. It builds dozens of models, from regularized linear models to gradient-boosted trees and ensembles, all competing on a leaderboard to identify the most accurate and robust solution.

Initiating and Monitoring an AutoML Project

For our SAP churn prediction use case, we need to specify ‘churned’ as the target variable we want to predict. We can also choose the modeling mode. “Quick” mode provides a fast exploration, while “Autopilot” is more comprehensive. For production use cases, “Autopilot” is recommended. The following code starts the modeling process and then monitors its progress until completion.

```python
import datarobot as dr

# --- Configuration ---
# Assume the project was created in the previous step
PROJECT_ID = 'YOUR_PROJECT_ID'  # Replace with the ID from the previous script

# --- 1. Connect and Get Project ---
client = dr.Client()
project = dr.Project.get(PROJECT_ID)
print(f"Retrieved project: '{project.project_name}'")

# --- 2. Set the Target and Start Autopilot ---
# We are predicting the 'churned' column.
# The metric 'LogLoss' is a good choice for binary classification.
# (Newer client versions also offer project.analyze_and_model for this step.)
print("Starting Autopilot to predict 'churned'...")
project.set_target(
    target='churned',
    metric='LogLoss',
    mode=dr.enums.AUTOPILOT_MODE.FULL_AUTO,  # or .QUICK
    worker_count=-1,  # Use maximum available workers
)
print("Autopilot started. Modeling is in progress...")

# --- 3. Monitor the Project ---
# Block until the project finishes modeling.
project.wait_for_autopilot()
print("Autopilot has completed.")

# --- 4. Retrieve and Inspect the Best Model ---
# Reload the project so that project.metric reflects the modeling settings.
project = dr.Project.get(PROJECT_ID)
metric = project.metric

# The leaderboard contains all the models DataRobot built.
leaderboard = project.get_models()
print("\nTop 5 models on the leaderboard (sorted by validation score):")
for i, model in enumerate(leaderboard[:5]):
    score = model.metrics[metric]['validation']
    print(f"{i+1}. Model Type: {model.model_type}, Score ({metric}): {score:.4f}")

# Take the top-ranked model on the leaderboard
best_model = leaderboard[0]
print(f"\nTop-ranked model: {best_model.model_type}")
print(f"Model ID: {best_model.id}")

# Inspect Feature Impact (permutation-based by default) for the best model
feature_impact = best_model.get_or_request_feature_impact()
feature_impact.sort(key=lambda fi: fi['impactNormalized'], reverse=True)
print("\nTop 5 most impactful features for the best model:")
for impact in feature_impact[:5]:
    print(f"- Feature: {impact['featureName']}, Impact Score: {impact['impactNormalized']:.4f}")
```

This script automates the core data science workflow. It points DataRobot to the target, launches the competitive modeling process, and waits for it to finish. Crucially, it then retrieves the leaderboard and ranks the features by impact. For a business user, seeing that a feature such as `days_since_last_order` is the top driver of churn is an immediately actionable insight, without needing to understand how the importance scores are calculated. Note that Feature Impact is permutation-based by default; SHAP (SHapley Additive exPlanations) explanations are a separate option. This mirrors the emphasis that tools such as MLflow and Weights & Biases place on model explainability and tracking as critical components of the MLOps lifecycle.
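One common way to compute a feature impact ranking is permutation importance: shuffle one feature at a time and measure how much the model’s error grows. The sketch below illustrates the idea with NumPy on synthetic data. The fixed scoring rule in `model_predict` is a hypothetical stand-in for a trained model, and nothing here reproduces DataRobot’s internal implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for customer features; not real SAP data.
n = 500
days_since_last_order = rng.uniform(0, 180, n)
support_tickets = rng.integers(0, 10, n).astype(float)
noise_feature = rng.normal(size=n)                    # unrelated to churn
X = np.column_stack([days_since_last_order, support_tickets, noise_feature])
names = ["days_since_last_order", "support_tickets_opened", "noise_feature"]

def model_predict(X):
    """Fixed scoring rule standing in for a trained model (hypothetical)."""
    z = 0.04 * X[:, 0] + 0.2 * X[:, 1] - 4.0
    return 1.0 / (1.0 + np.exp(-z))

# Simulate churn outcomes that follow the scoring rule.
y = (rng.uniform(size=n) < model_predict(X)).astype(float)

def log_loss(y_true, p):
    p = np.clip(p, 1e-15, 1 - 1e-15)
    return float(-np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))

baseline = log_loss(y, model_predict(X))
impact = {}
for j, name in enumerate(names):
    X_perm = X.copy()
    X_perm[:, j] = rng.permutation(X_perm[:, j])      # break the feature-target link
    impact[name] = log_loss(y, model_predict(X_perm)) - baseline

# Normalize so the most impactful feature scores 1.0.
top = max(impact.values())
for name, value in sorted(impact.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {value / top:.3f}")
```

Because the stand-in model never reads `noise_feature`, shuffling it leaves the loss unchanged and its impact is zero, which is the behavior you would hope to see for an irrelevant column.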

Section 3: Deployment, Prediction, and Operationalizing Insights

A trained model is only valuable when it’s integrated into business processes. This is where MLOps (Machine Learning Operations) becomes critical. DataRobot provides a fully managed deployment environment, allowing for one-click (or single-API-call) deployment of any model from the leaderboard. Once deployed, the model is exposed as a REST API endpoint, ready to serve real-time predictions.

Programmatic Deployment and Real-Time Inference

Imagine a scenario where a new sales order is created in SAP. A workflow could be triggered to call our deployed churn model to score that customer in real-time. If the churn risk is high, the system could automatically flag the account for a retention specialist. This seamless integration is key to operationalizing AI. The following code demonstrates how to deploy the best model and then make a prediction for a new customer.

```python
import os

import datarobot as dr
import pandas as pd
import requests

# --- Configuration ---
MODEL_ID = 'YOUR_BEST_MODEL_ID'  # Get this from the previous script
API_TOKEN = os.environ['DATAROBOT_API_TOKEN']

client = dr.Client()

# --- 1. Get the Prediction Server ---
# DataRobot has dedicated prediction servers for low-latency inference.
prediction_server = dr.PredictionServer.list()[0]
print(f"Using prediction server: {prediction_server.url} ({prediction_server.id})")

# --- 2. Deploy the Model ---
# This creates a REST API endpoint for the model.
deployment = dr.Deployment.create_from_learning_model(
    model_id=MODEL_ID,
    label='SAP Churn Prediction API',
    description='Real-time churn scoring for SAP customers',
    default_prediction_server_id=prediction_server.id,
)
print(f"Deployment '{deployment.label}' created with ID: {deployment.id}")
# You might need a short wait here in a real script for the service to spin up.

# --- 3. Prepare New Data for Prediction ---
# This represents a new customer record from your SAP system.
new_customer_data = pd.DataFrame([{
    'customer_id': 1007,
    'avg_order_value': 75.00,
    'orders_last_6_months': 2,
    'days_since_last_order': 150,
    'support_tickets_opened': 6,
}])

# --- 4. Make a Real-Time Prediction ---
# Call the deployment's REST endpoint with the standard 'requests' library.
# URL and response layout follow the DataRobot Prediction API documentation;
# verify both against your own installation.
url = f"{prediction_server.url}/predApi/v1.0/deployments/{deployment.id}/predictions"
headers = {
    'Authorization': f'Bearer {API_TOKEN}',
    'Content-Type': 'application/json',
}
if prediction_server.datarobot_key:  # needed on the managed cloud
    headers['DataRobot-Key'] = prediction_server.datarobot_key

response = requests.post(
    url,
    headers=headers,
    data=new_customer_data.to_json(orient='records'),
    timeout=30,
)
response.raise_for_status()
result = response.json()['data'][0]

# Each row lists one probability per class; pick the positive class (churned == 1).
churn_probability = next(
    pv['value'] for pv in result['predictionValues'] if float(pv['label']) == 1.0
)

print("\n--- Prediction Results ---")
print(f"Predicted label: {result['prediction']}")
print(f"Predicted churn probability for customer 1007: {churn_probability:.2%}")

if churn_probability > 0.6:
    print("Action: Flag customer for immediate retention outreach.")
else:
    print("Action: No immediate action required.")
```

This final piece of the puzzle closes the loop from data to decision. The ability to programmatically deploy and query models is essential for enterprise integration. This workflow can be embedded in middleware connecting to SAP, in a cloud function, or in any application that needs to consume AI-driven insights. The platform can also monitor deployments for data drift and, once actual outcomes are reported back, for accuracy, helping ensure that the model remains effective over time. Platforms such as Azure Machine Learning and AWS SageMaker emphasize the same capability.
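To make the idea of data drift concrete, the sketch below computes the Population Stability Index (PSI), one widely used drift statistic, for a single numeric feature. It is an illustration with NumPy on synthetic data. The function name, the bin count, and the sample distributions are assumptions, and the article does not state which statistic the platform itself uses.

```python
import numpy as np

def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a newer sample of one numeric feature."""
    expected = np.asarray(expected, dtype=float)
    actual = np.asarray(actual, dtype=float)
    # Interior bin edges come from baseline quantiles, so each bin holds
    # roughly equal baseline mass; the outer bins are open-ended.
    inner_edges = np.quantile(expected, np.linspace(0, 1, bins + 1))[1:-1]
    e_counts = np.bincount(np.searchsorted(inner_edges, expected, side="right"), minlength=bins)
    a_counts = np.bincount(np.searchsorted(inner_edges, actual, side="right"), minlength=bins)
    # A small floor avoids log(0) when a bin is empty in either sample.
    e_frac = np.clip(e_counts / len(expected), 1e-6, None)
    a_frac = np.clip(a_counts / len(actual), 1e-6, None)
    return float(np.sum((a_frac - e_frac) * np.log(a_frac / e_frac)))

rng = np.random.default_rng(42)
training_days = rng.uniform(0, 120, 5000)   # days_since_last_order at training time
recent_days = rng.uniform(30, 180, 5000)    # hypothetical shift toward longer gaps

psi_same = population_stability_index(training_days, training_days)
psi_shifted = population_stability_index(training_days, recent_days)
print(f"PSI vs itself: {psi_same:.3f}, PSI vs shifted sample: {psi_shifted:.3f}")
```

A commonly quoted rule of thumb treats PSI values above roughly 0.2 as worth investigating, but the right threshold depends on the feature and on how costly a stale model is for the business process it feeds.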

Section 4: Best Practices and Advanced Considerations

While the core workflow is highly automated, maximizing the value of DataRobot within an SAP environment requires adherence to several best practices and an awareness of more advanced capabilities.

Governance, MLOps, and the Broader AI Ecosystem

- Data Governance and Feature Lists: Before starting a project, collaborate with SAP business analysts to curate the right datasets. Use DataRobot’s feature list management to create specific sets of features for modeling, which helps control for data leakage and ensures compliance with regulations like GDPR.

- Champion/Challenger Models: For critical processes, don’t rely on a single deployed model. Use DataRobot’s MLOps capabilities to set up champion/challenger deployments. The “champion” model serves live predictions, while one or more “challenger” models are scored on the same requests in the background, allowing you to compare candidates on production data before their output affects any business process.

- Integration with Generative AI: The predictive outputs from DataRobot can serve as powerful inputs for generative AI applications. For instance, a high churn score from a DataRobot model could trigger a workflow using a large language model (LLM) from OpenAI or Anthropic, accessed via a framework like LangChain, to automatically draft a personalized retention email for the customer. This combines the strengths of predictive and generative AI.

- Custom Tasks and Extensibility: While AutoML is powerful, DataRobot also allows for the integration of custom models written in Python or R. If you have a highly specialized algorithm developed using TensorFlow or PyTorch, you can upload it into a custom model environment, allowing it to compete on the leaderboard and leverage DataRobot’s MLOps infrastructure. This provides a “best of both worlds” approach, combining automation with customizability.

- Performance and Scalability: For high-throughput prediction needs, prefer DataRobot’s Batch Prediction API over row-by-row real-time calls. Where models must be served from your own infrastructure, for example in a Kubernetes cluster alongside tools such as Ray or the Triton Inference Server, check which model export and serving options your DataRobot installation supports.
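The champion/challenger practice above ultimately comes down to comparing models on the same production requests once actual outcomes are known. The sketch below shows that comparison in plain Python using log loss. The logged probabilities, the outcomes, and the promotion margin are all made-up illustrative values, not platform defaults.

```python
import math

def log_loss(actuals, probabilities):
    """Mean negative log-likelihood for binary outcomes (lower is better)."""
    eps = 1e-15
    total = 0.0
    for y, p in zip(actuals, probabilities):
        p = min(max(p, eps), 1 - eps)   # guard against log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(actuals)

# Hypothetical scored requests, with churn outcomes reported back later.
actuals    = [1, 0, 0, 1, 0, 1, 0, 0]
champion   = [0.70, 0.20, 0.40, 0.55, 0.10, 0.60, 0.30, 0.25]
challenger = [0.85, 0.15, 0.30, 0.70, 0.10, 0.75, 0.20, 0.20]

champion_loss = log_loss(actuals, champion)
challenger_loss = log_loss(actuals, challenger)
min_improvement = 0.02   # assumed promotion margin, not a platform default

promote = (champion_loss - challenger_loss) > min_improvement
print(f"Champion LogLoss:   {champion_loss:.4f}")
print(f"Challenger LogLoss: {challenger_loss:.4f}")
print("Promote challenger" if promote else "Keep champion")
```

Requiring a minimum margin rather than any improvement at all helps avoid swapping models on noise, and in practice the comparison should run over far more than eight requests.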

By thinking about these factors, organizations can move from ad-hoc projects to a scalable, governed, and enterprise-grade AI factory built on top of their existing SAP investment. The SAP-specific suites underscore the platform’s commitment to meeting these enterprise needs head-on.

Conclusion: A New Paradigm for Business-Led AI

The launch of DataRobot’s AI Application Suites for SAP marks a pivotal moment in the journey toward the intelligent enterprise. By abstracting the immense complexity of the machine learning lifecycle, DataRobot empowers the business users and analysts who understand the data best to solve their own problems, transforming them from passive consumers of reports into active creators of AI-driven value. The programmatic access via the Python API, as demonstrated, ensures that these solutions can be tightly integrated, automated, and governed within an enterprise IT landscape.

For developers and data professionals, this shift doesn’t remove their role but elevates it. Instead of spending months on manual modeling, their focus can shift to higher-value activities: curating high-quality data pipelines from SAP, architecting robust integrations, governing the AI lifecycle, and exploring novel use cases that combine predictive analytics with the latest generative AI from providers such as OpenAI and Hugging Face. The future of business innovation lies in this collaboration between domain experts and AI platforms, and with these new tools, that future is more accessible than ever for the millions of users within the SAP ecosystem.