Beyond Dense Vectors: The Rise of ColBERT and Late-Interaction Models in the Sentence Transformers Ecosystem

The world of natural language processing is in a constant state of flux, with new architectures and techniques emerging at a breathtaking pace. For years, the Sentence Transformers library has been the undisputed champion for creating dense vector embeddings, powering countless semantic search, clustering, and information retrieval applications. This bi-encoder approach, which condenses the meaning of a sentence or document into a single high-dimensional vector, offered a revolutionary blend of speed and semantic understanding. However, as the demand for precision and nuance in search grows, the limitations of single-vector representations are becoming more apparent. This is where a new paradigm, late-interaction models, is making significant waves, and recent developments are bringing this power directly into the familiar Sentence Transformers ecosystem.

This shift represents a pivotal moment in Hugging Face News and the broader NLP community. While bi-encoders excel at capturing general semantic gist, they can sometimes struggle with queries that depend on specific keywords or fine-grained details. Late-interaction models, exemplified by ColBERT (Contextualized Late Interaction over BERT), address this by deferring the similarity calculation. Instead of comparing two single vectors, they compare the token-level embeddings of the query and the document, preserving a much richer level of detail. This article dives deep into this exciting evolution, exploring how ColBERT is being integrated into modern search pipelines, providing practical code examples, and discussing the implications for the future of information retrieval and Retrieval-Augmented Generation (RAG).

From Single Vectors to Rich Representations: A Search Paradigm Shift

To appreciate the significance of late-interaction models, it’s essential to first understand the foundation they are building upon: the bi-encoder architecture popularized by Sentence Transformers.

The Classic Bi-Encoder Approach

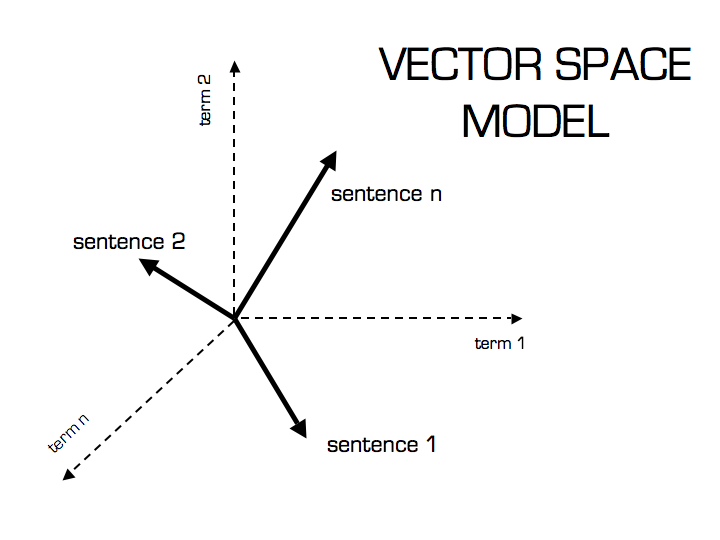

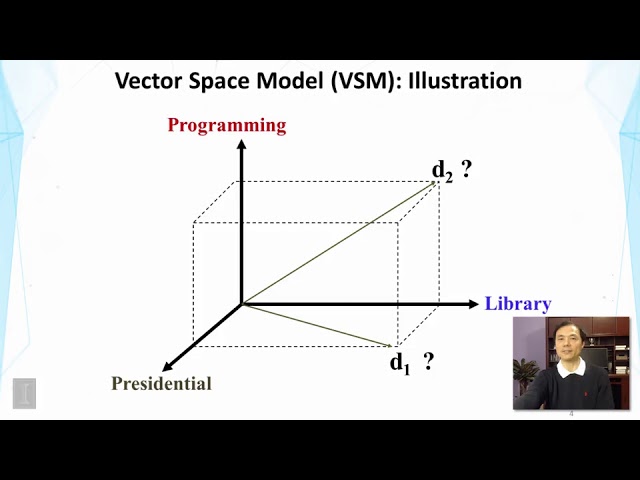

The bi-encoder model is elegant in its simplicity. It uses a Transformer model (like BERT or RoBERTa) with a pooling layer to map a piece of text to a fixed-size vector. During a search operation, the query and all documents in the corpus are independently encoded into these vectors. The retrieval process then becomes a highly efficient nearest neighbor search in this vector space, typically using cosine similarity.

This is the workhorse of modern semantic search and is incredibly fast at query time because the expensive document encoding is done offline. Frameworks built on PyTorch News and TensorFlow News have made this process highly optimized. Here’s a classic example using the sentence-transformers library:

from sentence_transformers import SentenceTransformer, util

import torch

# Load a pre-trained bi-encoder model

model = SentenceTransformer('all-MiniLM-L6-v2')

# Corpus of documents

documents = [

"The James Webb Space Telescope has captured stunning new images of the Pillars of Creation.",

"Data analysis shows that Python remains the most popular programming language for data science.",

"The new electric vehicle boasts a range of over 400 miles on a single charge.",

"Researchers are using machine learning to predict protein folding structures."

]

# 1. Encode the entire corpus into dense vectors (done once, offline)

document_embeddings = model.encode(documents, convert_to_tensor=True)

# 2. Encode the user query at search time

query = "What is the latest news in astronomy?"

query_embedding = model.encode(query, convert_to_tensor=True)

# 3. Compute cosine similarity between the query and all documents

cosine_scores = util.cos_sim(query_embedding, document_embeddings)

# 4. Find the most similar document

best_doc_index = torch.argmax(cosine_scores)

print(f"Query: {query}")

print(f"Most similar document: '{documents[best_doc_index]}' with a score of {cosine_scores[0][best_doc_index]:.4f}")Introducing ColBERT: The Power of Late Interaction

ColBERT challenges the core assumption of the bi-encoder. Instead of compressing everything into one vector, it generates a contextualized vector embedding for *every token* in the document and the query. This results in a matrix of embeddings (a “bag of embeddings”) for each text.

The “late interaction” happens at query time. For each query token embedding, the model finds the most similar document token embedding (maximum similarity). These maximum scores are then summed up to produce the final relevance score for the document. This MaxSim operator allows ColBERT to excel at queries where specific terms are critical, as it can match “James Webb” in the query directly to “James Webb” in the document at a token level, a nuance that might be diluted in a single sentence-level vector.

This approach offers a superior trade-off between the full-interaction of cross-encoders (highly accurate but extremely slow) and the efficiency of bi-encoders. It provides a significant accuracy boost while maintaining manageable query latencies.

Practical Implementation: Building a ColBERT Search Pipeline

The theoretical advantages of ColBERT are compelling, but its adoption has been historically hampered by implementation complexity. This is changing rapidly, with new libraries designed to make ColBERT as accessible as traditional Sentence Transformers. One such library is neural-cherche, which provides a high-level API for building state-of-the-art retrieval systems.

Setting Up Your ColBERT Retriever

Getting started is straightforward. First, you need to install the necessary libraries. This new wave of tools often integrates seamlessly with the Hugging Face Transformers News ecosystem.

# Install the necessary libraries

pip install neural-cherche

pip install sentence-transformers torchIndexing and Searching Documents with ColBERT

With the environment set up, you can initialize a ColBERT retriever and start indexing your documents. The library handles the complex token-level embedding generation and indexing process behind the scenes, often leveraging highly optimized backends like FAISS News for the initial candidate selection phase of the search.

The following example demonstrates how to build a complete search pipeline, from indexing to querying, in just a few lines of code.

from neural_cherche import models, retrieve

# Corpus of documents

documents = [

{"id": "doc1", "text": "The James Webb Space Telescope (JWST) is a space telescope designed primarily to conduct infrared astronomy."},

{"id": "doc2", "text": "Python's popularity in data science is driven by libraries like Pandas, NumPy, and Scikit-learn."},

{"id": "doc3", "text": "The new Lucid Air EV has an EPA estimated range of 520 miles, a new industry benchmark."},

{"id": "doc4", "text": "AlphaFold, a model from Google DeepMind, revolutionized the field of protein structure prediction."},

{"id": "doc5", "text": "The Hubble Space Telescope, a predecessor to JWST, has been in orbit for over 30 years."}

]

# 1. Initialize a ColBERT model

# This will download the model from the Hugging Face Hub

colbert_model = models.ColBERT(

model_name_or_path='colbert-ir/colbertv2.0',

device='cuda' if torch.cuda.is_available() else 'cpu'

)

# 2. Initialize the retriever pipeline

retriever = retrieve.ColBERT(

key="id",

on=["text"],

model=colbert_model

)

# 3. Index the documents

# This step generates and stores the token-level embeddings

retriever.add(documents=documents)

# 4. Perform a search

query = "What telescope is the successor to Hubble?"

results = retriever(q=query, k=3) # Retrieve top 3 documents

# Print the results

print(f"Query: '{query}'\n")

for result in results:

print(f"ID: {result['id']}, Score: {result['score']:.4f}")

print(f"Text: {result['text']}\n")

This code abstracts away the complexity of the MaxSim operation and the underlying vector indexing. It provides a clean, user-friendly interface that feels familiar to anyone who has worked with libraries like Scikit-learn or Sentence Transformers, democratizing access to this powerful technology. This ease of use is a major driver of recent Sentence Transformers News.

Advanced Techniques and Ecosystem Integration

The true power of modern retrieval systems lies not in a single algorithm but in their ability to be combined and integrated into larger applications, particularly within the booming field of RAG, which is a focus of recent LangChain News and LlamaIndex News.

Hybrid Search: The Best of Both Worlds

While ColBERT is highly precise, it can be more computationally intensive than a dense retriever. A common and powerful pattern is to implement a hybrid or multi-stage search pipeline. You can use a fast, traditional method like BM25 or a bi-encoder to retrieve a large set of candidate documents (e.g., top 100), and then use a more sophisticated model like ColBERT to re-rank these candidates for maximum precision.

This approach balances speed and accuracy, ensuring a responsive system that doesn’t sacrifice quality. Frameworks like Haystack News are increasingly offering built-in support for these multi-stage retrieval pipelines, acknowledging that no single retriever is perfect for all scenarios.

Integration with RAG Frameworks for Smarter LLMs

Retrieval-Augmented Generation (RAG) is the dominant architecture for building LLM-based applications that can reason over private or real-time data. The quality of the retrieval step is paramount to the final output quality—garbage in, garbage out. Using a high-precision retriever like ColBERT can dramatically improve the context provided to the LLM, reducing hallucinations and leading to more accurate, factual answers.

Here’s a conceptual example of how you might integrate a ColBERT retriever into a RAG pipeline using a framework like LangChain:

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.runnables import RunnablePassthrough

from langchain_openai import ChatOpenAI

# Assume 'retriever' is the initialized neural_cherche ColBERT retriever from the previous example

# Define a custom retrieval function for LangChain

def retrieve_documents(query: str):

results = retriever(q=query, k=3)

return "\n\n".join([doc['text'] for doc in results])

# Initialize a powerful LLM (e.g., from OpenAI, Anthropic, or Cohere)

llm = ChatOpenAI(model="gpt-4o")

# Create the RAG prompt template

template = """

Answer the question based only on the following context:

{context}

Question: {question}

"""

prompt = ChatPromptTemplate.from_template(template)

# Build the RAG chain

rag_chain = (

{"context": retrieve_documents, "question": RunnablePassthrough()}

| prompt

| llm

)

# Invoke the chain

query = "What is the main difference between the JWST and Hubble telescopes?"

response = rag_chain.invoke(query)

print(response.content)

By swapping out a standard vector store retriever with a ColBERT-powered one, you provide the LLM with more relevant, keyword-aware context, directly improving the quality of its generated response. This is a critical insight for developers working with platforms like Amazon Bedrock News or Azure AI News.

Best Practices, Optimization, and the Road Ahead

Adopting ColBERT and late-interaction models requires a shift in thinking. Here are some key considerations for developers and MLOps engineers.

When to Choose ColBERT

- High-Precision Needs: Use ColBERT in applications where keyword matching and fine-grained relevance are critical, such as legal document search, technical support knowledge bases, or e-commerce product search.

- Complex Queries: It excels at handling long, specific queries that contain multiple distinct concepts.

- RAG Systems: It’s an excellent choice for the retriever component in a RAG pipeline to ensure the context fed to the LLM is of the highest possible quality.

- When to Stick with Bi-Encoders: For applications that require extremely low latency and where general semantic meaning is sufficient (e.g., finding broadly related articles), a classic bi-encoder might still be the more pragmatic choice.

Performance and Scaling

The primary cost of ColBERT is at query time due to the late-interaction mechanism. To scale, consider:

- Hardware Acceleration: Utilize GPUs for inference. The latest developments in NVIDIA AI News, including libraries like TensorRT, can significantly accelerate these models.

- Model Quantization: Techniques like converting models to ONNX News format or using OpenVINO News can reduce model size and latency with minimal impact on accuracy.

- Efficient Indexing: While the library handles much of this, understanding the underlying vector stores is key. The landscape of vector databases, including Milvus News, Pinecone News, Weaviate News, and Qdrant News, is evolving rapidly to better support these complex representations.

- Inference Servers: For production deployment, using a dedicated service like Triton Inference Server or a cloud platform like AWS SageMaker or Vertex AI is essential for managing and scaling the model endpoints.

Conclusion: A New Chapter for Semantic Search

The integration of ColBERT and other late-interaction models into the mainstream NLP toolkit marks a significant step forward. We are moving beyond the era where semantic search was synonymous with a single, dense vector. The latest Sentence Transformers News reflects a maturation of the field, acknowledging that a one-size-fits-all approach is insufficient for the diverse and demanding search problems of today.

By preserving token-level details, these models offer a new level of precision that bridges the gap between the speed of bi-encoders and the accuracy of cross-encoders. Thanks to new, user-friendly libraries, this cutting-edge technology is no longer confined to research labs. Developers can now easily build and deploy sophisticated, hybrid retrieval systems that power more intelligent search applications and smarter RAG pipelines. As the AI community, from Meta AI News to the open-source world, continues to innovate, the fusion of different retrieval paradigms will undoubtedly become the new standard for state-of-the-art information access.