The Evolution of AutoML: From Automated Pipelines to Integrated MLOps and Real-Time Insights

The Next Wave of Automated Machine Learning: Smarter, Faster, and More Integrated

Automated Machine Learning (AutoML) has rapidly evolved from a niche academic concept into a cornerstone of modern data science and enterprise AI. Initially celebrated for automating the tedious tasks of model selection and hyperparameter tuning, AutoML is now shifting towards more holistic, integrated, and production-ready solutions. Today’s leading AutoML platforms are no longer just about finding the best algorithm; they aim to simplify the entire machine learning lifecycle, from data preparation and feature engineering to real-time model monitoring and robust MLOps integration. This evolution is driven by the need for organizations to deploy reliable, scalable, and transparent AI models that deliver tangible business value, a trend reflected in recent updates from major cloud providers and open-source communities.

As the AI landscape matures, we see a convergence of technologies. Recent developments in Vertex AI and AWS SageMaker show a deep focus on end-to-end automation, incorporating everything from data validation to explainability reports. Simultaneously, open-source projects such as Optuna and MLflow are giving data scientists more granular control and reproducibility. This article explores the current state of AutoML, diving into its core components, practical implementations, advanced techniques, and the best practices required to harness its full potential in a rapidly changing AI ecosystem.

Section 1: The Core Engine of AutoML: Automating the ML Pipeline

At its heart, AutoML aims to automate the repetitive and often intuition-driven steps of building a machine learning model. A typical ML workflow involves several stages, and AutoML tools are designed to intelligently navigate this complex search space to find an optimal solution. The primary components that AutoML automates include Data Preprocessing, Feature Engineering, Model Selection, and Hyperparameter Optimization (HPO).

Key Components of an AutoML System

- Data Preprocessing: This involves cleaning the data, handling missing values (imputation), and encoding categorical variables (e.g., one-hot encoding, label encoding). AutoML systems test various strategies to see which yields the best performance.

- Feature Engineering & Selection: The system automatically generates new features from existing ones (e.g., polynomial features, interaction terms) and selects the most relevant features to prevent overfitting and reduce model complexity.

- Model Selection: This is the classic AutoML task where the system trains and evaluates a wide range of algorithms—from logistic regression and random forests to gradient boosting machines (like XGBoost and LightGBM) and even simple neural networks.

- Hyperparameter Optimization (HPO): For each selected model, AutoML fine-tunes its hyperparameters (e.g., learning rate, number of trees) using sophisticated search strategies like Bayesian optimization, genetic algorithms, or successive halving.
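Of the search strategies named in the last bullet, successive halving is the easiest to show in a few lines. The sketch below is a minimal, library-free illustration, not how any particular AutoML tool implements it. The `toy_loss` function is a hypothetical stand-in for "train this configuration for `budget` epochs and report the validation loss".

```python
import random


def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Keep the best 1/eta of the configs each round while multiplying the budget by eta."""
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        # Score every surviving config at the current budget (lower loss is better)
        scored = sorted(survivors, key=lambda cfg: evaluate(cfg, budget))
        keep = max(1, len(scored) // eta)
        survivors = scored[:keep]
        budget *= eta
    return survivors[0]


def toy_loss(config, budget):
    """Hypothetical stand-in for training with `config` for `budget` epochs."""
    # Loss is lowest near learning_rate = 0.1 and shrinks as the budget grows
    return abs(config["learning_rate"] - 0.1) + 1.0 / budget


rng = random.Random(0)
candidates = [{"learning_rate": 10 ** rng.uniform(-4, 0)} for _ in range(16)]
best = successive_halving(candidates, toy_loss)
print(best)
```

The point of the design is that weak configurations are discarded after a small budget, so most of the compute goes to the few candidates that still look promising.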

Open-source libraries like TPOT (Tree-based Pipeline Optimization Tool) excel at this by using genetic programming to optimize a series of preprocessing and modeling steps simultaneously, effectively finding the best end-to-end pipeline for a given dataset.
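The idea of searching preprocessing and modeling steps together can be sketched with plain scikit-learn. Note that this example swaps in an exhaustive grid search for TPOT's genetic programming, and the search space is a small, hand-picked assumption chosen only to keep the run short.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import SelectKBest
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# One pipeline object covers preprocessing, feature selection, and the model
pipeline = Pipeline([
    ("impute", SimpleImputer()),
    ("scale", StandardScaler()),
    ("select", SelectKBest()),
    ("model", LogisticRegression(max_iter=1000)),
])

# Each dict swaps in a different model and tunes it together with the earlier steps
search_space = [
    {
        "impute__strategy": ["mean", "median"],
        "select__k": [2, 4],
        "model": [LogisticRegression(max_iter=1000)],
        "model__C": [0.1, 1.0, 10.0],
    },
    {
        "impute__strategy": ["mean", "median"],
        "select__k": [2, 4],
        "model": [RandomForestClassifier(random_state=0)],
        "model__n_estimators": [50, 100],
    },
]

search = GridSearchCV(pipeline, search_space, cv=5)
search.fit(X, y)
print(search.best_params_)
print(f"Best cross-validated accuracy: {search.best_score_:.3f}")
```

A grid like this grows multiplicatively with every added option, which is why AutoML tools rely on smarter strategies such as genetic programming or Bayesian optimization.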

Practical Example: Finding an Optimal Pipeline with TPOT

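Let’s see how TPOT searches for a good pipeline on the classic Iris dataset. With the settings below (5 generations and a population of 50), TPOT evaluates roughly 300 candidate pipelines; larger settings push that into the thousands. This example showcases the power of automating the entire workflow, not just tuning a single model.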

```python
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from tpot import TPOTClassifier

# Note: written against the classic TPOT (0.x) API;
# newer releases may use different argument names.

# Load the dataset
iris = load_iris()
X = pd.DataFrame(iris.data, columns=iris.feature_names)
y = iris.target

# Split the data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, train_size=0.75, test_size=0.25, random_state=42
)

# Initialize the TPOTClassifier
# generations: Number of iterations to run the genetic algorithm
# population_size: Number of individuals to keep in each generation
# verbosity: How much information to print during the process
# random_state: For reproducibility
tpot = TPOTClassifier(generations=5, population_size=50, verbosity=2, random_state=42)

# Fit TPOT on the training data
tpot.fit(X_train, y_train)

# Evaluate the best pipeline on the test set
print(f"Test set score: {tpot.score(X_test, y_test)}")

# Export the Python code for the best pipeline found
tpot.export('tpot_iris_pipeline.py')
print("\nBest pipeline code has been exported to tpot_iris_pipeline.py")
```

After running, TPOT not only gives you a high-performing model but also generates the Python code for the entire pipeline, promoting transparency and making it easy to deploy the solution. This is a foundational concept that commercial platforms like DataRobot and H2O.ai have built upon.

Section 2: Enterprise-Grade AutoML on Cloud Platforms

While open-source libraries are powerful, enterprises often require more scalable, managed, and integrated solutions. This is where cloud-based AutoML services from Google, Amazon, and Microsoft shine. These platforms abstract away the underlying infrastructure, allowing teams to build and deploy models without managing servers or clusters. Recent updates to Azure Machine Learning and the other major cloud platforms show a clear trend towards unifying AutoML with broader MLOps capabilities.

Leading Cloud AutoML Offerings

- Google Cloud Vertex AI AutoML: Part of the unified Vertex AI platform, it provides a simple GUI-based workflow for training models on tabular, image, text, and video data. The same workflow is available through the SDK, which handles infrastructure provisioning, training, and deployment in a handful of API calls.

- Amazon SageMaker Autopilot: As a feature within AWS SageMaker, Autopilot automatically inspects your data, generates candidate pipelines, and runs them in parallel to find the best model. It also generates an explainability report and a notebook detailing the entire process for full transparency.

- Azure Machine Learning (Automated ML): Azure’s offering is tightly integrated into its ML workspace, allowing for seamless transition from an AutoML-generated model to a custom-developed one. It emphasizes responsible AI features, providing tools for interpretability and fairness assessment.

These platforms are not just black boxes. They provide detailed leaderboards, model explanations, and the ability to export the winning model or the code that generated it, giving teams both automation and control.

Practical Example: Launching a Vertex AI AutoML Job

Below is a conceptual Python snippet using the Vertex AI SDK for Python (the `google-cloud-aiplatform` package) to demonstrate how a tabular classification job is initiated. This abstracts the complexity of distributed training and hyperparameter search into a few lines of code.

```python
from google.cloud import aiplatform

# Initialize the Vertex AI SDK
aiplatform.init(project='your-gcp-project-id', location='us-central1')

# First, create a dataset in Vertex AI from a GCS source
# This can also be done via the UI
dataset = aiplatform.TabularDataset.create(
    display_name="my-tabular-dataset",
    gcs_source=['gs://your-bucket/your-data.csv'],
)

# Configure the AutoML training job
# This defines the task type and the metric to optimize
job = aiplatform.AutoMLTabularTrainingJob(
    display_name="train-my-classifier",
    optimization_prediction_type="classification",
    optimization_objective="maximize-au-prc",
)

# Run the training job
# target_column is the name of the column you want to predict
# The three *_fraction_split values set the train/validation/test split explicitly
model = job.run(
    dataset=dataset,
    target_column="target_variable_name",
    training_fraction_split=0.8,
    validation_fraction_split=0.1,
    test_fraction_split=0.1,
    model_display_name="my-automl-model",
    budget_milli_node_hours=1000,  # 1,000 milli node hours = 1 node hour
)

# After training, you can deploy the model to an endpoint
endpoint = model.deploy(machine_type="n1-standard-4")
print(f"Model deployed to endpoint: {endpoint.resource_name}")
```

This approach democratizes access to powerful ML capabilities, enabling teams with less ML expertise to build high-quality models. Offerings such as Snowflake Cortex also point to the trend of bringing these automated capabilities directly into data warehouses, further simplifying the workflow.

Section 3: Advanced Techniques and MLOps Integration

The frontier of AutoML is pushing beyond standard tabular data and into more complex domains like computer vision and natural language processing. This involves advanced techniques like Neural Architecture Search (NAS), which automates the design of neural network architectures. Furthermore, the most significant recent trend is the deep integration of AutoML with MLOps tools to ensure that models are not just built but are also reproducible, monitored, and maintained in production.

Neural Architecture Search (NAS)

NAS uses optimization algorithms to search for the best-performing neural network architecture for a specific task. Instead of just tuning hyperparameters like the learning rate, NAS explores different combinations of layers, activation functions, and connection patterns. Architectures discovered this way, such as Google’s NAS-FPN for object detection, show how the approach can outperform manually designed networks. NAS remains an active research area, with much of the current work aimed at making the search more computationally efficient.
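To make the idea of an architecture search space concrete, here is a deliberately tiny sketch: a random search over the depth, width, and activation of a scikit-learn `MLPClassifier`. Real NAS systems use far larger search spaces and smarter strategies (reinforcement learning, evolution, or gradient-based methods); the search space, trial count, and dataset below are assumptions chosen only so the example runs in seconds.

```python
import random

from sklearn.datasets import load_digits
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

X, y = load_digits(return_X_y=True)
X = X / 16.0  # pixel intensities in this dataset range from 0 to 16
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# Hypothetical, hand-picked search space
DEPTHS = [1, 2, 3]
WIDTHS = [16, 32, 64]
ACTIVATIONS = ["relu", "tanh"]


def sample_architecture(rng):
    """Draw one architecture: a tuple of layer widths plus an activation."""
    depth = rng.choice(DEPTHS)
    return {
        "hidden_layer_sizes": tuple(rng.choice(WIDTHS) for _ in range(depth)),
        "activation": rng.choice(ACTIVATIONS),
    }


rng = random.Random(0)
best_arch, best_score = None, -1.0
for _ in range(6):
    arch = sample_architecture(rng)
    model = MLPClassifier(max_iter=300, random_state=0, **arch)
    model.fit(X_train, y_train)
    score = model.score(X_val, y_val)  # validation accuracy
    if score > best_score:
        best_arch, best_score = arch, score

print(best_arch, round(best_score, 3))
```

Each trial here trains a network from scratch, which is exactly the cost that efficiency-focused NAS research tries to reduce.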

Integrating AutoML with MLOps for Robustness

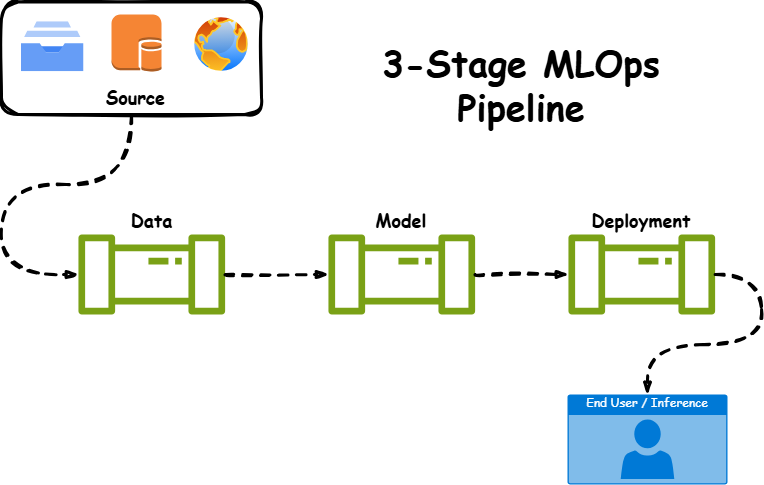

A model is only useful if it can be reliably deployed and maintained. This is where MLOps comes in. Modern AutoML workflows are increasingly integrated with tools for experiment tracking, model versioning, and monitoring.

- Experiment Tracking: Tools like MLflow, Weights & Biases, and Comet ML are used to log every AutoML run, including the dataset version, parameters, performance metrics, and the resulting model artifact. This ensures full reproducibility.

- Model Registry: Once a satisfactory model is found, it’s stored in a central model registry (a feature of MLflow, SageMaker, and Vertex AI) where it can be versioned and staged for deployment.

- CI/CD for ML: AutoML can be a step in a larger CI/CD pipeline. For example, a new push to a Git repository could trigger an AutoML job to retrain a model on new data, with the best model being automatically promoted to a staging environment.
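The automatic promotion step in the last bullet usually comes down to a simple gate: promote the best retrained candidate only if it beats the model currently in production by some margin. The sketch below shows that logic in plain Python. The `ModelCandidate` class, the `select_for_staging` function, the run names, and all metric values are hypothetical; in a real pipeline they would come from your experiment tracker and model registry.

```python
from dataclasses import dataclass


@dataclass
class ModelCandidate:
    name: str
    validation_auc: float


def select_for_staging(candidates, production_auc, min_gain=0.005):
    """Return the best candidate if it beats production by at least min_gain, else None."""
    best = max(candidates, key=lambda c: c.validation_auc)
    if best.validation_auc >= production_auc + min_gain:
        return best
    return None


# Hypothetical leaderboard produced by a retraining run
leaderboard = [
    ModelCandidate("lightgbm_run_17", 0.941),
    ModelCandidate("random_forest_run_04", 0.927),
    ModelCandidate("logreg_run_01", 0.903),
]

promoted = select_for_staging(leaderboard, production_auc=0.930)
print(promoted.name if promoted else "keep current production model")
```

Requiring a minimum gain avoids churning the production model over differences that are within noise.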

Practical Example: Hyperparameter Tuning with Optuna and MLflow

For scenarios requiring more control than fully automated platforms, libraries like Optuna offer a powerful framework for hyperparameter optimization. Here’s how you can use Optuna to find the best hyperparameters for a LightGBM model and log the results with MLflow for tracking.

```python
import lightgbm as lgb
import mlflow
import optuna
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Load data
X, y = load_breast_cancer(return_X_y=True)
X_train, X_val, y_train, y_val = train_test_split(X, y, random_state=42)

# Set the experiment name for MLflow once, outside the objective
mlflow.set_experiment("LGBM_Optuna_Tuning")


# Define the objective function for Optuna to optimize
def objective(trial):
    # Each trial is logged as a child of the parent run opened below
    with mlflow.start_run(nested=True):
        # Suggest hyperparameters for the trial
        params = {
            'objective': 'binary',
            'metric': 'binary_logloss',
            'verbosity': -1,
            'boosting_type': 'gbdt',
            'lambda_l1': trial.suggest_float('lambda_l1', 1e-8, 10.0, log=True),
            'lambda_l2': trial.suggest_float('lambda_l2', 1e-8, 10.0, log=True),
            'num_leaves': trial.suggest_int('num_leaves', 2, 256),
            'feature_fraction': trial.suggest_float('feature_fraction', 0.4, 1.0),
            'bagging_fraction': trial.suggest_float('bagging_fraction', 0.4, 1.0),
            'bagging_freq': trial.suggest_int('bagging_freq', 1, 7),
            'min_child_samples': trial.suggest_int('min_child_samples', 5, 100),
        }

        # Log parameters with MLflow
        mlflow.log_params(params)

        # Train the model; recent LightGBM releases configure early stopping
        # through a callback instead of fit() keyword arguments
        model = lgb.LGBMClassifier(**params)
        model.fit(
            X_train, y_train,
            eval_set=[(X_val, y_val)],
            callbacks=[lgb.early_stopping(stopping_rounds=50, verbose=False)],
        )

        # Make predictions and calculate AUC
        preds = model.predict_proba(X_val)[:, 1]
        auc = roc_auc_score(y_val, preds)

        # Log metric with MLflow
        mlflow.log_metric("validation_auc", auc)

    return auc


# Create an Optuna study and run the optimization
# We want to maximize the AUC, so the direction is 'maximize'
study = optuna.create_study(direction='maximize')
with mlflow.start_run(run_name="optuna_study"):
    study.optimize(objective, n_trials=50)

# Print the best trial results
print("Number of finished trials: ", len(study.trials))
print("Best trial:")
trial = study.best_trial
print(" Value: ", trial.value)
print(" Params: ")
for key, value in trial.params.items():
    print(f" {key}: {value}")
```

This hybrid approach, combining optimization libraries like Optuna with MLOps platforms like MLflow, is a practical way to implement AutoML when you need both automation and fine-grained control.

Section 4: Best Practices, Pitfalls, and the Future Outlook

While AutoML is incredibly powerful, it’s not a magic bullet. Applying it effectively requires a strategic approach and an awareness of its limitations. Adhering to best practices ensures that the automated process yields results that are not only accurate but also reliable, interpretable, and cost-effective.

Best Practices for Using AutoML

- Understand Your Data First: Garbage in, garbage out. AutoML cannot fix fundamental data quality issues. Perform thorough exploratory data analysis (EDA) to understand distributions, identify outliers, and formulate a clear problem statement before handing the data over to an automated tool.

- Start with a Simple Baseline: Before running a complex and potentially expensive AutoML job, establish a simple baseline model (e.g., Logistic Regression). This provides a benchmark to judge whether the complexity introduced by AutoML is justified.

- Use a “Human-in-the-Loop” Approach: Don’t treat AutoML as a black box. Use the leaderboards and model explanations it provides to understand *why* a certain model was chosen. This insight can help you refine your feature set or even guide future manual modeling efforts.

- Manage Costs: AutoML, especially on the cloud, can be computationally expensive. Set clear budgets (e.g., `budget_milli_node_hours` in Vertex AI) and time limits for your jobs to avoid unexpected costs.

- Focus on Interpretability: For many applications (e.g., finance, healthcare), a slightly less accurate but highly interpretable model is better than a high-performing black box. Leverage the explainability (XAI) features built into modern AutoML platforms.
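The baseline advice above is cheap to follow in practice. As a minimal sketch, the snippet below records two reference points before any AutoML job is launched: a majority-class predictor and a scaled logistic regression. The dataset, metric, and fold count are assumptions for illustration; use whatever matches your own problem.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

baselines = {
    # Always predicts the most common class; any useful model must beat this
    "majority_class": DummyClassifier(strategy="most_frequent"),
    # Simple, interpretable reference model
    "logistic_regression": make_pipeline(
        StandardScaler(), LogisticRegression(max_iter=1000)
    ),
}

baseline_auc = {}
for name, model in baselines.items():
    scores = cross_val_score(model, X, y, cv=5, scoring="roc_auc")
    baseline_auc[name] = scores.mean()
    print(f"{name}: mean ROC AUC = {baseline_auc[name]:.3f}")
```

If an AutoML run improves on the logistic regression score only marginally, the simpler and more interpretable model may be the better choice.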

The Future: AutoML Meets Foundation Models

The future of AutoML is being shaped by the rise of Large Language Models (LLMs) and foundation models. We are seeing a new paradigm where AutoML techniques are used to fine-tune massive pre-trained models hosted on hubs like Hugging Face. Work from labs such as OpenAI and Anthropic points to a future where models can be adapted to specific tasks with minimal data, using automated prompt engineering and parameter-efficient fine-tuning (PEFT) techniques. Frameworks like LangChain and LlamaIndex are already building ecosystems for applications on top of these models, and it’s only a matter of time before these capabilities are seamlessly integrated into mainstream AutoML platforms.
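A quick calculation shows why parameter-efficient fine-tuning matters. The sketch below uses low-rank adaptation (LoRA), one common PEFT technique that is named here as an illustration rather than taken from the discussion above. LoRA freezes a weight matrix of shape `d_out x d_in` and trains only two small factors of rank `r`. The layer shape and rank are hypothetical.

```python
def full_params(d_out, d_in):
    """Weights updated when fine-tuning the whole matrix."""
    return d_out * d_in


def lora_trainable_params(d_out, d_in, rank):
    """Weights in the two low-rank factors: B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Hypothetical layer shape and rank
d_out, d_in, rank = 4096, 4096, 8

full = full_params(d_out, d_in)
lora = lora_trainable_params(d_out, d_in, rank)

print(f"Full fine-tuning: {full:,} trainable weights")
print(f"LoRA (rank {rank}): {lora:,} trainable weights ({lora / full:.2%} of full)")
```

Because the trainable footprint is this small, rank and similar settings become ordinary hyperparameters that an automated search can explore cheaply.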

Conclusion: AutoML as a Strategic Enabler

The narrative around AutoML has matured. It is no longer about replacing data scientists but about augmenting them, freeing them from repetitive tasks to focus on higher-value activities like problem formulation, creative feature engineering, and interpreting model results for business impact. Recent developments clearly indicate a trajectory towards deeper integration with the MLOps lifecycle, greater transparency, and broader applicability across different data types.

By leveraging modern AutoML platforms and libraries—from open-source powerhouses like TPOT and Optuna to enterprise-grade cloud services like Vertex AI and SageMaker Autopilot—organizations can significantly accelerate their AI development cycles. The key to success lies in adopting a strategic, human-in-the-loop approach, focusing on robust MLOps practices, and staying informed about the rapid advancements in the field. As automation becomes more intelligent and integrated, it solidifies its role as an indispensable tool for any team looking to build and deploy AI at scale.