Building Advanced RAG Pipelines with Haystack: A Deep Dive into LLM-Powered News Analysis

Introduction to Production-Ready LLM Applications with Haystack

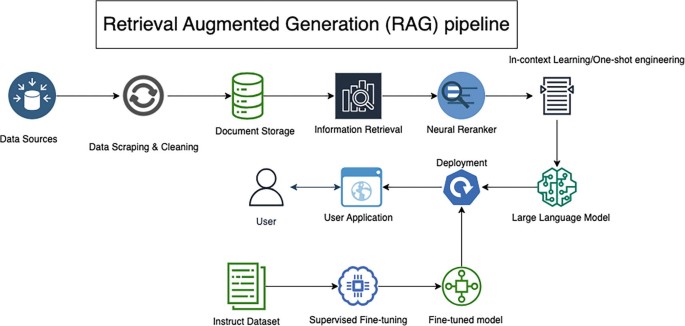

In the rapidly evolving landscape of Large Language Models (LLMs), moving from a promising prototype to a robust, production-ready application is a significant challenge. While models from providers like OpenAI, Anthropic, and Mistral AI offer incredible generative capabilities, harnessing them effectively requires a structured framework. This is where Haystack, an open-source Python framework by deepset, truly shines. Haystack provides the tools to build sophisticated, scalable, and maintainable LLM applications, specializing in Retrieval-Augmented Generation (RAG) systems that connect powerful models to your private data.

Unlike monolithic approaches, Haystack champions a modular, pipeline-centric philosophy. It allows developers to compose complex workflows by connecting independent, reusable components in a directed acyclic graph (DAG). This design promotes flexibility, enabling seamless integration with a vast ecosystem of tools. Whether you’re leveraging embedding models from the Hugging Face Transformers News hub, storing vectors in databases like Pinecone News or Milvus News, or deploying on platforms such as AWS SageMaker News, Haystack provides the necessary abstractions. In this article, we will embark on a deep dive into Haystack, using the practical goal of building an intelligent news analysis and question-answering system to explore its core concepts, advanced features, and best practices for production deployment.

Section 1: The Core of Haystack – Understanding Pipelines and Components

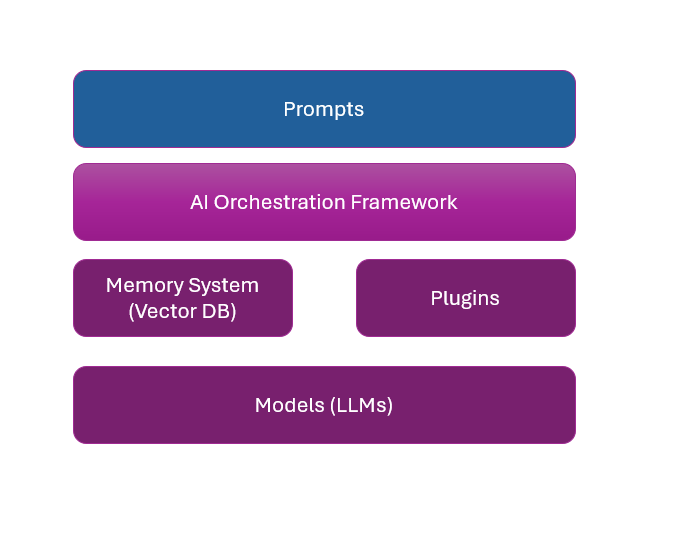

At its heart, Haystack is built on two fundamental concepts: Components and Pipelines. Understanding how these interact is the key to mastering the framework and building powerful, custom applications. A Pipeline is a graph that defines the flow of data, while Components are the nodes in that graph, each performing a specific, atomic task.

Understanding the Haystack Pipeline

A Haystack Pipeline is a powerful abstraction for defining complex data processing workflows. It explicitly defines how different components are connected and how data passes between them. This is crucial for debugging, visualization, and creating predictable, repeatable results. Each component has defined inputs and outputs, and you connect them by mapping the output of one component to the input of another. This explicit declaration of data flow is a key differentiator from some other frameworks like LangChain News, which often rely on more implicit “chaining” mechanisms. This graph-based structure makes it easier to reason about complex, multi-step processes involving branching logic and data merging.

Essential Haystack Components for RAG

To build any RAG system, you need a set of core components. Haystack provides a rich library of pre-built components for common tasks:

- DocumentStore: This is the persistent storage layer for your data. Haystack supports a wide range of backends, from a simple

InMemoryDocumentStorefor quick prototyping to production-grade vector databases like Weaviate News, Qdrant News, and Chroma News. - Embedders: These components are responsible for converting text into dense vector representations. The

SentenceTransformerDocumentEmbedderandSentenceTransformerTextEmbedderare popular choices, leveraging powerful models from the Sentence Transformers News library. - Retrievers: Once your documents are embedded and stored, a Retriever’s job is to find the most relevant documents for a given query. The

InMemoryEmbeddingRetrieveror other database-specific retrievers perform this crucial first step in the RAG process. - Prompters and Generators: A

PromptBuilderis used to dynamically create a prompt for the LLM, typically by combining a user’s query with the context from retrieved documents. TheGeneratorcomponent (e.g.,OpenAIGenerator,HuggingFaceLocalGenerator) then takes this prompt and interacts with the chosen LLM to produce the final answer. This is where you connect to models discussed in OpenAI News or Mistral AI News.

Let’s see how to set up a basic indexing pipeline to prepare our news articles for querying.

# main.py - Setting up a basic indexing pipeline in Haystack

import os

from haystack import Document, Pipeline

from haystack.components.writers import DocumentWriter

from haystack.components.converters import TextFileToDocument

from haystack.document_stores.in_memory import InMemoryDocumentStore

# For this example, let's create some dummy news files

os.makedirs("news_articles", exist_ok=True)

with open("news_articles/tech_news.txt", "w") as f:

f.write("NVIDIA announced its new Blackwell GPU architecture, promising significant performance gains for AI training and inference. This development is a key part of the latest NVIDIA AI News.")

with open("news_articles/ai_framework_news.txt", "w") as f:

f.write("The open-source community is buzzing with Haystack News, as the latest version introduces powerful agentic workflows. Frameworks like PyTorch and TensorFlow also continue to release updates.")

# 1. Initialize the Document Store

document_store = InMemoryDocumentStore()

# 2. Define the indexing pipeline

indexing_pipeline = Pipeline()

indexing_pipeline.add_component("converter", TextFileToDocument())

indexing_pipeline.add_component("writer", DocumentWriter(document_store=document_store))

# 3. Connect the components

indexing_pipeline.connect("converter.documents", "writer.documents")

# 4. Run the pipeline to index the news articles

# The input to the converter is a list of file paths

file_paths = ["news_articles/tech_news.txt", "news_articles/ai_framework_news.txt"]

indexing_pipeline.run({"converter": {"sources": file_paths}})

print(f"Successfully indexed {document_store.count_documents()} documents.")

# Expected Output: Successfully indexed 2 documents.Section 2: Building a Foundational RAG Pipeline for News Q&A

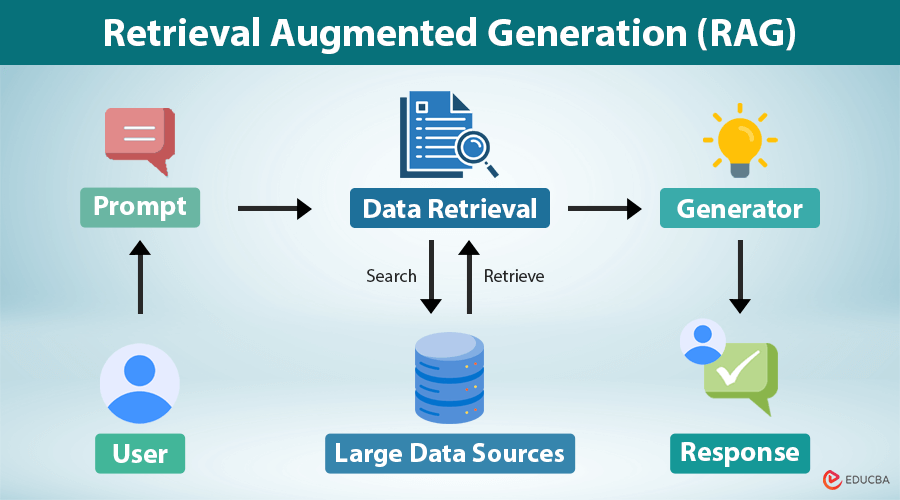

With our news articles indexed in the DocumentStore, we can now build the query pipeline. This pipeline will take a user’s question, find relevant articles, and use an LLM to generate a coherent, context-aware answer. This is the classic RAG workflow and the foundation for countless LLM applications.

Constructing the Query Pipeline Step-by-Step

Our query pipeline will orchestrate several components in sequence to answer a question:

- TextEmbedder: The user’s query (e.g., “What did NVIDIA announce?”) is first converted into a vector embedding using a model like those from the Hugging Face News hub.

- Retriever: This embedding is passed to the

EmbeddingRetriever, which performs a similarity search against all the document embeddings in ourDocumentStoreto find the most relevant text chunks. - PromptBuilder: We use a template to combine the user’s question with the content of the retrieved documents. This creates a rich, contextual prompt for the LLM.

- Generator: Finally, the

OpenAIGeneratorsends this prompt to the OpenAI API (or another model provider like Cohere News) to generate the final, human-readable answer.

This explicit, multi-step process is what allows the LLM to answer questions based on specific data it wasn’t trained on, effectively grounding its response in facts from our news articles.

# main.py - Building and running a RAG query pipeline

import os

from haystack import Pipeline

from haystack.components.embedders import SentenceTransformerTextEmbedder, SentenceTransformerDocumentEmbedder

from haystack.components.retrievers.in_memory import InMemoryEmbeddingRetriever

from haystack.components.builders import PromptBuilder

from haystack.components.generators import OpenAIGenerator

from haystack.document_stores.in_memory import InMemoryDocumentStore

# Assume the document_store is already populated from the previous step

# For completeness, let's re-run the indexing part (in a real app, this is done once)

document_store = InMemoryDocumentStore()

# Note: For a real RAG pipeline, you must use a DocumentEmbedder during indexing

# Let's create a more robust indexing pipeline this time

from haystack.dataclasses import Document

doc_embedder = SentenceTransformerDocumentEmbedder(model="sentence-transformers/all-MiniLM-L6-v2")

doc_embedder.warm_up()

documents = [

Document(content="NVIDIA announced its new Blackwell GPU architecture, promising significant performance gains for AI training and inference. This development is a key part of the latest NVIDIA AI News."),

Document(content="The open-source community is buzzing with Haystack News, as the latest version introduces powerful agentic workflows. Frameworks like PyTorch and TensorFlow also continue to release updates.")

]

documents_with_embeddings = doc_embedder.run(documents=documents)["documents"]

document_store.write_documents(documents_with_embeddings)

# 1. Define the RAG Query Pipeline

query_pipeline = Pipeline()

# 2. Add components to the pipeline

query_pipeline.add_component("text_embedder", SentenceTransformerTextEmbedder(model="sentence-transformers/all-MiniLM-L6-v2"))

query_pipeline.add_component("retriever", InMemoryEmbeddingRetriever(document_store=document_store, top_k=1))

query_pipeline.add_component("prompt_builder", PromptBuilder(template="""

Answer the question based on the context provided below.

Context:

{% for doc in documents %}

{{ doc.content }}

{% endfor %}

Question: {{query}}

"""))

query_pipeline.add_component("llm", OpenAIGenerator(api_key=os.environ.get("OPENAI_API_KEY"), model="gpt-3.5-turbo"))

# 3. Connect the components

query_pipeline.connect("text_embedder.embedding", "retriever.query_embedding")

query_pipeline.connect("retriever.documents", "prompt_builder.documents")

query_pipeline.connect("prompt_builder.prompt", "llm.prompt")

# 4. Run the pipeline with a query

query = "What was the big announcement from NVIDIA?"

result = query_pipeline.run({

"text_embedder": {"text": query},

"prompt_builder": {"query": query}

})

print("Generated Answer:")

print(result["llm"]["replies"][0])

# Expected Output (will vary based on model):

# Generated Answer:

# NVIDIA announced its new Blackwell GPU architecture, which is expected to provide significant performance improvements for AI tasks.Section 3: Advanced Techniques – Custom Components and Agentic Workflows

Haystack’s true power is revealed when you move beyond pre-built components and start customizing your pipelines for specific tasks. This could involve creating custom logic, adding conditional paths, or even building autonomous agents that can decide which tools to use.

Creating Custom Components

Any Python class or function can be turned into a Haystack component using the @component decorator. This allows you to encapsulate unique business logic, integrate with third-party APIs, or perform custom data transformations. For our news analysis system, we could create a component that detects the sentiment of a news article or one that summarizes a list of documents into a daily briefing. This modularity makes your code cleaner and your pipelines more powerful. It’s a key feature for teams working with diverse toolsets, from Apache Spark MLlib News for data processing to Google DeepMind News for cutting-edge model research.

Implementing Agentic Workflows

The latest Haystack News highlights the introduction of Agents. A Haystack Agent is an LLM-powered reasoning loop that can dynamically choose from a set of available “Tools” (which are themselves Haystack components) to accomplish a goal. For example, a news analysis agent could be given a `retriever` tool to search internal articles and a `web_search` tool. When asked a question, it could first decide whether the answer is likely in its document store. If not, it could autonomously decide to perform a web search. This adds a layer of intelligence and autonomy, moving beyond simple, fixed RAG pipelines.

Let’s create a custom component that generates a concise summary from a list of retrieved documents.

# main.py - Creating and using a custom summarization component

import os

from haystack import component, Pipeline

from haystack.components.generators import OpenAIGenerator

from haystack.dataclasses import Document

# This is our custom component. The @component decorator makes it usable in a Haystack Pipeline.

@component

class DocumentSummarizer:

def __init__(self, generator: OpenAIGenerator):

self.generator = generator

@component.output_types(summary=str)

def run(self, documents: list[Document]):

"""

Generates a summary for a list of documents.

"""

context = "\n".join([doc.content for doc in documents])

prompt = f"Please provide a concise, one-paragraph summary of the following news articles:\n\n{context}"

# We are re-using the generator component inside our custom component

result = self.generator.run(prompt=prompt)

summary_text = result["replies"][0]

return {"summary": summary_text}

# Let's build a pipeline that uses this custom component

# Assume 'retriever' and 'text_embedder' are defined as in the previous example

# and the document_store is populated.

# 1. Initialize the components needed

llm_generator = OpenAIGenerator(api_key=os.environ.get("OPENAI_API_KEY"), model="gpt-3.5-turbo")

summarizer = DocumentSummarizer(generator=llm_generator)

# 2. Define the summarization pipeline

# This pipeline will retrieve documents related to a topic and then summarize them.

summarization_pipeline = Pipeline()

summarization_pipeline.add_component("text_embedder", text_embedder) # Re-use from previous example

summarization_pipeline.add_component("retriever", retriever) # Re-use from previous example

summarization_pipeline.add_component("summarizer", summarizer)

# 3. Connect the components

summarization_pipeline.connect("text_embedder.embedding", "retriever.query_embedding")

summarization_pipeline.connect("retriever.documents", "summarizer.documents")

# 4. Run the pipeline with a topic

topic = "latest AI news"

summary_result = summarization_pipeline.run({

"text_embedder": {"text": topic}

})

print("Generated News Summary:")

print(summary_result["summarizer"]["summary"])

# Expected Output (will vary):

# Generated News Summary:

# Recent AI news highlights NVIDIA's launch of the Blackwell GPU architecture for enhanced AI performance, alongside significant updates in the open-source community for frameworks like Haystack, which now supports advanced agentic workflows.Section 4: Best Practices, Deployment, and MLOps Integration

Building a successful Haystack application involves more than just connecting components. It requires careful optimization, robust deployment strategies, and integration with the broader MLOps ecosystem.

Optimization and Evaluation

The performance of your RAG system depends heavily on the quality of its parts.

- Embedding Models: Experiment with different models from the Sentence Transformers News library or commercial offerings. The choice of model is a trade-off between performance, cost, and accuracy.

- Retriever Tuning: Adjust parameters like

top_k(the number of documents to retrieve) to balance context quality with prompt length limitations. - Prompt Engineering: The structure of your prompt in the

PromptBuilderis critical. A well-crafted prompt can dramatically improve the LLM’s output quality. - Evaluation: Haystack includes evaluation pipelines to measure your system’s performance quantitatively. This is essential for iterating and improving your application with confidence. Tools like Weights & Biases News or MLflow News can be used to track these evaluation metrics over time.

Deployment and MLOps

Once your pipeline is performing well, you need to deploy it as a service.

- API Wrapping: A common pattern is to wrap your Haystack pipeline in a web server using frameworks like FastAPI News or Flask News, exposing it as a REST API.

- Scalability: For high-throughput applications, consider using tools like Ray News for distributed computing or NVIDIA’s Triton Inference Server for optimized model serving. Platforms like Modal News or RunPod News also offer serverless GPU infrastructure for scalable deployment.

- Observability: In production, it’s crucial to monitor and debug your pipelines. Tools like LangSmith News are emerging to provide traceability and insights into complex LLM chains and pipelines, helping you understand exactly why your application gave a specific response.

Here is a minimal example of how you might expose a Haystack pipeline via a FastAPI endpoint.

# api.py - A simple FastAPI wrapper for a Haystack pipeline

from fastapi import FastAPI

from pydantic import BaseModel

from haystack import Pipeline

# Assume 'query_pipeline' is your fully constructed and tested Haystack pipeline object

# from the previous examples. You would typically load this from a YAML file or

# build it on startup.

# from main import query_pipeline # For demonstration purposes

app = FastAPI(title="Haystack News Q&A API")

class QueryRequest(BaseModel):

query: str

user_id: str | None = None

class QueryResponse(BaseModel):

answer: str

documents: list[dict]

@app.post("/query", response_model=QueryResponse)

async def ask_question(request: QueryRequest):

"""

Receives a query and returns an answer generated by the Haystack RAG pipeline.

"""

# This is a simplified run call. In a real app, you'd handle errors and edge cases.

result = query_pipeline.run({

"text_embedder": {"text": request.query},

"prompt_builder": {"query": request.query}

})

# Extract the relevant parts of the result to send back to the client

answer = result["llm"]["replies"][0]

retrieved_docs = [doc.to_dict() for doc in result["retriever"]["documents"]]

return QueryResponse(answer=answer, documents=retrieved_docs)

# To run this: uvicorn api:app --reloadConclusion: Your Next Steps with Haystack

Haystack provides a powerful, flexible, and production-oriented framework for building sophisticated applications on top of Large Language Models. By embracing its modular, pipeline-based architecture, developers can move beyond simple API calls to create complex, data-aware systems capable of reasoning and generating valuable insights. We’ve journeyed from the basic concepts of Components and Pipelines to building a functional RAG system for news analysis, and even explored advanced techniques like custom components and API deployment.

The journey doesn’t end here. The ecosystem around LLMs is constantly evolving, with new models from Meta AI News and Google DeepMind News, and new tools and techniques appearing daily. Your next steps should be to dive into the official Haystack documentation, explore its rich library of integrations, and start building. Experiment with different DocumentStores, try out the latest embedding models, and consider contributing your own custom components back to the community. By leveraging Haystack, you are well-equipped to build the next generation of intelligent, data-grounded AI applications.