Modal News: The Developer’s Guide to Building and Deploying Multi-Modal AI with Serverless GPUs

The artificial intelligence landscape is evolving at a breakneck pace, with multi-modal models leading the charge. These sophisticated systems, capable of understanding and processing information from multiple sources like text, images, and audio, are unlocking new frontiers in fields from medicine to creative arts. However, moving these complex models from a research notebook to a scalable, production-ready application presents a significant engineering challenge. Developers often grapple with infrastructure provisioning, dependency management, GPU scaling, and MLOps overhead. This is where a new generation of tools is making a profound impact, and Modal is at the forefront of this shift.

Modal is a serverless compute platform designed to eliminate infrastructure friction for data scientists and AI engineers. It lets you run Python code, from simple scripts to complex deep learning inference pipelines, in the cloud with on-demand access to powerful hardware like NVIDIA A100s and H100s, all without leaving your local development environment. By treating infrastructure as code within your Python scripts, Modal streamlines the path to production. In this guide, we will explore the core concepts of Modal, walk through building a practical multi-modal application, dive into advanced features for production, and cover best practices for optimization and cost management. This is essential reading for anyone following Modal and the broader MLOps ecosystem.

The Building Blocks of Modal: Core Concepts Explained

Before deploying a complex application, it’s crucial to understand the fundamental abstractions that make Modal so powerful and intuitive. These components work together to define your application’s environment, code, and resources in a declarative, Python-native way.

The App: Your Application’s Entrypoint

The App is the central object in any Modal application. Older Modal releases called it a Stub (`modal.Stub`), a name you will still see in many tutorials. It acts as a manifest for all the cloud resources your application needs, such as functions, container images, secrets, and schedules. You instantiate an App at the top of your script, typically giving it a descriptive name that appears in the Modal dashboard.

The Image: Defining Your Runtime Environment

One of Modal’s most powerful features is its approach to environment management. The modal.Image object lets you define your runtime environment programmatically. Instead of writing a Dockerfile, you specify your dependencies directly in Python: start from a base image (like Debian Slim) and layer on system packages with .apt_install() or Python libraries with .pip_install(). Modal builds the image once and caches it, rebuilding only when its definition changes, so subsequent runs start quickly. This is a major improvement over manually managing environments on platforms like AWS SageMaker or Azure Machine Learning.

The Function: Your Serverless Compute Unit

A Modal Function is a regular Python function that you’ve “decorated” to run in the cloud. By adding a decorator like @app.function(), you instruct Modal to execute that function in the specified cloud environment. You can configure hardware requirements directly in the decorator, such as requesting a specific type of GPU (e.g., gpu="A10G") or setting a memory limit with the memory parameter. This seamless transition from local function to scalable cloud endpoint is what makes Modal so compelling for rapid iteration and deployment.

Let’s see these concepts in action with a simple example that runs a PyTorch check on a GPU-enabled container.

```python
import modal

# 1. Define an App for your application (older Modal releases called this a Stub)
app = modal.App("gpu-pytorch-check")

# 2. Define the Image with the necessary Python libraries.
# The Linux torch wheels on PyPI bundle the CUDA runtime libraries.
# Python is pinned to 3.11, the newest version torch 2.1.x ships wheels for.
pytorch_image = modal.Image.debian_slim(python_version="3.11").pip_install(
    "torch==2.1.2", "torchvision==0.16.2"
)


# 3. Create a Modal Function: attach the image and request a T4 GPU.
@app.function(image=pytorch_image, gpu="T4")
def check_gpu():
    """This function runs inside the Modal container on a GPU."""
    import torch

    print(f"PyTorch version: {torch.__version__}")
    is_available = torch.cuda.is_available()
    print(f"CUDA available: {is_available}")
    if is_available:
        print(f"CUDA device count: {torch.cuda.device_count()}")
        print(f"Current CUDA device: {torch.cuda.current_device()}")
        print(f"Device name: {torch.cuda.get_device_name(0)}")
    return is_available


# A local entrypoint runs on your machine and calls the Modal function remotely.
# To run this: `modal run your_script_name.py`
@app.local_entrypoint()
def main():
    print("Running GPU check on Modal...")
    gpu_ready = check_gpu.remote()
    if gpu_ready:
        print("✅ GPU is configured and accessible on Modal!")
    else:
        print("❌ GPU check failed on Modal.")
```

Running this script with the Modal CLI (`modal run …`) builds the image (on the first run only), provisions a T4 GPU, executes the function, and streams the logs back to your terminal. The first run takes longer because of the image build; later runs reuse the cached image and start much faster.

Practical Implementation: A Multi-Modal Medical Analysis App

Now, let’s apply these core concepts to a more realistic and complex problem: building a multi-modal application that analyzes both a medical image and a corresponding text report. This type of system could help clinicians by summarizing key findings or flagging anomalies. We’ll use open-source models available through the Hugging Face Transformers ecosystem.

Setting Up the Multi-Modal Environment

Our application requires libraries for image processing, deep learning, and transformer models. We’ll create a single modal.Image that bundles everything we need, including Pillow for images, torch for deep learning, and transformers for the models. Caching this larger image is a huge time-saver.

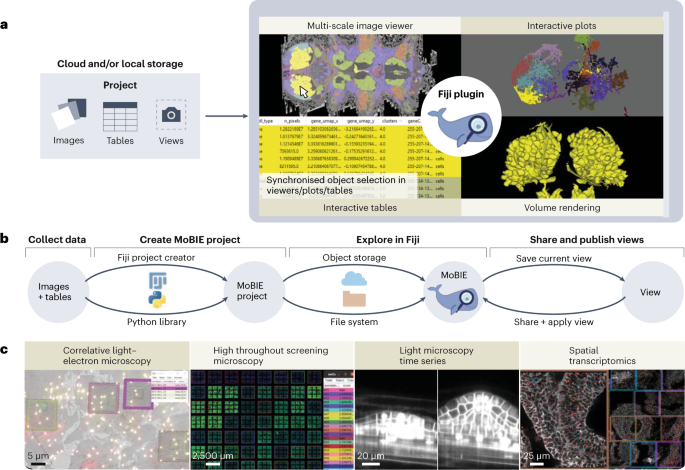

Orchestrating Image and Text Analysis Functions

We will define two distinct Modal functions: one to process the image and another to analyze the text. The local entrypoint then combines their outputs into a final summary. This modular approach is a good practice, because each component can be tested, scaled, and updated independently.

In this example, we’ll use a Vision Transformer (ViT) image classifier pre-trained on ImageNet and a DistilBERT model fine-tuned for sentiment classification (SST-2). Both are general-purpose stand-ins that keep the example small; neither is trained on medical data.

```python
import io

import modal

# Define an App for our multi-modal application
app = modal.App("multi-modal-medical-analyzer")

# One image with all dependencies, built on Modal's Debian Slim base image
ml_image = (
    modal.Image.debian_slim(python_version="3.10")
    .pip_install("torch", "transformers", "Pillow", "requests")
)

# These imports only need to succeed inside the container,
# so the libraries do not have to be installed on your local machine.
with ml_image.imports():
    import torch
    from PIL import Image
    from transformers import (
        AutoModelForSequenceClassification,
        AutoTokenizer,
        ViTForImageClassification,
        ViTImageProcessor,
    )


@app.function(image=ml_image, gpu="A10G")
def analyze_image(image_bytes: bytes):
    """Classifies an image and returns the top-1 label."""
    print("Analyzing image...")
    # Weights are downloaded from the Hugging Face Hub on each cold start
    # (see the section on persistent Volumes for caching them).
    model_name = "google/vit-base-patch16-224"
    processor = ViTImageProcessor.from_pretrained(model_name)
    model = ViTForImageClassification.from_pretrained(model_name).to("cuda")

    image = Image.open(io.BytesIO(image_bytes)).convert("RGB")
    inputs = processor(images=image, return_tensors="pt").to("cuda")
    with torch.no_grad():
        logits = model(**inputs).logits

    # Take the single highest-scoring class
    predicted_class = model.config.id2label[logits.argmax(-1).item()]
    print(f"Image analysis complete. Top prediction: {predicted_class}")
    return {"image_prediction": predicted_class}


@app.function(image=ml_image, gpu="any")
def analyze_text(report_text: str):
    """Classifies the sentiment of a text report (POSITIVE or NEGATIVE)."""
    print("Analyzing text report...")
    model_name = "distilbert-base-uncased-finetuned-sst-2-english"
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSequenceClassification.from_pretrained(model_name).to("cuda")

    inputs = tokenizer(report_text, return_tensors="pt", truncation=True, padding=True).to("cuda")
    with torch.no_grad():
        logits = model(**inputs).logits

    sentiment = model.config.id2label[torch.argmax(logits, dim=1).item()]
    print(f"Text analysis complete. Sentiment: {sentiment}")
    return {"text_sentiment": sentiment}


@app.local_entrypoint()
def main():
    # Runs locally, so `requests` must be installed on your machine
    import requests

    image_url = "http://images.cocodataset.org/val2017/000000039769.jpg"  # Example cat image
    image_bytes = requests.get(image_url, timeout=30).content
    report_text = (
        "The patient shows significant improvement with no signs of recurring "
        "abnormalities. The outlook is highly positive."
    )

    # .spawn() starts each job without waiting, so both run in parallel on Modal
    print("Submitting multi-modal analysis jobs to Modal...")
    image_call = analyze_image.spawn(image_bytes)
    text_call = analyze_text.spawn(report_text)

    # .get() blocks until each result is ready
    image_result = image_call.get()
    text_result = text_call.get()

    print("\n--- Multi-Modal Analysis Summary ---")
    print(f"Image Content: {image_result['image_prediction']}")
    print(f"Report Sentiment: {text_result['text_sentiment']}")
    print("------------------------------------")
```

This example shows how easily you can orchestrate multiple independent GPU-powered tasks. Because .spawn() returns immediately instead of blocking like .remote(), the image and text analyses run in parallel, so the total wall-clock time is roughly that of the slower job rather than the sum of both.
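The `main()` entrypoint above combines the two modalities only by printing them side by side. A slightly richer combination step can be a plain Python function that `main()` calls on the two result dictionaries; it needs no GPU, so it can run locally. The name `summarize_findings` and its review rule are hypothetical illustrations, not clinical logic.

```python
def summarize_findings(image_result: dict, text_result: dict) -> dict:
    """Merge the per-modality outputs into one summary (illustrative rule only)."""
    image_label = image_result["image_prediction"]
    sentiment = text_result["text_sentiment"]  # "POSITIVE" or "NEGATIVE" for the SST-2 model
    # Hypothetical rule: flag the case for human review whenever the report reads negative.
    needs_review = sentiment == "NEGATIVE"
    return {
        "image_content": image_label,
        "report_sentiment": sentiment,
        "needs_review": needs_review,
    }


# Usage inside main(), after the two .get() calls:
# summary = summarize_findings(image_result, text_result)
```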

Scaling Up: Advanced Modal Features for Production

Once your core logic works, the next step is to expose it to the world. Modal provides simple primitives for deploying models as web endpoints, managing state, and scheduling tasks, which makes it a viable alternative to running your own Kubernetes cluster or using managed services such as Azure AI.

Creating Web Endpoints with FastAPI

Perhaps the most common production use case is serving a model via an API. Modal has first-class support for this: it can serve any ASGI-compliant web framework, such as FastAPI or Starlette. By stacking the @modal.asgi_app() decorator under @app.function() on a function that returns your ASGI app, you turn it into a scalable, serverless API endpoint.

Here’s how we can wrap our multi-modal analyzer in a FastAPI application:

```python
# (Continuing from the previous example, add this to the same script)
# ... keep the app, ml_image, and analysis functions as they are ...
import asyncio

# FastAPI (with python-multipart for form uploads) only needs to exist in the container
web_image = ml_image.pip_install("fastapi[standard]")


@app.function(image=web_image)
@modal.asgi_app()
def fastapi_app():
    from fastapi import FastAPI, File, Form, UploadFile

    web_app = FastAPI()

    @web_app.post("/analyze")
    async def analyze_endpoint(report_text: str = Form(...), image_file: UploadFile = File(...)):
        """Accepts an image and a text report and returns both analyses."""
        image_bytes = await image_file.read()
        # .remote.aio() is the non-blocking form of .remote();
        # asyncio.gather runs both GPU jobs concurrently.
        image_result, text_result = await asyncio.gather(
            analyze_image.remote.aio(image_bytes),
            analyze_text.remote.aio(report_text),
        )
        # FastAPI serializes the returned dict to JSON
        return {"image_analysis": image_result, "text_analysis": text_result}

    return web_app


# To deploy this: `modal deploy your_script_name.py`
# Modal will print a public URL for your API.
```

After deploying this with modal deploy, you get a public HTTPS URL. Modal autoscales the endpoint: if your API receives a sudden burst of traffic, it automatically spins up more containers to handle the load. The same pattern works for serving open-weight models from Meta or Mistral AI.
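Once deployed, any HTTP client can call the endpoint with a multipart form containing the `report_text` field and the `image_file` upload. The sketch below uses `requests`; the base URL is a placeholder for whatever `modal deploy` prints, and the helper name `build_analyze_request` is hypothetical. It builds the request without sending it, so you can inspect it first.

```python
import requests


def build_analyze_request(base_url: str, report_text: str, image_bytes: bytes) -> requests.PreparedRequest:
    """Build (but do not send) a multipart POST for the /analyze endpoint."""
    request = requests.Request(
        "POST",
        f"{base_url.rstrip('/')}/analyze",
        data={"report_text": report_text},  # read by Form(...) on the server
        files={"image_file": ("scan.jpg", image_bytes, "image/jpeg")},  # read by File(...)
    )
    return request.prepare()
```

To actually send it, pass the prepared request to `requests.Session().send(prepared, timeout=60)` and call `.json()` on the response.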

Managing State with Persistent Volumes

Serverless functions are ephemeral, but applications often need to manage state. This is especially true for AI models, whose weights can be several gigabytes; re-downloading them on every cold start wastes time. Modal’s modal.Volume provides a persistent, high-performance network file system that you can use to cache model weights, store datasets, or keep outputs. For applications built with LangChain or LlamaIndex, a Volume is a good place to persist local vector indexes, such as those built with FAISS; hosted vector databases like Pinecone keep their indexes on their own infrastructure instead.

Best Practices for Performance and Cost on Modal

Building on Modal is easy, but optimizing for production requires attention to a few key areas. Following these best practices will ensure your applications are fast, reliable, and cost-effective.

Optimizing Cold Starts

A “cold start” is the time it takes for a new container to spin up and start processing a request. To minimize this latency:

- Pre-load models into the image: use a build hook like .run_function() when defining your modal.Image to download model weights during the image build. The model is then already on disk when the container starts.
- Use modal.Volume: store very large assets on a persistent Volume. Reading them from a Volume is usually much faster than re-downloading them from the internet on every cold start.
- Keep containers warm: for latency-critical applications, keep a minimum number of containers running with the min_containers parameter of @app.function() (called keep_warm in older Modal releases).

Managing Dependencies and Secrets

Keep your modal.Image definitions as lean as possible. Install only the packages you need, to reduce image size and build times. Never hardcode sensitive information such as API keys for OpenAI or Anthropic. Instead, use modal.Secret, which securely injects it into your containers as environment variables; you can create secrets in the Modal dashboard or with the `modal secret create` CLI command.

```python
import os

import modal

app = modal.App("openai-secret-demo")
# The openai package must be installed in the container image
openai_image = modal.Image.debian_slim().pip_install("openai")


# Create the secret once from your terminal first:
# modal secret create my-openai-secret OPENAI_API_KEY=$OPENAI_API_KEY
@app.function(image=openai_image, secrets=[modal.Secret.from_name("my-openai-secret")])
def call_openai():
    from openai import OpenAI

    # The secret is available as an environment variable inside the container
    client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
    print("Making a call to OpenAI...")
    # ... your OpenAI API call logic here, using `client` ...
    return "Success"
```

Cost Management and GPU Selection

Modal’s pay-per-second billing can be very cost-effective, but only if you choose the right hardware. Don’t request an H100 GPU if an A10G or even a T4 will suffice; profile your application to understand its resource requirements. For high-throughput inference, consider an optimized serving framework like vLLM, which can be packaged into a Modal image to maximize GPU utilization and reduce costs.
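Because billing is per second of container time, a first-pass cost comparison between GPU types is simple arithmetic on profiled latency: billed seconds = requests × latency ÷ concurrency, multiplied by the per-second rate. The sketch below ignores cold starts and idle time, and the rates in the usage lines are made-up placeholders, not Modal’s prices; substitute current figures from Modal’s pricing page and your own measured latencies.

```python
def cost_per_1k_requests(latency_s: float, usd_per_second: float, concurrency: int = 1) -> float:
    """Estimate the GPU cost of 1,000 requests under per-second billing.

    latency_s: measured processing time per request on this GPU
    usd_per_second: the GPU's per-second price (look up current pricing)
    concurrency: requests a single container serves at once (e.g. with batching)
    """
    if latency_s <= 0 or usd_per_second <= 0 or concurrency < 1:
        raise ValueError("latency and rate must be positive; concurrency must be >= 1")
    billed_seconds = 1000 * latency_s / concurrency
    return billed_seconds * usd_per_second


# Placeholder rates, NOT real prices: a slower, cheaper GPU vs. a faster, pricier one.
slow_cheap = cost_per_1k_requests(latency_s=0.8, usd_per_second=0.0002)
fast_pricey = cost_per_1k_requests(latency_s=0.2, usd_per_second=0.0010)
print(f"cheaper GPU: ${slow_cheap:.3f}  faster GPU: ${fast_pricey:.3f}")
```

Under these placeholder numbers the slower GPU wins despite taking four times as long per request, which is exactly the kind of result profiling can reveal.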

Conclusion: The Future of AI Development is Serverless

Modal represents a significant step forward in the MLOps toolchain, abstracting away the most painful parts of infrastructure management and allowing developers to focus on what they do best: building intelligent applications. We’ve journeyed from the basic building blocks of the platform to constructing a deployable, multi-modal AI service. We’ve seen how Modal’s Python-native approach simplifies environment creation, function execution, and API deployment.

By integrating with the wider AI ecosystem (frameworks like PyTorch and TensorFlow, model hubs like Hugging Face, and experiment trackers like Weights & Biases), Modal acts as the connective tissue between research and production. As AI models become more complex and the demand for scalable, GPU-powered applications grows, serverless platforms like Modal will become an indispensable part of every AI developer’s toolkit. The next time you have a powerful model running in a Google Colab notebook, consider how quickly you could turn it into a robust, scalable application with just a few lines of Modal code.