Unlocking 2x Performance: A Deep Dive into FP16 Inference with TensorFlow Lite and XNNPack on ARM

The world of artificial intelligence is in a constant state of evolution, with a relentless push for models that are not only more powerful but also faster and more efficient. While massive, cloud-based models often dominate headlines in OpenAI News and Google DeepMind News, a parallel revolution is happening at the edge. On-device machine learning is critical for applications that demand low latency, offline functionality, and enhanced user privacy. The challenge has always been to run sophisticated models on resource-constrained hardware like smartphones and IoT devices without draining the battery or creating a sluggish user experience.

In recent TensorFlow News, a significant breakthrough has emerged that directly addresses this challenge: the native implementation of half-precision floating-point (FP16) inference within TensorFlow Lite’s XNNPack delegate. This advancement effectively doubles the performance of many models on modern ARM-based devices, such as the latest flagship smartphones. It represents a major leap forward for mobile developers and ML engineers, enabling richer, real-time AI experiences. This article provides a comprehensive technical deep dive into this development, exploring the core concepts, practical implementation steps, advanced techniques, and best practices for leveraging FP16 to supercharge your on-device applications.

The “Why” and “What” of On-Device Performance Optimization

To fully appreciate the impact of FP16 inference, it’s essential to understand the fundamentals of numerical precision in machine learning and the role of specialized libraries like XNNPack. The journey from a large, training-time model to a lean, inference-time model is one of careful optimization and trade-offs.

From FP32 to FP16: The Precision-Performance Trade-off

Most neural networks are trained using 32-bit single-precision floating-point numbers, commonly known as FP32. This format offers a wide dynamic range and high precision, which is crucial for the subtle weight adjustments that occur during model training. However, for inference—the process of using a trained model to make predictions—this level of precision is often overkill.

This is where 16-bit half-precision floating-point (FP16) comes in. By representing numbers with 16 bits instead of 32, we gain several key advantages:

- Reduced Model Size: The model’s weights take up half the storage space, reducing the application’s binary size and memory footprint.

- Lower Memory Bandwidth: Less data needs to be moved between memory and the processing units, which is often a significant bottleneck on mobile devices.

- Faster Computation: Modern ARM and GPU processors have specialized hardware instructions that can perform FP16 calculations significantly faster than FP32.

Of course, this is a trade-off. Reducing precision can, in some cases, lead to a minor reduction in model accuracy due to a smaller representable number range, which can cause numerical underflow or overflow. However, for a vast majority of computer vision and NLP models, the impact on accuracy is negligible, while the performance gains are substantial. This focus on efficient inference is a common thread in the industry, with similar optimization efforts regularly featured in PyTorch News and JAX News.

Introducing TensorFlow Lite and the XNNPack Delegate

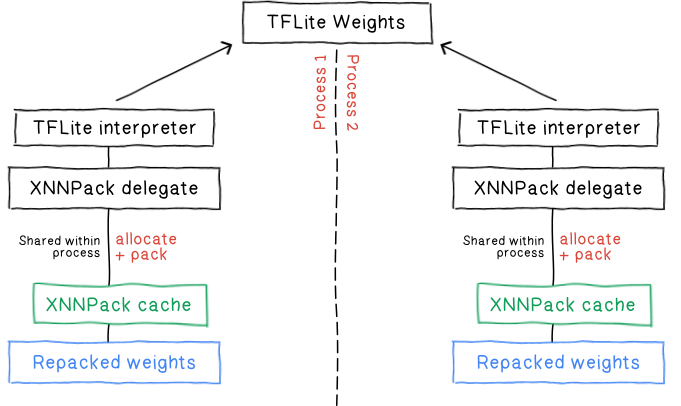

TensorFlow Lite is Google’s open-source deep learning framework for on-device inference. It takes a standard TensorFlow model and converts it into a highly optimized .tflite format. A key feature of TensorFlow Lite is its use of “delegates.” A delegate is a mechanism that allows TFLite to hand off the execution of parts of the model graph to a specialized hardware accelerator, such as a GPU, DSP (Digital Signal Processor), or a highly optimized CPU library.

XNNPack is a high-performance, floating-point neural network inference library that serves as the default CPU delegate in TensorFlow Lite. It contains a collection of hand-tuned operators for various CPU architectures, including ARM, x86, and WebAssembly. The latest updates have equipped XNNPack with native support for FP16 arithmetic on modern ARMv8.2-A and newer architectures, which are found in most recent high-end smartphones. This is the core engine driving the 2x performance gains.

To begin, let’s see how a standard Keras model is converted to a baseline FP32 TensorFlow Lite model. This will be our starting point before applying FP16 optimization.

import tensorflow as tf

# 1. Create or load a Keras model (e.g., MobileNetV2)

# For this example, we'll use a pre-trained model from Keras applications

model = tf.keras.applications.MobileNetV2(

input_shape=(224, 224, 3),

include_top=True,

weights='imagenet'

)

# 2. Initialize the TensorFlow Lite Converter

converter = tf.lite.TFLiteConverter.from_keras_model(model)

# 3. Convert the model (default is FP32)

tflite_model_fp32 = converter.convert()

# 4. Save the model to a .tflite file

with open('mobilenet_v2_fp32.tflite', 'wb') as f:

f.write(tflite_model_fp32)

print("FP32 TensorFlow Lite model saved as mobilenet_v2_fp32.tflite")Putting Theory into Practice: Converting Models for FP16 Inference

With the foundational concepts in place, the next step is to practically apply FP16 quantization during the model conversion process. The TensorFlow Lite Converter API makes this remarkably straightforward, requiring just a few additional configuration flags.

The Conversion Process with TensorFlow Lite Converter

To enable FP16 quantization, you need to configure the TFLiteConverter. The key is to specify the desired optimizations. The tf.lite.Optimize.DEFAULT flag enables a standard set of optimizations, including post-training quantization. To specifically target FP16, you must also tell the converter that tf.float16 is a supported type for the operations in your model.

The converter will then analyze the model’s graph. It will attempt to convert all compatible operations (like convolutions and matrix multiplications) to use FP16 weights and activations. For operations where FP16 might cause numerical instability (e.g., softmax, normalization layers), the converter may intelligently keep them in FP32, creating a “mixed-precision” model that balances performance and accuracy. This intelligent conversion is a hallmark of a mature MLOps pipeline, a topic frequently discussed in MLflow News and Weights & Biases News.

Here is a complete Python script demonstrating how to convert a Keras model to an optimized FP16 TensorFlow Lite model.

import tensorflow as tf

import numpy as np

# 1. Create or load a Keras model

model = tf.keras.applications.MobileNetV2(

input_shape=(224, 224, 3),

weights='imagenet'

)

# 2. Initialize the TensorFlow Lite Converter

converter = tf.lite.TFLiteConverter.from_keras_model(model)

# 3. Set the optimization flags for FP16

converter.optimizations = [tf.lite.Optimize.DEFAULT]

converter.target_spec.supported_types = [tf.float16]

# 4. Convert the model

tflite_model_fp16 = converter.convert()

# 5. Save the optimized model

with open('mobilenet_v2_fp16.tflite', 'wb') as f:

f.write(tflite_model_fp16)

print("FP16 TensorFlow Lite model saved as mobilenet_v2_fp16.tflite")

print(f"Original FP32 model size: {len(tflite_model_fp32) / 1024 / 1024:.2f} MB")

print(f"Optimized FP16 model size: {len(tflite_model_fp16) / 1024 / 1024:.2f} MB")Running this script, you will immediately notice that the resulting mobilenet_v2_fp16.tflite file is approximately half the size of the FP32 version. This is the first tangible benefit of FP16 quantization.

Measuring Impact and Navigating the Nuances

Creating an optimized model is only half the battle. It is crucial to benchmark its performance to validate the improvements and to be aware of platform-specific limitations that might affect deployment.

Benchmarking Performance on ARM Devices

TensorFlow provides a command-line benchmark tool that allows you to measure the latency, memory usage, and accuracy of your .tflite models on a target device. To get accurate results, you should run the benchmark directly on your ARM-based Android or iOS device.

You’ll need to use the Android Debug Bridge (adb) to push the model and the benchmark binary to your device and then execute the test. The key flag is --use_xnnpack=true, which ensures the XNNPack delegate is enabled.

Here’s an example of how you would run the benchmark tool from your computer’s terminal for the FP16 model we just created.

# First, push the model and benchmark tool to the device

adb push mobilenet_v2_fp16.tflite /data/local/tmp

adb push benchmark_model /data/local/tmp

# Make the benchmark tool executable

adb shell chmod +x /data/local/tmp/benchmark_model

# Run the benchmark on the device using 4 CPU threads

adb shell /data/local/tmp/benchmark_model \

--graph=/data/local/tmp/mobilenet_v2_fp16.tflite \

--num_threads=4 \

--use_xnnpack=true \

--warmup_runs=10 \

--num_runs=50By running this command for both the FP32 and FP16 models, you can directly compare the average inference times and confirm the performance uplift. On compatible ARM devices, you should observe the latency for the FP16 model to be roughly 50% of the FP32 model’s latency.

Platform Specifics and Potential Pitfalls

A critical consideration is the hardware target. The significant speedup from native FP16 is specific to modern ARM CPUs (ARMv8.2-A and newer). If you run the same FP16 model on an older ARM device or an x86-based machine (like a typical developer laptop or emulator), XNNPack will fall back to an *emulation mode*. In this mode, it simulates FP16 operations using FP32 arithmetic, which can actually be slower than running the original FP32 model. This is a common pitfall: always benchmark on your target hardware.

This hardware-specific optimization strategy is a recurring theme in the high-performance computing space. It’s conceptually similar to how engineers use NVIDIA’s TensorRT for GPU optimization, a topic often seen in NVIDIA AI News, or Intel’s OpenVINO for optimizing models on Intel hardware, as covered in OpenVINO News. Similarly, the concept of a standardized model format that can be optimized for different backends is the core promise of initiatives like ONNX, making ONNX News highly relevant to MLOps practitioners.

Finally, let’s look at how you would actually use this model in an Android application using Kotlin.

import org.tensorflow.lite.Interpreter

import java.io.FileInputStream

import java.nio.ByteBuffer

import java.nio.channels.FileChannel

// ... inside your Android Activity or ViewModel

private fun loadModelFile(modelPath: String): ByteBuffer {

val fileDescriptor = assets.openFd(modelPath)

val inputStream = FileInputStream(fileDescriptor.fileDescriptor)

val fileChannel = inputStream.channel

val startOffset = fileDescriptor.startOffset

val declaredLength = fileDescriptor.declaredLength

return fileChannel.map(FileChannel.MapMode.READ_ONLY, startOffset, declaredLength)

}

fun runInference(inputData: ByteBuffer) {

val tfliteModel = loadModelFile("mobilenet_v2_fp16.tflite")

// Configure the interpreter options to use the XNNPack delegate

val options = Interpreter.Options()

// XNNPack is enabled by default, but you can be explicit

// options.addDelegate(XNNPackDelegate())

val interpreter = Interpreter(tfliteModel, options)

// Allocate output buffer

val outputBuffer = ByteBuffer.allocateDirect(1 * 1001 * 4) // Example for MobileNetV2

outputBuffer.order(ByteOrder.nativeOrder())

// Run inference

interpreter.run(inputData, outputBuffer)

// Process the results from outputBuffer...

}Best Practices and the Evolving AI Landscape

Successfully deploying high-performance models goes beyond simple conversion. It requires integrating these techniques into a robust workflow and understanding their place in the broader AI ecosystem.

When to Use FP16 Quantization

FP16 quantization is an excellent choice for latency-critical applications on modern mobile hardware. It provides a “sweet spot” of significant performance gains with a very low risk of impacting model accuracy. It’s ideal for use cases like:

- Real-time video and image processing (e.g., style transfer, background segmentation).

- On-device NLP tasks (e.g., text classification, sentiment analysis).

- Augmented reality filters and effects.

It should be compared against other techniques like full integer (INT8) quantization. INT8 can provide even greater speedups (up to 3-4x) and further size reduction but typically requires a representative dataset for calibration and has a higher chance of affecting accuracy. Choosing the right quantization strategy is a key decision, and platforms like Vertex AI and AWS SageMaker offer tools and AutoML features to help automate this evaluation process.

The Industry Trend Towards Efficiency

This development in TensorFlow Lite is part of a much larger industry trend. As models from research labs like Meta AI and Mistral AI become more powerful, there is a parallel and equally important push to make them more accessible and efficient. The ability to run smaller, specialized versions of these models on the edge is a game-changer. It reduces reliance on expensive cloud APIs, a topic of interest for users of Azure AI and Amazon Bedrock, and enables new kinds of interactive applications.

Frameworks like LangChain and vector databases like Pinecone or Milvus are increasingly being used with smaller, local embedding models. Faster on-device inference, powered by optimizations like FP16, makes these architectures more viable for mobile applications. This trend democratizes AI, allowing developers to build powerful features without mandating a constant internet connection, a narrative frequently highlighted in Hugging Face News.

Conclusion

The introduction of native FP16 support in TensorFlow Lite’s XNNPack delegate is a landmark event for the on-device machine learning community. It provides a simple yet powerful mechanism to double inference speed on a wide range of modern ARM devices, directly translating to more responsive, capable, and battery-friendly AI-powered applications. By understanding the fundamentals of half-precision floating-point, leveraging the TensorFlow Lite Converter correctly, and rigorously benchmarking on target hardware, developers can unlock a new level of performance for their mobile ML features.

The key takeaways are clear: FP16 offers a fantastic balance of speed and accuracy, the conversion process is straightforward, and platform-specific testing is non-negotiable. As hardware continues to evolve with even more powerful neural processing units, we can expect the line between edge and cloud AI capabilities to blur further, ushering in a new era of intelligent, accessible, and efficient applications for everyone.