Triton Inference Server News: A Deep Dive into High-Performance AI Model Deployment

Unlocking Production-Grade AI: The Latest Advancements in NVIDIA Triton Inference Server

In the rapidly evolving landscape of artificial intelligence, the journey from a trained model to a production-ready, scalable application is fraught with challenges. Inference, the process of using a trained model to make predictions, is where AI delivers real-world value, but it demands high throughput, low latency, and efficient resource utilization. This is precisely the problem that NVIDIA Triton Inference Server, an open-source inference serving platform, is designed to solve. As organizations worldwide deploy increasingly complex models, from classic computer vision systems to massive Large Language Models (LLMs), the need for a robust, flexible, and high-performance serving solution has never been more critical. Recent developments in the Triton ecosystem are pushing the boundaries of what’s possible, making it a cornerstone of modern MLOps stacks.

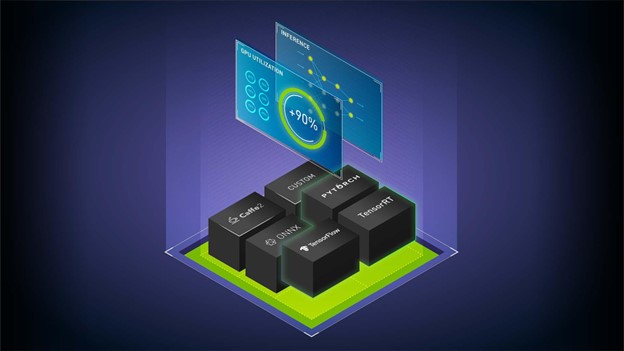

Triton simplifies the complexity of model deployment by providing a standardized environment that supports a vast array of machine learning frameworks. Whether your team works with TensorFlow, PyTorch, ONNX, or TensorRT, Triton offers a unified entry point for serving these models at scale. Its powerful features, such as dynamic batching, concurrent model execution, and business logic scripting, empower engineers to optimize performance without rewriting model code. This article explores the latest news and advancements in Triton, diving into its core concepts, practical implementation details, and the advanced features that are enabling breakthroughs across industries.

Core Concepts and Foundational Features

At its heart, Triton is an inference server that acts as a bridge between client applications and trained AI models. It exposes simple HTTP/REST and gRPC endpoints, allowing applications written in any language to easily request predictions. Its power lies in its sophisticated backend architecture and flexible configuration system.
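To make the HTTP entry point concrete, the sketch below builds the URL path and JSON body for an inference call in the style of the KServe v2 inference protocol, which Triton's HTTP/REST endpoint follows. The model name `my_model`, the input name `INPUT__0`, and the helper `build_infer_request` are hypothetical names chosen for illustration; only the request is constructed, nothing is sent.

```python
import json


def build_infer_request(model_name, input_name, shape, datatype, data, version=None):
    """Return (path, body) for a v2-style HTTP inference request.

    Hypothetical helper for illustration; it only builds the request.
    """
    path = f"/v2/models/{model_name}"
    if version is not None:
        path += f"/versions/{version}"
    path += "/infer"
    body = {
        "inputs": [
            {
                "name": input_name,      # must match the model's input name
                "shape": list(shape),    # full shape, including the batch dimension
                "datatype": datatype,    # e.g. "FP32", "INT64"
                "data": data,            # tensor values as a flat JSON array
            }
        ]
    }
    return path, json.dumps(body)


# Assumed example values: a single row of four floats.
path, body = build_infer_request("my_model", "INPUT__0", [1, 4], "FP32", [0.1, 0.2, 0.3, 0.4])
print("POST", path)
print(body)
```

Any HTTP client in any language can send this body to the server's HTTP port; the Python client library shown later in this article wraps the same exchange.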

The Multi-Framework Backend Architecture

One of Triton’s most celebrated features is its “bring-your-own-backend” philosophy. It is not tied to a single framework. Instead, it uses a modular backend system to load and execute models from virtually any source. This is a game-changer for organizations that use a diverse set of tools. Key supported backends include:

- TensorFlow & Keras: Native support for TensorFlow 1.x and 2.x SavedModels and GraphDefs, which also covers Keras models exported in the SavedModel format.

- PyTorch: Direct execution of TorchScript models, a common export format for models built in PyTorch.

- TensorRT: For achieving the absolute lowest latency and highest throughput on NVIDIA GPUs, Triton’s TensorRT backend is unparalleled. It leverages NVIDIA’s high-performance deep learning inference optimizer and runtime.

- ONNX Runtime: The Open Neural Network Exchange (ONNX) format provides model interoperability. Triton’s ONNX Runtime backend allows teams to decouple training frameworks from deployment environments.

- OpenVINO: For high-performance inference on Intel hardware, Triton provides a dedicated OpenVINO backend.

This flexibility means a single Triton instance can concurrently serve a computer vision model optimized with TensorRT, a natural language processing model from PyTorch, and a forecasting model in ONNX format, all sharing the same GPU resources efficiently.

Model Configuration and the Model Repository

Triton manages models through a structured directory called the “model repository.” Each model requires a specific folder structure and a `config.pbtxt` file that tells Triton how to load and serve it. This configuration file defines critical metadata, including the model’s inputs and outputs, data types, and shapes, as well as performance optimization settings.

Here is a basic example of a `config.pbtxt` for a simple image classification model that expects a 3-channel, 224×224 image.

    name: "image_classifier"
    platform: "onnxruntime_onnx"
    max_batch_size: 64
    input [
      {
        name: "INPUT__0"
        data_type: TYPE_FP32
        dims: [ 3, 224, 224 ]
      }
    ]
    output [
      {
        name: "OUTPUT__0"
        data_type: TYPE_FP32
        dims: [ 1000 ]
      }
    ]

This simple text file is the control panel for your model deployment, allowing you to fine-tune its behavior without ever touching the model’s source code.
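One detail of the configuration above is easy to miss: when `max_batch_size` is greater than zero, the `dims` entries describe a single item and the batch dimension is implied in front of them. The following sketch mirrors that rule in plain Python as a client-side sanity check. The helper names `expected_shape` and `request_is_valid` are hypothetical, and this is an illustration of the rule, not Triton's own validation code.

```python
def expected_shape(max_batch_size, dims):
    """Full tensor shape implied by a config; -1 marks a variable-size dimension."""
    if max_batch_size > 0:
        # Batching enabled: an implicit, variable batch dimension comes first.
        return [-1] + list(dims)
    return list(dims)


def request_is_valid(shape, max_batch_size, dims):
    """Check a request tensor shape against max_batch_size and dims."""
    full = expected_shape(max_batch_size, dims)
    if len(shape) != len(full):
        return False
    if max_batch_size > 0 and not (1 <= shape[0] <= max_batch_size):
        return False
    # Every fixed dimension must match exactly; -1 accepts any size.
    return all(want == -1 or got == want for got, want in zip(shape, full))


# Values taken from the image_classifier config above.
print(request_is_valid([8, 3, 224, 224], 64, [3, 224, 224]))   # batch of 8
print(request_is_valid([3, 224, 224], 64, [3, 224, 224]))      # missing batch dimension
print(request_is_valid([65, 3, 224, 224], 64, [3, 224, 224]))  # batch larger than 64
```

With the values from the config, a batch of 8 images passes, while a tensor without a batch dimension or with more than 64 items does not.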

Practical Implementation: Serving a Transformer Model

Let’s walk through a practical example of deploying a sentiment analysis model from the Hugging Face ecosystem. We’ll assume the model has been converted to the ONNX format for maximum portability and performance.

Setting Up the Model Repository

First, we create the necessary directory structure. Triton will automatically detect and load models placed here.

    /models
    └── sentiment_analyzer
        ├── 1
        │   └── model.onnx
        └── config.pbtxt

The `models` directory is our model repository. Inside, `sentiment_analyzer` is the model name. The `1` directory represents the model version, allowing for seamless model updates. The `model.onnx` file is our exported model, and the `config.pbtxt` defines its serving parameters.
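As a rough sketch, the layout can be created with a few lines of Python. The `config.pbtxt` contents here are an assumption: the tensor names `input_ids`, `attention_mask`, and `logits` match the client script later in this article, while `max_batch_size: 8`, the variable sequence length (`-1`), and the two-class output are illustrative guesses that must be adapted to the actual exported model. The script writes into a temporary directory and does not create `model.onnx`; the exported file still has to be copied into the version folder.

```python
import tempfile
from pathlib import Path

# Assumed configuration for illustration; adjust names, types, and dims
# to whatever the exported ONNX model actually declares.
CONFIG_PBTXT = """\
name: "sentiment_analyzer"
platform: "onnxruntime_onnx"
max_batch_size: 8
input [
  { name: "input_ids"      data_type: TYPE_INT64 dims: [ -1 ] },
  { name: "attention_mask" data_type: TYPE_INT64 dims: [ -1 ] }
]
output [
  { name: "logits" data_type: TYPE_FP32 dims: [ 2 ] }
]
"""


def create_model_repository(root, model_name, version=1):
    """Create <root>/<model_name>/<version>/ plus config.pbtxt.

    Returns the path where the exported model file should be placed.
    """
    model_dir = Path(root) / model_name
    version_dir = model_dir / str(version)
    version_dir.mkdir(parents=True, exist_ok=True)
    (model_dir / "config.pbtxt").write_text(CONFIG_PBTXT)
    return version_dir / "model.onnx"


repo_root = Path(tempfile.mkdtemp()) / "models"
onnx_path = create_model_repository(repo_root, "sentiment_analyzer")

# Show the resulting tree relative to the temporary directory.
for entry in sorted(repo_root.rglob("*")):
    print(entry.relative_to(repo_root.parent))
print("copy the exported model to:", onnx_path)
```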

Interacting with the Deployed Model via the Python Client

Once Triton is running and has loaded the model, we can send inference requests using the `tritonclient` library. This library simplifies communication over both HTTP and gRPC. The following Python script demonstrates how to send a preprocessed text input to our `sentiment_analyzer` model.

This example showcases a typical client-side workflow: establish a connection, prepare input tensors, send the request, and parse the results. This pattern is common whether you’re building a backend with FastAPI or an interactive demo with Streamlit or Gradio.

    import numpy as np
    import tritonclient.http as httpclient
    from transformers import AutoTokenizer

    # 1. Initialize tokenizer and Triton client
    model_name = "sentiment_analyzer"
    tokenizer = AutoTokenizer.from_pretrained("distilbert-base-uncased-finetuned-sst-2-english")
    client = httpclient.InferenceServerClient(url="localhost:8000")

    # 2. Prepare the input data
    text = "NVIDIA Triton Inference Server makes deployment incredibly efficient!"
    inputs = tokenizer(text, return_tensors="np", padding=True, truncation=True)
    input_ids = inputs['input_ids'].astype(np.int64)
    attention_mask = inputs['attention_mask'].astype(np.int64)

    # 3. Create Triton input tensors
    triton_input_ids = httpclient.InferInput('input_ids', input_ids.shape, 'INT64')
    triton_input_ids.set_data_from_numpy(input_ids)
    triton_attention_mask = httpclient.InferInput('attention_mask', attention_mask.shape, 'INT64')
    triton_attention_mask.set_data_from_numpy(attention_mask)

    # 4. Send inference request
    response = client.infer(
        model_name=model_name,
        inputs=[triton_input_ids, triton_attention_mask]
    )

    # 5. Parse the output
    output_data = response.as_numpy('logits')
    prediction = np.argmax(output_data, axis=1)

    print(f"Input text: '{text}'")
    print(f"Raw logits: {output_data}")
    print(f"Predicted class: {'POSITIVE' if prediction[0] == 1 else 'NEGATIVE'}")

Advanced Features and Recent News

Triton’s development is fast-paced, with new features constantly being added to address emerging challenges in AI deployment, especially around LLMs and traditional machine learning at scale.

Dynamic Batching for Maximum Throughput

One of Triton’s most powerful optimization features is dynamic batching. GPUs perform best when processing data in large batches. However, in a real-world application, inference requests often arrive one by one. The dynamic batcher solves this by transparently intercepting individual requests on the server, waiting a very short, configurable amount of time to collect a batch, and then sending the full batch to the model for execution. This dramatically increases GPU utilization and overall throughput with minimal impact on latency for any single request.

Enabling it is as simple as adding a stanza to your `config.pbtxt`:

    # In config.pbtxt
    dynamic_batching {
      preferred_batch_size: [8, 16, 32]
      max_queue_delay_microseconds: 100
    }

This tells Triton to try and form batches of 8, 16, or 32 and to wait no more than 100 microseconds before dispatching a batch. Finding the optimal values is often done using Triton’s own Model Analyzer tool.
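To build intuition for how the queue delay and batch size interact, here is a deliberately simplified simulation of the idea. It is not Triton's scheduler: it assumes the model is always free to accept a batch, ignores preferred batch sizes, and uses a made-up list of arrival timestamps. The function name `simulate_dynamic_batching` is hypothetical.

```python
def simulate_dynamic_batching(arrivals_us, max_batch_size, max_queue_delay_us):
    """Group request arrival times (microseconds) into batches.

    Simplified rule: a batch is dispatched when it is full, or when the
    next request arrives after the oldest queued request has already
    waited longer than max_queue_delay_us.
    """
    batches = []
    current = []
    for t in sorted(arrivals_us):
        # The open batch timed out before this request arrived: flush it.
        if current and t - current[0] > max_queue_delay_us:
            batches.append(current)
            current = []
        current.append(t)
        # The batch is full: dispatch immediately.
        if len(current) == max_batch_size:
            batches.append(current)
            current = []
    if current:
        batches.append(current)
    return batches


# Made-up arrival times: a burst, a pair, and a straggler.
arrivals = [0, 20, 40, 90, 250, 260, 600]
batches = simulate_dynamic_batching(arrivals, max_batch_size=4, max_queue_delay_us=100)
print(batches)                    # [[0, 20, 40, 90], [250, 260], [600]]
print([len(b) for b in batches])  # [4, 2, 1]
```

The burst fills a batch and is dispatched at once, while sparse traffic falls back to small batches once the delay expires. A larger delay would merge more requests at the cost of extra waiting time, which is the trade-off the two settings control.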

Accelerating Traditional ML with the FIL Backend

While much of the hype surrounds deep learning, a vast number of production models are tree-based: gradient-boosted decision trees and random forests trained with libraries like XGBoost, LightGBM, and Scikit-learn. Historically, serving these models on GPUs was difficult. The Forest Inference Library (FIL) backend is a major advancement that allows Triton to execute these tree-based models directly on the GPU, which can yield large performance gains. This means that the same infrastructure used for serving a complex Hugging Face Transformers model can also accelerate a fraud detection model built with XGBoost. This unified approach simplifies MLOps in sectors such as financial services and retail that rely heavily on both types of models.

State-of-the-Art LLM Serving

The rise of generative AI has introduced new inference challenges. LLMs require immense memory, and auto-regressive decoding generates output one token at a time, so requests in the same batch finish at different times. The latest Triton Inference Server news is heavily focused on this area. Triton now integrates with specialized backends designed for LLMs, such as TensorRT-LLM and the community project vLLM. These backends implement cutting-edge techniques like:

- PagedAttention: An attention algorithm that stores the key-value (KV) cache in fixed-size, non-contiguous blocks to reduce memory fragmentation, inspired by virtual memory and paging in operating systems.

- In-Flight Batching (Continuous Batching): A more advanced form of batching where new requests can be added to the batch even as it’s being processed, maximizing GPU uptime.

- Optimized Kernels: Highly-tuned GPU code for key operations in models from organizations such as OpenAI, Mistral AI, and Meta AI.

This integration makes Triton a premier choice for deploying LLMs in production, whether on-premise or on cloud platforms like Amazon SageMaker, Azure Machine Learning, or Vertex AI.
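The benefit of in-flight batching over fixed batches can be illustrated with a toy step counter. This is a simplified model under stated assumptions, not how TensorRT-LLM or vLLM are implemented: each request needs a known number of decode steps, the GPU has a fixed number of batch slots, and every step advances all active requests by one token. The request lengths are made up, and both function names are hypothetical.

```python
from collections import deque


def static_batching_steps(lengths, slots):
    """Fixed batches: each group runs until its longest request finishes."""
    total = 0
    for i in range(0, len(lengths), slots):
        total += max(lengths[i:i + slots])
    return total


def continuous_batching_steps(lengths, slots):
    """In-flight batching: a freed slot is refilled before the next step."""
    queue = deque(lengths)
    active = []  # remaining decode steps of the requests currently in the batch
    steps = 0
    while queue or active:
        # Admit waiting requests into any free slots.
        while queue and len(active) < slots:
            active.append(queue.popleft())
        steps += 1
        # Each active request produces one token; finished ones leave the batch.
        active = [remaining - 1 for remaining in active if remaining - 1 > 0]
    return steps


# Made-up output lengths (tokens per request), with two batch slots.
lengths = [2, 8, 3, 8, 2, 2]
print(static_batching_steps(lengths, slots=2))      # 18
print(continuous_batching_steps(lengths, slots=2))  # 13
```

With fixed batches, short requests sit idle until the longest request in their group finishes. Refilling slots as soon as they free up brings the total close to the lower bound of 25 token-steps spread over two slots.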

Best Practices and the Broader MLOps Ecosystem

Deploying a model with Triton is just one piece of the puzzle. To build a robust production system, it’s essential to integrate it with other MLOps tools and follow best practices.

Performance Tuning with the Model Analyzer

Manually tuning batch sizes and instance counts for every model is tedious and error-prone. Triton’s Model Analyzer is a command-line tool that automates this process. It systematically profiles a model under various configurations to find the optimal settings that meet your specific latency and throughput goals. This data-driven approach is essential for maximizing the return on your hardware investment.
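The selection step at the end of such a sweep amounts to a constrained optimization: discard configurations that miss the latency target, then keep the one with the highest throughput. The sketch below shows only that logic. It does not call Model Analyzer, the record fields and the function `pick_best_config` are hypothetical, and the numbers are made-up placeholders rather than benchmark results.

```python
def pick_best_config(measurements, latency_budget_ms):
    """Return the highest-throughput record within the latency budget, or None."""
    feasible = [m for m in measurements if m["p99_latency_ms"] <= latency_budget_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda m: m["throughput_infer_per_s"])


# Placeholder values for illustration only; NOT measured results.
measurements = [
    {"instances": 1, "max_batch_size": 8,  "p99_latency_ms": 12.0, "throughput_infer_per_s": 400.0},
    {"instances": 2, "max_batch_size": 8,  "p99_latency_ms": 15.0, "throughput_infer_per_s": 700.0},
    {"instances": 2, "max_batch_size": 32, "p99_latency_ms": 40.0, "throughput_infer_per_s": 1100.0},
]

best = pick_best_config(measurements, latency_budget_ms=20.0)
print(best)
```

With a 20 ms budget, the third record is excluded despite its higher throughput, and the second record is selected. Tightening or loosening the budget changes which configuration wins, which is why the latency goal should be fixed before profiling.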

Monitoring and Observability

You can’t optimize what you can’t measure. Triton exposes a rich set of performance metrics in the Prometheus format. These metrics include GPU utilization, memory usage, inference counts, and request latency. By scraping these metrics with Prometheus and visualizing them in Grafana, you can gain deep insights into your server’s performance and set up alerts for potential issues. This observability is critical for maintaining service-level agreements (SLAs) and complements experiment tracking platforms like MLflow and Weights & Biases.
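As a small illustration of what the scraped data looks like, the sketch below parses a fragment of Prometheus text output and derives an average request latency from two cumulative counters. The metric names `nv_inference_request_success` and `nv_inference_request_duration_us` are ones Triton exposes, but the sample values are made up, and the parser is a minimal assumption-laden helper that ignores the `version` label. In practice Prometheus itself would do the scraping and aggregation.

```python
import re

# Made-up sample in Prometheus text format; values are illustrative only.
SAMPLE_METRICS = """\
# HELP nv_inference_request_success Number of successful inference requests
# TYPE nv_inference_request_success counter
nv_inference_request_success{model="sentiment_analyzer",version="1"} 200
# TYPE nv_inference_request_duration_us counter
nv_inference_request_duration_us{model="sentiment_analyzer",version="1"} 1500000
"""

LINE_RE = re.compile(r'^(\w+)\{([^}]*)\}\s+([0-9.eE+-]+)$')


def parse_metrics(text):
    """Map (metric_name, model) to a float value, skipping comment lines."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = LINE_RE.match(line)
        if not match:
            continue
        name, raw_labels, value = match.groups()
        labels = dict(re.findall(r'(\w+)="([^"]*)"', raw_labels))
        # Simplification: key by model only, ignoring the version label.
        samples[(name, labels.get("model"))] = float(value)
    return samples


def average_request_latency_ms(samples, model):
    """Cumulative request duration divided by successful request count."""
    count = samples[("nv_inference_request_success", model)]
    total_us = samples[("nv_inference_request_duration_us", model)]
    return total_us / count / 1000.0 if count else 0.0


samples = parse_metrics(SAMPLE_METRICS)
print(average_request_latency_ms(samples, "sentiment_analyzer"))  # 7.5
```

Because both metrics are counters that only grow, a dashboard would normally compute this ratio over a time window (the difference between two scrapes) rather than over the server's whole lifetime.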

Integration with Orchestration Tools

In large-scale deployments, Triton is typically run within containers and managed by an orchestrator like Kubernetes. This allows for automatic scaling, rolling updates, and high availability. Many cloud providers offer managed Kubernetes services that make this process even easier. For complex AI pipelines, such as Retrieval-Augmented Generation (RAG) systems that might use vector databases like Pinecone or Milvus, Triton can serve the embedding and generation models as scalable microservices within the Kubernetes cluster.

Conclusion: The Future of AI Inference

NVIDIA Triton Inference Server has firmly established itself as a critical component of the modern AI stack. Its ability to handle a diverse range of frameworks, from TensorFlow and PyTorch to specialized backends for tree-based models and LLMs, makes it an incredibly versatile and powerful tool. The continuous addition of features like the FIL backend and advanced LLM serving capabilities demonstrates a commitment to addressing the most pressing challenges in production AI.

For data scientists and MLOps engineers, mastering Triton means unlocking the ability to deploy any model with confidence, knowing it will be served with optimal performance and efficiency. As AI models continue to grow in complexity and scale, solutions like Triton will not just be beneficial; they will be essential. The ongoing developments promise even tighter integrations with the broader ecosystem, from data processing frameworks like Ray and Apache Spark MLlib to LLM orchestration tools like LangChain, solidifying Triton’s role as the engine for production AI.