DataRobot’s Strategic Leap into Agentic AI: Unpacking the Future of Intelligent Application Development

The artificial intelligence landscape is undergoing a monumental shift. For years, the primary focus of enterprise AI has been on predictive modeling: building systems that could forecast sales, detect fraud, or classify customer sentiment. While incredibly valuable, this paradigm is evolving. The industry is now rapidly moving towards a new frontier: Agentic AI. This new class of AI systems doesn’t just predict; it reasons, plans, and acts autonomously to accomplish complex, multi-step goals. This transition marks a significant leap from passive data analysis to active problem-solving, and major players in the AI platform space are making strategic moves to lead this charge. Recent DataRobot announcements signal a clear focus on empowering developers to build these sophisticated, next-generation AI applications by integrating powerful orchestration capabilities directly into the AI lifecycle.

The Rise of Agentic AI: From Prediction to Action

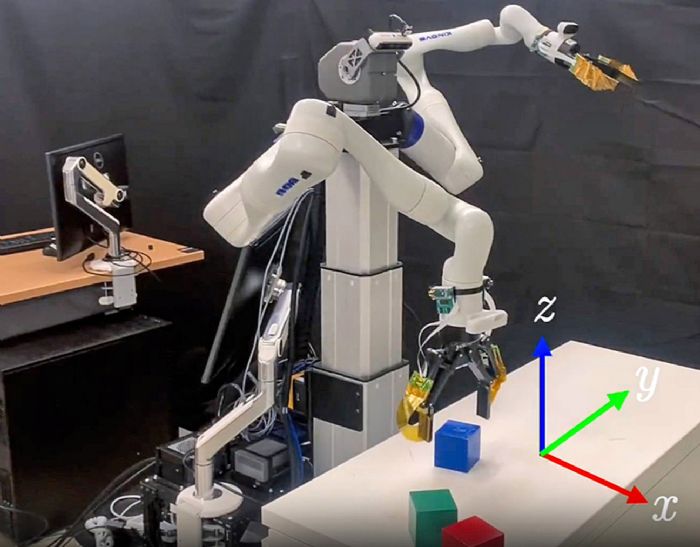

At its core, Agentic AI represents a fundamental change in how we interact with and leverage artificial intelligence. Instead of a single model providing a single output, an AI agent is a system designed to achieve a goal by intelligently sequencing a series of actions. This requires a sophisticated architecture that goes far beyond traditional machine learning.

What is an AI Agent?

An AI agent typically consists of several key components:

- The “Brain”: A Large Language Model (LLM) serves as the central reasoning engine. This could be a proprietary model from OpenAI or Anthropic, or an open-weight model from Mistral AI or Meta.

- Tools: These are the functions or capabilities the agent can use to interact with the world. Tools can range from simple API calls (e.g., a weather API) and database queries to executing code or running complex computational simulations.

- Planning and Reasoning Loop: The agent uses a framework to break down a high-level goal into a sequence of steps. It observes the outcome of each step, reflects on the result, and decides on the next best action until the goal is achieved. Frameworks such as LangChain and LlamaIndex are widely used for building these reasoning loops.
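The three components can be illustrated without any framework. The sketch below is a minimal, hypothetical agent loop: `fake_llm` is a scripted stand-in for a real LLM (an assumption: it returns either a tool call or a final answer as a dict), and `get_weather` is a hypothetical tool that returns canned data. Only the control flow (reason, act, observe, repeat) is meant to carry over to real agents.

```python
from typing import Callable


# Hypothetical tool: returns canned data instead of calling a real weather API.
def get_weather(city: str) -> str:
    return f"Sunny, 22 C in {city}"


TOOLS: dict[str, Callable[[str], str]] = {"get_weather": get_weather}


def fake_llm(goal: str, history: list[tuple[str, str]]) -> dict:
    """Scripted stand-in for the LLM 'brain' (hypothetical).

    A real agent would send the goal and history to a model and parse its reply.
    Here it requests one tool call, then answers from the latest observation.
    """
    if not history:
        return {"action": "get_weather", "input": "Paris"}
    last_observation = history[-1][1]
    return {"final_answer": f"The weather report says: {last_observation}"}


def run_agent(goal: str, max_steps: int = 5) -> str:
    history: list[tuple[str, str]] = []  # (action, observation) pairs
    for _ in range(max_steps):
        decision = fake_llm(goal, history)        # 1. reason / plan
        if "final_answer" in decision:
            return decision["final_answer"]
        tool = TOOLS[decision["action"]]          # 2. act: pick the tool
        observation = tool(decision["input"])     # 3. observe its result
        history.append((decision["action"], observation))
    return "Stopped: step limit reached without a final answer."


print(run_agent("What is the weather in Paris?"))
```

The `max_steps` cap is a simple safeguard against reasoning loops that never converge on an answer.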

A Simple Agentic Workflow with LangChain

Building a basic agent is surprisingly accessible thanks to modern frameworks. Let’s look at a simple example using LangChain that equips an LLM with a tool to search the web. This demonstrates the core principle of augmenting an LLM’s knowledge with external, real-time information.

```python
# First, ensure you have the necessary libraries installed:
# pip install langchain langchain-openai duckduckgo-search

import os

from langchain_openai import ChatOpenAI
from langchain.agents import AgentExecutor, create_react_agent
from langchain_core.prompts import PromptTemplate
from langchain_core.tools import tool

# It's best practice to set your API key as an environment variable:
# os.environ["OPENAI_API_KEY"] = "your_openai_api_key"

# 1. Define the "Brain" of the agent
llm = ChatOpenAI(model="gpt-4-turbo-preview", temperature=0)


# 2. Define the "Tools" the agent can use
@tool
def search_web(query: str) -> str:
    """Searches the web for the given query using DuckDuckGo."""
    from duckduckgo_search import DDGS

    with DDGS() as ddgs:
        results = list(ddgs.text(query, max_results=5))
    return str(results) if results else "No results found."


tools = [search_web]

# 3. Create the prompt and reasoning logic (a standard ReAct-style prompt)
prompt_template = """
Answer the following questions as best you can. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!

Question: {input}
Thought:{agent_scratchpad}
"""

prompt = PromptTemplate.from_template(prompt_template)

# 4. Create the agent
agent = create_react_agent(llm, tools, prompt)
# handle_parsing_errors lets the executor recover if the LLM's reply
# does not follow the Thought/Action format exactly.
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True, handle_parsing_errors=True)

# 5. Run the agent
question = "What is the latest news regarding NVIDIA's AI chips?"
response = agent_executor.invoke({"input": question})

# invoke() returns a dict; the final answer is stored under the "output" key
print(response["output"])
```

In this example, the agent receives a question, recognizes that it needs up-to-date information, calls the `search_web` tool, processes the search results, and formulates a final answer. This simple pattern is the foundation of far more complex agentic systems.

Building Scalable and Efficient AI Agents

While the simple example is powerful, building enterprise-grade agents that can perform truly complex tasks introduces significant challenges in scalability, efficiency, and orchestration. An agent might need to do more than just search the web; it might need to run a financial model, process a large dataset, or execute a scientific simulation. These tasks are often computationally intensive and poorly suited to running inside the agent’s own process, where they would block the reasoning loop for minutes or hours.
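One common way to keep the agent responsive is to submit heavy work to a separate pool of workers, hand back a job ID immediately, and let the agent poll for the result later. The sketch below assumes a local thread pool stands in for a remote cluster; `JobManager` and `heavy_simulation` are hypothetical names used only for illustration.

```python
import time
import uuid
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Any, Callable, Optional


def heavy_simulation(n: int) -> int:
    """Hypothetical stand-in for an expensive job (e.g., a simulation)."""
    time.sleep(0.1)  # simulate work
    return sum(i * i for i in range(n))


class JobManager:
    """Runs jobs outside the agent's reasoning loop and tracks them by ID.

    A thread pool keeps this sketch portable; CPU-bound work would normally be
    sent to a process pool or a remote cluster instead.
    """

    def __init__(self, max_workers: int = 4) -> None:
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._jobs: dict[str, Future] = {}

    def submit(self, fn: Callable[..., Any], *args: Any) -> str:
        job_id = uuid.uuid4().hex[:8]
        self._jobs[job_id] = self._pool.submit(fn, *args)
        return job_id  # returned immediately, so the agent is not blocked

    def status(self, job_id: str) -> str:
        future = self._jobs[job_id]
        if not future.done():
            return "RUNNING"
        return "FAILED" if future.exception() else "COMPLETED"

    def result(self, job_id: str, timeout: Optional[float] = None) -> Any:
        return self._jobs[job_id].result(timeout=timeout)


manager = JobManager()
job = manager.submit(heavy_simulation, 1000)
print(job, manager.status(job))        # usually RUNNING right after submission
print(manager.result(job, timeout=5))  # blocks until the job finishes
```

An agent would expose `submit` and `status` as two separate tools, so that it can start a job, continue reasoning, and check back later.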

The Challenge of Hybrid Workflows

Modern AI applications often involve a mix of different computational paradigms. A single workflow might involve data preprocessing (often handled with a distributed framework such as Apache Spark MLlib), model training (using TensorFlow or PyTorch), and complex, non-ML calculations. Orchestrating these hybrid workflows is a major hurdle. This is where specialized workflow orchestration tools become critical, enabling developers to define, execute, and manage complex computational graphs that can span different environments, from a local machine to a powerful cloud cluster.
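At its core, such an orchestrator executes a dependency graph: each task runs only after the tasks whose outputs it consumes. The minimal sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) and runs every task in-process; the task names are hypothetical, and a real orchestrator would additionally route each task to its own compute backend.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable


# Hypothetical pipeline steps; each receives the outputs of its dependencies.
def load() -> list[int]:
    return [3, 1, 2]


def preprocess(raw: list[int]) -> list[int]:
    return sorted(raw)


def train(clean: list[int]) -> dict:
    return {"model": "mean", "value": sum(clean) / len(clean)}


def report(clean: list[int], model: dict) -> str:
    return f"{len(clean)} rows, model={model['model']}, value={model['value']:.2f}"


# task name -> (function, names of tasks whose outputs it needs, in argument order)
TASKS: dict[str, tuple[Callable[..., Any], list[str]]] = {
    "load": (load, []),
    "preprocess": (preprocess, ["load"]),
    "train": (train, ["preprocess"]),
    "report": (report, ["preprocess", "train"]),
}


def run_workflow(tasks: dict[str, tuple[Callable[..., Any], list[str]]]) -> dict[str, Any]:
    # graphlib expects a mapping of node -> set of predecessor nodes
    graph = {name: set(deps) for name, (_, deps) in tasks.items()}
    results: dict[str, Any] = {}
    for name in TopologicalSorter(graph).static_order():
        fn, deps = tasks[name]
        results[name] = fn(*(results[d] for d in deps))
    return results


print(run_workflow(TASKS)["report"])
```

`static_order()` runs tasks one at a time; `TopologicalSorter` also offers `prepare()`/`get_ready()`/`done()` for discovering which tasks could run in parallel at each point.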

Orchestrating Distributed Tasks with Covalent

One powerful open-source tool designed for this exact problem is Covalent. It allows developers to create Pythonic workflows that can seamlessly execute tasks across diverse hardware resources, including HPC clusters, cloud GPUs, and quantum computers. This capability matters for Agentic AI because it lets an agent’s “tools” be large, distributed computations rather than single function calls.

Here’s a conceptual example of how Covalent defines a distributed workflow. Tasks are defined as “electrons,” and a workflow that chains them together is a “lattice.”

```python
# First, ensure you have Covalent installed and its server running:
# pip install covalent
# covalent start

import random
import time

import covalent as ct


# Define computational tasks as "electrons".
# These can be configured to run on different backends (local, AWS, etc.)
@ct.electron
def process_large_dataset(data_path: str) -> dict:
    """A mock task that simulates heavy data processing."""
    print(f"Starting to process data from {data_path}...")
    time.sleep(5)  # Simulate work
    processed_data = {"processed_rows": random.randint(10000, 20000)}
    print("Data processing complete.")
    return processed_data


@ct.electron
def train_model(processed_data: dict) -> str:
    """A mock task that simulates model training on the processed data."""
    print(f"Starting model training with {processed_data['processed_rows']} rows...")
    time.sleep(10)  # Simulate training time
    model_id = f"model_{random.randint(1000, 9999)}"
    print(f"Model training complete. Model ID: {model_id}")
    return model_id


# Define the workflow that connects the tasks as a "lattice"
@ct.lattice
def data_processing_and_training_workflow(data_path: str):
    """This workflow orchestrates the processing and training tasks."""
    processed_data_result = process_large_dataset(data_path=data_path)
    model_id_result = train_model(processed_data=processed_data_result)
    return model_id_result


# Dispatch the workflow for execution.
# Covalent's dispatcher sends it to the configured compute backend and
# returns a dispatch ID immediately, without waiting for the tasks to finish.
dispatch_id = ct.dispatch(data_processing_and_training_workflow)(data_path="/path/to/large/dataset.csv")
print(f"Workflow dispatched with ID: {dispatch_id}")

# You can then check the status and retrieve the result using the Covalent UI or API.
# result = ct.get_result(dispatch_id=dispatch_id, wait=True)
# print(result)
```

By abstracting away the execution backend, developers can focus on the logic of their workflow, making it possible to create highly scalable and powerful tools for their AI agents.

Advanced Agentic Architectures and Tool Integration

The true power of Agentic AI is realized when we combine the reasoning capabilities of LLMs with the distributed execution power of workflow orchestrators. This fusion allows us to build agents that can tackle problems beyond the reach of a single, monolithic application.

Integrating Complex Workflows as Agent Tools

Imagine an agent designed for a financial analyst. A user might ask, “Run a Monte Carlo simulation on our new portfolio strategy using the latest market data and generate a risk report.” A simple agent cannot handle this. However, an agent equipped with a Covalent-powered tool can.

The agent would recognize the need to run a simulation, trigger the corresponding Covalent workflow as its “tool,” and then either wait for the result or receive a tracking ID it can check later. The workflow itself could spin up thousands of parallel jobs on a cloud cluster, process terabytes of data, and return a concise result (like a report ID) to the agent. The agent then presents this result to the user. Here’s how you might conceptually define such a tool for a LangChain agent.

```python
# This is a conceptual example of integrating a Covalent workflow into a LangChain agent.
# (Assumes the Covalent workflow from the previous example is defined elsewhere)

import covalent as ct
from langchain_core.tools import tool

from my_workflows import data_processing_and_training_workflow  # Fictional import


@tool
def run_heavy_computation(data_path: str) -> str:
    """
    Use this tool to run a heavy data processing and model training workflow.
    The input should be the path to the dataset.
    The tool will return a dispatch ID for tracking the job's progress.
    """
    print(f"Handing off heavy computation for {data_path} to the Covalent dispatcher.")
    # Dispatch the pre-defined Covalent lattice
    dispatch_id = ct.dispatch(data_processing_and_training_workflow)(data_path=data_path)
    return f"Workflow has been dispatched successfully. The tracking ID is: {dispatch_id}"


# This new `run_heavy_computation` tool can now be added to the `tools` list
# of the LangChain agent from the first example. The agent can now decide
# when to delegate complex, long-running tasks to a more robust backend.
#
# tools = [search_web, run_heavy_computation]
# ... create and run agent as before ...
```
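To make the financial scenario concrete, here is the kind of computation such a tool might hand off: a Monte Carlo estimate of one-day value-at-risk (VaR) for a two-asset portfolio. Everything in it is an illustrative assumption: the function name `monte_carlo_var`, the weights, the mean and volatility figures, and the model of independent, normally distributed daily returns (correlation is ignored to keep it short). In a real deployment, the simulation loop is what an orchestrator would fan out across many parallel workers.

```python
import random


def monte_carlo_var(
    weights: list[float],
    mus: list[float],
    sigmas: list[float],
    n_sims: int = 100_000,
    confidence: float = 0.95,
    seed: int = 42,
) -> float:
    """Estimate one-day VaR as a positive loss fraction of portfolio value.

    Assumes independent, normally distributed daily asset returns (hypothetical model).
    """
    rng = random.Random(seed)  # seeded for reproducible results
    portfolio_returns = []
    for _ in range(n_sims):
        # One simulated day: weighted sum of each asset's random return
        r = sum(w * rng.gauss(mu, s) for w, mu, s in zip(weights, mus, sigmas))
        portfolio_returns.append(r)
    portfolio_returns.sort()
    # The (1 - confidence) quantile of returns is the loss exceeded only
    # on the worst (1 - confidence) share of simulated days.
    cutoff = portfolio_returns[int((1 - confidence) * n_sims)]
    return -cutoff


# Illustrative inputs (assumptions, not market data)
var_95 = monte_carlo_var(weights=[0.6, 0.4], mus=[0.0005, 0.0003], sigmas=[0.02, 0.01])
print(f"95% one-day VaR: {var_95:.2%} of portfolio value")
```

A single figure like this, or a report ID pointing to a fuller risk report, is the concise result the agent would relay back to the user.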

Connecting to Enterprise Data with RAG

For agents to be truly useful in an enterprise context, they need access to proprietary, up-to-date information. This is often achieved through Retrieval-Augmented Generation (RAG). RAG systems connect LLMs to external knowledge bases, most commonly vector databases such as Pinecone and Milvus, which store embeddings of documents and retrieve the passages most semantically similar to a query. An agent can use a RAG pipeline as a tool to query internal documents, customer support tickets, or product specifications, grounding its responses in accurate, contextually relevant information. Integrating these systems securely is a key focus of enterprise platforms like AWS SageMaker and Google’s Vertex AI.
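The retrieval step of a RAG pipeline can be sketched without a vector database. In this minimal, deliberately simplified version, bag-of-words term counts stand in for learned embeddings, an in-memory list stands in for a store like Pinecone or Milvus, and the documents are hypothetical; the top-ranked passages are then inserted into the prompt that would be sent to the LLM.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents; a real system would store learned embeddings.
DOCS = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "The X200 router supports WPA3 and has four gigabit Ethernet ports.",
    "Support tickets marked urgent are answered within 4 business hours.",
]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# "Index" the documents once, as a vector database would at ingestion time
INDEX = [(doc, embed(doc)) for doc in DOCS]


def retrieve(query: str, k: int = 1) -> list[str]:
    q = embed(query)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def build_prompt(query: str) -> str:
    context = "\n".join(retrieve(query))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("How many Ethernet ports does the X200 router have?"))
```

Swapping `embed` for a real embedding model and `INDEX` for a vector database client changes the quality and scale of retrieval, but not the shape of the pipeline.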

Best Practices, MLOps, and the Evolving AI Stack

As Agentic AI systems move from prototypes to production, a new set of challenges around MLOps (Machine Learning Operations) emerges. Managing an agent is far more complex than managing a single model.

MLOps for Agentic AI

The MLOps lifecycle for agents requires new tools and practices:

- Observability and Tracing: Understanding why an agent made a particular decision is crucial for debugging and improvement. Tools such as LangSmith are becoming essential for tracing the chain of reasoning steps and tool calls within an agent.

- Experiment Tracking: The performance of an agent depends on the LLM, the prompt, the tools, and the reasoning strategy. Platforms such as MLflow and Weights & Biases are adapting to track these complex, multi-component experiments.

- Evaluation: How do you measure if an agent is “good”? Evaluation is non-trivial and often requires creating complex benchmarks and human-in-the-loop validation processes.
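The core idea behind agent tracing can be shown with a small decorator that records every tool call (name, arguments, result or error, latency) in an in-memory trace. This is a hypothetical, framework-free sketch; tracing tools such as LangSmith capture richer, nested traces (including the LLM calls themselves) and send them to a backend rather than a Python list.

```python
import functools
import time
from typing import Any, Callable

TRACE: list[dict[str, Any]] = []  # in-memory stand-in for a tracing backend


def traced(fn: Callable) -> Callable:
    """Record each call's name, inputs, output (or error) and duration."""

    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        record: dict[str, Any] = {"tool": fn.__name__, "args": args, "kwargs": kwargs}
        try:
            record["output"] = fn(*args, **kwargs)
            return record["output"]
        except Exception as exc:
            record["error"] = repr(exc)
            raise  # tracing must not swallow the tool's failure
        finally:
            record["seconds"] = time.perf_counter() - start
            TRACE.append(record)

    return wrapper


@traced
def lookup_price(ticker: str) -> float:
    prices = {"ACME": 12.5}  # hypothetical data
    return prices[ticker]


lookup_price("ACME")
try:
    lookup_price("NOPE")
except KeyError:
    pass

for step in TRACE:
    print(step["tool"], step["args"], step.get("output", step.get("error")))
```

Recording failures as well as successes is what makes a trace useful for debugging: the failed `NOPE` lookup shows up with its error instead of silently disappearing.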

Optimizing Performance and Cost

Agentic systems can be expensive, as each step in the reasoning loop may involve a call to a powerful LLM. Optimizing performance and cost is therefore critical. Common techniques include model quantization, exporting models to efficient, portable formats such as ONNX, and deploying on high-performance inference servers; NVIDIA’s TensorRT and Triton Inference Server, for example, are designed to accelerate inference. Furthermore, using smaller, fine-tuned models for specific tasks within the agent’s toolkit can substantially reduce latency and cost.
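To illustrate what quantization does, the sketch below applies symmetric, per-tensor int8 quantization to a handful of weights: each float is mapped to an integer in [-127, 127] using a single scale factor, cutting storage from 4 bytes (float32) to 1 byte per weight at the cost of a bounded rounding error. It is a simplified, hypothetical illustration in pure Python; production toolchains typically use more refined schemes (for example per-channel scales and calibration data).

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: q = round(w / scale), scale = max|w| / 127."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    # Clamp to the symmetric int8 range in case of floating-point edge cases
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9]  # hypothetical weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale)
# Rounding to the nearest integer bounds the per-weight error by scale / 2
print(f"max abs error {max_error:.4f} (bound: scale / 2 = {scale / 2:.4f})")
```

Small weights such as `0.003` collapse to zero, which is why real toolchains check accuracy on validation data after quantizing.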

The Future of the Integrated AI Platform

The rise of Agentic AI is driving a convergence in the AI development stack. It’s no longer enough for a platform to simply offer model training or deployment. The future belongs to integrated platforms that provide a seamless experience for the entire AI application lifecycle. This includes data management, model development (including AutoML), workflow orchestration, agentic framework integration, MLOps, and governance. The strategic direction indicated by recent DataRobot announcements points towards building exactly this type of unified platform, where developers can move from a business idea to a fully operational, scalable, and governed AI agent within a single environment, rivaling the end-to-end capabilities of offerings like Azure Machine Learning and Snowflake Cortex.

Conclusion: The Dawn of Action-Oriented AI

The industry is at an inflection point. The transition from predictive AI to action-oriented, Agentic AI is well underway, promising to unlock unprecedented value by automating complex processes and augmenting human capabilities. Building these systems at scale, however, requires a new paradigm of tools—ones that fuse the reasoning power of LLMs with the scalable, distributed computing power of modern workflow orchestrators.

The strategic consolidation happening in the market underscores this reality. By integrating sophisticated workflow orchestration directly into the core AI platform, companies are creating a powerful, unified environment for developing, deploying, and managing the next generation of AI applications. For developers and data scientists, this means fewer infrastructural headaches and more time spent building innovative solutions that can truly reason, plan, and act in the world.