Ray News: A Deep Dive into Scaling AI and Python Workloads

The artificial intelligence landscape is evolving at an unprecedented pace. The rise of foundation models, large language models (LLMs), and complex data processing pipelines has pushed single-machine computation to its limits. Developers and data scientists now face the critical challenge of efficiently scaling their workloads from a laptop to a large cluster. This is where Ray, an open-source unified compute framework, has emerged as a transformative solution. It simplifies the complex task of building and running distributed applications, making it an essential tool for modern AI development.

This article provides a comprehensive overview of Ray, exploring its core concepts, practical implementations for machine learning, advanced architectural patterns, and optimization best practices. We will delve into how Ray and its ecosystem of libraries, known as the Ray AI Runtime (AIR), are empowering developers to tackle the next generation of computational challenges. Whether you’re following the latest Ray news, tracking distributed training developments in the PyTorch and TensorFlow communities, or scaling complex applications built with LangChain, understanding Ray is crucial for staying at the forefront of the AI revolution.

Understanding the Core of Ray: Tasks and Actors

At its heart, Ray is designed to solve one of Python’s most significant limitations in high-performance computing: the Global Interpreter Lock (GIL), which prevents true multi-threaded parallelism by allowing only one thread per process to execute Python bytecode at a time. Ray sidesteps this by distributing work across multiple processes, each with its own interpreter and GIL, and even across multiple machines, while providing a simple and intuitive API to parallelize existing Python code.

Stateless Parallelism with Ray Tasks

The most fundamental concept in Ray is the “Task.” A Ray Task is simply a Python function that is executed asynchronously on a remote worker process. You can convert any standard Python function into a Ray Task by applying the @ray.remote decorator. This allows you to instantly parallelize computations with minimal code changes.

When you invoke a remote function using .remote(), it immediately returns an ObjectRef, a future that points to the eventual result. The actual execution happens in the background on the Ray cluster. To retrieve the results, you call ray.get(), which blocks until the referenced computations are complete. This asynchronous pattern is well suited to executing independent computations in parallel, such as data preprocessing, batch inference, or simulations.

    import time

    import ray
    import requests

    # Initialize Ray. If running on a laptop, this starts a local "cluster".
    # On a multi-node setup, you would connect to an existing cluster address.
    if ray.is_initialized():
        ray.shutdown()
    ray.init()

    # A regular Python function to fetch a URL's status
    def get_url_status(url):
        try:
            response = requests.get(url, timeout=5)
            return url, response.status_code
        except requests.exceptions.RequestException as e:
            return url, str(e)

    # Use the @ray.remote decorator to turn it into a Ray Task
    @ray.remote
    def get_url_status_remote(url):
        return get_url_status(url)

    urls = [
        "https://www.google.com",
        "https://www.github.com",
        "https://www.ray.io",
        "https://www.openai.com",
        "https://this-url-does-not-exist.xyz",
        "https://www.meta.com",
    ]

    # Sequentially (slow)
    start_time_seq = time.time()
    sequential_results = [get_url_status(url) for url in urls]
    duration_seq = time.time() - start_time_seq
    print(f"Sequential execution took: {duration_seq:.2f} seconds")
    print(sequential_results)

    # In parallel with Ray (fast)
    start_time_parallel = time.time()

    # Launch all tasks asynchronously. This returns a list of ObjectRefs immediately.
    futures = [get_url_status_remote.remote(url) for url in urls]

    # Block and retrieve the results
    parallel_results = ray.get(futures)
    duration_parallel = time.time() - start_time_parallel
    print(f"\nParallel execution with Ray took: {duration_parallel:.2f} seconds")
    print(parallel_results)

    ray.shutdown()

Stateful Computation with Ray Actors

While tasks are ideal for stateless computations, many applications require maintaining state between calls. For this, Ray provides “Actors.” An Actor is a stateful worker created from a Python class decorated with @ray.remote. Each actor runs in its own process, encapsulating its state and methods. You call its methods remotely with .remote(), and by default these calls are executed one at a time on the actor, so its state can be updated without explicit locks. Actors are well suited to implementing parameter servers, simulation environments, or serving a machine learning model where loading the model into memory is a one-time cost.

Implementing Distributed ML with the Ray AI Runtime (AIR)

Ray AIR is a unified, scalable toolkit designed specifically for end-to-end machine learning workloads (recent Ray releases refer to the same set of libraries as the Ray AI Libraries). It integrates several libraries that streamline data loading, training, tuning, and serving, which makes it a central topic in recent Ray news.

Ray Data and Ray Train: Scaling Data and Model Training

Ray Data provides a standard way to load and transform large datasets that may not fit in a single machine’s memory. It can read from various sources (like S3, Parquet, or Snowflake) and perform distributed transformations, seamlessly integrating with the rest of the Ray ecosystem.

Ray Train simplifies the process of distributed model training. It provides lightweight wrappers for popular frameworks, allowing you to scale your existing training code with minimal changes. This is good news for developers working with Hugging Face Transformers or DeepSpeed, as Ray Train offers built-in integrations for these libraries. It handles the complexities of setting up distributed communication, checkpointing, and synchronizing with experiment tracking tools such as MLflow or Weights & Biases.

Here is a practical example of distributed training using Ray Train with PyTorch.

    import ray
    import torch
    import torch.nn as nn
    from torch.utils.data import DataLoader, TensorDataset
    from ray.train.torch import TorchTrainer
    from ray.train import ScalingConfig, RunConfig

    # Ensure Ray is initialized
    if ray.is_initialized():
        ray.shutdown()
    ray.init()

    # 1. Define the training logic in a function
    def train_func(config: dict):
        # Model, optimizer, and loss function
        model = nn.Linear(config["input_features"], 1)
        optimizer = torch.optim.SGD(model.parameters(), lr=config["lr"])
        loss_fn = nn.MSELoss()

        # Prepare model for distributed training
        model = ray.train.torch.prepare_model(model)

        # Create dummy data and DataLoader
        inputs = torch.randn(config["batch_size"] * 10, config["input_features"])
        labels = torch.randn(config["batch_size"] * 10, 1)
        dataset = TensorDataset(inputs, labels)
        dataloader = DataLoader(dataset, batch_size=config["batch_size"])
        dataloader = ray.train.torch.prepare_data_loader(dataloader)

        # Training loop
        for epoch in range(config["epochs"]):
            for batch_inputs, batch_labels in dataloader:
                optimizer.zero_grad()
                outputs = model(batch_inputs)
                loss = loss_fn(outputs, batch_labels)
                loss.backward()
                optimizer.step()

            # Report metrics back to Ray Train once per epoch
            # (the loss is that of the last batch in the epoch)
            metrics = {"loss": loss.item(), "epoch": epoch}
            ray.train.report(metrics)
            print(f"Worker {ray.train.get_context().get_world_rank()}: Epoch {epoch}, Loss: {loss.item()}")

    # 2. Configure the Trainer
    # This configuration will use 2 workers, each with a GPU if available.
    scaling_config = ScalingConfig(num_workers=2, use_gpu=torch.cuda.is_available())

    # Configuration for the training function
    train_config = {
        "lr": 1e-2,
        "input_features": 10,
        "batch_size": 32,
        "epochs": 5,
    }

    # 3. Initialize and run the TorchTrainer
    trainer = TorchTrainer(
        train_loop_per_worker=train_func,
        train_loop_config=train_config,
        scaling_config=scaling_config,
        run_config=RunConfig(name="my_torch_training_job"),
    )

    result = trainer.fit()
    print(f"Training finished. Final reported metrics: {result.metrics}")

    ray.shutdown()

Advanced Patterns: Scalable Inference and LLM Pipelines

Beyond training, Ray excels at productionizing models through Ray Serve, its scalable model serving library. Unlike simple model servers built with Flask or FastAPI, Ray Serve is designed for high-performance, distributed inference and can compose multiple models into a single, flexible deployment graph.

Composing Models with Ray Serve

A common real-world scenario involves chaining multiple models together—for instance, a language detection model followed by a translation model. Ray Serve makes this trivial to implement and scale. Each model can be deployed as a separate component, and Ray handles the routing, batching, and scaling of each component independently.

This is particularly relevant for the LLM era. You can build complex pipelines for retrieval-augmented generation (RAG) where one Ray deployment handles document embedding (using models from Sentence Transformers), another queries a vector database like Pinecone or Milvus, and a final deployment feeds the context to an LLM from Hugging Face or OpenAI. Ray can even manage highly optimized inference servers like vLLM for maximum throughput.

    import ray
    from ray import serve
    from starlette.requests import Request

    # Initialize Ray Serve
    if ray.is_initialized():
        ray.shutdown()
    ray.init()
    serve.start()

    # Define a simple "model" that adds a prefix
    @serve.deployment(num_replicas=2)
    class Greeter:
        def __init__(self, prefix: str):
            self._prefix = prefix
            print(f"Greeter initialized with prefix: '{self._prefix}'")

        def __call__(self, name: str) -> str:
            return f"{self._prefix}, {name}!"

    # Define a deployment that composes two Greeter models.
    # Note: recent Ray releases take route_prefix in serve.run() rather than
    # in @serve.deployment, so the route is set when the app is deployed below.
    @serve.deployment
    class CompositionPipeline:
        def __init__(self, english_greeter, spanish_greeter):
            self._english_greeter = english_greeter
            self._spanish_greeter = spanish_greeter

        async def __call__(self, request: Request) -> dict:
            name = request.query_params.get("name", "World")

            # Call the other deployments by their handles; both calls start
            # immediately and run concurrently
            english_response = self._english_greeter.remote(name)
            spanish_response = self._spanish_greeter.remote(name)

            # Await the results
            english_result = await english_response
            spanish_result = await spanish_response
            return {"english": english_result, "spanish": spanish_result}

    # Bind the deployments together
    # This creates two separate Greeter deployments and one CompositionPipeline
    # that knows how to call them.
    english_app = Greeter.bind(prefix="Hello")
    spanish_app = Greeter.bind(prefix="Hola")
    pipeline_app = CompositionPipeline.bind(english_greeter=english_app, spanish_greeter=spanish_app)

    # Deploy the final application graph under the /pipeline route
    serve.run(pipeline_app, route_prefix="/pipeline")

    # You can now query this endpoint:
    # import requests
    # response = requests.get("http://127.0.0.1:8000/pipeline?name=Ray")
    # print(response.json())
    # Expected output: {'english': 'Hello, Ray!', 'spanish': 'Hola, Ray!'}

    # To stop the deployment:
    # serve.shutdown()
    # ray.shutdown()

Best Practices, Optimization, and Ecosystem Integration

To effectively use Ray, it’s essential to understand its resource management and the broader ecosystem in which it operates. Adhering to best practices ensures your applications are both performant and resilient.

Resource Management and Optimization

- Specify Resources: Always specify resource requirements (e.g., @ray.remote(num_cpus=2, num_gpus=0.5)) for tasks and actors. This allows Ray’s scheduler to make intelligent placement decisions and maximize cluster utilization.
- Understand the Object Store: Ray uses a high-performance, in-memory object store called Plasma to share data between workers efficiently. Avoid passing the same large data object directly as an argument to many remote calls, because each call serializes and stores its own copy. Instead, use ray.put() to place the object in the store once and pass the resulting reference.
- Use the Ray Dashboard: The Ray Dashboard is an indispensable tool for debugging and monitoring your cluster. It provides a real-time view of resource usage, running tasks, actors, and logs, helping you identify performance bottlenecks.

Integration with Cloud and MLOps Platforms

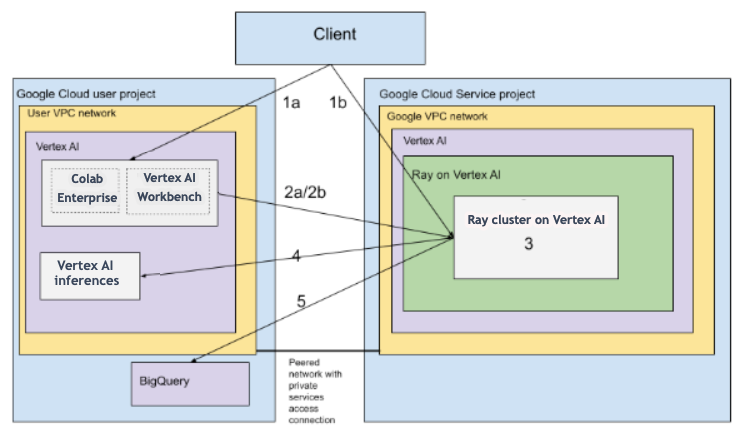

Ray is designed to be cloud-native. KubeRay is the official project for deploying and managing Ray clusters on Kubernetes. Ray can also be run on managed cloud ML platforms such as AWS SageMaker, Azure Machine Learning, or Google’s Vertex AI, so you can use familiar cloud environments for managing large-scale Ray deployments. Coverage of Azure AI and Amazon Bedrock often highlights how distributed compute frameworks like Ray help power generative AI services.

Conclusion: The Future of Scalable AI is Distributed

Ray has firmly established itself as a critical framework for building the next generation of AI applications. By providing a simple, unified API for distributed computing, it empowers developers to move beyond the constraints of a single machine and tackle problems at an unprecedented scale. From parallelizing simple Python functions with Tasks and managing state with Actors to orchestrating complex end-to-end machine learning pipelines with Ray AIR, the framework offers a comprehensive solution for modern computational challenges.

As models continue to grow and AI systems become more complex, the principles of distributed computing are no longer optional. Recent Ray news points to a strong focus on improving performance, deepening integrations with tools like vLLM and LangChain, and simplifying the developer experience. For anyone serious about building scalable, production-ready AI, mastering Ray is a crucial step toward future-proofing their skills and applications.