AWS SageMaker News: A Deep Dive into Deploying and Customizing New Open-Weight AI Models

The artificial intelligence landscape is evolving at an unprecedented pace, marked by the proliferation of powerful, large-scale models. For developers and enterprises, the central challenge has shifted from merely accessing AI to strategically selecting and deploying the right model for the right task. The latest AWS SageMaker News signals a significant leap forward in this paradigm, with the platform aggressively expanding its model catalog to include a new wave of highly capable open-weight models. This move democratizes access to state-of-the-art AI, including models from prominent research labs like OpenAI, enabling builders to harness advanced reasoning and chain-of-thought capabilities directly within the secure and scalable AWS ecosystem.

This expansion is more than just adding new names to a list; it represents a fundamental enhancement of choice and flexibility. Whether you’re building sophisticated agentic systems, domain-specific chatbots, or complex data analysis pipelines, the ability to experiment with and deploy diverse architectures is paramount. This article provides a comprehensive technical guide for navigating this new frontier. We will explore how to discover, deploy, and invoke these new models using AWS SageMaker, dive into advanced techniques like fine-tuning and Retrieval-Augmented Generation (RAG), and cover best practices for optimization and MLOps integration.

The New Frontier: Integrating Powerful Open Models into AWS SageMaker

The integration of cutting-edge open-weight models into AWS SageMaker marks a pivotal moment for the AI community. It bridges the gap between groundbreaking research from labs such as OpenAI and Meta AI and practical, enterprise-grade application development. This allows developers to move beyond proprietary, black-box APIs and gain deeper control over the model lifecycle.

SageMaker JumpStart vs. Direct Deployment

AWS offers two primary pathways for leveraging these models within SageMaker, each catering to different needs for control and convenience:

- SageMaker JumpStart: This is the streamlined, “easy button” approach. JumpStart provides a curated collection of pre-trained models, including many from the Hugging Face Hub, that can be deployed with a single click in the UI or a few lines of code. It handles the complexities of containerization, instance configuration, and endpoint creation, making it ideal for rapid prototyping and straightforward deployments.

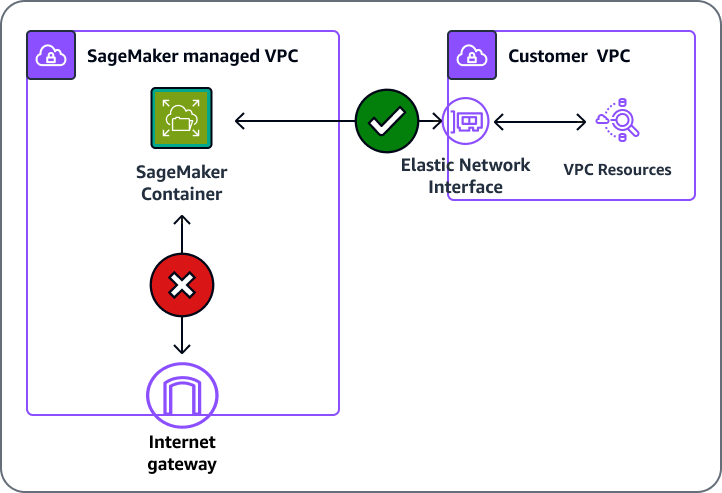

- Direct Deployment: For maximum control, developers can opt for direct deployment. This involves using a custom Docker container or, more commonly, leveraging SageMaker’s Deep Learning Containers (DLCs) for frameworks like PyTorch or TensorFlow. This path is suitable when you need to customize the inference environment, package specific dependencies, or deploy a model not yet available in JumpStart.
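To give a rough sense of what direct deployment involves, the sketch below outlines the handler functions that SageMaker's PyTorch inference containers conventionally look for in a user-supplied script: model_fn, input_fn, predict_fn, and output_fn. The EchoModel class is a hypothetical stand-in for a real model, and the "inputs" and "generated_text" payload keys are assumptions made for illustration; a real script would load actual weights from model_dir.

```python
import json


class EchoModel:
    """Hypothetical stand-in for a real model; it simply upper-cases its input."""

    def generate(self, text: str) -> str:
        return text.upper()


def model_fn(model_dir: str) -> EchoModel:
    # A real script would load weights from model_dir here.
    return EchoModel()


def input_fn(request_body, request_content_type: str) -> str:
    # Accept only JSON requests and pull out the prompt text.
    if request_content_type != "application/json":
        raise ValueError(f"Unsupported content type: {request_content_type}")
    return json.loads(request_body)["inputs"]


def predict_fn(input_data: str, model: EchoModel) -> dict:
    # Run the (stand-in) model on the parsed input.
    return {"generated_text": model.generate(input_data)}


def output_fn(prediction: dict, accept: str) -> str:
    # Serialize the prediction back to JSON for the caller.
    return json.dumps(prediction)


if __name__ == "__main__":
    # Local smoke test that chains the handlers the way the container would.
    model = model_fn("/opt/ml/model")
    body = json.dumps({"inputs": "hello sagemaker"})
    parsed = input_fn(body, "application/json")
    print(output_fn(predict_fn(parsed, model), "application/json"))
```

Chaining the handlers locally like this is a cheap way to catch serialization mistakes before paying for an endpoint.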

Why These Models Matter: Advanced Reasoning and Chain-of-Thought

The excitement surrounding these new models stems from their sophisticated capabilities, particularly in “advanced reasoning.” Unlike earlier models that excelled at pattern matching and text generation, these newer architectures can perform multi-step reasoning. A key technique enabling this is Chain-of-Thought (CoT) prompting. When presented with a complex problem, a CoT-capable model doesn’t just output the final answer; it articulates the intermediate steps it took to arrive at that conclusion. This transparency is invaluable for debugging, building trust, and tackling problems that require logical deduction, mathematical calculations, or planning.

For instance, when asked, “If a project starts on Monday and takes 10 working days, but there is a public holiday on the first Friday, what day does it finish?” a CoT-enabled model might respond by breaking it down: “1. Start Day: Monday. 2. A holiday on Friday means the first week has only 4 working days. 3. Remaining days: 10 – 4 = 6 days. 4. The second week will use 5 working days (Monday-Friday). 5. Remaining days: 6 – 5 = 1 day. 6. The final day will be the Monday of the third week. Therefore, the project finishes on a Monday.” This step-by-step output is a hallmark of advanced reasoning.
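The arithmetic in that chain of thought can be checked mechanically. The sketch below is a minimal verification, assuming an arbitrary calendar in which the project starts on Monday 2024-01-01 and the first Friday (2024-01-05) is the holiday; the helper name finish_date is made up for this example.

```python
from datetime import date, timedelta


def finish_date(start: date, working_days: int, holidays: set) -> date:
    """Return the date on which the last of `working_days` working days falls."""
    if working_days < 1:
        raise ValueError("working_days must be at least 1")
    current = start
    remaining = working_days
    while True:
        # Count the day only if it is a weekday (Mon=0 .. Fri=4) and not a holiday.
        if current.weekday() < 5 and current not in holidays:
            remaining -= 1
            if remaining == 0:
                return current
        current += timedelta(days=1)


# Assumed calendar for illustration only.
start = date(2024, 1, 1)      # a Monday
holiday = date(2024, 1, 5)    # the first Friday
end = finish_date(start, 10, {holiday})
print(end.strftime("%A %Y-%m-%d"))
```

Under these assumptions the tenth working day falls on the Monday of the third week, which agrees with the model's step-by-step answer.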

import sagemaker
from sagemaker.jumpstart.model import JumpStartModel

# Initialize a SageMaker session
sagemaker_session = sagemaker.Session()

# Example: Discovering a specific model in JumpStart
# The ID below is a placeholder; replace it with the actual ID shown
# in the SageMaker JumpStart catalog for the open-weight model you want.
model_id = "huggingface-llm-openai-community-gpt-oss-120b"

try:
    # This will fetch the model details if it exists in JumpStart
    my_model = JumpStartModel(model_id=model_id, sagemaker_session=sagemaker_session)
    print(f"Successfully found model: {my_model.model_id}")
    print(f"Default instance type: {my_model.instance_type}")
    print(f"Model Docker Image: {my_model.image_uri}")
except Exception as e:
    print(f"Could not find model with ID '{model_id}'. Please check the SageMaker JumpStart catalog.")
    print(e)

Step-by-Step Guide: Deploying and Invoking a New Open Model

Moving from theory to practice, let’s walk through the end-to-end process of deploying one of these new models and running inference against it. We will use the SageMaker Python SDK and the JumpStart pathway for its simplicity and robustness.

Setting Up Your SageMaker Environment

Before running any code, ensure your environment is correctly configured. This involves:

- An active AWS account with appropriate permissions.

- An IAM Role with policies that grant SageMaker access to other AWS services like S3 and ECR.

- The AWS CLI configured and the SageMaker Python SDK installed (pip install sagemaker --upgrade).

This code is typically run from a SageMaker Studio Notebook, an EC2 instance, or your local machine with proper AWS credentials.

Deploying a Model with SageMaker JumpStart

Deploying a model from JumpStart requires specifying the model’s unique ID and choosing an appropriate instance type. For large models, this will typically be a GPU-powered instance, such as from the ml.g5 or ml.p4d families. These instances are backed by NVIDIA GPUs, and their GPU memory is what determines whether a given model fits on a single host.

from sagemaker.jumpstart.model import JumpStartModel

# Define the model ID (placeholder; confirm it in the JumpStart catalog)
# and the desired instance type
model_id = "huggingface-llm-openai-community-gpt-oss-120b"
instance_type = "ml.g5.48xlarge"  # Choose an instance with sufficient GPU memory for the model

# Endpoint names are limited to 63 characters (alphanumerics and hyphens),
# so derive the name from the model ID and truncate it to fit.
endpoint_name = f"jumpstart-{model_id.replace('/', '-')}"[:63].rstrip("-")

# Create a JumpStartModel instance
model = JumpStartModel(model_id=model_id)

# Deploy the model to a SageMaker real-time endpoint
# This step can take 15-30 minutes or more depending on the model size
print(f"Deploying model {model_id} to a {instance_type} instance...")
predictor = model.deploy(
    initial_instance_count=1,
    instance_type=instance_type,
    endpoint_name=endpoint_name,
    # Some gated models also require accept_eula=True; check the model card.
)

print(f"Endpoint {predictor.endpoint_name} is now in service.")

Invoking the Endpoint for Inference

Once the endpoint is in the “InService” state, you can send inference requests. The payload is a JSON object containing the text inputs and various generation parameters like max_new_tokens, temperature (for creativity), and top_p. The structure of this payload can vary slightly between models, so it’s always best to consult the model’s documentation page in SageMaker JumpStart.
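Because the payload and response shapes differ between models, it can help to isolate them in two small helpers. The sketch below is one possible arrangement, assuming the inputs/parameters request layout described above and a response that is either a dict or a list of dicts with a generated_text key; the function names build_payload and extract_text are invented for this example.

```python
def build_payload(prompt: str, max_new_tokens: int = 512,
                  temperature: float = 0.6, top_p: float = 0.9) -> dict:
    """Assemble a text-generation request body in the inputs/parameters layout."""
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p must be in (0, 1]")
    if temperature < 0.0:
        raise ValueError("temperature must be non-negative")
    return {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "temperature": temperature,
            "top_p": top_p,
            "return_full_text": False,
        },
    }


def extract_text(response) -> str:
    """Pull the generated text out of the two response shapes assumed here."""
    if isinstance(response, list) and response:
        response = response[0]
    if isinstance(response, dict) and "generated_text" in response:
        return response["generated_text"]
    raise ValueError(f"Unrecognized response shape: {response!r}")


payload = build_payload("Summarize RAG in one sentence.", max_new_tokens=64)
print(payload["parameters"])
print(extract_text([{"generated_text": "RAG retrieves context before generating."}]))
```

If a model expects a different schema (for example a chat-style list of messages), only these two helpers need to change.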

# The predictor object was returned by the .deploy() call in the previous step.
# If you are in a new session, you can reconnect to an existing endpoint.
# A plain Predictor needs JSON (de)serializers in order to send a dict payload:
# from sagemaker.predictor import Predictor
# from sagemaker.serializers import JSONSerializer
# from sagemaker.deserializers import JSONDeserializer
# predictor = Predictor(
#     endpoint_name="your-endpoint-name-here",
#     serializer=JSONSerializer(),
#     deserializer=JSONDeserializer(),
# )

# Define the payload for the model
payload = {
    "inputs": "Explain the concept of Retrieval-Augmented Generation (RAG) in three clear steps.",
    "parameters": {
        "max_new_tokens": 512,
        "top_p": 0.9,
        "temperature": 0.6,
        "return_full_text": False
    }
}

# Invoke the endpoint
response = predictor.predict(payload)

# The response is often a list of dictionaries, so we parse it
# The exact structure may vary by model
generated_text = response[0]['generated_text']

print("--- Model Response ---")
print(generated_text)

# Don't forget to clean up the endpoint to avoid ongoing charges
# predictor.delete_endpoint()

Advanced Applications: Customizing Models for Your Use Case

Deploying a pre-trained model is just the beginning. The true power of the SageMaker ecosystem lies in its ability to adapt these models to specific business needs. This is where techniques like fine-tuning and RAG come into play, transforming a general-purpose model into a specialized expert.

Fine-Tuning on Custom Data with SageMaker

Fine-tuning involves further training a pre-trained model on a smaller, domain-specific dataset. This process adjusts the model’s weights to better understand specific jargon, adopt a particular conversational style, or excel at a niche task (e.g., summarizing legal documents). SageMaker simplifies this by providing managed training jobs that handle the underlying infrastructure. You simply need to provide your data in the correct format (often JSON Lines) in an S3 bucket and configure the training job.

The Hugging Face ecosystem regularly introduces techniques for parameter-efficient fine-tuning, like LoRA (Low-Rank Adaptation), many of which are supported directly through SageMaker’s training scripts.
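Malformed training data is a common cause of failed fine-tuning jobs, so it is worth validating the JSON Lines file before uploading it to S3. The sketch below writes and checks such a file locally. The instruction/context/response keys are a hypothetical schema chosen for illustration; the fields a given JumpStart model actually expects are listed on its model card.

```python
import json
import os
import tempfile

REQUIRED_KEYS = {"instruction", "context", "response"}  # hypothetical schema


def write_jsonl(records, path):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


def validate_jsonl(path):
    """Return a list of (line_number, problem) tuples; empty means the file passed."""
    problems = []
    with open(path, encoding="utf-8") as f:
        for line_number, line in enumerate(f, start=1):
            if not line.strip():
                problems.append((line_number, "blank line"))
                continue
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append((line_number, f"invalid JSON: {exc.msg}"))
                continue
            if not isinstance(record, dict):
                problems.append((line_number, "not a JSON object"))
                continue
            missing = REQUIRED_KEYS - record.keys()
            if missing:
                problems.append((line_number, f"missing keys: {sorted(missing)}"))
    return problems


records = [
    {"instruction": "Summarize the clause.", "context": "The lessee shall...", "response": "The tenant must..."},
    {"instruction": "Define 'indemnify'.", "context": "", "response": "To compensate for loss."},
]
path = os.path.join(tempfile.mkdtemp(), "train.jsonl")
write_jsonl(records, path)
print(validate_jsonl(path))
```

An empty list means every line parsed and carried the expected keys; the file can then be uploaded with the AWS CLI or boto3.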

from sagemaker.jumpstart.estimator import JumpStartEstimator

# Define the model ID for fine-tuning (using a smaller model for a tuning example).
# Confirm the exact ID in the JumpStart catalog before running.
model_id = "huggingface-llm-mistral-7b-instruct"

# S3 path to your training data (must be in the required format, e.g., JSONL)
training_data_s3_uri = "s3://your-bucket-name/path/to/your/training-data.jsonl"

# Create a JumpStartEstimator to launch the fine-tuning job
estimator = JumpStartEstimator(
    model_id=model_id,
    environment={"accept_eula": "true"},  # Some models require accepting a EULA
    instance_type="ml.g5.12xlarge"  # Choose an appropriate training instance
)

# Set hyperparameters (these are model-specific)
estimator.set_hyperparameters(
    instruction_tuned="True",
    epoch="3",
    max_input_length="1024"
)

# Launch the fine-tuning job
estimator.fit({"training": training_data_s3_uri})

# After the job is complete, you can deploy the fine-tuned model
# fine_tuned_predictor = estimator.deploy()

Building RAG Systems with SageMaker Endpoints

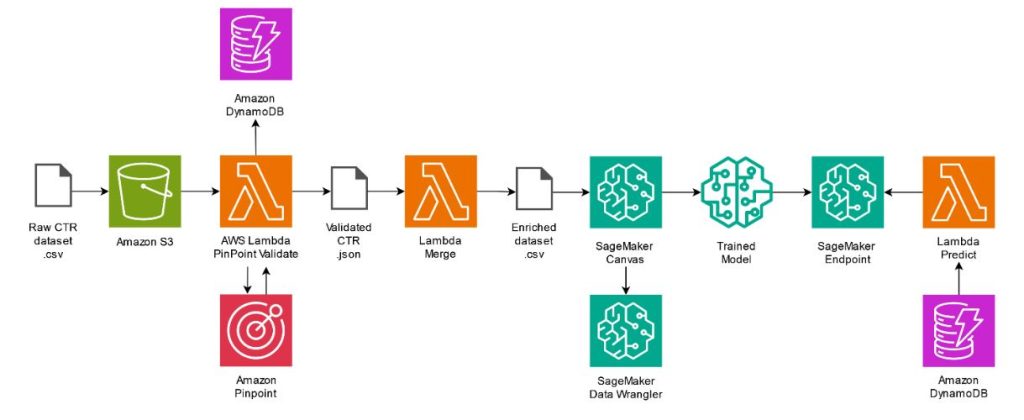

For tasks requiring access to vast, up-to-date, or proprietary information, fine-tuning can be inefficient, because the model must be retrained whenever the underlying data changes. This is where Retrieval-Augmented Generation (RAG) shines. A RAG system connects the deployed SageMaker model to an external knowledge base, typically a vector database. Frameworks such as LangChain and LlamaIndex provide ready-made building blocks for this pattern.

The workflow is as follows:

- A user’s query is converted into an embedding and used to search a vector database (like Pinecone, Milvus, or Weaviate) for relevant document chunks.

- These relevant chunks (the “context”) are retrieved.

- The original query and the retrieved context are combined into a new, augmented prompt.

- This augmented prompt is sent to your SageMaker model endpoint.

This approach allows the model to generate answers based on specific, verifiable information, reducing hallucinations and enabling it to use knowledge it was never trained on.
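The workflow above can be sketched end to end without any external services. In the example below, a bag-of-words count vector stands in for a real embedding model and a Python list stands in for the vector database; both are deliberate simplifications, and the function names and sample documents are invented for illustration. Only the final, commented-out call would touch a SageMaker endpoint.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list, k: int = 2) -> list:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, context_chunks: list) -> str:
    """Combine the retrieved context and the original query into one prompt."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


chunks = [
    "SageMaker endpoints serve models for real-time inference.",
    "Our refund policy allows returns within 30 days of purchase.",
    "Vector databases store embeddings for similarity search.",
]
query = "How many days do customers have to request a refund?"
top = retrieve(query, chunks, k=1)
prompt = build_prompt(query, top)
print(prompt)

# The augmented prompt would then be sent to the endpoint, for example:
# predictor.predict({"inputs": prompt, "parameters": {"max_new_tokens": 256}})
```

In a production system, embed would call an embedding model and retrieve would query the vector database, but the prompt-assembly step stays essentially the same.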

Production-Ready: Optimization, Cost Management, and MLOps

Running large models in production requires a focus on efficiency, cost, and maintainability. Other cloud AI platforms, such as Azure AI and Vertex AI, reflect the same push towards production-readiness.

Performance and Cost Optimization

To maximize ROI, consider several optimization strategies:

- Right-Sizing Instances: Don’t overprovision. Use SageMaker’s monitoring tools to find the smallest instance that meets your latency and throughput requirements.

- Model Quantization: Quantization reduces the numerical precision of the model’s weights (e.g., from 16-bit floating point to 8-bit integers), which can significantly decrease memory footprint and speed up inference, usually with only a small impact on accuracy.

- Optimized Inference Servers: For high-throughput scenarios, consider deploying models on specialized inference servers like NVIDIA’s Triton Inference Server or using frameworks like vLLM, which can be run within SageMaker. These tools offer advanced features like dynamic batching to improve GPU utilization.
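A quick back-of-the-envelope calculation shows why quantization matters for right-sizing. The sketch below estimates the memory needed for the weights alone as parameters × bits ÷ 8. It deliberately ignores the KV cache, activations, and framework overhead, so real requirements will be higher; the 7-billion-parameter figure is just an example size.

```python
def weight_memory_gb(num_parameters: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bits_per_weight / 8 / 1e9


for bits in (16, 8, 4):
    print(f"7B parameters at {bits}-bit: ~{weight_memory_gb(7e9, bits):.1f} GB")
```

Halving the bit width halves the weight footprint, which can be the difference between needing a multi-GPU instance and fitting on a single GPU.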

Integrating with the MLOps Ecosystem

A production model is a living asset that requires continuous management. Integrating your SageMaker workflows with MLOps tools is crucial for long-term success. Platforms such as MLflow and Weights & Biases provide robust experiment tracking.

You can log metrics, parameters, and model artifacts from your SageMaker training jobs directly to these platforms. This creates a transparent, reproducible record of every model you train, which is essential for governance, debugging, and collaboration. The code snippet below shows a conceptual way to add MLflow logging to a SageMaker training script.

# This is a conceptual example of what your training script (e.g., train.py) might contain
# when using SageMaker's script mode with MLflow.
import os
import argparse

import mlflow
import tensorflow as tf  # or torch


def main():
    # --- Argument Parsing ---
    parser = argparse.ArgumentParser()
    parser.add_argument("--learning-rate", type=float, default=0.001)
    parser.add_argument("--model-dir", type=str, default=os.environ.get("SM_MODEL_DIR"))
    args, _ = parser.parse_known_args()

    # --- MLflow Setup ---
    # Set tracking URI to your MLflow server
    mlflow.set_tracking_uri("http://your-mlflow-server:5000")
    mlflow.set_experiment("SageMaker-Fine-Tuning-Experiments")

    with mlflow.start_run():
        # Log hyperparameters
        mlflow.log_param("learning_rate", args.learning_rate)

        # --- Your Model Training Logic (TensorFlow, PyTorch, etc.) ---
        # model = create_model()
        # model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=args.learning_rate), ...)
        # history = model.fit(...)

        # Log metrics
        # accuracy = history.history['accuracy'][-1]
        # mlflow.log_metric("final_accuracy", accuracy)

        # --- Save the model ---
        # model.save(args.model_dir)

    print("Training complete and model saved.")


if __name__ == "__main__":
    main()

Conclusion: The Future of AI Development on AWS

The recent expansion of model availability on AWS SageMaker is a transformative development for AI builders. By providing access to powerful open-weight models with advanced reasoning capabilities, AWS is empowering developers to tackle a new class of complex problems. The combination of choice, flexibility, and a robust, end-to-end MLOps platform creates an environment ripe for innovation.

The key takeaways are clear: developers now have unparalleled freedom to select the best foundation model, a streamlined path to deploy it via SageMaker JumpStart, and powerful tools to customize it through fine-tuning or augment it with RAG. By embracing best practices for optimization and integrating with the wider MLOps ecosystem, teams can move from experimentation to production with greater speed and confidence. The next step is to explore the SageMaker model catalog, deploy a model, and begin building the next generation of intelligent applications today.