Building an AI-Powered News Dashboard with Streamlit and LangGraph

In today’s hyper-connected world, we are inundated with a constant stream of information. Keeping up with the latest developments, especially in fast-moving fields like technology and artificial intelligence, can feel like a full-time job. The challenge is not a lack of information, but a surplus of it. How can we efficiently filter, process, and understand the news that truly matters? The answer lies in building intelligent, personalized tools that work for us. This is where the power of modern AI frameworks and rapid application development tools comes into play.

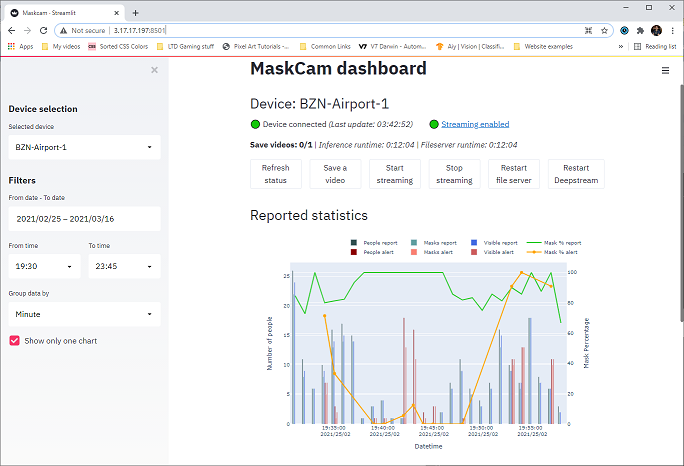

This article provides a comprehensive guide to building a sophisticated, AI-powered news aggregation and summarization dashboard. We will leverage the simplicity of Streamlit for the user interface, the power of Large Language Models (LLMs) from providers like Groq or OpenAI for intelligent processing, and the robustness of LangGraph for orchestrating complex, stateful workflows. By the end, you will understand the core components, implementation details, and advanced techniques required to create a dynamic application that not only fetches real-time news but also summarizes it and allows for conversational exploration. This project serves as a practical entry point into building agentic AI systems and showcases the synergy between cutting-edge tools in the modern data science stack.

The Core Components: Weaving Together the Tech Stack

Before diving into the code, it’s crucial to understand the role each technology plays in our application. Our news dashboard is not a monolithic piece of software but an ecosystem of specialized tools working in harmony.

1. Streamlit: The Interactive Frontend

Streamlit is an open-source Python library that makes it incredibly easy to create and share beautiful, custom web apps for machine learning and data science. Its core philosophy is to turn data scripts into shareable web apps in minutes. For our project, Streamlit will serve as the user-facing dashboard, providing interactive widgets like text inputs for queries, buttons to trigger actions, and containers to display the fetched news and AI-generated summaries.

2. News & Search API: The Data Source

To get real-time news, we need a reliable data source. A search API tailored for factual, up-to-date information is ideal. While you could use a generic news API, services like Tavily are optimized for AI agents, providing clean, concise results. The API will be responsible for taking a user’s query (e.g., “latest on NVIDIA AI news”) and returning a list of relevant articles and their URLs.

3. Large Language Model (LLM): The Brains of the Operation

The LLM is the core intelligence layer. We will use it for summarization. After fetching an article’s content, we send it to an LLM with a specific prompt, asking it to generate a concise summary. The rise of LLM API providers gives us many options, from the well-established OpenAI and Anthropic models to very fast inference engines like Groq, which serves open models such as Llama 3 and those from Mistral AI. For those interested in local development, tools like Ollama allow you to run powerful open-source models on your own hardware.

4. LangGraph: The Orchestration Engine

While simple applications can get by with direct API calls, more complex, multi-step tasks require a robust orchestration framework. This is where LangGraph, a library built on top of LangChain, shines. It allows us to define our workflow as a graph, where each node is a step (a function or another LLM call) and edges represent the transitions between steps. This is perfect for building agentic systems that can reason, plan, and execute tasks. It provides a more controllable and transparent alternative to some of the more “magical” agent loops found in the original LangChain library.

Section 1: Laying the Foundation – A Basic Streamlit News Fetcher

Let’s start by building the skeleton of our application. The first step is to create a simple Streamlit interface that can accept a user query, use the Tavily API to fetch relevant news articles, and display the results. This ensures our data pipeline is working before we add the complexity of AI.

First, ensure you have the necessary libraries installed:

pip install streamlit tavily-python python-dotenv

You will also need to get an API key from Tavily AI and store it in a .env file in your project’s root directory.

.env file:

TAVILY_API_KEY="your_tavily_api_key_here"

Now, let’s write the Python script. This code sets up a basic UI with a title, a text input box, and a button. When the button is clicked, it calls the Tavily client to perform a search and then displays the titles and URLs of the returned articles.

import streamlit as st
import os
from tavily import TavilyClient
from dotenv import load_dotenv

# Load environment variables from .env file
load_dotenv()

# Initialize the Tavily client
try:
    tavily_api_key = os.getenv("TAVILY_API_KEY")
    if not tavily_api_key:
        st.error("TAVILY_API_KEY not found. Please set it in your .env file.")
        st.stop()
    tavily = TavilyClient(api_key=tavily_api_key)
except Exception as e:
    st.error(f"Failed to initialize Tavily client: {e}")
    st.stop()

# --- Streamlit App UI ---
st.set_page_config(page_title="AI News Dashboard", layout="wide")
st.title("🤖 AI-Powered News Dashboard")

st.sidebar.header("Search Controls")
query = st.sidebar.text_input(
    "Enter a topic to search for news:",
    "Latest updates on JAX News"
)

if st.sidebar.button("Fetch News"):
    if query:
        st.write(f"### Top News Articles for: '{query}'")
        try:
            # Perform the search
            response = tavily.search(query=query, search_depth="basic", max_results=5)

            # Check if we have results
            if response and 'results' in response and response['results']:
                # Display the results
                for result in response['results']:
                    st.markdown(f"**[{result['title']}]({result['url']})**")
                    st.write(f"Source: {result['url']}")
                    st.divider()
            else:
                st.warning("No results found for your query.")
        except Exception as e:
            st.error(f"An error occurred while fetching news: {e}")
    else:
        st.warning("Please enter a search query.")

To run this app, save the code as app.py and execute streamlit run app.py in your terminal. You now have a functional, albeit simple, news fetching application. This serves as our solid base.
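The display loop above indexes result['title'] and result['url'] directly, which raises a KeyError if an entry lacks either field. A minimal sketch of a more defensive alternative follows. It assumes the search response is a dict with a 'results' list, as used in the script above; the helper name extract_articles is hypothetical and is not part of the Tavily client.

```python
from typing import Any, Dict, List


def extract_articles(response: Any) -> List[Dict[str, str]]:
    """Return only the entries that carry both a title and a URL.

    Hypothetical helper: it assumes the search response is a dict with a
    'results' list, as in the script above, and skips malformed entries.
    """
    if not isinstance(response, dict):
        return []
    articles = []
    for item in response.get("results") or []:
        if not isinstance(item, dict):
            continue
        title = item.get("title")
        url = item.get("url")
        if title and url:
            articles.append({"title": str(title), "url": str(url)})
    return articles


# Usage with a hand-written sample response (not real API output)
sample = {
    "results": [
        {"title": "Example headline", "url": "https://example.com/a"},
        {"title": "Missing URL"},
    ]
}
print(extract_articles(sample))
```

Keeping this step free of Streamlit calls also makes it easy to unit-test separately from the UI.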

Section 2: Integrating LLM for Intelligent Summarization

With our data pipeline in place, the next logical step is to add intelligence. Instead of just showing a list of links, we want to provide users with a quick, digestible summary of the news. We’ll use the Groq API for this, as it offers incredibly fast inference speeds, which is crucial for a responsive user experience.

First, install the Groq Python library:

pip install groq

Add your Groq API key to the .env file:

GROQ_API_KEY="your_groq_api_key_here"

Now, we’ll create a function that takes the content of an article and uses the Groq client to generate a summary. We’ll also need a way to scrape the content from the article URLs we fetched. The requests and BeautifulSoup4 libraries are perfect for this.

pip install requests beautifulsoup4

Let’s integrate this into our Streamlit app. We’ll add a function to scrape content and another to summarize it. We’ll also use Streamlit’s caching to avoid re-fetching and re-summarizing the same content on every interaction, which saves time and API costs.

import streamlit as st
import os
from tavily import TavilyClient
from groq import Groq
from dotenv import load_dotenv
import requests
from bs4 import BeautifulSoup

# Load environment variables
load_dotenv()

# --- API Clients Initialization ---
try:
    tavily = TavilyClient(api_key=os.getenv("TAVILY_API_KEY"))
    groq_client = Groq(api_key=os.getenv("GROQ_API_KEY"))
except Exception as e:
    st.error(f"API key initialization failed: {e}")
    st.stop()

# --- Helper Functions with Caching ---
@st.cache_data(ttl=3600)  # Cache for 1 hour
def scrape_article_content(url):
    """Scrapes the main text content from a given URL."""
    try:
        response = requests.get(url, timeout=10)
        response.raise_for_status()
        soup = BeautifulSoup(response.content, 'html.parser')
        # Find all paragraph tags and join their text
        paragraphs = soup.find_all('p')
        content = ' '.join([p.get_text() for p in paragraphs])
        return content[:4000]  # Truncate for LLM context window
    except Exception as e:
        return f"Error scraping content: {e}"

@st.cache_data(ttl=3600)
def get_ai_summary(content, query):
    """Generates a summary using Groq's Llama3 model."""
    # Skip the LLM call when scraping failed or returned no text
    if not content or content.startswith("Error scraping content"):
        return "Could not generate summary due to scraping error."
    try:
        chat_completion = groq_client.chat.completions.create(
            messages=[
                {
                    "role": "system",
                    "content": "You are an expert AI news analyst. Your task is to provide a concise, neutral summary of the provided article content. Focus on the key facts and outcomes. The user is researching the topic: " + query
                },
                {
                    "role": "user",
                    "content": content,
                }
            ],
            # Model IDs change over time; check Groq's current model list
            model="llama3-8b-8192",
        )
        return chat_completion.choices[0].message.content
    except Exception as e:
        return f"Error generating summary: {e}"

# --- Streamlit App UI ---
st.set_page_config(page_title="AI News Dashboard", layout="wide")
st.title("🤖 AI-Powered News Dashboard")

st.sidebar.header("Search Controls")
query = st.sidebar.text_input(
    "Enter a topic:",
    "Latest on Hugging Face Transformers News"
)

if st.sidebar.button("Fetch & Summarize News"):
    if query:
        st.write(f"### AI-Summarized News for: '{query}'")
        with st.spinner("Fetching and summarizing articles..."):
            try:
                response = tavily.search(query=query, search_depth="basic", max_results=3)
                if response and 'results' in response and response['results']:
                    summaries = []
                    for result in response['results']:
                        with st.container(border=True):
                            st.markdown(f"#### [{result['title']}]({result['url']})")
                            content = scrape_article_content(result['url'])
                            summary = get_ai_summary(content, query)
                            st.write(summary)
                            summaries.append(summary)
                    # Keep the summaries across reruns so other components can reuse them
                    st.session_state["summaries"] = summaries
                else:
                    st.warning("No results found.")
            except Exception as e:
                st.error(f"An error occurred: {e}")
    else:
        st.warning("Please enter a search query.")

Now, our application is significantly more useful. It fetches articles, scrapes their content, and provides an AI-generated summary, all within a clean interface. The use of st.cache_data is a critical best practice for performance and cost optimization, especially when dealing with external API calls. This pattern is essential for any serious Streamlit application, whether you’re working with Vertex AI or a simple REST API.
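To make the caching behaviour concrete, here is a conceptual sketch of time-based memoization in plain Python. It is not how st.cache_data is implemented; ttl_cache and its clock parameter are hypothetical names used only for illustration. One point it highlights: a cache of this kind stores whatever the function returns, so a helper that returns an error string instead of raising would have that string served from the cache until the entry expires.

```python
import time
from functools import wraps
from typing import Any, Callable, Dict, Tuple


def ttl_cache(ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
    """Memoize a function's return values for ttl_seconds (illustrative only)."""

    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        store: Dict[Tuple[Any, ...], Tuple[float, Any]] = {}

        @wraps(func)
        def wrapper(*args: Any) -> Any:
            now = clock()
            if args in store:
                saved_at, value = store[args]
                if now - saved_at < ttl_seconds:
                    return value  # Fresh entry: skip the expensive call
            value = func(*args)  # An exception here propagates and is not stored
            store[args] = (now, value)
            return value

        return wrapper

    return decorator


# Usage: a fake clock makes expiry easy to demonstrate
fake_time = [0.0]
calls = []


@ttl_cache(ttl_seconds=3600, clock=lambda: fake_time[0])
def fetch(url: str) -> str:
    calls.append(url)
    return f"content of {url}"


fetch("https://example.com")  # Miss: runs the function
fetch("https://example.com")  # Hit: served from the cache
fake_time[0] = 3601.0
fetch("https://example.com")  # Expired: runs the function again
print(len(calls))
```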

Section 3: Orchestrating Workflows with LangGraph

Our current application follows a linear flow: fetch -> scrape -> summarize. This is effective but rigid. What if we wanted to build a more complex, agent-like system? For example, what if we wanted the system to first search for news, then based on the initial results, decide to perform a follow-up search for more specific details before summarizing? This is where a stateful graph-based approach using LangGraph becomes invaluable.

LangGraph allows us to define our logic as a StateGraph. We define the structure of our application’s state and then create nodes, which are functions or other callables that modify this state. Edges connect these nodes, defining the flow of control. This makes it easy to build systems with cycles, conditional logic, and human-in-the-loop interventions.

Let’s create a simple graph that formalizes our existing workflow. This will serve as a foundation for adding more complex logic later.

from typing import TypedDict, List
from langgraph.graph import StateGraph, END

# Reuses tavily, scrape_article_content, and get_ai_summary from the previous script

# Define the state for our graph
class NewsGraphState(TypedDict):
    query: str
    articles: List[dict]
    summaries: List[str]

# Define the nodes (functions that operate on the state)
def fetch_articles(state: NewsGraphState):
    """Node to fetch articles using Tavily."""
    print("---FETCHING ARTICLES---")
    query = state['query']
    response = tavily.search(query=query, search_depth="basic", max_results=3)
    articles = response.get('results', [])
    return {"articles": articles}

def summarize_articles(state: NewsGraphState):
    """Node to scrape and summarize each article."""
    print("---SUMMARIZING ARTICLES---")
    articles = state['articles']
    summaries = []
    for article in articles:
        content = scrape_article_content(article['url'])
        summary = get_ai_summary(content, state['query'])
        summaries.append(summary)
    return {"summaries": summaries}

# --- Build the Graph ---
workflow = StateGraph(NewsGraphState)

# Add nodes to the graph
workflow.add_node("fetcher", fetch_articles)
workflow.add_node("summarizer", summarize_articles)

# Define the edges that connect the nodes
workflow.set_entry_point("fetcher")
workflow.add_edge("fetcher", "summarizer")
workflow.add_edge("summarizer", END)

# Compile the graph into a runnable app
app_graph = workflow.compile()

# To run this graph:
# final_state = app_graph.invoke({"query": "Latest on Meta AI News"})
# print(final_state['summaries'])

While this code isn’t directly integrated into the Streamlit UI yet (that would involve calling app_graph.invoke inside the button click handler), it demonstrates the powerful paradigm LangGraph introduces. We have explicitly defined our workflow’s state and its transitions. We could now easily add a “conditional edge” after the fetcher node that checks the quality of the articles and decides whether to go to the summarizer or back to the fetcher with a refined query. This level of control is essential for building robust AI agents and is a significant step up from simple sequential chains, a concept familiar to users of LlamaIndex or early LangChain.
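The conditional edge described above could look roughly like the sketch below. The routing function and the refine node are plain Python, so they can be tested without LangGraph installed. The names route_after_fetch, refine_query, MIN_ARTICLES, MAX_ATTEMPTS, and the attempts field are assumptions introduced for illustration. The wiring uses LangGraph's add_conditional_edges and is shown in comments because it depends on the workflow object defined earlier.

```python
from typing import List, TypedDict

MIN_ARTICLES = 2  # Assumed threshold for "enough" results
MAX_ATTEMPTS = 2  # Assumed cap so the graph cannot loop forever


class RoutedNewsState(TypedDict):
    query: str
    articles: List[dict]
    summaries: List[str]
    attempts: int  # How many times the query has been refined


def route_after_fetch(state: RoutedNewsState) -> str:
    """Decide the next step: summarize, or refine the query and fetch again."""
    enough = len(state["articles"]) >= MIN_ARTICLES
    out_of_retries = state["attempts"] >= MAX_ATTEMPTS
    return "summarize" if enough or out_of_retries else "refine"


def refine_query(state: RoutedNewsState) -> dict:
    """Node that broadens the query; a real app might ask the LLM to rewrite it."""
    return {
        "query": state["query"] + " latest developments",
        "attempts": state["attempts"] + 1,
    }


# Wiring sketch (replaces the direct fetcher -> summarizer edge):
# workflow.add_node("refiner", refine_query)
# workflow.add_conditional_edges(
#     "fetcher",
#     route_after_fetch,
#     {"summarize": "summarizer", "refine": "refiner"},
# )
# workflow.add_edge("refiner", "fetcher")

state: RoutedNewsState = {"query": "AI chips", "articles": [], "summaries": [], "attempts": 0}
print(route_after_fetch(state))
```

With this state shape, the initial dictionary passed to invoke would also need attempts set to 0. The retry cap matters: without it, a query that never returns enough articles would cycle between the fetcher and the refiner indefinitely.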

Section 4: Advanced Features, Best Practices, and Optimization

With a solid foundation, we can now explore advanced features and best practices to make our application more robust, interactive, and efficient. This includes adding a chat interface and considering the broader ecosystem of tools for deployment and monitoring.

Adding a Conversational Chat Interface

A static summary is good, but a conversational interface is better. It allows users to ask follow-up questions and dive deeper into the topics they find interesting. Streamlit’s st.chat_message and st.chat_input make this remarkably simple. We can use Streamlit’s st.session_state to maintain the conversation history.

Here’s a snippet demonstrating how to add a chat component. This could be used to “chat” with the context of the summarized articles.

# --- Chat Interface (add this to your main Streamlit script) ---
st.header("Chat with the News")

# Initialize chat history in session state
if "messages" not in st.session_state:
    st.session_state.messages = []

# Display prior chat messages
for message in st.session_state.messages:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])

# Accept user input
if prompt := st.chat_input("Ask a follow-up question about the articles..."):
    # Add user message to history
    st.session_state.messages.append({"role": "user", "content": prompt})
    with st.chat_message("user"):
        st.markdown(prompt)

    # Generate AI response (this is a simplified example)
    # In a real app, you'd pass the conversation history and summarized
    # articles as context to the LLM.
    with st.chat_message("assistant"):
        with st.spinner("Thinking..."):
            # Combine summaries into a single context. This assumes the summaries
            # were saved to st.session_state['summaries'] when the news was fetched;
            # otherwise the context is empty.
            news_context = "\n\n".join(st.session_state.get('summaries', []))
            response_completion = groq_client.chat.completions.create(
                messages=[
                    {"role": "system", "content": "You are a helpful AI assistant. Answer the user's question based on the following news context:\n\n" + news_context},
                    {"role": "user", "content": prompt}
                ],
                model="llama3-8b-8192",
            )
            response = response_completion.choices[0].message.content
            st.markdown(response)

    # Add assistant response to history
    st.session_state.messages.append({"role": "assistant", "content": response})
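The snippet above sends only the latest question to the model. Following the comment in that code, one way to include earlier turns is to assemble the message list from the stored history. This is a minimal sketch: build_chat_messages and max_turns are hypothetical names, and the message format mirrors the role/content dictionaries already used above.

```python
from typing import Dict, List


def build_chat_messages(
    history: List[Dict[str, str]],
    summaries: List[str],
    max_turns: int = 6,
) -> List[Dict[str, str]]:
    """Build a chat payload: system prompt with news context, then recent turns.

    history is expected to already end with the user's latest question,
    as st.session_state.messages does after the append in the snippet above.
    """
    news_context = "\n\n".join(summaries) or "No summaries are available yet."
    system_message = {
        "role": "system",
        "content": (
            "You are a helpful AI assistant. Answer the user's question "
            "based on the following news context:\n\n" + news_context
        ),
    }
    # Keep only the most recent messages to stay within the context window
    recent = history[-max_turns:] if max_turns > 0 else []
    return [system_message] + [
        {"role": m["role"], "content": m["content"]} for m in recent
    ]


history = [
    {"role": "user", "content": "What happened with chip exports?"},
    {"role": "assistant", "content": "New rules were announced."},
    {"role": "user", "content": "Who is affected?"},
]
messages = build_chat_messages(history, ["Summary A", "Summary B"], max_turns=2)
print([m["role"] for m in messages])
```

The returned list could be passed as the messages argument of the chat completion call in place of the two-element list, so the model sees the recent conversation as well as the news context.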

Best Practices and Optimization

- Aggressive Caching: As shown, use st.cache_data for functions that return data (like API calls) and st.cache_resource for functions that return resources (like database connections or loaded models). This is often the single most effective optimization for Streamlit apps.
- State Management: Use st.session_state to store variables that need to persist across user interactions and reruns, such as chat history or the results of a news search.
- Error Handling: Wrap all external API calls in try...except blocks to handle network errors, API key issues, or rate limits gracefully. Display user-friendly error messages using st.error().
- Cost and Performance: Be mindful of LLM context windows and API costs. Truncate scraped text before sending it for summarization. For production apps, consider using tools like LangSmith for tracing and debugging your LLM calls to identify performance bottlenecks and high-cost operations.
- Deployment: Once your app is ready, you can deploy it using Streamlit Community Cloud, Hugging Face Spaces, or cloud platforms like AWS SageMaker or Azure Machine Learning. For more complex, scalable backends, consider services like Modal or Replicate.
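For the error-handling point, transient failures such as rate limits are often worth retrying before showing an error to the user. The sketch below is one generic way to do that with exponential backoff. The helper call_with_retries and its parameters are hypothetical, and which exceptions are actually safe to retry depends on the client library in use.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(
    func: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func, retrying on the given exceptions with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return func()
        except retry_on:
            if attempt == attempts - 1:
                raise  # Out of retries: let the caller handle the error
            sleep(base_delay * (2 ** attempt))  # Delay doubles after each failure
    raise RuntimeError("unreachable")


# Usage: a function that fails twice, then succeeds (sleep is stubbed out)
outcomes = [ConnectionError("busy"), ConnectionError("busy"), "ok"]
delays = []


def flaky() -> str:
    outcome = outcomes.pop(0)
    if isinstance(outcome, Exception):
        raise outcome
    return outcome


result = call_with_retries(flaky, attempts=3, retry_on=(ConnectionError,), sleep=delays.append)
print(result, delays)
```

In the dashboard, a call such as the search or the summary request could be wrapped in a lambda and passed as func, with the surrounding try...except still reporting the final failure through st.error().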

Exploring the AI Ecosystem

The tools we’ve used are just one slice of a vast and rapidly evolving ecosystem. For example, instead of simple summarization, you could implement Retrieval-Augmented Generation (RAG) by storing article content in a vector database like Pinecone, Chroma, or Milvus. This would allow your chat agent to find the most relevant snippets of text to answer user questions. For model training and experimentation, platforms like Weights & Biases and MLflow are widely used. The choice of UI is also flexible; while Streamlit is excellent for data-centric apps, you might explore Gradio or Chainlit for more chat-focused interfaces.
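To illustrate only the retrieval step of RAG, here is a toy sketch that ranks text snippets against a question using bag-of-words cosine similarity. It is a deliberately crude stand-in: a real setup would use an embedding model and one of the vector databases mentioned above, and the function names here are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def _vectorize(text: str) -> Counter:
    """Turn text into word counts (a crude stand-in for embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_snippets(question: str, snippets: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k snippets most similar to the question, best first."""
    q = _vectorize(question)
    scored = [(_cosine(q, _vectorize(s)), s) for s in snippets]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


snippets = [
    "The company released a new GPU for data centers.",
    "Regulators proposed new rules for AI model audits.",
    "A football club signed a new striker.",
]
best = top_snippets("Which GPU was released?", snippets, k=1)
print(best[0][1])
```

The selected snippets would then replace the joined summaries as the context passed to the chat model.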

Conclusion: Your Gateway to Building Intelligent Apps

We have journeyed from a simple idea—a news dashboard—to a sophisticated, AI-powered application. We started with a basic Streamlit UI for fetching data, then integrated a powerful LLM for intelligent summarization, and finally explored how LangGraph can orchestrate complex, stateful workflows for building robust AI agents. Along the way, we implemented best practices like caching and state management and added an interactive chat interface to enhance user engagement.

The true power of this project lies not just in the final application but in the foundational skills it teaches. The patterns you’ve learned here (connecting a frontend, a data source, an LLM, and an orchestration engine) are the building blocks for a wide array of modern AI applications. Whether you are tracking PyTorch news, analyzing market trends, or building a research assistant, these principles remain the same. The next step is to experiment. Swap out the LLM, try a different search API, add more complex logic to your LangGraph workflow, or deploy your creation to the cloud. The world of AI-powered application development is at your fingertips.