DeepSpeed Ulysses: A Breakthrough in Training Extreme Long-Sequence AI Models

Introduction: Breaking the Sequence Length Barrier in Transformer Models

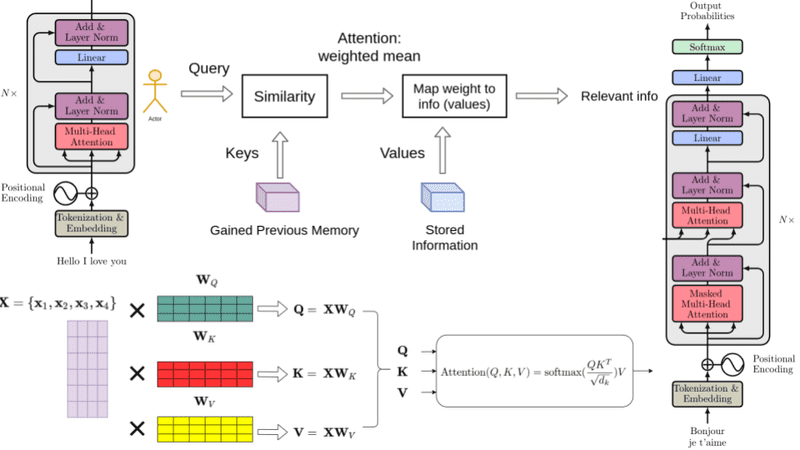

The world of artificial intelligence is in a constant race to build larger, more capable models. A key frontier in this race is the context window—the amount of information a model can process at once. From summarizing entire research papers to analyzing complex codebases, models with long context windows are unlocking unprecedented capabilities. However, the computational heart of modern AI, the Transformer architecture, has a fundamental limitation: the self-attention mechanism’s memory and compute requirements scale quadratically with the sequence length. This O(N²) complexity has been a formidable barrier, making it prohibitively expensive to train models on sequences stretching into millions of tokens.

Microsoft's DeepSpeed team has introduced a solution to this very problem: DeepSpeed Ulysses. This methodology rethinks parallelism to train Transformer models efficiently on extremely long sequences. By partitioning the sequence dimension itself across multiple GPUs, Ulysses achieves near-linear scaling and substantially reduces the memory footprint per device. This article provides a technical deep dive into DeepSpeed Ulysses, covering its core concepts, implementation details, practical code examples, and its impact on long-context training in the PyTorch and Hugging Face Transformers ecosystems.

Section 1: The Quadratic Bottleneck and the Ulysses Paradigm

To fully appreciate the innovation of Ulysses, we must first understand the problem it solves. The self-attention mechanism in a Transformer calculates an attention score for every pair of tokens in a sequence. For a sequence of length ‘S’ and a hidden dimension ‘H’, the memory required for the attention score matrix alone is proportional to S², and the computation (FLOPs) is proportional to S²H. As ‘S’ grows from a few thousand to hundreds of thousands or even millions, this quadratic scaling becomes an insurmountable memory and compute wall.

Understanding the Memory Wall

The primary obstacles are:

- Activation Memory: Intermediate results (activations) stored for the backward pass grow with the sequence length: linearly for most layers, and quadratically for the attention scores when they are materialized.

- KV Cache: During inference, the Key and Value projections for all previous tokens are cached. This cache grows linearly with the sequence length and becomes a bottleneck in long-context generation.

- Attention Score Matrix: The (S x S) matrix of attention scores can be too large to fit in a single GPU’s memory.

Let’s illustrate this with a simple PyTorch code example. This snippet estimates the memory needed for one layer’s Q, K, and V projections and its attention score matrix across all heads, highlighting the quadratic growth.

```python
import torch


def estimate_attention_memory(sequence_length, num_heads, d_head, batch_size, dtype=torch.float32):
    """
    Estimates the memory required for the Q, K, V, and Attention Score matrices.
    """
    # dtype.itemsize (bytes per element) is available in recent PyTorch releases
    # Memory for Q, K, V projections
    qkv_memory = 3 * batch_size * num_heads * sequence_length * d_head * dtype.itemsize

    # Memory for the attention score matrix (the main bottleneck)
    attention_scores_memory = batch_size * num_heads * sequence_length * sequence_length * dtype.itemsize

    total_memory_gb = (qkv_memory + attention_scores_memory) / (1024 ** 3)

    print(f"Sequence Length: {sequence_length}")
    print(f"Attention Scores Memory: {attention_scores_memory / (1024 ** 3):.4f} GB")
    print(f"Total Estimated Memory: {total_memory_gb:.4f} GB")
    print("-" * 30)


# Example parameters for a single layer
params = {
    "num_heads": 12,
    "d_head": 64,
    "batch_size": 1,
    "dtype": torch.bfloat16,  # Using bfloat16 to save memory
}

estimate_attention_memory(sequence_length=4096, **params)
estimate_attention_memory(sequence_length=32768, **params)
estimate_attention_memory(sequence_length=131072, **params)
```

Running the code above shows that while a 4K sequence is manageable (about 0.375 GB of attention scores), the score matrix grows to 24 GB at 32K tokens and 384 GB at 131K tokens, making it infeasible for a single device. This is the exact problem that traditional data, tensor, and pipeline parallelism do not fully solve for extreme sequence lengths.

Introducing Sequence Parallelism

DeepSpeed Ulysses introduces a form of parallelism called **Sequence Parallelism**. Instead of partitioning the model’s weights (Tensor Parallelism) or splitting layers across devices (Pipeline Parallelism), Ulysses partitions the input sequence itself. If you have a sequence of length ‘S’ and ‘N’ GPUs, each GPU holds only a chunk of size S/N for every layer outside attention. For attention, an all-to-all exchange regroups the data so that each GPU sees the full sequence but only 1/N of the attention heads. Each GPU’s share of the attention work and memory therefore shrinks by a factor of N, which lets the sequence length grow with the number of GPUs.
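To make the scaling concrete, here is a minimal sketch of the per-GPU attention-score footprint when the attention work is split N ways. It assumes the score matrix is fully materialized (no fused attention kernel), 2-byte elements, and a head count divisible by N. The function name `per_gpu_attention_scores_gb` is made up for this illustration and is not part of DeepSpeed.

```python
def per_gpu_attention_scores_gb(seq_len, num_heads, sp_size, bytes_per_elem=2, batch_size=1):
    """Attention-score memory per GPU (GiB) when the work is split across sp_size GPUs.

    Hypothetical helper: assumes materialized scores and num_heads % sp_size == 0.
    """
    if num_heads % sp_size != 0:
        raise ValueError("num_heads must be divisible by sp_size")
    heads_per_gpu = num_heads // sp_size
    score_bytes = batch_size * heads_per_gpu * seq_len * seq_len * bytes_per_elem
    return score_bytes / (1024 ** 3)


# Same 12-head, 32K-token layer as in the earlier estimate, split 1, 2, and 4 ways.
for sp_size in (1, 2, 4):
    gb = per_gpu_attention_scores_gb(seq_len=32768, num_heads=12, sp_size=sp_size)
    print(f"sp_size={sp_size}: {gb:.1f} GB of attention scores per GPU")
```

The per-GPU figure falls in direct proportion to the number of GPUs, but it is still quadratic in the sequence length, which is why sequence parallelism is usually paired with a memory-efficient attention kernel.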

Section 2: The Mechanics of Ulysses: All-to-All Communication

The magic behind Ulysses lies in its highly optimized use of the all-to-all communication collective. This operation allows each GPU to exchange data with every other GPU simultaneously. Ulysses employs a two-stage communication strategy to distribute and gather the necessary tensors for the attention calculation, so that no single device has to compute attention for every head over the full sequence.

The Two-Step Attention Calculation

- Forward Pass (Q, K, V Projections): Initially, each of the ‘N’ GPUs holds a local sequence chunk of size S/N. They compute their local Query (Q), Key (K), and Value (V) projections. Now, to compute attention, every Q chunk needs to interact with every K and V chunk.

- First `all-to-all`: The Q, K, and V tensors are rearranged across the GPUs. After this operation, each GPU holds the full sequence of Q, K, and V, but only for 1/N of the attention heads. This gives each GPU everything it needs to compute complete attention for its own heads.

- Local Attention: Each GPU computes attention for its subset of heads over the full sequence. Because heads are independent of one another, no further communication is needed at this step.

- Second `all-to-all`: The resulting attention outputs are exchanged back so that each GPU again holds all heads for its original S/N chunk, restoring the sequence-parallel layout.

- Backward Pass: A similar, reversed `all-to-all` pattern is used during the backward pass to route gradients correctly across the sequence dimension.
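The exchange pattern can be checked on a single machine. The sketch below simulates N devices with NumPy lists, following the published Ulysses design in which Q, K, and V are all exchanged so that each device ends up with the full sequence for a subset of heads. It is a toy model under stated assumptions (no causal mask, no real communication), and the helper names `seq_to_head_shards` and `head_to_seq_shards` are invented for this example.

```python
import numpy as np


def attention(q, k, v):
    """Plain softmax attention per head; q, k, v have shape [seq, heads, d_head]."""
    scores = np.einsum("qhd,khd->hqk", q, k) / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return np.einsum("hqk,khd->qhd", weights, v)


def seq_to_head_shards(chunks):
    """Simulated first all-to-all: sequence-sharded -> head-sharded.

    chunks[i] is device i's [S/N, H, d] tensor. Device j ends up with the
    full sequence for head group j, shape [S, H/N, d].
    """
    n = len(chunks)
    return [np.concatenate([np.split(c, n, axis=1)[j] for c in chunks], axis=0) for j in range(n)]


def head_to_seq_shards(shards):
    """Simulated second all-to-all: head-sharded -> sequence-sharded."""
    n = len(shards)
    return [np.concatenate([np.split(s, n, axis=0)[i] for s in shards], axis=1) for i in range(n)]


rng = np.random.default_rng(0)
n_dev, seq_len, n_heads, d_head = 4, 16, 8, 4
q, k, v = (rng.standard_normal((seq_len, n_heads, d_head)) for _ in range(3))

# Each simulated device starts with an S/N slice of the sequence.
q_loc, k_loc, v_loc = (np.split(t, n_dev, axis=0) for t in (q, k, v))

# First exchange, local attention on each device's heads, second exchange.
q_h, k_h, v_h = (seq_to_head_shards(t) for t in (q_loc, k_loc, v_loc))
out_h = [attention(q_h[j], k_h[j], v_h[j]) for j in range(n_dev)]
out_loc = head_to_seq_shards(out_h)

# The reassembled result should match attention computed on one device.
matches_reference = np.allclose(np.concatenate(out_loc, axis=0), attention(q, k, v))
print("matches single-device attention:", matches_reference)
```

In a real job the two helper functions would be replaced by a collective such as `torch.distributed.all_to_all_single`, but the data movement they model is the same.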

This approach allows Ulysses to integrate with other parallelism techniques. For instance, you can have a system where 4 GPUs are used for tensor parallelism within a node, and 8 nodes use sequence parallelism, enabling massive scale. This composability is a hallmark of the DeepSpeed ecosystem.
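As a rough illustration of that 4-by-8 layout, the sketch below builds the rank lists for each group. The rank numbering (`rank = node * gpus_per_node + local_gpu`) and the function name `build_groups` are assumptions made for this example; a real launcher may number ranks differently.

```python
def build_groups(num_nodes=8, gpus_per_node=4):
    """Return (tp_groups, sp_groups) as lists of global ranks.

    Hypothetical layout: rank = node * gpus_per_node + local_gpu.
    """
    # Tensor-parallel groups: all GPUs that share a node.
    tp_groups = [
        [node * gpus_per_node + g for g in range(gpus_per_node)]
        for node in range(num_nodes)
    ]
    # Sequence-parallel groups: the same local GPU index on every node.
    sp_groups = [
        [node * gpus_per_node + g for node in range(num_nodes)]
        for g in range(gpus_per_node)
    ]
    return tp_groups, sp_groups


tp_groups, sp_groups = build_groups()
print("first TP group:", tp_groups[0])
print("first SP group:", sp_groups[0])
```

In PyTorch, each rank list could then be turned into a communicator with `torch.distributed.new_group(ranks=...)`. Every rank belongs to exactly one tensor-parallel group and one sequence-parallel group.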

Enabling Ulysses in DeepSpeed

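One of the most appealing aspects of DeepSpeed is its ease of use. Most settings live in the DeepSpeed configuration file, so researchers and engineers can switch optimizations on without restructuring their training code, a principle also championed by tools like Hugging Face Transformers and Fast.ai. The official Ulysses integration also involves wrapping the model's attention module with `DistributedAttention` from `deepspeed.sequence.layer`, so the key names shown here should be checked against the documentation for your DeepSpeed version.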

Here is an example of a DeepSpeed JSON configuration file enabling sequence parallelism.

```json
{
  "train_batch_size": 8,
  "train_micro_batch_size_per_gpu": 1,
  "steps_per_print": 10,
  "optimizer": {
    "type": "AdamW",
    "params": {
      "lr": 0.0001,
      "betas": [
        0.9,
        0.95
      ],
      "eps": 1e-8,
      "weight_decay": 0.01
    }
  },
  "fp16": {
    "enabled": false
  },
  "bf16": {
    "enabled": true
  },
  "zero_optimization": {
    "stage": 1
  },
  "sequence_parallelism": {
    "enabled": true,
    "size": 8
  }
}
```

In this configuration, `"sequence_parallelism": {"enabled": true, "size": 8}` tells DeepSpeed to partition the sequence dimension across 8 GPUs. The rest of the configuration can define other optimizations like ZeRO, mixed-precision training, etc.
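Before launching a job, it can help to sanity-check that the sequence-parallel size fits the cluster and the model. The following is a small sketch, not a DeepSpeed utility: it reads the `sequence_parallelism` block exactly as written in this article's example, and it assumes the Ulysses requirement that the number of attention heads be divisible by the sequence-parallel size. The function name `sequence_parallel_layout` is hypothetical.

```python
import json


def sequence_parallel_layout(config_text, world_size, num_heads):
    """Derive the data-parallel degree implied by a config like the one above."""
    cfg = json.loads(config_text)
    sp_cfg = cfg.get("sequence_parallelism", {})
    sp_size = sp_cfg.get("size", 1) if sp_cfg.get("enabled", False) else 1

    if world_size % sp_size != 0:
        raise ValueError(f"world_size {world_size} is not divisible by sp_size {sp_size}")
    if num_heads % sp_size != 0:
        raise ValueError(f"num_heads {num_heads} is not divisible by sp_size {sp_size}")

    # GPUs not used for sequence parallelism form data-parallel replicas.
    return {"sp_size": sp_size, "dp_size": world_size // sp_size}


example = '{"sequence_parallelism": {"enabled": true, "size": 8}}'
layout = sequence_parallel_layout(example, world_size=16, num_heads=32)
print(layout)
```

With 16 GPUs and a sequence-parallel size of 8, the remaining factor of 2 becomes the data-parallel degree.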

Section 3: Practical Implementation and Model Integration

Integrating DeepSpeed Ulysses into a standard PyTorch training script is straightforward. The core logic of the model definition remains unchanged. The magic happens in the `deepspeed.initialize` call, which wraps the model and optimizer and applies the parallelism strategies defined in the configuration file.

This design philosophy ensures that cutting-edge system optimizations are accessible to model developers without requiring them to become experts in distributed computing. This aligns with the goals of many MLOps platforms like Weights & Biases, Comet ML, and cloud services like AWS SageMaker and Vertex AI, which aim to simplify the machine learning lifecycle.

Below is a Python script that outlines the typical structure for initializing a model with DeepSpeed, which would now support Ulysses if the provided JSON config is used.

```python
import torch
import deepspeed
from transformers import GPT2Config, GPT2LMHeadModel

# 1. Add DeepSpeed command-line arguments
# deepspeed.add_config_arguments(parser)
# args = parser.parse_args()
# For demonstration, we will assume args are parsed and contain the local_rank
# and deepspeed_config path.


# Dummy args for illustration
class DummyArgs:
    local_rank = 0
    deepspeed_config = 'ds_config_ulysses.json'


args = DummyArgs()

# 2. Initialize distributed backend
deepspeed.init_distributed()

# 3. Define the model (e.g., from Hugging Face Transformers)
# GPT2LMHeadModel is used so that the forward pass can return a loss.
config = GPT2Config()  # Use a standard model config
model = GPT2LMHeadModel(config)

# 4. Define optimizer and other training components
# Parameters that require gradients
params = filter(lambda p: p.requires_grad, model.parameters())
optimizer = torch.optim.AdamW(params, lr=0.0001)

# 5. Initialize the DeepSpeed engine
# The config path is read from args.deepspeed_config. deepspeed.initialize
# expects the config from a single source, so config= is not passed as well.
model_engine, optimizer, _, _ = deepspeed.initialize(
    args=args,
    model=model,
    optimizer=optimizer,
)

# 6. Training loop
# The training loop uses the model_engine object
# for forward, backward, and step calls.
# The input data (input_ids, attention_mask) would be loaded here.
# For a sequence of length S, each of the 'sequence_parallelism.size'
# GPUs works on an S/N slice of the sequence.

# Example forward pass
# (a stock GPT2Config only supports config.n_positions tokens; a sequence
# of 262144 tokens needs a model configured for long context)
# input_ids = torch.randint(0, config.vocab_size, (1, 262144)).to(model_engine.device)
# output = model_engine(input_ids, labels=input_ids)
# model_engine.backward(output.loss)
# model_engine.step()
```

This example shows that the user’s interaction with the model remains high-level. The `model_engine` object handles the underlying communication, partitioning, and synchronization. This is a significant step forward for usability, echoing the progress seen in other frameworks like JAX and TensorFlow in simplifying distributed training.
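To picture what partitioning the input means in practice, here is a minimal sketch of slicing one long token sequence into per-rank chunks. Whether this slicing happens in the data loader, in user code, or inside the framework depends on the integration; the function name `shard_sequence` and the contiguous-chunk layout are assumptions for illustration.

```python
def shard_sequence(token_ids, sp_rank, sp_size):
    """Return the contiguous slice of token_ids owned by sp_rank (hypothetical helper)."""
    if len(token_ids) % sp_size != 0:
        raise ValueError("sequence length must be divisible by sp_size (pad first)")
    chunk = len(token_ids) // sp_size
    return token_ids[sp_rank * chunk:(sp_rank + 1) * chunk]


tokens = list(range(16))  # stand-in for a tokenized document
shards = [shard_sequence(tokens, rank, sp_size=4) for rank in range(4)]
for rank, shard in enumerate(shards):
    print(f"rank {rank}: {shard}")
```

Concatenating the shards in rank order recovers the original sequence, which is the invariant the second all-to-all relies on.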

Section 4: Best Practices, Performance, and Future Impact

While DeepSpeed Ulysses is a powerful tool, applying it effectively requires understanding its trade-offs and best use cases. Here are some key considerations and best practices.

When to Use Ulysses

- Extreme Sequence Lengths: Ulysses shines when sequence length is the primary memory bottleneck. If your model fits comfortably in memory with tensor/pipeline parallelism, the communication overhead of Ulysses might not be worth it. It is designed for scenarios that are otherwise impossible.

- Sufficient Network Bandwidth: The performance of Ulysses is heavily dependent on the interconnect bandwidth between GPUs (e.g., NVIDIA’s NVLink or InfiniBand). The `all-to-all` collective is communication-intensive, so high-speed networking is crucial for good scaling.

- Large-Scale Clusters: The benefits are most pronounced on large GPU clusters where you can dedicate a significant number of devices to sequence parallelism.
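A back-of-the-envelope estimate shows why bandwidth matters. The sketch below counts the bytes each GPU sends per Transformer layer in the forward pass, assuming four all-to-all exchanges (Q, K, V, and the attention output) and that each GPU keeps 1/N of its buffer and sends the rest. The bandwidth figures are placeholders, not measurements of any particular interconnect, and both function names are made up for this example.

```python
def ulysses_bytes_sent_per_gpu(seq_len, hidden_size, sp_size, bytes_per_elem=2, batch_size=1):
    """Bytes one GPU sends per layer (forward only) across four all-to-all exchanges."""
    local_elems = batch_size * (seq_len // sp_size) * hidden_size  # one local tensor
    sent_fraction = (sp_size - 1) / sp_size  # 1/N of each buffer stays on the GPU
    return 4 * local_elems * bytes_per_elem * sent_fraction


def transfer_time_ms(num_bytes, bandwidth_gb_per_s):
    """Idealized transfer time, ignoring latency and overlap with compute."""
    return num_bytes / (bandwidth_gb_per_s * 1e9) * 1e3


sent = ulysses_bytes_sent_per_gpu(seq_len=131072, hidden_size=4096, sp_size=8)
print(f"bytes sent per GPU per layer: {sent / 1e6:.1f} MB")
for bw in (50, 400):  # hypothetical per-GPU bandwidths in GB/s
    print(f"  at {bw} GB/s: {transfer_time_ms(sent, bw):.2f} ms")
```

Multiplying by the number of layers, and roughly doubling for the backward pass, gives a first-order sense of how much time a slow interconnect would add to each step.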

Performance Tuning and Optimization

- Balance Parallelism: Finding the right mix of sequence, tensor, and pipeline parallelism is key. For a given model and hardware setup, you may need to experiment to find the optimal configuration that balances memory usage, compute efficiency, and communication overhead.

- Monitor and Profile: Use tools like the DeepSpeed profiler or NVIDIA’s Nsight to monitor GPU utilization, memory usage, and communication patterns. This can help identify bottlenecks and validate that sequence parallelism is working as expected. Logging these metrics to an experiment tracker such as MLflow or ClearML makes runs easier to compare.

- Overlap Communication and Computation: DeepSpeed automatically attempts to overlap the all-to-all communication with computation (e.g., the MLP layers of the Transformer), effectively hiding the communication latency. Understanding this can help in structuring models for maximum efficiency.
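When balancing parallelism, a useful first step is simply listing which combinations are valid for a given cluster. The sketch below enumerates (sequence, tensor, data) parallel degrees whose product equals the world size. It leaves out pipeline parallelism for brevity and assumes the attention heads must divide evenly across the combined sequence and tensor degrees; `candidate_layouts` is a hypothetical helper, not a DeepSpeed function.

```python
def candidate_layouts(world_size, num_heads, max_tp=8):
    """List (sp, tp, dp) triples with sp * tp * dp == world_size.

    Assumed constraint: num_heads % (sp * tp) == 0. Pipeline parallelism is omitted.
    """
    layouts = []
    for sp in range(1, world_size + 1):
        if world_size % sp:
            continue
        remaining = world_size // sp
        for tp in range(1, min(max_tp, remaining) + 1):
            if remaining % tp or num_heads % (sp * tp):
                continue
            layouts.append((sp, tp, remaining // tp))
    return layouts


layouts = candidate_layouts(world_size=16, num_heads=12)
for sp, tp, dp in layouts:
    print(f"sequence={sp} tensor={tp} data={dp}")
```

Each surviving layout can then be profiled for memory use and step time; the enumeration only rules out configurations that cannot run at all.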

Impact on the AI Ecosystem

The implications of efficiently training on million-plus token sequences are vast. This technology is a critical enabler for the next generation of long-context AI models. Potential applications include:

- Genomic Data Analysis: Processing entire DNA sequences to understand complex genetic interactions.

- Long-Form Content Understanding: Models that can read and reason about entire books, legal contracts, or financial reports in a single pass.

- High-Resolution Multimodal AI: Processing extremely high-resolution images or long video clips by treating them as long sequences of patches.

- Advanced Code Generation: Analyzing an entire codebase to understand dependencies and generate highly contextual code.

Conclusion: Charting a Course for a Long-Context Future

DeepSpeed Ulysses represents a significant leap forward in solving one of the most persistent challenges in scaling Transformer models. By introducing an efficient and composable sequence parallelism strategy, it spreads the quadratic cost of attention across devices and lowers the per-GPU memory wall, paving the way for models with context windows that were previously out of reach. Its integration into the DeepSpeed ecosystem ensures that this capability is accessible to the broader AI community, accelerating research and development.

As the industry continues to push the boundaries of what’s possible, driven by innovations from organizations like OpenAI, Anthropic, and Mistral AI, foundational system optimizations like Ulysses are the engines that power this progress. For developers and researchers working on the cutting edge of large-scale AI, understanding and leveraging this technology is no longer just an option—it’s a necessity for navigating the exciting, long-context future of artificial intelligence. The next step is to explore the official DeepSpeed repositories, experiment with this new parallelism dimension, and start building the next generation of truly long-sequence AI models.