FAISS in 2024: A Deep Dive into High-Performance Vector Search for Modern AI

Introduction: The Engine Behind Modern Similarity Search

In the rapidly evolving landscape of artificial intelligence, the ability to search through vast amounts of unstructured data based on semantic meaning rather than just keywords has become a cornerstone technology. This is the world of vector search, and at its heart lies a powerful, battle-tested library: FAISS (Facebook AI Similarity Search). Developed by Meta AI, FAISS is not just another tool; it’s a high-performance C++ library with Python bindings designed for efficient similarity search and clustering of dense vectors. As new embedding models and LLMs keep arriving, foundational libraries like FAISS only become more critical.

With the meteoric rise of Large Language Models (LLMs) and the widespread adoption of Retrieval-Augmented Generation (RAG) systems, the demand for fast, scalable, and memory-efficient vector search has exploded. Whether you’re building a semantic search engine, a recommendation system, or a sophisticated RAG pipeline powered by models from OpenAI, Cohere, or Mistral AI, the underlying challenge remains the same: how to find the “closest” items in a dataset of millions or even billions of vectors in milliseconds. FAISS provides the fundamental building blocks to solve this problem, offering a suite of algorithms that balance speed, memory usage, and accuracy. This article will provide a comprehensive technical deep dive into FAISS, exploring its core concepts, practical implementations, advanced techniques, and its place in the modern AI ecosystem.

Section 1: Core Concepts and Your First FAISS Index

Before diving into complex implementations, it’s crucial to understand the fundamental concepts of FAISS. The central idea is to work with high-dimensional vectors (or embeddings) that represent data like text, images, or audio. The goal is to find the nearest neighbors for a given query vector based on a distance metric, most commonly L2 (Euclidean) distance or inner product.
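The two metrics are closely related. For vectors normalized to unit length, ||q - x||^2 = ||q||^2 + ||x||^2 - 2<q, x> = 2 - 2<q, x>, so ranking by smallest squared L2 distance and ranking by largest inner product (which then equals cosine similarity) give the same neighbors. The NumPy sketch below is an illustration rather than FAISS code; in FAISS the usual equivalent is calling `faiss.normalize_L2` on your vectors and using `IndexFlatIP`.

```python
import numpy as np

rng = np.random.default_rng(0)
db = rng.standard_normal((1000, 32))
query = rng.standard_normal(32)

# Normalize every vector to unit length (what faiss.normalize_L2 does in place).
db /= np.linalg.norm(db, axis=1, keepdims=True)
query /= np.linalg.norm(query)

# Squared L2 distance: smaller means closer.
sq_l2 = ((db - query) ** 2).sum(axis=1)
# Inner product (cosine similarity for unit vectors): larger means closer.
ip = db @ query

order_l2 = np.argsort(sq_l2)
order_ip = np.argsort(-ip)

# For unit vectors ||q - x||^2 == 2 - 2 * <q, x>, so both rankings agree.
print(np.allclose(sq_l2, 2 - 2 * ip))
print(np.array_equal(order_l2[:10], order_ip[:10]))
```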

The FAISS `Index`

The main object in FAISS is the Index. An index is a data structure that stores the vectors and provides a method to search for the nearest neighbors. FAISS offers a wide variety of index types, each with different performance characteristics.

The simplest index is IndexFlatL2. It performs an exhaustive, brute-force search by comparing the query vector to every vector stored in the index. While this guarantees exact results, it’s computationally expensive and doesn’t scale to large datasets. However, it serves as an excellent baseline for understanding the core mechanics and for validating the accuracy of more complex, approximate nearest neighbor (ANN) indexes.
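Conceptually, a flat L2 index computes the distance from each query to every stored vector and keeps the k smallest. The NumPy sketch below mirrors that behavior for illustration only (FAISS itself uses optimized BLAS and SIMD kernels); like `IndexFlatL2`, it returns squared L2 distances and row ids sorted nearest first. The function name `brute_force_knn` is hypothetical.

```python
import numpy as np


def brute_force_knn(db: np.ndarray, queries: np.ndarray, k: int):
    """Exhaustive k-NN search returning (squared L2 distances, row ids)."""
    # Expand ||q - x||^2 = ||q||^2 - 2<q, x> + ||x||^2 so most of the work
    # is one matrix product, which is how BLAS-based implementations do it.
    q_norms = (queries ** 2).sum(axis=1, keepdims=True)   # shape (nq, 1)
    db_norms = (db ** 2).sum(axis=1)                       # shape (nb,)
    dists = q_norms - 2.0 * queries @ db.T + db_norms      # shape (nq, nb)
    np.maximum(dists, 0.0, out=dists)                      # clip tiny negative rounding noise

    # argpartition finds the k smallest per row without a full sort...
    idx = np.argpartition(dists, k - 1, axis=1)[:, :k]
    part = np.take_along_axis(dists, idx, axis=1)
    # ...then only those k are sorted so results come back nearest first.
    order = np.argsort(part, axis=1)
    return np.take_along_axis(part, order, axis=1), np.take_along_axis(idx, order, axis=1)


rng = np.random.default_rng(1234)
db = rng.random((10_000, 64))
queries = rng.random((5, 64))
D, I = brute_force_knn(db, queries, k=4)
print(I)
print(D)
```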

Practical Example: Building a Brute-Force Index

Let’s build our first index using Python. You’ll need to install FAISS (CPU or GPU version) and NumPy. For the CPU version, you can simply run `pip install faiss-cpu numpy`.

This example demonstrates the basic workflow: create a dataset, build an index, add the data, and perform a search.

```python
import numpy as np
import faiss

# 1. Set up the dimensions and data
d = 64       # Vector dimension
nb = 100000  # Database size
nq = 100     # Number of query vectors
np.random.seed(1234)

# 2. Generate some random data to index (FAISS expects float32)
db_vectors = np.random.random((nb, d)).astype('float32')
db_vectors[:, 0] += np.arange(nb) / 1000.

# 3. Generate some random query vectors
query_vectors = np.random.random((nq, d)).astype('float32')
query_vectors[:, 0] += np.arange(nq) / 1000.

# 4. Build the IndexFlatL2 index
# This index uses the L2 (Euclidean) distance metric
index = faiss.IndexFlatL2(d)
print(f"Is the index trained? {index.is_trained}")  # True, as Flat indexes don't need training
index.add(db_vectors)
print(f"Total vectors in the index: {index.ntotal}")

# 5. Perform the search
k = 4  # We want to find the 4 nearest neighbors
distances, indices = index.search(query_vectors, k)

# 6. Print the results for the first 5 queries
print("\nSearch Results (Indices):")
print(indices[:5])
print("\nSearch Results (squared L2 distances):")
print(distances[:5])
```

In this code, `indices` contains the IDs (row numbers) of the nearest vectors in our original `db_vectors`, ordered from nearest to farthest, and `distances` contains the corresponding squared L2 distances (`IndexFlatL2` skips the square root; apply `np.sqrt(distances)` if you need true Euclidean distances). This simple yet powerful API is the foundation for all interactions with FAISS.

Section 2: Building a Practical Search System with Text Embeddings

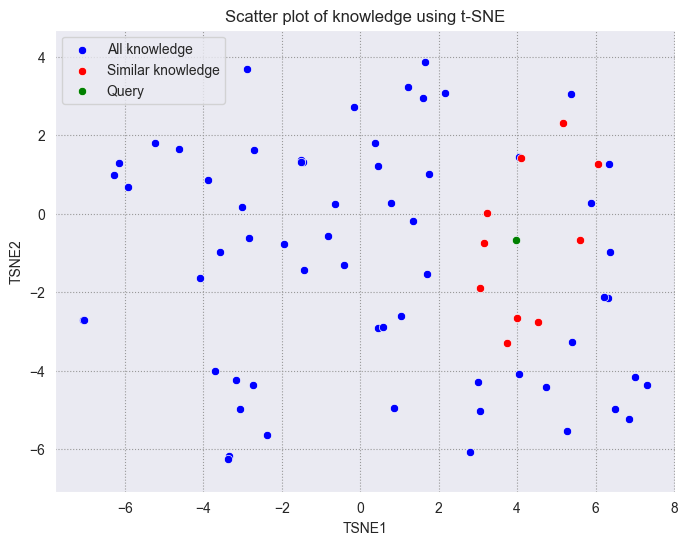

Random vectors are great for learning, but real-world applications involve meaningful data. A common use case is semantic search for text documents. This requires converting text into vector embeddings using a pre-trained model, often one downloaded from the Hugging Face Hub. The sentence-transformers library is well suited to this task.

Moving Beyond Brute-Force: The `IndexIVFFlat`

For larger datasets, `IndexFlatL2` becomes too slow. We need an Approximate Nearest Neighbor (ANN) search algorithm. A popular choice in FAISS is `IndexIVFFlat`. This index works by partitioning the vector space into cells (using a k-means clustering algorithm). During a search, it first identifies the few cells closest to the query vector and then performs an exhaustive search only within those cells. This drastically reduces the number of comparisons needed.

This partitioning process requires a “training” step where the index learns the boundaries of these cells from a representative sample of the data. This is a key difference from the `IndexFlatL2` index.
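To make the two phases concrete, here is a simplified IVF index in plain NumPy: `train` runs a few iterations of k-means (Lloyd’s algorithm) to learn `nlist` centroids, `add` files each vector under its nearest centroid, and `search` scans only the `nprobe` closest lists. This is an illustrative sketch with a hypothetical `ToyIVF` class, not FAISS’s implementation, and for brevity `search` takes a single query vector rather than a batch.

```python
import numpy as np


class ToyIVF:
    """Minimal inverted-file index: k-means cells plus exhaustive scan of nprobe cells."""

    def __init__(self, d: int, nlist: int, seed: int = 0):
        self.d, self.nlist = d, nlist
        self.rng = np.random.default_rng(seed)
        self.centroids = None
        self.lists = [[] for _ in range(nlist)]  # per-cell lists of (id, vector)
        self.ntotal = 0

    @staticmethod
    def _sq_dists(a, b):
        # Pairwise squared L2 distances between rows of a and rows of b.
        return ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=2)

    @property
    def is_trained(self):
        return self.centroids is not None

    def train(self, x, n_iter: int = 10):
        # Initialize centroids from random training points, then run Lloyd's k-means.
        self.centroids = x[self.rng.choice(len(x), self.nlist, replace=False)].copy()
        for _ in range(n_iter):
            assign = self._sq_dists(x, self.centroids).argmin(axis=1)
            for c in range(self.nlist):
                members = x[assign == c]
                if len(members):  # an empty cell keeps its previous centroid
                    self.centroids[c] = members.mean(axis=0)

    def add(self, x):
        # Each vector goes into the inverted list of its nearest centroid.
        cells = self._sq_dists(x, self.centroids).argmin(axis=1)
        for vec, c in zip(x, cells):
            self.lists[c].append((self.ntotal, vec))
            self.ntotal += 1

    def search(self, q, k: int, nprobe: int = 1):
        # 1) Pick the nprobe cells whose centroids are closest to the query.
        probe = np.argsort(self._sq_dists(q[None, :], self.centroids)[0])[:nprobe]
        # 2) Scan only the vectors stored in those cells.
        cand = [(i, v) for c in probe for i, v in self.lists[c]]
        if not cand:
            return np.array([]), np.array([], dtype=int)
        ids = np.array([i for i, _ in cand])
        vecs = np.stack([v for _, v in cand])
        d = ((vecs - q) ** 2).sum(axis=1)
        top = np.argsort(d)[:k]
        return d[top], ids[top]


rng = np.random.default_rng(42)
data = rng.random((2000, 16))
ivf = ToyIVF(d=16, nlist=20)
ivf.train(data)
ivf.add(data)
for nprobe in (1, 5, 20):
    D, I = ivf.search(data[123], k=3, nprobe=nprobe)
    print(nprobe, I, D)
```

With `nprobe` equal to `nlist`, every list is scanned and the result matches a brute-force search; smaller values trade recall for fewer distance computations.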

Practical Example: Semantic Text Search

Let’s build a system that can find semantically similar sentences. First, install the required libraries: `pip install faiss-cpu sentence-transformers`.

```python
import numpy as np
import faiss
from sentence_transformers import SentenceTransformer

# 1. Sample documents for our knowledge base
documents = [
    "The Eiffel Tower is a wrought-iron lattice tower on the Champ de Mars in Paris.",
    "The Great Wall of China is a series of fortifications made of stone, brick, and other materials.",
    "Photosynthesis is a process used by plants to convert light energy into chemical energy.",
    "The Colosseum is an oval amphitheatre in the centre of the city of Rome, Italy.",
    "Paris is the capital and most populous city of France.",
    "The Amazon rainforest is a moist broadleaf tropical rainforest in the Amazon biome.",
    "Machine learning is a field of inquiry devoted to understanding and building methods that 'learn'."
]

# 2. Load a pre-trained model to generate embeddings
# all-MiniLM-L6-v2 maps each sentence to a 384-dimensional vector
model = SentenceTransformer('all-MiniLM-L6-v2')
doc_embeddings = model.encode(documents).astype('float32')
d = doc_embeddings.shape[1]  # Get embedding dimension

# 3. Build the IndexIVFFlat index
nlist = 3  # Number of cells/clusters; kept tiny because we only have 7 documents
quantizer = faiss.IndexFlatL2(d)  # Coarse quantizer: holds the cell centroids and assigns vectors to cells
index = faiss.IndexIVFFlat(quantizer, d, nlist)

# 4. Train the index on the document embeddings (k-means learns the centroids)
# With so few points, FAISS may warn that it wants more training data per centroid
print(f"Is the index trained? {index.is_trained}")
index.train(doc_embeddings)
print(f"Is the index trained after training? {index.is_trained}")

# 5. Add the embeddings to the index
index.add(doc_embeddings)
print(f"Total vectors in the index: {index.ntotal}")

# 6. Perform a search
query_text = "What is the famous landmark in France?"
query_embedding = model.encode([query_text]).astype('float32')

# By default, IVF searches only 1 cell (nprobe = 1). Let's increase it.
index.nprobe = 2  # Search the 2 closest cells
k = 3  # Find the top 3 results
distances, indices = index.search(query_embedding, k)

# 7. Print the results
print(f"\nQuery: '{query_text}'")
print("Top 3 most similar documents:")
for i, idx in enumerate(indices[0]):
    if idx == -1:  # FAISS pads with -1 when the probed cells hold fewer than k vectors
        continue
    print(f"{i+1}: {documents[idx]} (Distance: {distances[0][i]:.4f})")
```

This example showcases the retrieval half of a RAG-style pipeline, albeit at toy scale. The `nprobe` parameter is crucial for tuning the trade-off between search speed and accuracy: a higher `nprobe` value means more cells are searched, leading to better accuracy but slower searches. Frameworks such as LangChain and LlamaIndex often use FAISS or similar vector stores under the hood to manage this retrieval logic.
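A back-of-the-envelope model makes the `nprobe` trade-off concrete. Assuming the N vectors are spread evenly over `nlist` cells (real k-means cells are rarely perfectly balanced), one query costs about `nlist` centroid comparisons plus `nprobe * N / nlist` vector comparisons. The helper below is a hypothetical illustration of that arithmetic, not a FAISS API.

```python
def ivf_distance_computations(n: int, nlist: int, nprobe: int) -> int:
    """Approximate distance computations per query for an IVF index.

    Assumes perfectly balanced cells: the query is compared with all nlist
    centroids, then nprobe cells of n / nlist vectors each are scanned.
    """
    if not 1 <= nprobe <= nlist:
        raise ValueError("nprobe must be between 1 and nlist")
    return nlist + nprobe * n // nlist


n = 1_000_000
for nprobe in (1, 16, 64):
    cost = ivf_distance_computations(n, nlist=4000, nprobe=nprobe)
    print(f"nprobe={nprobe:>3}: ~{cost:,} comparisons vs {n:,} for a flat index")
```

Under this model, raising `nprobe` from 1 to 64 multiplies the per-query work by roughly 4.7x, which is why it is the first knob to sweep.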

Section 3: Advanced Techniques for Massive Scale

When you scale to billions of vectors, even `IndexIVFFlat` can become a bottleneck due to high memory consumption. FAISS provides advanced techniques like quantization to compress vectors and graph-based indexes for ultra-fast search.

Memory Optimization with Product Quantization (PQ)

Product Quantization is a technique that compresses vectors to reduce their memory footprint significantly. It splits each vector into `m` sub-vectors, learns a small codebook of centroids for each sub-space with k-means, and then stores each sub-vector as the short code (index) of its nearest centroid. This dramatically reduces the amount of RAM required to hold the index. The `IndexIVFPQ` combines the IVF partitioning scheme with PQ for a highly scalable and memory-efficient index.
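The NumPy sketch below shows the mechanics with `m = 8` sub-quantizers of 8 bits each, the same setting used in this section’s `IndexIVFPQ` example. The helper names `train_pq`, `pq_encode`, and `pq_decode` are hypothetical, and FAISS’s own PQ adds optimizations not shown here (for instance, `IndexIVFPQ` encodes residuals relative to the coarse centroid). Each 32-dimensional float32 vector (128 bytes) becomes 8 one-byte codes; for the 128-dimensional vectors in the example, that is 512 bytes down to 8.

```python
import numpy as np


def _nearest(x, centroids):
    # Index of the closest centroid for every row of x (squared L2).
    d = (x ** 2).sum(1)[:, None] - 2.0 * x @ centroids.T + (centroids ** 2).sum(1)
    return d.argmin(axis=1)


def train_pq(x, m: int, nbits: int = 8, n_iter: int = 8, seed: int = 0):
    """Learn one codebook of 2**nbits centroids per sub-vector with k-means."""
    n, d = x.shape
    assert d % m == 0, "d must be divisible by m"
    assert nbits <= 8, "this sketch stores codes as uint8"
    ksub, dsub = 2 ** nbits, d // m
    rng = np.random.default_rng(seed)
    codebooks = np.empty((m, ksub, dsub))
    for j in range(m):
        sub = x[:, j * dsub:(j + 1) * dsub]
        cents = sub[rng.choice(n, ksub, replace=False)].copy()
        for _ in range(n_iter):
            assign = _nearest(sub, cents)
            for c in range(ksub):
                members = sub[assign == c]
                if len(members):
                    cents[c] = members.mean(axis=0)
        codebooks[j] = cents
    return codebooks


def pq_encode(x, codebooks):
    # Replace each sub-vector by the id of its nearest centroid (one byte each).
    m, _, dsub = codebooks.shape
    codes = np.empty((len(x), m), dtype=np.uint8)
    for j in range(m):
        codes[:, j] = _nearest(x[:, j * dsub:(j + 1) * dsub], codebooks[j])
    return codes


def pq_decode(codes, codebooks):
    # Approximate reconstruction: concatenate the selected centroids.
    return np.hstack([codebooks[j][codes[:, j]] for j in range(codes.shape[1])])


rng = np.random.default_rng(0)
x = rng.random((4000, 32))
codebooks = train_pq(x, m=8, nbits=8)
codes = pq_encode(x, codebooks)
x_hat = pq_decode(codes, codebooks)

print(codes.shape, codes.dtype)                          # (4000, 8) uint8
print(x.astype(np.float32)[0].nbytes, codes[0].nbytes)   # 128 8
print(np.mean((x - x_hat) ** 2))                         # reconstruction error
```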

Speed Optimization with HNSW

Hierarchical Navigable Small World (HNSW) is a state-of-the-art graph-based indexing algorithm. It builds a multi-layered graph in which links connect similar vectors, with sparse upper layers for long jumps and a dense bottom layer that contains every vector. A search starts at an entry point in the top layer, greedily moves toward the query, and descends layer by layer until it reaches the bottom. `IndexHNSWFlat` offers extremely fast and accurate search, but at the cost of higher memory usage and longer build times compared to IVF-based indexes.
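The routine HNSW runs inside each layer is a best-first (beam) search over a proximity graph. The sketch below implements that routine on a single-layer k-nearest-neighbor graph in NumPy; real HNSW adds the layer hierarchy, neighbor-selection heuristics, and incremental insertion, none of which are shown. The `ef` argument plays the role of the `efSearch` parameter on FAISS’s HNSW indexes (how many candidates are kept), and the helper names `build_knn_graph` and `beam_search` are hypothetical.

```python
import heapq

import numpy as np


def build_knn_graph(data: np.ndarray, k: int) -> dict:
    """Undirected k-NN graph (brute force): node id -> set of neighbor ids."""
    sq = (data ** 2).sum(axis=1)
    d = sq[:, None] - 2.0 * data @ data.T + sq[None, :]
    np.fill_diagonal(d, np.inf)  # no self-loops
    graph = {i: set() for i in range(len(data))}
    for i, row in enumerate(np.argsort(d, axis=1)[:, :k]):
        for j in row:
            graph[i].add(int(j))
            graph[int(j)].add(i)  # store every edge in both directions
    return graph


def beam_search(graph: dict, data: np.ndarray, query: np.ndarray, entry: int, ef: int):
    """Best-first search that keeps the ef closest nodes seen so far."""
    def dist(i: int) -> float:
        return float(((data[i] - query) ** 2).sum())

    d0 = dist(entry)
    visited = {entry}
    candidates = [(d0, entry)]  # min-heap: closest unexpanded node on top
    results = [(-d0, entry)]    # max-heap via negation: worst kept result on top
    while candidates:
        d_c, c = heapq.heappop(candidates)
        if d_c > -results[0][0]:
            break  # the best remaining candidate cannot improve the results
        for nb in graph[c]:
            if nb in visited:
                continue
            visited.add(nb)
            d_nb = dist(nb)
            if len(results) < ef or d_nb < -results[0][0]:
                heapq.heappush(candidates, (d_nb, nb))
                heapq.heappush(results, (-d_nb, nb))
                if len(results) > ef:
                    heapq.heappop(results)  # evict the current worst result
    # (squared distance, node id) pairs, nearest first
    return sorted((-neg_d, i) for neg_d, i in results)


rng = np.random.default_rng(7)
data = rng.random((500, 8))
graph = build_knn_graph(data, k=8)
query = rng.random(8)
hits = beam_search(graph, data, query, entry=0, ef=16)
print(hits[:3])
```

A larger `ef` explores more of the graph before stopping, which raises recall at the cost of more distance computations, the same trade-off `nprobe` controls for IVF.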

Practical Example: Using `IndexIVFPQ` for Memory Savings

Let’s return to random data, this time at a larger scale, and use `IndexIVFPQ`. The key changes are in the index definition, where we specify the PQ parameters.

```python
import numpy as np
import faiss

# 1. Set up parameters
d = 128      # Vector dimension
nb = 200000  # Database size
np.random.seed(1234)
db_vectors = np.random.random((nb, d)).astype('float32')

# 2. Define the index parameters for IndexIVFPQ
nlist = 100  # Number of clusters
m = 8        # Number of sub-quantizers (d must be divisible by m)
bits = 8     # Bits per sub-quantizer code (8 bits = 256 centroids)

# 3. Build the quantizer and the IVFPQ index
quantizer = faiss.IndexFlatL2(d)
index = faiss.IndexIVFPQ(quantizer, d, nlist, m, bits)

# 4. Train the index
# It's recommended to train on a subset of the data for large datasets
training_vectors = db_vectors[np.random.choice(db_vectors.shape[0], 20000, replace=False)]
index.train(training_vectors)

# 5. Add the full dataset to the index
index.add(db_vectors)
print(f"Total vectors in the index: {index.ntotal}")

# 6. Perform a search
k = 5
query_vector = np.random.random((1, d)).astype('float32')
index.nprobe = 10  # Search 10 closest clusters
distances, indices = index.search(query_vector, k)

print("\nSearch Results (Indices):")
print(indices)
print("\nSearch Results (Distances):")
print(distances)

# Note: IndexIVFPQ stores only the compressed PQ codes, not the original vectors.
# The returned distances are approximate squared L2 distances estimated from those codes.
# For exact distances, re-rank the returned indices against your own copy of the
# original vectors (here, db_vectors).
```

With a much larger `nlist`, this same recipe is what lets billion-scale datasets fit on a single machine: at `m = 8` and 8 bits per code, each vector is stored as an 8-byte code (plus its ID) instead of 512 bytes of float32 data. For even higher performance, FAISS has excellent GPU support, using CUDA to accelerate training and search, often by an order of magnitude or more. This makes it a natural fit for high-throughput serving stacks such as NVIDIA Triton Inference Server.
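Because `IndexIVFPQ` keeps only compressed codes, a common pattern is to request more candidates than needed and re-rank them with exact distances computed from a separately stored copy of the original vectors. FAISS packages this idea as `IndexRefineFlat`; the NumPy function below (`rerank_exact`, a hypothetical helper) only shows the logic, and it skips the `-1` ids FAISS uses to pad results when fewer than k candidates are found.

```python
import numpy as np


def rerank_exact(db_vectors, query, candidate_ids, k):
    """Re-rank approximate-search candidates by exact squared L2 distance.

    candidate_ids is one row of ids returned by an approximate search;
    -1 entries (padding for "no result") are ignored.
    """
    ids = np.asarray(candidate_ids)
    ids = ids[ids >= 0]
    exact = ((db_vectors[ids] - query) ** 2).sum(axis=1)
    order = np.argsort(exact)[:k]
    return exact[order], ids[order]


# Toy data: 6 stored vectors and candidates in the order an approximate index might return them.
db = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [3.0, 3.0], [0.5, 0.5], [5.0, 5.0]])
query = np.array([0.4, 0.4])
candidates = [3, 1, 4, 0, -1]  # approximate order, padded with -1
dists, ids = rerank_exact(db, query, candidates, k=3)
print(ids)  # [4 0 1]
print(dists)
```

In the `IndexIVFPQ` example, you would search with a larger k than you finally need, then pass `indices[0]` and `db_vectors` to a function like this.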

Section 4: Best Practices, Optimization, and the Ecosystem

Choosing and tuning the right FAISS index is critical for building a successful application. Here are some best practices and considerations.

Choosing the Right Index

- `IndexFlatL2`: Use for small datasets (<1M vectors) where perfect accuracy is required and search speed is not a major concern. It’s also the gold standard for benchmarking other indexes.

- `IndexIVFFlat`: A great starting point for larger datasets. It provides a good balance between speed and accuracy. Rule of thumb for `nlist`: between `4*sqrt(N)` and `16*sqrt(N)`, where N is the number of vectors.

- `IndexIVFPQ`: The go-to choice for massive datasets where memory is a constraint. It offers significant memory reduction with a manageable trade-off in accuracy.

- `IndexHNSWFlat`: Best for applications requiring very low latency search where memory is less of a concern. It often provides the best speed/accuracy trade-off for many real-time use cases.
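The `nlist` rule of thumb is easy to turn into numbers. The hypothetical helper below returns the `4*sqrt(N)` to `16*sqrt(N)` range, along with the minimum training-set size that avoids FAISS’s k-means warning, assuming its default of 39 points per centroid.

```python
import math


def ivf_nlist_range(n_vectors: int, min_points_per_centroid: int = 39) -> dict:
    """Rule-of-thumb nlist range for an IVF index over n_vectors vectors."""
    root = math.sqrt(n_vectors)
    low, high = round(4 * root), round(16 * root)
    return {
        "nlist_low": low,
        "nlist_high": high,
        # k-means wants at least this many training points for each choice.
        "train_min_for_low": low * min_points_per_centroid,
        "train_min_for_high": high * min_points_per_centroid,
    }


for n in (100_000, 1_000_000, 100_000_000):
    print(f"{n:>11,}: {ivf_nlist_range(n)}")
```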

Tuning for Performance

- `nprobe` (for IVF indexes): This is the most important parameter to tune. Start with a small value (e.g., 1) and increase it to see how accuracy and latency change.

- Training Data: Always train your index on a representative sample of your data. Using too little or unrepresentative data will lead to poor partitioning and inaccurate results.

- Hardware: Utilize GPUs for a large speedup in both training and searching, especially with large batches of queries. The performance difference can be 10-100x over a CPU implementation, depending on the index type and workload.
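Tuning `nprobe` (or `efSearch` for HNSW) only makes sense against a measurement. A common one is recall@k: the fraction of the true k nearest neighbors, taken from an exact `IndexFlatL2` search over the same queries, that the approximate index also returns. The helper below is a hypothetical sketch that compares two `(nq, k)` id arrays, such as the `indices` returned by `index.search`; sweep `nprobe`, record recall and latency, and pick the smallest value that meets your target.

```python
import numpy as np


def recall_at_k(approx_ids: np.ndarray, exact_ids: np.ndarray) -> float:
    """Mean fraction of the exact top-k neighbors present in the approximate top-k."""
    assert approx_ids.shape == exact_ids.shape
    hits = sum(
        len((set(a.tolist()) - {-1}) & set(e.tolist()))  # -1 is padding, never a hit
        for a, e in zip(approx_ids, exact_ids)
    )
    return hits / exact_ids.size


exact = np.array([[1, 2, 3], [4, 5, 6]])
approx = np.array([[1, 3, 9], [4, 5, -1]])
print(recall_at_k(approx, exact))
```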

FAISS in the Broader AI Ecosystem

While FAISS is a library, it often competes with and complements managed vector databases. It’s important to understand the distinction:

- FAISS: A library that gives you maximum control. You are responsible for infrastructure, scaling, data persistence, and building an API. It’s ideal for custom solutions and research.

- Vector Databases (Milvus, Pinecone, Weaviate, Chroma, Qdrant): These are full-fledged services or self-hostable databases that handle sharding, replication, filtering, and provide a user-friendly API. These platforms are rapidly adding features for enterprise-grade MLOps, and they often use FAISS or similar algorithms internally.

In a modern MLOps pipeline, you might use tools like MLflow or Weights & Biases to track experiments for embedding models developed with PyTorch or TensorFlow. The resulting FAISS index can then be deployed as part of a microservice on cloud platforms like AWS SageMaker, Azure Machine Learning, or Google Cloud Vertex AI. The flexibility of FAISS allows it to be a critical component in many different architectural patterns.

Conclusion: The Enduring Relevance of FAISS

FAISS remains a cornerstone of high-performance vector search, providing the speed, flexibility, and scalability required by today’s most demanding AI applications. From powering semantic search to being the retrieval engine in complex RAG systems, its battle-tested algorithms offer excellent performance, especially when paired with GPU acceleration. While the rise of managed vector databases has simplified deployment for many, FAISS continues to be the library of choice for developers who need fine-grained control, maximum performance, and the ability to innovate at the core of their search technology.

As a next step, dive into the official documentation to explore the full range of index types and their parameters. Try building a small application using a web framework like FastAPI and a UI tool like Streamlit to see your vector search system in action. By mastering FAISS, you are equipping yourself with a fundamental skill that is more relevant than ever in the age of generative AI.