FAISS News: Mastering High-Performance Vector Search for Modern AI Applications

The artificial intelligence landscape is undergoing a seismic shift, driven by the unprecedented capabilities of large language models (LLMs). From chatbots to complex reasoning engines, these models are transforming industries. However, their power is often constrained by the data they were trained on. This is where Retrieval-Augmented Generation (RAG) has emerged as a game-changing technique, and at its heart lies a critical component: high-speed vector search. While many managed solutions have entered the market, the foundational library that continues to power countless high-performance systems is Meta AI’s FAISS (Facebook AI Similarity Search).

FAISS isn’t just a tool; it’s a low-level, high-performance toolkit that gives developers unparalleled control over how they store and search through billions of vectors. As the latest Meta AI News continues to highlight advancements in model capabilities, the need for efficient retrieval systems becomes even more pronounced. This article dives deep into the world of FAISS, exploring its core concepts, practical implementations for modern AI applications, advanced optimization techniques, and its place within the broader ecosystem of tools and frameworks discussed in today’s PyTorch News and TensorFlow News. Whether you’re building a sophisticated RAG pipeline with tools from the latest LangChain News or designing a custom recommendation engine, mastering FAISS is a crucial skill for any serious AI developer.

Understanding the Core Components of FAISS

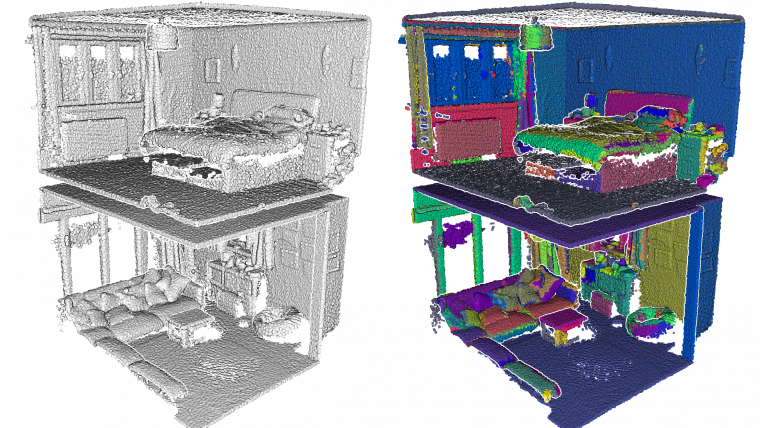

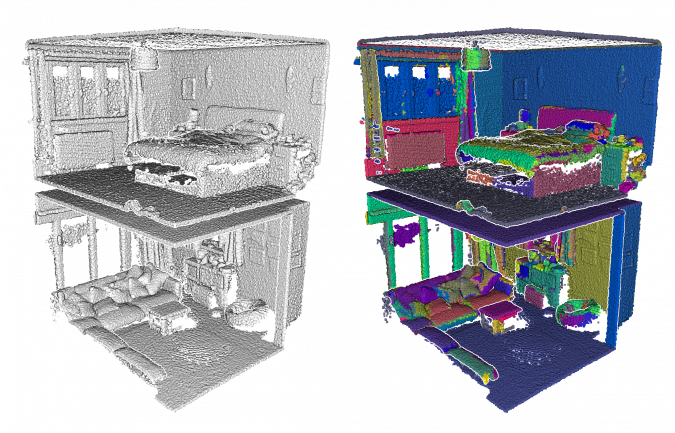

At its core, FAISS is designed to solve one problem exceptionally well: finding the nearest neighbors to a query vector within a massive collection of other vectors. These vectors, often called embeddings, are numerical representations of data—be it text, images, or audio—generated by deep learning models. The effectiveness of any vector search system hinges on its index, the data structure that organizes these vectors for efficient retrieval.

From Raw Vectors to Searchable Indexes

The simplest way to find the nearest neighbors is to compare a query vector to every other vector in the dataset, a method known as brute-force or exact search. FAISS provides an implementation for this called IndexFlatL2, which uses the L2 (Euclidean) distance for comparison; the distances it returns are squared L2 values. While this guarantees perfect accuracy, the cost of each query grows linearly with the dataset size, making it impractical for many workloads with millions or billions of vectors.

However, for smaller datasets or applications where 100% recall is non-negotiable, IndexFlatL2 is the perfect starting point. It serves as a fundamental benchmark against which all other approximate indexes are measured.
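To make the linear cost concrete, here is a minimal NumPy sketch of what an exact search computes. It is an illustration only, not how FAISS implements IndexFlatL2 internally; the function name `brute_force_search` and the `*_demo` arrays are hypothetical.

```python
import numpy as np

def brute_force_search(xb, xq, k):
    """Exact k-NN by squared L2 distance; work grows with nq * nb * d."""
    # ||q - b||^2 = ||q||^2 - 2 q.b + ||b||^2, computed for all pairs at once
    q_norms = (xq ** 2).sum(axis=1, keepdims=True)   # shape (nq, 1)
    b_norms = (xb ** 2).sum(axis=1)                  # shape (nb,)
    dists = q_norms - 2.0 * xq @ xb.T + b_norms      # shape (nq, nb)
    # Take the k smallest distances per query, sorted ascending
    idx = np.argsort(dists, axis=1)[:, :k]
    return np.take_along_axis(dists, idx, axis=1), idx

rng = np.random.default_rng(0)
xb_demo = rng.random((1000, 16), dtype=np.float32)
xq_demo = xb_demo[:3].copy()   # queries that are themselves in the database
D_demo, I_demo = brute_force_search(xb_demo, xq_demo, k=3)
```

Every query touches every database vector, which is why the distance matrix has `nq * nb` entries. The approximate indexes discussed later avoid building most of that matrix.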

import numpy as np

import faiss

# 1. Define the dimensionality of the vectors

d = 128 # e.g., for a simple embedding model

# 2. Create a dataset of random vectors

nb = 100000 # number of vectors in the database

nq = 1000 # number of query vectors

np.random.seed(1234) # make random reproducible

xb = np.random.random((nb, d)).astype('float32')

xq = np.random.random((nq, d)).astype('float32')

# 3. Build the simplest FAISS index: IndexFlatL2

index = faiss.IndexFlatL2(d)

print(f"Is the index trained? {index.is_trained}") # True, as FlatL2 requires no training

# 4. Add the database vectors to the index

index.add(xb)

print(f"Total vectors in the index: {index.ntotal}")

# 5. Perform a search

k = 5 # we want to find the 5 nearest neighbors

D, I = index.search(xq, k) # D: distances, I: indices/IDs

# Print results for the first 5 queries

print("Indices of nearest neighbors:")

print(I[:5])

print("Distances to nearest neighbors:")

print(D[:5])

The Need for Speed: Introduction to Approximate Nearest Neighbor (ANN)

For most real-world applications, the trade-off between perfect accuracy and significant speed improvement is not just acceptable but necessary. This is the domain of Approximate Nearest Neighbor (ANN) search. FAISS provides a rich collection of ANN indexes that use clever algorithms to partition the search space, drastically reducing the number of comparisons needed. This approach is what allows FAISS to scale to billions of vectors and is a core principle behind the performance of dedicated vector databases highlighted in recent Milvus News and Pinecone News. The key idea is to sacrifice a small amount of recall (finding the true nearest neighbors) for orders-of-magnitude gains in search latency.
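Recall is easy to measure once you have exact results to compare against. The sketch below assumes `I_true` comes from an exact index such as IndexFlatL2 and `I_approx` from an ANN index searched with the same queries and the same k; the helper name `recall_at_k` is hypothetical.

```python
import numpy as np

def recall_at_k(I_true, I_approx):
    """Fraction of the true top-k neighbors that the approximate search also returned."""
    hits = 0
    for true_row, approx_row in zip(I_true, I_approx):
        hits += len(set(true_row.tolist()) & set(approx_row.tolist()))
    return hits / I_true.size

# Toy id arrays standing in for the `I` outputs of two index.search calls
I_true = np.array([[0, 1, 2], [3, 4, 5]])
I_approx = np.array([[0, 2, 9], [3, 4, 5]])
recall = recall_at_k(I_true, I_approx)   # 5 of the 6 true neighbors were found
```

Tracking this number alongside query latency is one way to quantify the trade-off for a given index and parameter setting.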

Building a Practical Search System with FAISS and Modern Tooling

To leverage FAISS effectively, you first need high-quality vector embeddings. The modern AI ecosystem, filled with updates from Hugging Face News and model releases from OpenAI News and Mistral AI News, provides a plethora of powerful, open-source models for this task.

Generating Embeddings with Sentence Transformers

The sentence-transformers library is a popular choice for generating state-of-the-art text embeddings. It provides a simple interface to a wide range of pre-trained models from the Hugging Face Hub, making it easy to convert text into meaningful numerical representations. These models are often fine-tuned for semantic similarity tasks, making them ideal for RAG and semantic search.

Implementing IVF_FLAT for Scalable Search

One of the most widely used and balanced indexes in FAISS is IndexIVFFlat. The “IVF” stands for Inverted File, a concept borrowed from traditional information retrieval. This index works by first partitioning the vector space into cells (using a clustering algorithm like k-means). Each vector is then assigned to the cell whose centroid is nearest. During a search, FAISS inspects only the nprobe cells whose centroids are closest to the query (nprobe defaults to 1, meaning just the single nearest cell). This dramatically narrows down the search space.

This partitioning strategy is a cornerstone of large-scale data processing, with similar clustering concepts appearing in frameworks like Apache Spark MLlib. In FAISS, tuning the number of cells (nlist) and the nprobe value allows you to fine-tune the speed-versus-accuracy trade-off for your specific application.
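The cell-and-probe idea can be sketched in plain NumPy. This is a simplified illustration under stated assumptions (a basic Lloyd's k-means, Python lists as inverted lists), not FAISS's implementation; all function names here are hypothetical.

```python
import numpy as np

def sq_dists(a, b):
    """Pairwise squared L2 distances, shape (len(a), len(b))."""
    return ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=2)

def kmeans(x, n_cells, n_iter=10, seed=0):
    """Plain Lloyd's k-means; returns centroids of shape (n_cells, d)."""
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), n_cells, replace=False)].copy()
    for _ in range(n_iter):
        assign = sq_dists(x, centroids).argmin(axis=1)
        for c in range(n_cells):
            members = x[assign == c]
            if len(members):          # keep the old centroid if a cell empties
                centroids[c] = members.mean(axis=0)
    return centroids

def ivf_build(xb, n_cells):
    centroids = kmeans(xb, n_cells)
    assign = sq_dists(xb, centroids).argmin(axis=1)
    # Inverted lists: for each cell, the ids of the vectors assigned to it
    lists = [np.flatnonzero(assign == c) for c in range(n_cells)]
    return centroids, lists

def ivf_search(xb, centroids, lists, q, k, nprobe):
    # Visit only the nprobe cells whose centroids are closest to the query
    cells = sq_dists(q[None, :], centroids)[0].argsort()[:nprobe]
    cand = np.concatenate([lists[c] for c in cells])
    d = ((xb[cand] - q) ** 2).sum(axis=1)
    order = d.argsort()[:k]
    return d[order], cand[order]

rng = np.random.default_rng(0)
xb_demo = rng.random((2000, 16), dtype=np.float32)
centroids, lists = ivf_build(xb_demo, n_cells=16)
q = xb_demo[42]
# Probing every cell is equivalent to exact search; probing one cell is fastest
D_all, I_all = ivf_search(xb_demo, centroids, lists, q, k=5, nprobe=16)
D_one, I_one = ivf_search(xb_demo, centroids, lists, q, k=5, nprobe=1)
```

With `nprobe=1` only one cell's worth of vectors is compared against the query, so neighbors that fall just across a cell boundary can be missed. Raising `nprobe` recovers them at the cost of more comparisons.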

import numpy as np

import faiss

from sentence_transformers import SentenceTransformer

# 1. Sample documents for our knowledge base

documents = [

"The Eiffel Tower is a wrought-iron lattice tower on the Champ de Mars in Paris.",

"The Great Wall of China is a series of fortifications made of stone, brick, and other materials.",

"Photosynthesis is a process used by plants to convert light energy into chemical energy.",

"The Amazon rainforest is the world's largest tropical rainforest, famed for its biodiversity.",

"Python is a high-level, general-purpose programming language.",

"Meta AI developed the Llama series of large language models."

]

# 2. Load a pre-trained model from Sentence Transformers

# This is a key topic in Hugging Face Transformers News

model = SentenceTransformer('all-MiniLM-L6-v2')

doc_embeddings = model.encode(documents).astype('float32')

d = doc_embeddings.shape[1] # Get embedding dimension

# 3. Build an IndexIVFFlat index

nlist = 2 # Number of cells/clusters; tiny here because the demo has only 6 documents (FAISS may warn about too few training points)

quantizer = faiss.IndexFlatL2(d) # The underlying index for the cells

index = faiss.IndexIVFFlat(quantizer, d, nlist)

# 4. Train the index on the data

# The index needs to learn the boundaries of the cells (centroids)

index.train(doc_embeddings)

index.add(doc_embeddings)

print(f"Index is trained: {index.is_trained}, total vectors: {index.ntotal}")

# 5. Perform a query

query_text = "What is the famous tower in France?"

query_embedding = model.encode([query_text]).astype('float32')

k = 2 # Find the top 2 most relevant documents

# Set nprobe: how many nearby cells to search. Higher is more accurate but slower.

index.nprobe = 2

D, I = index.search(query_embedding, k)

# 6. Interpret the results

print(f"Query: '{query_text}'")

print("Top relevant documents:")

for i in I[0]:

    print(f" - {documents[i]}")

This example forms the retrieval core of a RAG system. Frameworks such as LlamaIndex and Haystack can use FAISS as a local, in-memory vector store, orchestrating this retrieval step before passing the results to an LLM from a provider like Anthropic or Cohere.

Pushing the Limits: Advanced Indexing and GPU Acceleration

For truly massive datasets—terabytes of vectors—even IndexIVFFlat can become a bottleneck due to its high memory consumption. FAISS offers more advanced techniques like Product Quantization (PQ) to tackle this challenge, along with powerful GPU acceleration.

Optimizing for Memory and Speed with Product Quantization (PQ)

Product Quantization is a vector compression technique that dramatically reduces the memory footprint of an index. It works by splitting each vector into sub-vectors and then using clustering to represent each sub-vector with a short code (e.g., 8 bits). This means a high-dimensional float32 vector can be stored using just a few bytes. The IndexIVFPQ combines the IVF partitioning scheme with PQ compression.

This level of optimization is critical for deploying large-scale AI on-premise or at the edge, where memory is at a premium. It offers a level of control not always available in managed cloud platforms like AWS SageMaker or Azure Machine Learning.
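The encode and decode steps of Product Quantization can be sketched in NumPy as follows. This is a toy illustration, not FAISS's implementation: it uses a basic k-means and only 16 centroids per sub-quantizer (rather than the 256 that 8-bit codes allow) to keep the demo fast, and all function names are hypothetical.

```python
import numpy as np

def train_pq(x, m, n_centroids=16, n_iter=8, seed=0):
    """Learn one small codebook per sub-vector; shape (m, n_centroids, d // m)."""
    n, d = x.shape
    assert d % m == 0, "d must be divisible by m"
    rng = np.random.default_rng(seed)
    subs = x.reshape(n, m, d // m)
    codebooks = np.empty((m, n_centroids, d // m), dtype=x.dtype)
    for j in range(m):
        sub = subs[:, j, :]
        cb = sub[rng.choice(n, n_centroids, replace=False)].copy()
        for _ in range(n_iter):
            dist = ((sub[:, None, :] - cb[None, :, :]) ** 2).sum(axis=2)
            assign = dist.argmin(axis=1)
            for c in range(n_centroids):
                if np.any(assign == c):
                    cb[c] = sub[assign == c].mean(axis=0)
        codebooks[j] = cb
    return codebooks

def pq_encode(x, codebooks):
    """Replace each sub-vector by the id of its nearest centroid (one uint8 each)."""
    m, _, dsub = codebooks.shape
    subs = x.reshape(len(x), m, dsub)
    codes = np.empty((len(x), m), dtype=np.uint8)
    for j in range(m):
        dist = ((subs[:, j, None, :] - codebooks[j][None, :, :]) ** 2).sum(axis=2)
        codes[:, j] = dist.argmin(axis=1)
    return codes

def pq_decode(codes, codebooks):
    """Rebuild approximate vectors by concatenating the selected centroids."""
    m = codebooks.shape[0]
    return np.concatenate([codebooks[j][codes[:, j]] for j in range(m)], axis=1)

rng = np.random.default_rng(0)
x_demo = rng.random((1000, 32), dtype=np.float32)
codebooks = train_pq(x_demo, m=4)
codes = pq_encode(x_demo, codebooks)      # 4 bytes per vector instead of 128
approx = pq_decode(codes, codebooks)      # lossy reconstruction
mse = float(((x_demo - approx) ** 2).mean())
```

The compression is lossy: `approx` only approximates `x_demo`, which is the source of the accuracy cost that comes with PQ-based indexes.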

import numpy as np

import faiss

d = 256 # Vector dimension

nb = 200000 # Database size

np.random.seed(123)

xb = np.random.random((nb, d)).astype('float32')

# --- Setting up the IndexIVFPQ ---

nlist = 100 # Number of clusters

m = 8 # Number of sub-quantizers

nbits = 8 # Bits per sub-quantizer code (8 bits = 256 centroids)

# Use a FlatL2 index as the coarse quantizer

quantizer = faiss.IndexFlatL2(d)

# Create the IVFPQ index

index = faiss.IndexIVFPQ(quantizer, d, nlist, m, nbits)

# Train the index

index.train(xb)

# Add the vectors

index.add(xb)

# --- Perform a search ---

xq = np.random.random((10, d)).astype('float32')

k = 10

index.nprobe = 10 # Search 10 closest clusters

D, I = index.search(xq, k)

print("IVFPQ Search Results (Indices):")

print(I)

# Calculate memory usage (approximate)

# Each vector is stored using m bytes (since nbits=8)

mem_bytes = index.ntotal * m

print(f"Approximate memory usage for vectors: {mem_bytes / 1024:.2f} KB")

# Compare with IndexFlatL2 memory

mem_flat_bytes = index.ntotal * d * 4 # 4 bytes per float32

print(f"Memory usage for IndexFlatL2: {mem_flat_bytes / (1024*1024):.2f} MB")
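The estimate above counts only the PQ codes. IVF indexes also store a 64-bit ID alongside each vector, so a slightly fuller back-of-the-envelope calculation might look like the sketch below. The helper `bytes_per_vector` is hypothetical, and the figures still ignore centroids, codebooks, and allocator overhead.

```python
def bytes_per_vector(d, m=None, nbits=8, id_bytes=8):
    """Rough per-vector storage: raw float32 when m is None, otherwise PQ codes."""
    payload = d * 4 if m is None else (m * nbits + 7) // 8
    return payload + id_bytes

nb, d, m = 200_000, 256, 8
flat_mb = nb * d * 4 / 2**20                          # IndexFlatL2: raw vectors only
ivfflat_mb = nb * bytes_per_vector(d) / 2**20         # raw vectors + ids
ivfpq_mb = nb * bytes_per_vector(d, m=m) / 2**20      # 8-byte codes + 8-byte ids

print(f"Flat: {flat_mb:.1f} MB, IVFFlat: {ivfflat_mb:.1f} MB, IVFPQ: {ivfpq_mb:.1f} MB")
```

Even with the IDs included, the PQ index in this configuration needs roughly 3 MB where the uncompressed vectors need about 195 MB.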

Harnessing NVIDIA GPUs for Blazing-Fast Search

One of FAISS’s most compelling features is its GPU support, a testament to the hardware-centric focus often seen in NVIDIA AI News. For applications requiring real-time, low-latency search, moving the index to a GPU can provide a substantial speedup, on the order of 10-20x in favorable cases, depending on the index type, batch size, and hardware. FAISS provides simple utility functions to transfer supported CPU indexes, such as Flat, IVFFlat, and IVFPQ, to one or more GPUs.

This capability makes FAISS a strong candidate for the retrieval stage of high-throughput inference services, alongside model-serving systems such as the Triton Inference Server. By leveraging GPUs, you can serve millions of search queries per day on a single machine, a critical requirement for production AI systems.

import numpy as np

import faiss

d = 64

nb = 100000

xb = np.random.random((nb, d)).astype('float32')

# 1. Create a CPU index (e.g., IndexIVFFlat)

nlist = 100

quantizer = faiss.IndexFlatL2(d)

cpu_index = faiss.IndexIVFFlat(quantizer, d, nlist)

cpu_index.train(xb)

cpu_index.add(xb)

# 2. Check if a GPU is available

if faiss.get_num_gpus() > 0:

    print(f"Found {faiss.get_num_gpus()} GPUs. Moving index to GPU.")

    # Define the GPU resource

    res = faiss.StandardGpuResources()

    # Use a utility function to move the index

    gpu_index = faiss.index_cpu_to_gpu(res, 0, cpu_index) # Move to GPU 0

    # 3. Perform a search on the GPU

    xq = np.random.random((100, d)).astype('float32')

    k = 10

    gpu_index.nprobe = 10

    D, I = gpu_index.search(xq, k)

    print("Search completed on GPU.")

    print(I[:5])

else:

    print("No GPU found. Skipping GPU example.")

Best Practices and Integrating FAISS into the MLOps Ecosystem

Successfully deploying a FAISS-based system requires more than just writing code; it involves careful planning, index selection, and integration with MLOps best practices.

Choosing the Right Index: A Practical Guide

The FAISS documentation provides a comprehensive guide for index selection, but here’s a quick rule of thumb:

- IndexFlatL2: For datasets under ~1 million vectors where perfect accuracy is essential and search time is not critical.

- IndexHNSWFlat: For low-latency search where memory usage is less of a concern. HNSW (Hierarchical Navigable Small World) is a graph-based index that offers excellent speed/accuracy trade-offs.

- IndexIVFFlat: A great all-rounder for large datasets, offering a good balance between speed, accuracy, and memory.

- IndexIVFPQ: For massive datasets where memory is the primary constraint. It offers the best compression at the cost of some accuracy.
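The rule of thumb above can be written down as a small helper that returns a description string in the style accepted by `faiss.index_factory`. This is a hypothetical sketch: the function name, the flags, the `4 * sqrt(n)` heuristic for the number of cells, and the default of 16 PQ sub-quantizers are all illustrative assumptions to tune for your own data.

```python
import math

def suggest_index_factory(n_vectors, need_exact=False, memory_tight=False,
                          low_latency=False, pq_m=16):
    """Map the rule of thumb to an index_factory-style description string."""
    if need_exact and n_vectors < 1_000_000:
        return "Flat"                       # brute force, perfect recall
    # Assumed heuristic: number of IVF cells proportional to sqrt(n)
    nlist = max(1, 4 * math.isqrt(n_vectors))
    if memory_tight:
        return f"IVF{nlist},PQ{pq_m}"       # d must be divisible by pq_m
    if low_latency:
        return "HNSW32"                     # graph index, higher memory use
    return f"IVF{nlist},Flat"               # general-purpose default

small_exact = suggest_index_factory(10_000, need_exact=True)
fast = suggest_index_factory(1_000_000, low_latency=True)
balanced = suggest_index_factory(1_000_000)
compact = suggest_index_factory(100_000_000, memory_tight=True)
```

The returned string would then be passed along with the dimension, for example `faiss.index_factory(d, balanced)`, and the resulting index trained and populated as in the earlier examples.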

FAISS in Production: MLOps and Monitoring

In a production environment, your FAISS index is a model artifact that needs to be versioned and managed. When you update your embedding model, you must re-generate your embeddings and rebuild your index, because vectors produced by different models are not comparable. Tools such as MLflow or Weights & Biases are well suited to tracking embedding model versions and the corresponding FAISS indexes. Furthermore, wrapping your FAISS search functionality in a web service using a framework such as FastAPI allows for easy integration into larger microservices architectures. For distributed index building, frameworks like Ray can parallelize the embedding generation and indexing process across multiple nodes.
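One lightweight way to tie an index to the embedding model that produced it is a manifest file saved next to the index (which itself would be saved with `faiss.write_index`). The sketch below is a hypothetical convention, not a FAISS feature; the file naming, field names, and helper functions are assumptions.

```python
import json
import tempfile
from pathlib import Path

def write_manifest(index_path, model_name, dim, n_vectors):
    """Record which embedding model produced the vectors stored in index_path."""
    manifest = {
        "index_file": Path(index_path).name,
        "embedding_model": model_name,
        "dim": dim,
        "n_vectors": n_vectors,
    }
    manifest_path = Path(str(index_path) + ".manifest.json")
    manifest_path.write_text(json.dumps(manifest, indent=2))
    return manifest_path

def check_manifest(index_path, model_name, dim):
    """Raise if the serving model differs from the model the index was built with."""
    manifest = json.loads(Path(str(index_path) + ".manifest.json").read_text())
    if manifest["embedding_model"] != model_name or manifest["dim"] != dim:
        raise ValueError(
            f"Index built with {manifest['embedding_model']} (dim={manifest['dim']}), "
            f"but serving with {model_name} (dim={dim}); rebuild the index."
        )
    return manifest

tmp_dir = Path(tempfile.mkdtemp())
index_path = tmp_dir / "docs_v1.faiss"    # hypothetical index location
write_manifest(index_path, "all-MiniLM-L6-v2", dim=384, n_vectors=6)
manifest = check_manifest(index_path, "all-MiniLM-L6-v2", dim=384)

# Simulate a deployment where the embedding model changed but the index did not
try:
    check_manifest(index_path, "some-newer-model", dim=768)
    mismatch_detected = False
except ValueError:
    mismatch_detected = True
```

Running a check like this at service startup turns a silent quality regression (querying an old index with new embeddings) into an explicit error.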

The Vector Database Landscape: FAISS vs. Managed Solutions

FAISS is a library, not a managed database. This is a crucial distinction when comparing it to vector databases such as Weaviate, Qdrant, or Chroma.

- FAISS Pros: Unmatched performance for local search, zero network latency, complete control over hardware and indexing parameters, and no licensing or service fees.

- FAISS Cons: Requires you to manage your own infrastructure, scaling, and API layer. Advanced features like metadata filtering require more manual implementation.

Managed databases excel at providing a holistic solution with APIs, scalability, and metadata filtering out-of-the-box, making them a great choice for teams who want to move fast without managing infrastructure. FAISS is the ideal choice for teams that need maximum performance, cost-efficiency at scale, and deep control over the search process.
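As an illustration of the manual work that metadata filtering involves with a bare library, one common pattern is to over-fetch candidates and filter them afterwards. The sketch below assumes a `search_fn(queries, k)` that returns `(D, I)` arrays in the same shape as a FAISS `index.search` call; here a NumPy brute-force function stands in for it, and all names are hypothetical.

```python
import numpy as np

def filtered_search(search_fn, query, k, keep, overfetch=4):
    """Fetch k * overfetch candidates, then keep ids that pass the metadata predicate."""
    D, I = search_fn(query, k * overfetch)
    # An id of -1 marks a missing result in FAISS output, so skip those
    results = [(float(d), int(i)) for d, i in zip(D[0], I[0])
               if i >= 0 and keep(int(i))]
    return results[:k]

rng = np.random.default_rng(0)
xb_demo = rng.random((500, 8), dtype=np.float32)
# Metadata lives outside the index, keyed by vector id
metadata = [{"lang": "en" if i % 2 == 0 else "fr"} for i in range(500)]

def numpy_search(xq, k):
    """Stand-in for index.search: exact squared-L2 search in NumPy."""
    d = ((xb_demo[None, :, :] - xq[:, None, :]) ** 2).sum(axis=2)
    idx = np.argsort(d, axis=1)[:, :k]
    return np.take_along_axis(d, idx, axis=1), idx

hits = filtered_search(numpy_search, xb_demo[:1], k=3,
                       keep=lambda i: metadata[i]["lang"] == "fr", overfetch=10)
```

Post-filtering can return fewer than k results when the predicate is very selective, which is one reason databases with built-in filtering can be more convenient for filter-heavy workloads.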

Conclusion

FAISS remains a cornerstone of high-performance similarity search in the modern AI era. Its speed, flexibility, and powerful features, from advanced quantization to seamless GPU acceleration, make it an indispensable tool for building cutting-edge applications. While the landscape of vector databases continues to evolve, the fundamental principles and raw power offered by FAISS ensure its continued relevance. It provides the foundational building blocks for sophisticated RAG pipelines, recommendation engines, and large-scale semantic search systems.

For developers and engineers looking to push the boundaries of what’s possible with AI, diving deep into FAISS is not just an academic exercise—it’s a practical investment. By understanding its various index types and performance trade-offs, you can build highly optimized, cost-effective, and scalable solutions that stand out in a competitive field. The next step is to experiment: grab a dataset, try different indexes, and see for yourself how FAISS can unlock new levels of performance for your AI applications.