Google Colab News: Supercharging AI Workflows with Go Concurrency

Google Colab has firmly established itself as an indispensable tool in the arsenal of data scientists, machine learning engineers, and researchers. By providing free access to powerful GPUs and TPUs within a familiar Jupyter notebook interface, it has democratized AI development, making it possible for anyone with an internet connection to train complex models. However, the latest Google Colab News isn’t just about new hardware tiers or UI tweaks; it’s about the platform’s evolution into a versatile cloud development environment capable of handling sophisticated, end-to-end workflows that go beyond pure Python. As datasets grow larger and preprocessing pipelines become more complex, the need for high-performance, concurrent processing becomes paramount. This is where leveraging other languages, like Go, directly within a Colab notebook can unlock significant performance gains and architectural advantages, transforming Colab from a simple experimentation tool into a robust development hub for serious AI projects.

Bridging Worlds: Setting Up and Running Go in Google Colab

While Google Colab is synonymous with Python, its underlying environment is a standard Linux virtual machine. This means you can install and run compilers, tools, and executables for other languages. For tasks requiring high concurrency and raw performance, such as parallel data fetching, log processing, or running lightweight microservices, Go (Golang) is an exceptional choice. Its simple syntax, powerful concurrency primitives, and ability to compile to a single static binary make it a perfect companion for performance-critical components of an ML pipeline. Let’s start by setting up the Go environment and running a basic program directly within a Colab cell.

Installation and First Program

A single shell command can be run from a notebook cell by prefixing it with an exclamation mark (!). Each !-line runs in its own shell, though, so a multi-line “here document” (<<'EOF') only works when the whole cell is executed as one shell script, which is what the %%bash cell magic does. We’ll use it to install the Go compiler, write a Go source file, and then compile and run it. This entire process can be managed within a single Colab cell, keeping your workflow self-contained.

```bash
%%bash
# %%bash must be the first line of the cell; it runs the whole cell as one shell script.

# Install the Go toolchain
apt-get install -y golang > /dev/null

# Write the Go source file. Quoting 'EOF' stops the shell from expanding anything inside.
cat <<'EOF' > processor.go
package main

import (
	"fmt"
	"strings"
)

// processText is a simple function to demonstrate a basic Go operation.
// It converts a string to uppercase and counts its words.
func processText(input string) (string, int) {
	if input == "" {
		return "", 0
	}
	upperCased := strings.ToUpper(input)
	words := strings.Fields(upperCased)
	return upperCased, len(words)
}

func main() {
	text := "This is a sample sentence for processing in Go."
	processedText, wordCount := processText(text)
	fmt.Printf("Original: %s\n", text)
	fmt.Printf("Processed: %s\n", processedText)
	fmt.Printf("Word Count: %d\n", wordCount)
}
EOF

# Compile and run the Go program
go run processor.go
```

In this example, the processText function performs a simple string manipulation: it upper-cases the input and counts its words. The main function calls it and prints the result. Running go run processor.go instructs the Go toolchain to compile and execute the code. This simple setup opens the door to integrating Go programs into your notebooks, which matters whether you follow PyTorch News or TensorFlow News, because data preprocessing is a bottleneck common to both.

High-Throughput Data Ingestion with Goroutines and Channels

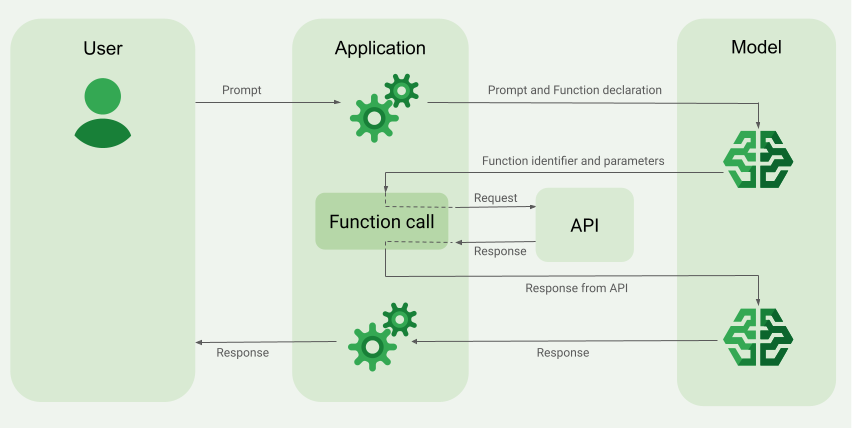

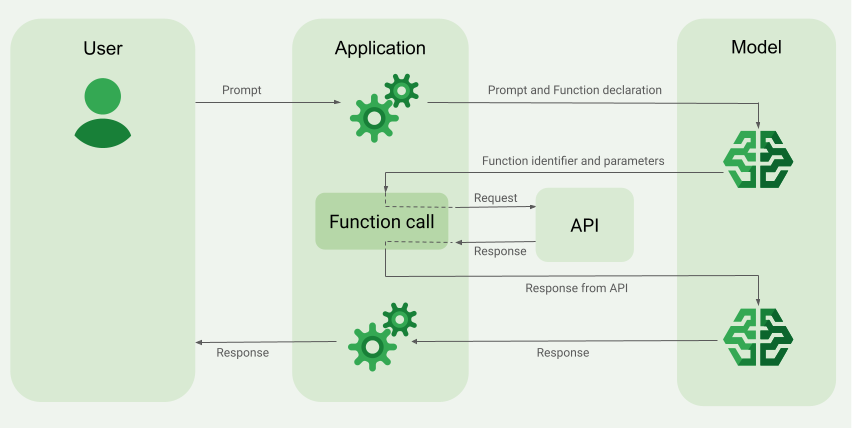

The true power of Go lies in its first-class support for concurrency through goroutines and channels. A goroutine is a lightweight thread managed by the Go runtime, and channels are typed conduits through which you can send and receive values with the <- operator, enabling safe communication between goroutines. This model is perfectly suited for I/O-bound tasks like downloading thousands of images, querying APIs, or reading from multiple data files in parallel—common scenarios when preparing datasets for models from Hugging Face News or training on large corpora. Instead of processing data serially, we can spin up a pool of worker goroutines to handle the workload concurrently, dramatically reducing overall processing time.
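Before building a full worker pool, it may help to see the two primitives in isolation. The following is a minimal sketch, not taken from the original article: the function names squares and collect are hypothetical, chosen only for illustration. One goroutine sends values with the <- operator and closes the channel, and the caller receives until the channel is closed.

```go
package main

import "fmt"

// squares sends the square of each input on out, then closes out
// so that the receiver's range loop can end.
func squares(nums []int, out chan<- int) {
	for _, n := range nums {
		out <- n * n // send a value into the channel
	}
	close(out)
}

// collect starts squares in a goroutine and gathers everything it sends.
func collect(nums []int) []int {
	out := make(chan int) // unbuffered: each send waits for a matching receive
	go squares(nums, out)

	var got []int
	for v := range out { // receive until the channel is closed
		got = append(got, v)
	}
	return got
}

func main() {
	fmt.Println(collect([]int{1, 2, 3, 4}))
}
```

Because there is a single sender here, the values arrive in the order they were sent. With several goroutines sending on the same channel, that ordering guarantee no longer holds.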

Building a Concurrent Worker Pool

Let’s create a more practical example: a worker pool that processes a list of “jobs” concurrently. Each job could represent a file to download, an image to preprocess, or a text document to tokenize. The main goroutine will send jobs into a jobs channel, and a fixed number of worker goroutines will read from this channel, process the data, and send the results to a results channel.

```bash
%%bash
# Run the whole cell as one shell script so the here-document below works.
# Create the file concurrent_pipeline.go
cat <<'EOF' > concurrent_pipeline.go
package main

import (
	"fmt"
	"strconv"
	"time"
)

// worker reads from the jobs channel and sends results to the results channel.
func worker(id int, jobs <-chan string, results chan<- string) {
	for j := range jobs {
		fmt.Printf("Worker %d started job %s\n", id, j)
		// Simulate work, e.g., an API call or file processing
		time.Sleep(time.Millisecond * 500)
		result := "Result for job " + j
		fmt.Printf("Worker %d finished job %s\n", id, j)
		results <- result
	}
}

func main() {
	const numJobs = 10
	const numWorkers = 3

	jobs := make(chan string, numJobs)
	results := make(chan string, numJobs)

	// Start up the workers
	for w := 1; w <= numWorkers; w++ {
		go worker(w, jobs, results)
	}

	// Send jobs to the jobs channel
	for j := 1; j <= numJobs; j++ {
		jobs <- "job_id_" + strconv.Itoa(j)
	}
	close(jobs) // Close the channel to signal that no more jobs will be sent

	// Collect all the results
	for a := 1; a <= numJobs; a++ {
		fmt.Println(<-results)
	}
}
EOF

# Compile and run the concurrent program
go run concurrent_pipeline.go
```

When you run this code, you'll see the workers pick up jobs concurrently rather than one after another, so the order of the log lines varies between runs. With three workers and ten 500 ms jobs, the run takes roughly 2 seconds instead of the 5 seconds a serial loop would need. The close(jobs) call is crucial; it signals to the worker goroutines (in the for j := range jobs loop) that no more jobs are coming, allowing them to terminate gracefully. This pattern is incredibly powerful for scaling data pipelines and is a technique often seen in production systems built with tools discussed in Ray News or Dask News, but now accessible directly within Colab.
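The worker pool above counts results because it knows numJobs in advance. When the number of results is not known up front, one common variation is to track the workers with a sync.WaitGroup and close the results channel once they have all returned. The sketch below illustrates that idea under stated assumptions: runPool is a hypothetical helper, and strings.ToUpper stands in for real work.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
	"sync"
)

// runPool processes jobs with numWorkers goroutines and returns the results
// sorted, because completion order is not deterministic.
func runPool(jobs []string, numWorkers int) []string {
	jobCh := make(chan string)
	resCh := make(chan string)

	var wg sync.WaitGroup
	for w := 0; w < numWorkers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := range jobCh {
				resCh <- strings.ToUpper(j) // stand-in for real work
			}
		}()
	}

	// Feed the jobs, then close the channel so the workers' range loops end.
	go func() {
		for _, j := range jobs {
			jobCh <- j
		}
		close(jobCh)
	}()

	// Close the results channel once every worker has returned.
	go func() {
		wg.Wait()
		close(resCh)
	}()

	var results []string
	for r := range resCh {
		results = append(results, r)
	}
	sort.Strings(results)
	return results
}

func main() {
	fmt.Println(runPool([]string{"b", "a", "c"}, 2))
}
```

Closing the results channel from a separate goroutine lets the collector use a plain range loop, so it does not need to know how many results to expect.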

Advanced Architecture: Building Modular Pipelines with Interfaces

To build robust and maintainable software, it's essential to write modular and extensible code. Go's interfaces provide a powerful way to achieve this. An interface is a type that defines a set of method signatures. Any other type that implements all methods of the interface is said to satisfy that interface implicitly. This allows for a form of polymorphism, where you can write functions that operate on interfaces without knowing the underlying concrete type. In an ML context, this is invaluable for creating flexible data processing pipelines. You could define a `DataProcessor` interface and then create multiple implementations: one for images, one for text, and one for audio. Your main pipeline logic can then work with a slice of `DataProcessor`s, making it easy to add, remove, or swap out processing steps. This mirrors the modular philosophy of frameworks featured in LangChain News and LlamaIndex News.

Example: A Polymorphic Processing Pipeline

Let's define a Processor interface with a single Process() method. We'll then create two different structs, ImageProcessor and TextProcessor, that both satisfy this interface. Our main function can then iterate over a list of these processors and call their Process() method, demonstrating how to build a pipeline from heterogeneous components.

```bash
%%bash
# Run the whole cell as one shell script so the here-document below works.
# Create the file interface_pipeline.go
cat <<'EOF' > interface_pipeline.go
package main

import "fmt"

// Processor defines the interface for any data processing step.
type Processor interface {
	Process() error
}

// TextProcessor is a concrete type for processing text data.
type TextProcessor struct {
	Content string
}

func (tp TextProcessor) Process() error {
	fmt.Printf("Processing text: '%s'\n", tp.Content)
	// Add text-specific logic here (e.g., tokenization, embedding)
	return nil
}

// ImageProcessor is a concrete type for processing image data.
type ImageProcessor struct {
	FilePath string
}

func (ip ImageProcessor) Process() error {
	fmt.Printf("Processing image at: %s\n", ip.FilePath)
	// Add image-specific logic here (e.g., resizing, normalization)
	return nil
}

func main() {
	// Create a pipeline with different types of processors.
	pipeline := []Processor{
		TextProcessor{Content: "Hello from the world of AI!"},
		ImageProcessor{FilePath: "/path/to/cat.jpg"},
		TextProcessor{Content: "This is a key insight from Google DeepMind News."},
		ImageProcessor{FilePath: "/path/to/dog.png"},
	}

	// Run the pipeline.
	fmt.Println("--- Starting Processing Pipeline ---")
	for i, p := range pipeline {
		fmt.Printf("Step %d: ", i+1)
		err := p.Process()
		if err != nil {
			fmt.Printf("Error processing step %d: %v\n", i+1, err)
			// Decide whether to halt or continue on error
		}
	}
	fmt.Println("--- Pipeline Finished ---")
}
EOF

# Compile and run
go run interface_pipeline.go
```

This design pattern decouples the pipeline orchestration logic from the specific implementation of each processing step. You can add a new `AudioProcessor` or `VideoProcessor` without changing the main loop, because the loop only depends on the Processor interface. This level of software engineering rigor is crucial when moving from experimental notebooks to production-grade systems on platforms like Vertex AI News or AWS SageMaker News.
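To make the extensibility claim concrete, here is one way an `AudioProcessor` might look. It is only a sketch: the SampleRate field, its validation rule, and the runPipeline helper are assumptions introduced for illustration and are not part of the original example. The helper also shows one possible answer to the "halt or continue" question by stopping at the first error.

```go
package main

import (
	"errors"
	"fmt"
)

// Processor mirrors the interface from the example above.
type Processor interface {
	Process() error
}

// AudioProcessor is a hypothetical new step; its fields are illustrative.
type AudioProcessor struct {
	FilePath   string
	SampleRate int
}

func (ap AudioProcessor) Process() error {
	if ap.SampleRate <= 0 {
		return errors.New("audio: sample rate must be positive")
	}
	fmt.Printf("Processing audio at: %s (%d Hz)\n", ap.FilePath, ap.SampleRate)
	return nil
}

// runPipeline runs the steps in order and stops at the first failure.
// It returns how many steps completed and the wrapped error, if any.
func runPipeline(steps []Processor) (int, error) {
	for i, p := range steps {
		if err := p.Process(); err != nil {
			return i, fmt.Errorf("step %d: %w", i+1, err)
		}
	}
	return len(steps), nil
}

func main() {
	steps := []Processor{
		AudioProcessor{FilePath: "/path/to/clip.wav", SampleRate: 16000},
		AudioProcessor{FilePath: "/path/to/bad.wav"}, // zero sample rate: fails
	}
	done, err := runPipeline(steps)
	fmt.Println(done, err)
}
```

Nothing in runPipeline refers to audio, text, or images; any type with a Process() error method can be placed in the slice.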

Best Practices and Integration with Python

While running Go in Colab is powerful, the primary environment is still Python. The key to success is seamless integration. The most effective way to combine them is to compile your Go program into a standalone binary and then call it from Python using the subprocess module. This treats your Go program as a high-performance command-line tool.

Tips for Effective Integration

- Data Exchange: The simplest way to pass data is via standard input/output (stdin/stdout), often using a structured format like JSON. Your Python script can serialize data to a JSON string, pass it to the Go program's stdin, and then read the JSON output from its stdout. For larger data, writing to and reading from files on the Colab filesystem (/content/) is a robust alternative.
- Compilation vs. Interpretation: Use `go build` to create a compiled executable. This is faster for subsequent runs than using `go run`, which compiles and runs in one step. You only need to recompile when the Go code changes.
- Error Handling: Check the return code and capture stderr from the subprocess call in Python to properly handle errors that occur within the Go program.

Here’s a Python snippet demonstrating how to compile and run the first Go program we wrote, capturing its output.

```python
import os
import subprocess

# Assumes processor.go from the first example exists in the working directory.

# Step 1: Compile the Go program into a binary.
# The -o flag specifies the output file name.
compile_process = subprocess.run(
    ["go", "build", "-o", "processor_app", "processor.go"],
    capture_output=True,
    text=True,
)

if compile_process.returncode != 0:
    print("Go compilation failed:")
    print(compile_process.stderr)
else:
    print("Go program compiled successfully to 'processor_app'")

    # Step 2: Make the binary executable
    os.chmod("processor_app", 0o755)

    # Step 3: Run the compiled binary
    run_process = subprocess.run(
        ["./processor_app"],
        capture_output=True,
        text=True,
    )

    if run_process.returncode == 0:
        print("\nOutput from Go program:")
        print(run_process.stdout)
    else:
        print("\nError running Go program:")
        print(run_process.stderr)
```

This hybrid approach gives you the best of both worlds: Python's rich ecosystem of ML libraries (like those from NVIDIA AI News or Meta AI News) for high-level logic and model training, and Go's raw performance for heavy-duty, concurrent data processing.

Conclusion: The Future of AI Development in the Cloud

The narrative of Google Colab News is one of continuous evolution. What began as a tool for simple Python experiments has matured into a flexible and powerful cloud environment that can accommodate polyglot solutions. By integrating high-performance languages like Go, developers can overcome common bottlenecks in data preprocessing and build more efficient, scalable, and robust AI pipelines. The ability to leverage goroutines for concurrency and interfaces for modular design directly within a notebook environment accelerates the cycle from prototyping to production.

As you continue to push the boundaries of what's possible with AI, think beyond the confines of a single language. Embrace the full potential of the Colab virtual machine to select the right tool for the right job. This approach will not only improve the performance of your current projects but also equip you with the skills to build the next generation of sophisticated AI systems on enterprise-grade platforms like Azure AI News and Amazon Bedrock News.