Milvus News: Architecting Scalable Vector Search for Next-Gen RAG and Generative AI

Introduction: The Vector Database Revolution

In the rapidly evolving landscape of artificial intelligence, the infrastructure supporting machine learning models is undergoing a seismic shift. While the headlines are often dominated by OpenAI News regarding GPT-4 or Google DeepMind News concerning Gemini, the silent engine powering these generative experiences is the vector database. Among the leaders in this space, Milvus has emerged as a definitive standard for enterprise-grade vector similarity search. The recent surge in community growth and adoption signals a maturity in the ecosystem, moving from experimental notebooks to mission-critical production environments.

The necessity for robust vector databases stems from the limitations of traditional relational databases in handling unstructured data. As organizations rush to implement Retrieval-Augmented Generation (RAG), the ability to store, index, and query billions of embedding vectors with millisecond latency has become non-negotiable. This article delves into the technical advancements driving Milvus News, exploring how its cloud-native architecture facilitates seamless integration with frameworks highlighted in PyTorch News and TensorFlow News. We will explore the architectural decisions that allow Milvus to scale, provide practical implementation guides, and discuss how it fits into the broader MLOps landscape alongside tools like LangChain News and LlamaIndex News.

Section 1: Core Concepts and Architecture

To understand the current buzz surrounding Milvus, one must appreciate its architectural distinctiveness. Unlike lightweight vector libraries such as FAISS (often cited in FAISS News), Milvus is designed as a full-fledged database management system. It employs a cloud-native architecture that separates storage from computing, allowing each to scale independently. This is crucial for modern AI applications where storage requirements might grow linearly with data ingestion, while query load might spike unpredictably based on user traffic.

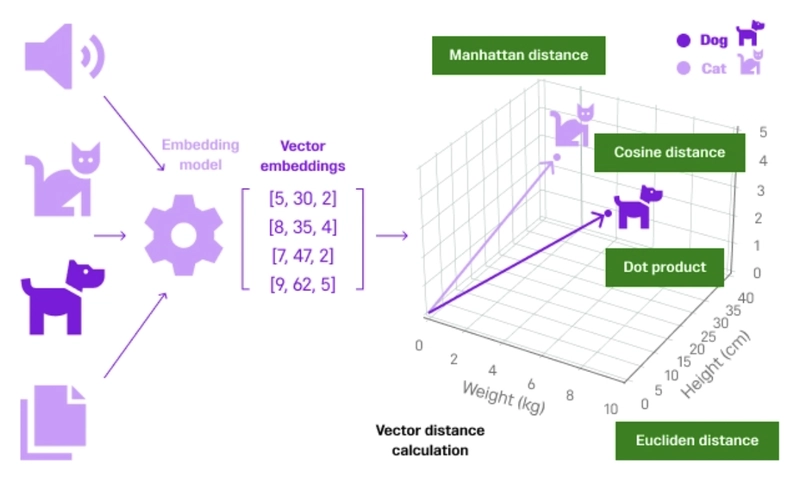

The Role of Embeddings

At the heart of Milvus is the management of vector embeddings. Whether you are following Hugging Face News for the latest transformer models or tracking Sentence Transformers News for specific encoding techniques, the output is invariably a high-dimensional vector. Milvus excels at indexing these vectors. It supports various data types, including floating-point vectors, binary vectors, and, more recently, sparse vectors—a feature often requested in Information Retrieval circles.

Schema Design and Collection Management

Designing a schema in Milvus is the first step toward building a scalable application. Unlike schema-less NoSQL approaches, Milvus enforces a schema to optimize indexing. However, recent updates have introduced dynamic fields, offering flexibility similar to JSON document stores. This hybrid approach allows developers to store scalar metadata (like document IDs, timestamps, or categories) alongside the vector data, enabling hybrid search capabilities.

Below is an example of how to connect to a Milvus instance, define a schema with both scalar and vector fields, and create a collection. This setup is foundational for any RAG pipeline.

from pymilvus import (

connections,

utility,

FieldSchema,

CollectionSchema,

DataType,

Collection,

)

# 1. Connect to the Milvus server

# In a production environment, this would be a remote URI

connections.connect("default", host="localhost", port="19530")

# 2. Define the Schema

# We need a primary key, a text field for metadata, and the vector field

fields = [

FieldSchema(name="pk", dtype=DataType.INT64, is_primary=True, auto_id=True),

FieldSchema(name="source_text", dtype=DataType.VARCHAR, max_length=65535),

FieldSchema(name="publish_date", dtype=DataType.INT64),

FieldSchema(name="embeddings", dtype=DataType.FLOAT_VECTOR, dim=768)

]

schema = CollectionSchema(fields, description="News Article Embeddings for RAG")

# 3. Create the Collection

collection_name = "tech_news_articles"

if utility.has_collection(collection_name):

utility.drop_collection(collection_name)

collection = Collection(name=collection_name, schema=schema)

print(f"Collection {collection_name} created successfully.")This code snippet demonstrates the strict typing system Milvus employs, which is essential for memory management and query optimization. As NVIDIA AI News often highlights, structured memory access is key to leveraging GPU acceleration for indexing, a feature Milvus supports natively.

Section 2: Implementation and Data Ingestion Pipelines

Once the infrastructure is established, the focus shifts to the data pipeline. In the context of Milvus News, a recurring theme is the integration with ETL tools and embedding providers. The workflow typically involves extracting text, chunking it, generating embeddings using a model (perhaps one referenced in Cohere News or Mistral AI News), and inserting the data into Milvus.

Handling Large-Scale Ingestion

For enterprise applications, inserting data row-by-row is inefficient. Milvus is optimized for batch insertion. When dealing with massive datasets—common in scenarios discussed in Apache Spark MLlib News or Ray News—developers should utilize the bulk insert APIs or streaming insertion methods. The system organizes data into segments, which are automatically sealed and indexed once they reach a certain size.

Integration with Embedding Models

Let’s look at a practical implementation using sentence-transformers to generate embeddings and insert them into our previously created collection. This workflow is standard for building semantic search engines.

from sentence_transformers import SentenceTransformer

import random

import time

# Load a pre-trained model

# Frequent updates in Hugging Face Transformers News make this easy

model = SentenceTransformer('all-mpnet-base-v2')

# Simulate data

texts = [

"Milvus releases new features for hybrid search.",

"TensorFlow updates focus on Keras integration.",

"PyTorch 2.0 introduces dynamic compilation.",

"Generative AI changes the landscape of database management.",

"Vector databases are essential for LLM memory."

]

# Generate Embeddings

# The dimension must match the schema (768 for mpnet-base)

vectors = model.encode(texts)

# Prepare data for insertion

# Data must be a list of lists, corresponding to the fields defined in schema

# [pk (auto), source_text, publish_date, embeddings]

data = [

texts,

[int(time.time()) for _ in range(len(texts))], # Dummy timestamps

vectors.tolist()

]

# Insert into Milvus

mr = collection.insert(data)

# Important: Flush to ensure data is written to disk and visible

collection.flush()

print(f"Inserted {len(texts)} entities. IDs: {mr.primary_keys}")This pipeline represents the “Write” path. However, the true power of Milvus lies in the “Read” path—specifically, how it handles indexing. Without an index, Milvus would perform a brute-force search, which is accurate but unscalable. Recent Milvus News emphasizes the support for various index types like HNSW (Hierarchical Navigable Small World) and DiskANN, enabling billion-scale searches on limited RAM.

Section 3: Advanced Indexing and Hybrid Search

As we delve deeper into Milvus News and technical updates, the sophistication of indexing algorithms becomes apparent. While Pinecone News and Weaviate News often discuss developer experience, Milvus differentiates itself with granular control over index parameters, catering to engineers who need to tune for specific recall vs. latency trade-offs.

Understanding Index Types

Milvus supports several index types. IVF_FLAT is a quantization-based index ideal for scenarios where recall is paramount. HNSW is a graph-based index that offers superior performance but consumes more memory. For extremely large datasets that exceed memory limits, Milvus integrates with technologies similar to those discussed in Microsoft Azure AI News regarding DiskANN, allowing indices to reside on NVMe SSDs while maintaining high throughput.

Hybrid Search: The Holy Grail of RAG

Pure vector search isn’t always enough. Sometimes you need to find “news about AI” (vector similarity) but only “published in the last 24 hours” (scalar filtering). This is where Hybrid Search comes in. Milvus allows you to apply boolean expressions to scalar fields before performing the vector search, significantly narrowing the search space and improving relevance.

The following code demonstrates creating an HNSW index and performing a hybrid search with metadata filtering.

# 1. Create an Index

# HNSW is generally the best trade-off for in-memory search

index_params = {

"metric_type": "L2",

"index_type": "HNSW",

"params": {"M": 8, "efConstruction": 64}

}

collection.create_index(

field_name="embeddings",

index_params=index_params

)

print("Index created. Loading collection to memory...")

collection.load()

# 2. Perform a Hybrid Search

# We want to find articles similar to "AI Database"

# BUT only if the publish_date is greater than a specific timestamp

query_text = "AI Database scaling"

query_vector = model.encode([query_text]).tolist()

# Search parameters

search_params = {

"metric_type": "L2",

"params": {"ef": 10}

}

# Boolean expression for filtering

# Assuming we only want recent items (logic depends on timestamp values)

filter_expr = "publish_date > 0"

results = collection.search(

data=query_vector,

anns_field="embeddings",

param=search_params,

limit=3,

expr=filter_expr,

output_fields=["source_text", "publish_date"]

)

for hits in results:

for hit in hits:

print(f"Hit: {hit.entity.get('source_text')} | Score: {hit.score}")This capability puts Milvus in direct competition with features often highlighted in Elasticsearch News, bridging the gap between traditional keyword search and neural search.

Section 4: Ecosystem Integration and Best Practices

The rise of Generative AI has created a new stack, often referred to as the “AI Sandwich.” Milvus News is increasingly dominated by its integrations with orchestration layers. It is no longer an isolated component but a critical node in a network involving LangChain News, LlamaIndex News, and model providers like Anthropic News.

LangChain Integration

One of the most common use cases today is using Milvus as a VectorStore within LangChain. This abstracts much of the boilerplate code and allows developers to swap out underlying databases easily. However, using Milvus specifically offers advantages in scaling that lightweight stores cannot match.

from langchain_community.vectorstores import Milvus

from langchain_community.embeddings import HuggingFaceEmbeddings

# Initialize Embeddings

embeddings = HuggingFaceEmbeddings(model_name="all-MiniLM-L6-v2")

# Connect to Milvus via LangChain wrapper

# This automatically handles schema creation and insertion

vector_db = Milvus.from_texts(

texts=[

"LangChain simplifies LLM application development.",

"Milvus provides the storage layer for RAG.",

"Together they enable powerful QA systems."

],

embedding=embeddings,

connection_args={"host": "localhost", "port": "19530"},

collection_name="langchain_demo"

)

# Perform a similarity search

query = "How do I store data for RAG?"

docs = vector_db.similarity_search(query)

print(f"Most relevant doc: {docs[0].page_content}")Optimization and Monitoring

To maintain a healthy Milvus cluster, observability is key. Following MLflow News and Weights & Biases News, we see a trend toward tracking not just model training, but inference infrastructure. Milvus exposes Prometheus metrics that should be monitored closely.

Key Optimization Tips:

- Consistency Levels: Milvus allows you to tune consistency (Strong, Bounded, Session, Eventually). For most RAG applications, “Bounded” is sufficient and offers better performance than “Strong.”

- Partitioning: Use partitions to physically isolate data. If you are building a multi-tenant SaaS application (a common topic in AWS SageMaker News and Azure Machine Learning News), partitioning by tenant ID can drastically speed up search performance.

- Segment Sizing: Automatic merging of small segments helps reduce the computational overhead during search. Keep an eye on compaction policies.

The Competitive Landscape

While discussing Milvus News, it is impossible to ignore the broader context. Qdrant News and Chroma News often highlight the ease of use for local development. However, Milvus’s architecture is specifically designed for the “Day 2” operations—scalability, failover, and rolling upgrades. Similarly, while Databricks News and Snowflake Cortex News discuss integrated vector search within data warehouses, specialized vector databases like Milvus often offer lower latency and more advanced indexing algorithms tailored specifically for high-dimensional data.

Conclusion

The trajectory of Milvus mirrors the broader explosion of the AI industry. As detailed in this analysis of Milvus News, the platform has evolved from a niche tool for computer vision into the backbone of modern Generative AI and RAG architectures. Its open-source nature, combined with robust backing and a rapidly growing community, positions it as a critical skill for MLOps engineers and AI architects.

For organizations looking to move beyond proof-of-concept, the path forward involves not just choosing a model—whether from OpenAI, Meta AI, or Stability AI—but choosing the right long-term memory for those models. Milvus provides that memory layer with the scalability and reliability required for enterprise deployment. As the ecosystem continues to mature, keeping a close watch on updates regarding hybrid search, disk-based indexing, and GPU acceleration will be essential for staying competitive in the AI arms race.