Mistral AI’s Meteoric Rise: A Technical Deep Dive into Europe’s Open-Source Powerhouse

The artificial intelligence landscape is in a constant state of flux, with breakthroughs and strategic shifts announced almost daily. Amid a steady stream of announcements from OpenAI and Google DeepMind, a European player, Mistral AI, has emerged not just as a competitor but as a formidable force championing a different philosophy: high-performance, open-weight models. Recent developments have catapulted Mistral AI into the spotlight, solidifying its position as a critical entity in the global AI ecosystem. This surge in valuation and influence isn’t just a business headline; it’s a testament to the technical prowess and strategic open-source approach that developers and enterprises are eagerly adopting.

This article provides a comprehensive technical exploration of Mistral AI’s models, their practical implementation, and their role within the broader AI stack. We will delve into the code, frameworks, and best practices that empower developers to leverage Mistral’s technology, moving beyond the headlines to understand the “how” and “why” of its success. From local inference with Ollama to complex RAG pipelines using LangChain, we’ll uncover the technical underpinnings that make Mistral a name to watch in the ongoing AI revolution.

Section 1: The Core of Mistral AI: Performance, Efficiency, and Openness

Mistral AI’s core strategy revolves around creating models that challenge the performance of closed-source giants while remaining accessible. Unlike many competitors, Mistral has focused on releasing model weights, not just APIs. This lets developers self-host, fine-tune, and build on the models directly. The philosophy is evident across the lineup, from the nimble Mistral 7B to the powerful Mixtral 8x7B and the specialized Codestral. Licensing differs by model, however: Mistral 7B and Mixtral 8x7B are released under Apache 2.0, while Codestral shipped under a more restrictive non-production license, so check the terms of each model before deploying it commercially.

Understanding the Model Architecture

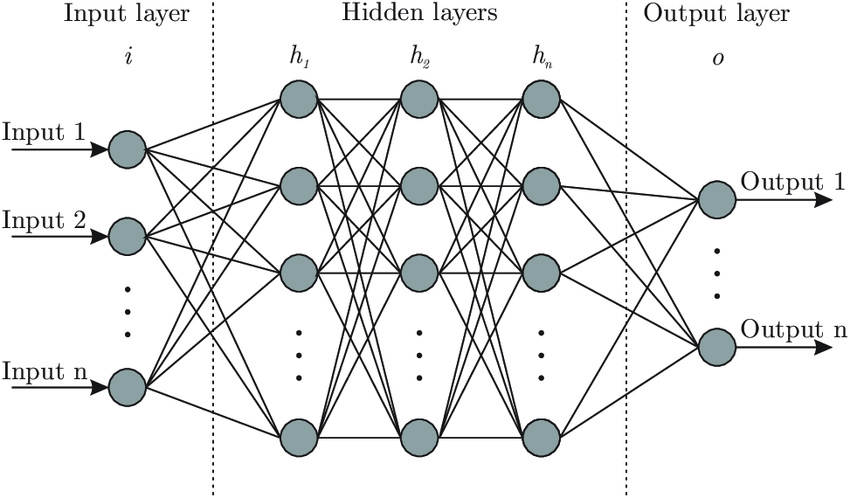

Mistral’s models incorporate several architectural innovations that contribute to their efficiency and performance. Key among these are:

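- Sliding Window Attention (SWA): Introduced with Mistral 7B, SWA restricts each token to attending over a fixed window of preceding tokens (4,096 in the original Mistral 7B release) rather than the entire sequence. Attention cost then grows linearly with sequence length instead of quadratically, and because layers are stacked, information can still propagate beyond a single window. This makes the model efficient for tasks involving long contexts.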

- Mixture of Experts (MoE): The Mixtral 8x7B model is a prime example of a sparse MoE architecture. Instead of activating the entire network for each token, a router sends each token to two of the eight “expert” feed-forward networks in every layer. The result is a model with 46.7B total parameters that uses only about 12.9B of them per token, so its per-token compute is close to that of a 12.9B dense model. Memory is a different matter: all 46.7B parameters must still be loaded, because different tokens are routed to different experts. Sparse MoE is a significant trend across large-scale model research.
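Both ideas in the list above are easy to see in a few lines of plain Python. The following is a minimal sketch, not Mistral’s implementation: `sliding_window_mask`, `attended_pairs`, and `top2_route` are hypothetical helper names, the sequence length and window are toy values, and the router logits are made up for illustration.

```python
import math


def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when query position i may attend to key position j.

    Causal attention allows j <= i; the sliding window additionally
    requires j to be one of the last `window` positions (j > i - window).
    """
    return [[i - window < j <= i for j in range(seq_len)] for i in range(seq_len)]


def attended_pairs(mask):
    """Count the (query, key) pairs that attention has to compute."""
    return sum(sum(row) for row in mask)


def top2_route(router_logits):
    """Pick the two highest-scoring experts and softmax over just those two."""
    ranked = sorted(range(len(router_logits)), key=lambda e: router_logits[e], reverse=True)
    top2 = ranked[:2]
    # Subtract the largest logit before exponentiating for numerical stability.
    exps = [math.exp(router_logits[e] - router_logits[top2[0]]) for e in top2]
    total = sum(exps)
    return {e: x / total for e, x in zip(top2, exps)}


# A window at least as long as the sequence reduces to plain causal attention.
full = attended_pairs(sliding_window_mask(8, 8))
windowed = attended_pairs(sliding_window_mask(8, 3))
print(full, windowed)  # prints 36 21

# Eight experts, toy logits: only two experts receive a non-zero weight.
weights = top2_route([0.1, 2.0, -1.0, 0.5, 1.0, 0.0, -0.3, 0.2])
print(weights)
```

With a fixed window, the number of attended pairs grows by at most `window` per extra token, which is where the linear scaling comes from. In the routing function, the token’s output would be the weighted sum of the two selected experts’ outputs; the other six experts do no work for that token.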

Getting Started: Basic Inference with Hugging Face Transformers

The easiest way to start experimenting with Mistral models is through the Hugging Face Transformers ecosystem. The transformers library provides a high-level API to download and run inference on thousands of models, including Mistral’s open-weight offerings. Here’s a practical example of running inference with Mistral-7B-Instruct-v0.2, a model fine-tuned for following instructions.

    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Use the GPU if available, otherwise fall back to the CPU
    device = "cuda" if torch.cuda.is_available() else "cpu"

    # Half precision roughly halves weight memory on GPU; stay in float32 on CPU
    dtype = torch.float16 if device == "cuda" else torch.float32

    # Load the model and tokenizer from the Hugging Face Hub
    model_id = "mistralai/Mistral-7B-Instruct-v0.2"
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=dtype).to(device)
    tokenizer = AutoTokenizer.from_pretrained(model_id)

    # Define the messages in a chat format
    messages = [
        {"role": "user", "content": "What is the difference between Sliding Window Attention and a standard attention mechanism?"},
    ]

    # Apply the model's chat template and tokenize; return_dict=True also
    # returns the attention mask that generate() expects
    inputs = tokenizer.apply_chat_template(
        messages, add_generation_prompt=True, return_tensors="pt", return_dict=True
    ).to(device)

    # Generate the output (sampling, so results vary between runs)
    generated_ids = model.generate(**inputs, max_new_tokens=256, do_sample=True)

    # Decode the prompt plus the generated continuation back to text
    decoded = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)
    print(decoded[0])

This simple script highlights the accessibility of Mistral’s models. With just a few lines of Python, developers can run a powerful language model on their own hardware, a stark contrast to the API-only access provided by many competitors. This accessibility is a driving factor behind its adoption and the positive reception in the PyTorch and broader developer communities.

Section 2: Practical Deployment and Fine-Tuning

Moving from a simple script to a production-ready application requires robust deployment and customization strategies. Mistral’s open nature provides a wealth of options, from running models on local machines to deploying them on scalable cloud infrastructure like AWS SageMaker or Azure Machine Learning.

Local Deployment with Ollama

For rapid prototyping and local development, Ollama has become a go-to tool. Ollama is a lightweight, extensible framework that simplifies the process of downloading, running, and managing large language models locally. It packages model weights, configuration, and a runtime into a single, easy-to-use package.

To run Mixtral locally using Ollama, you simply use the command line:

    # First, pull the Mixtral model from the Ollama library
    ollama pull mixtral

    # Once downloaded, run the model interactively
    ollama run mixtral

    # You can now chat with the model directly in your terminal
    # >>> Explain the concept of Mixture of Experts in LLMs in simple terms.

Ollama also exposes a local REST API, allowing you to integrate the model into your applications with a simple HTTP request. This ease of use has significantly lowered the barrier to entry for developers who want to build LLM-powered applications, for example behind a FastAPI service.
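As a rough illustration of that HTTP integration, here is a standard-library sketch that posts a prompt to Ollama’s generate endpoint. It assumes Ollama is running on its default local address (port 11434) and that the mixtral model has already been pulled; `build_generate_request` and `generate` are hypothetical helper names, not part of any Ollama client library.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt, model="mixtral"):
    """Build a POST request asking Ollama for a single, non-streamed completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="mixtral"):
    """Send the request and return the generated text from the JSON reply."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]


# Usage (requires a running Ollama server with the model available):
# print(generate("Explain Mixture of Experts in one sentence."))
```

Setting `"stream": False` asks for one JSON object instead of a stream of partial chunks, which keeps the client code short. For interactive UIs, streaming is usually the better choice.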

Fine-Tuning for Custom Use Cases

While pre-trained models are powerful, their true potential is often unlocked through fine-tuning on domain-specific data. Tools like LlamaFactory and the Hugging Face ecosystem (TRL, PEFT) simplify this process. Parameter-Efficient Fine-Tuning (PEFT) methods, such as LoRA (Low-Rank Adaptation), are particularly popular. They allow you to adapt a massive model to a new task by training only a small fraction of its parameters, drastically reducing computational requirements.

A typical fine-tuning workflow might involve:

- Data Preparation: Curating a high-quality dataset in a conversational or instruction format.

- Model Loading: Loading a base Mistral model (e.g., Mistral 7B) in a quantized format (like 4-bit) to save memory.

- PEFT Configuration: Applying a LoRA configuration to the model to insert trainable adapter layers.

- Training: Using a library like transformers.Trainer or a framework like fast.ai to run the training process.

- Merging and Inference: Merging the trained LoRA weights with the base model for efficient deployment.

This process, tracked with MLOps tools such as MLflow or Weights & Biases, allows organizations to create specialized models for tasks like customer support, code generation, or medical report summarization without the prohibitive cost of training from scratch.
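To see why LoRA is so much cheaper than full fine-tuning, and what the merging step in the workflow above actually computes, consider this dependency-free sketch. It is an illustration of the arithmetic rather than the PEFT library’s code: the helper names are hypothetical, and the 4096 x 4096 layer size and rank 8 are assumed example values.

```python
def lora_trainable_params(d_out, d_in, rank):
    """LoRA trains B (d_out x rank) and A (rank x d_in) instead of W (d_out x d_in)."""
    return rank * (d_out + d_in)


def matmul(x, y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def merge_lora(w, b, a, alpha, rank):
    """Return W + (alpha / rank) * B @ A, the single matrix used after merging."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Parameter savings for one assumed 4096 x 4096 projection at rank 8.
full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, rank=8)
print(full, lora, f"{100 * lora / full:.2f}%")  # prints 16777216 65536 0.39%

# Tiny merge example: a 2 x 2 base weight with a rank-1 adapter.
w = [[1.0, 0.0], [0.0, 1.0]]
b = [[1.0], [2.0]]
a = [[0.5, 0.0]]
print(merge_lora(w, b, a, alpha=2.0, rank=1))  # prints [[2.0, 0.0], [2.0, 1.0]]
```

Because the merged matrix has the same shape as the original weight, a merged model runs at the same inference cost as the base model; the adapter only adds overhead if it is kept separate.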

Section 3: Advanced Applications with the Mistral Ecosystem

Mistral models serve as the “brain” for a new generation of sophisticated AI applications. Their integration with orchestration frameworks and high-performance inference servers enables developers to build complex systems like agents and Retrieval-Augmented Generation (RAG) pipelines.

Building a RAG Pipeline with LangChain

RAG is a powerful technique that enhances an LLM’s knowledge by providing it with relevant information retrieved from an external knowledge base. This mitigates hallucinations and allows the model to answer questions based on private or up-to-date data. Frameworks like LangChain and LlamaIndex are widely used for building these systems.

Here’s a conceptual Python example of a simple RAG chain using LangChain with a Mistral model running via Ollama and a local vector store (FAISS here; Chroma would work similarly).

    # Note: LangChain import paths have moved between releases; adjust to your installed version
    from langchain_community.llms import Ollama
    from langchain_community.embeddings import OllamaEmbeddings
    from langchain_community.vectorstores import FAISS
    from langchain.text_splitter import RecursiveCharacterTextSplitter
    from langchain.chains.combine_documents import create_stuff_documents_chain
    from langchain_core.prompts import ChatPromptTemplate
    from langchain.chains import create_retrieval_chain

    # 1. Initialize the LLM and embeddings model from Ollama
    llm = Ollama(model="mistral")
    embeddings = OllamaEmbeddings(model="mistral")

    # 2. Load and process documents (example documents)
    documents = [
        "The new funding round for Mistral AI was led by ASML.",
        "Mistral AI focuses on open-weight, high-performance models.",
        "Mixtral 8x7B uses a Mixture-of-Experts architecture for efficiency.",
    ]

    # Split each text separately so every fact stays its own retrievable chunk
    text_splitter = RecursiveCharacterTextSplitter()
    split_documents = text_splitter.create_documents(documents)

    # 3. Create a vector store from the documents
    vector_store = FAISS.from_documents(split_documents, embedding=embeddings)
    retriever = vector_store.as_retriever()

    # 4. Create a prompt template
    prompt = ChatPromptTemplate.from_template("""Answer the following question based only on the provided context:

    <context>
    {context}
    </context>

    Question: {input}""")

    # 5. Create the RAG chain
    document_chain = create_stuff_documents_chain(llm, prompt)
    retrieval_chain = create_retrieval_chain(retriever, document_chain)

    # 6. Invoke the chain with a question
    response = retrieval_chain.invoke({"input": "Who led the latest funding for Mistral AI?"})
    print(response["answer"])

This example demonstrates how modular frameworks chain together components (a retriever, a vector store such as FAISS, Pinecone, or Milvus, a prompt template, and an LLM) to create a context-aware application. The performance of Mistral’s models makes them a strong choice for the reasoning engine in such pipelines.
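To make the retrieval step less of a black box, here is a dependency-free sketch of what a retriever and a “stuff” prompt do conceptually. The word-count `embed` function is a deliberately crude stand-in for a real embedding model, and `retrieve` and `build_prompt` are hypothetical helper names, not LangChain APIs.

```python
import math
import re
from collections import Counter

DOCS = [
    "The new funding round for Mistral AI was led by ASML.",
    "Mistral AI focuses on open-weight, high-performance models.",
    "Mixtral 8x7B uses a Mixture-of-Experts architecture for efficiency.",
]


def embed(text):
    """Toy embedding: lowercase word counts (a real system uses a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[t] * v[t] for t in u)
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, k=1):
    """Return the k documents most similar to the question."""
    q = embed(question)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(question, context_docs):
    """Stuff the retrieved documents into the prompt ahead of the question."""
    context = "\n".join(context_docs)
    return (
        "Answer the following question based only on the provided context:\n"
        f"<context>\n{context}\n</context>\n"
        f"Question: {question}"
    )


question = "Who led the latest funding for Mistral AI?"
top_docs = retrieve(question, DOCS, k=1)
print(build_prompt(question, top_docs))
```

The resulting string is what would be sent to the LLM. A vector store replaces the linear scan in `retrieve` with an index that stays fast across millions of chunks, and a learned embedding matches on meaning rather than on shared words.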

Optimizing Inference with vLLM

For production environments with high throughput requirements, inference speed is critical. vLLM is a standout open-source library for fast LLM inference. Its PagedAttention technique manages the attention key-value cache in fixed-size blocks, much as an operating system pages virtual memory, which reduces memory waste and fragmentation and lets more requests be batched together. Serving Mistral models with vLLM or NVIDIA’s Triton Inference Server can substantially raise throughput compared with a plain Hugging Face Transformers generation loop, making real-time applications more feasible.
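The memory argument behind paged key-value caching can be illustrated with a toy allocator. This is a conceptual sketch only, not vLLM’s code: `PagedKVCache` is a hypothetical class, the block size of 16 tokens and the 2048-token contiguous buffer used for comparison are assumed values, and no actual tensors are stored.

```python
class PagedKVCache:
    """Toy block allocator: each sequence owns a list of fixed-size physical blocks."""

    def __init__(self, num_blocks, block_size=16):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}  # sequence id -> list of physical block ids
        self.lengths = {}       # sequence id -> number of cached tokens

    def append_token(self, seq_id):
        """Reserve room for one more token, allocating a block only when needed."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length == len(table) * self.block_size:  # all owned blocks are full
            if not self.free_blocks:
                raise MemoryError("no free KV-cache blocks")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def free(self, seq_id):
        """Return a finished sequence's blocks to the pool for reuse."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)

    def reserved_slots(self):
        """Token slots currently reserved across all sequences."""
        return sum(len(t) for t in self.block_tables.values()) * self.block_size


cache = PagedKVCache(num_blocks=64, block_size=16)
for seq_id, n_tokens in {"a": 5, "b": 40, "c": 17}.items():
    for _ in range(n_tokens):
        cache.append_token(seq_id)

paged_slots = cache.reserved_slots()
contiguous_slots = 3 * 2048  # if every request pre-reserved a 2048-token buffer
print(paged_slots, contiguous_slots)  # prints 96 6144
```

The three requests hold 62 tokens in total. Paging reserves 96 slots for them, wasting at most one partially filled block per sequence, whereas pre-reserving a maximum-length buffer per request would tie up 6,144 slots. The freed memory is what allows a server to batch more concurrent requests.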

Section 4: Best Practices, Optimization, and the Competitive Landscape

As Mistral AI solidifies its position, it’s crucial for developers to adopt best practices to maximize the value of its models. The competitive landscape, which includes Meta (with its Llama models) and Anthropic, means that performance and cost-efficiency are paramount.

Key Best Practices and Considerations

- Model Selection: Choose the right model for the job. Mistral 7B is excellent for simple tasks and edge deployments. Mixtral offers top-tier performance for complex reasoning. Codestral is specifically tuned for code-related tasks. Don’t default to the largest model if a smaller one will suffice.

- Quantization: Use quantized models (e.g., in GGUF, AWQ, or GPTQ formats) when memory is the constraint. Quantization reduces the model’s memory footprint and can speed up inference, usually with only a small loss in output quality, enabling deployment on consumer-grade hardware. Toolkits such as OpenVINO pursue the same goal for edge AI deployments.

- Prompt Engineering: The quality of your output is heavily dependent on the quality of your input. Follow the specific chat or instruction template for the model you are using. Experiment with different prompting strategies like few-shot learning to guide the model’s response.

- Monitoring and Evaluation: Continuously evaluate your model’s performance on your specific tasks. Tools like LangSmith or custom evaluation harnesses are essential for tracking regressions and improvements as you iterate on your prompts or fine-tune the model.
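For the model selection and quantization points above, a back-of-the-envelope estimate of weight memory is often enough to rule options in or out. The sketch below counts weights only and ignores the KV cache, activations, and the small per-group overhead that real quantized formats add. The 46.7B figure comes from this article; the 7.2B figure for Mistral 7B is an approximation, and `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


MODELS = {
    "Mistral 7B (approx. 7.2B params)": 7.2e9,
    "Mixtral 8x7B (46.7B params)": 46.7e9,
}

for name, params in MODELS.items():
    for bits in (16, 8, 4):
        print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

By this estimate, Mixtral 8x7B drops from roughly 93 GB at 16-bit to roughly 23 GB at 4-bit, which is the difference between needing several data-center GPUs and fitting on a single high-memory card. Treat the numbers as lower bounds and leave headroom for the KV cache.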

The Strategic Importance of Open-Source

Mistral’s success sends a powerful message about the viability of the open-source model in the generative AI era. By providing open-weight models, they foster a collaborative ecosystem where developers can build, share, and improve upon their work. This contrasts with the “walled garden” approach of some competitors and aligns with the ethos of communities like Hugging Face and Kaggle. This openness not only accelerates innovation but also provides enterprises with greater control, security, and transparency over their AI stack, a major selling point compared to relying solely on managed services like Amazon Bedrock or Azure AI.

Conclusion: The Dawn of a New AI Era

Mistral AI’s recent milestones are more than just financial news; they represent a validation of an open, performance-oriented approach to artificial intelligence. For developers and engineers, this translates into unprecedented access to state-of-the-art models that can be freely customized, optimized, and deployed across a wide range of applications. By combining architectural innovations like MoE with a developer-friendly ecosystem, Mistral has created a powerful alternative to the closed-source establishment.

The practical examples using Hugging Face Transformers, Ollama, and LangChain demonstrate that leveraging this power is within reach for developers of all levels. As the AI landscape continues to evolve, the principles of openness, efficiency, and community-driven innovation championed by Mistral AI will undoubtedly play a pivotal role. The next step for any developer is to move beyond the headlines, pull a model, and start building. The tools are available, the community is active, and the potential for innovation is limitless.