ONNX Runtime Evolves: Navigating Training Deprecation, Python Updates, and New CUDA Dependencies

Introduction

In the rapidly advancing world of machine learning, the Open Neural Network Exchange (ONNX) format has established itself as a lingua franca for model interoperability. By providing a common format, ONNX allows developers to train a model in one framework, like PyTorch or TensorFlow, and deploy it for high-performance inference elsewhere using ONNX Runtime (ORT). This flexibility is crucial for moving models from research to production efficiently. Staying current with ONNX developments is vital for any MLOps professional, as the ecosystem is constantly evolving to enhance performance, stability, and developer experience.

Recently, the ONNX Runtime team has introduced several significant updates that signal a strategic refinement of its mission. These changes include the deprecation of the ONNX Runtime Training packages, the cessation of support for older Python versions, and a new approach to managing CUDA dependencies. While these updates may require adjustments to existing workflows, they ultimately aim to solidify ORT’s position as a premier inference engine. This article provides a comprehensive technical deep-dive into these changes, offering practical code examples, best practices, and actionable insights to help you navigate this transition smoothly and continue leveraging the full power of ONNX Runtime in your projects.

Section 1: The Strategic Sunsetting of ONNX Runtime Training

One of the most significant recent developments is the deprecation of all onnxruntime-training packages. This move marks a strategic decision to refocus ONNX Runtime on its core strength: delivering exceptional, cross-platform inference performance. Understanding the “why” behind this change is key to adapting your MLOps strategy.

What Was ONNX Runtime Training?

ONNX Runtime Training was an ambitious project designed to accelerate the training of models built in popular frameworks. By integrating with frameworks like PyTorch via custom optimizers and modules, it aimed to apply ORT’s performance optimizations not just to inference but also to the computationally intensive training phase. The goal was to provide a unified acceleration path from training to deployment. However, the modern AI training landscape, shaped by rapid and constant releases of PyTorch and TensorFlow, has seen the rise of highly specialized and deeply integrated training acceleration tools such as DeepSpeed, Hugging Face Accelerate, and native solutions like torch.compile.

Refocusing on a Best-in-Class Inference Engine

The decision to deprecate ORT Training reflects a strategic consolidation. Maintaining a competitive training solution requires immense resources to keep pace with the rapid evolution of deep learning frameworks. By stepping back from training, the ONNX Runtime team can dedicate its full attention to what it does best: optimizing and accelerating model inference across a vast array of hardware targets—from cloud GPUs to edge devices. This renewed focus benefits the entire community, as advancements in inference speed and efficiency are critical for deploying cost-effective and responsive AI applications.

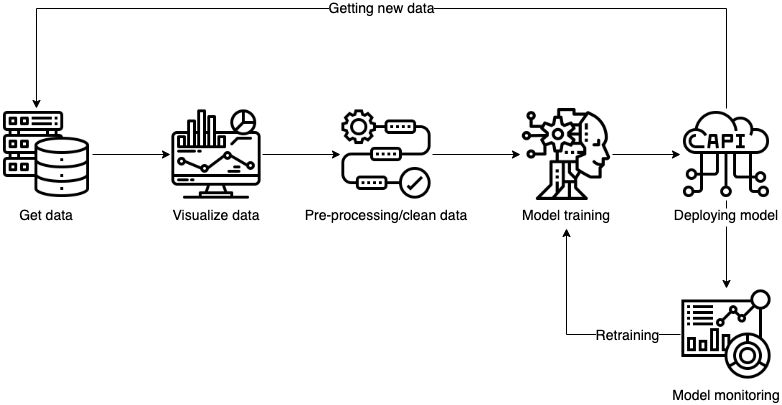

For developers, this means the recommended workflow is now clearer than ever: use native, state-of-the-art tools for training and then export your finalized model to the ONNX format for production inference. This separation of concerns allows you to leverage the best tools for each stage of the model lifecycle.
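A practical first step toward that separation is checking whether an existing environment still carries the deprecated training packages. The sketch below relies only on the standard library’s `importlib.metadata`. The two distribution names it checks (`onnxruntime-training` and `onnxruntime-training-cpu`) are the PyPI names we are aware of, so treat the list as an assumption and extend it as needed.

```python
from importlib import metadata

# PyPI distribution names assumed to correspond to the deprecated training packages.
DEPRECATED_TRAINING_PACKAGES = ("onnxruntime-training", "onnxruntime-training-cpu")


def find_deprecated_training_packages(names=DEPRECATED_TRAINING_PACKAGES):
    """Return {distribution_name: installed_version} for each deprecated package found."""
    found = {}
    for name in names:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # Not installed in this environment; nothing to migrate.
            continue
    return found


if __name__ == "__main__":
    leftovers = find_deprecated_training_packages()
    if leftovers:
        for name, version in leftovers.items():
            print(f"Deprecated package installed: {name}=={version}")
    else:
        print("No deprecated ONNX Runtime Training packages found.")
```

An empty result means the environment is already on the train-natively, export-to-ONNX path.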

Practical Example: The Standard Train-to-Export Workflow

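The core value of ONNX remains unchanged. Here is a standard example of defining a simple model in PyTorch and exporting it to ONNX, which is the primary workflow going forward. The training loop is omitted for brevity; in practice you would train the model before the export step.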

```python
import torch
import torch.nn as nn
import torch.onnx


# 1. Define a simple PyTorch model
class SimpleModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear1 = nn.Linear(784, 128)
        self.relu = nn.ReLU()
        self.linear2 = nn.Linear(128, 10)

    def forward(self, x):
        x = self.linear1(x)
        x = self.relu(x)
        x = self.linear2(x)
        return x


# 2. Instantiate the model (train it here) and prepare for export
model = SimpleModel()
model.eval()  # Set the model to inference mode

# 3. Create a dummy input tensor with the correct shape;
#    the exporter runs the model on it to record the graph
batch_size = 1
dummy_input = torch.randn(batch_size, 784)
onnx_model_path = "simple_model.onnx"

print("Exporting model to ONNX format...")

# 4. Export the model
torch.onnx.export(
    model,                     # model being exported
    dummy_input,               # model input (or a tuple for multiple inputs)
    onnx_model_path,           # where to save the model
    export_params=True,        # store the trained parameter weights inside the model file
    opset_version=14,          # the ONNX opset version to target
    do_constant_folding=True,  # fold constant subexpressions for optimization
    input_names=["input"],     # the model's input names
    output_names=["output"],   # the model's output names
    dynamic_axes={"input": {0: "batch_size"},   # mark the batch dimension as variable
                  "output": {0: "batch_size"}},
)

print(f"Model successfully exported to {onnx_model_path}")

# You would now use `onnxruntime` to run inference with this file.
```

Section 2: Embracing Modernity: Python 3.8 and 3.9 Support Dropped

In a move that aligns with the broader Python ecosystem, ONNX Runtime packages will no longer support Python 3.8 and 3.9. This change affects how development and deployment environments are configured and underscores the importance of keeping your software stack up-to-date.

The Rationale for Dropping Older Versions

Software maintenance is a balancing act. Supporting older versions of a language requires significant effort in testing, bug fixing, and handling backward compatibility. Python 3.8 reached its official end-of-life in October 2024 and no longer receives security updates. By standardizing on Python 3.10 and newer, the ONNX Runtime team can:

- Leverage New Language Features: Newer Python versions introduce performance improvements and syntax enhancements (like the `match` statement) that can simplify the codebase.

- Reduce Maintenance Overhead: Focusing on a smaller set of modern Python versions frees up developer resources to work on new features and performance optimizations for ORT.

- Enhance Security: Encouraging users to migrate to actively supported Python versions improves the security posture of the entire ecosystem.

This trend is common across major data science and ML libraries. Projects such as Ray and Apache Spark MLlib have likewise been encouraging users to adopt modern Python releases.

Impact and Migration Strategy

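For teams with CI/CD pipelines or production environments running on Python 3.8 or 3.9, this change requires proactive planning. The primary impact is on dependency resolution, because newer onnxruntime releases no longer publish wheels for these interpreters. Pinning a new version in an older environment fails with no matching distribution. An unpinned install quietly resolves to the last compatible release (ORT 1.19.2).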
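Depending on how versions are specified, pip may resolve to the newest release that still ships wheels for the running interpreter instead of raising an error. It is therefore worth asserting the installed version explicitly in CI. The following is a sketch under two assumptions:

- The minimum `(1, 20)` matches the release discussed here.
- The deliberately naive parser only compares the leading numeric components. It is not a full PEP 440 implementation.

```python
import re
from importlib import metadata

# Assumed minimum: the first ORT release discussed here without Python 3.8/3.9 wheels.
MIN_ORT_VERSION = (1, 20)


def leading_version_tuple(version: str) -> tuple:
    """Parse the leading numeric release segment, e.g. '1.20.1' -> (1, 20, 1).

    Suffixes such as '.dev...' or '+cu12' are ignored; this is not a full PEP 440 parser.
    """
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"Unrecognized version string: {version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def check_min_version(distribution: str, minimum: tuple = MIN_ORT_VERSION) -> str:
    """Return the installed version, or raise RuntimeError if missing or too old."""
    try:
        installed = metadata.version(distribution)
    except metadata.PackageNotFoundError:
        raise RuntimeError(f"{distribution} is not installed") from None
    if leading_version_tuple(installed) < minimum:
        raise RuntimeError(
            f"{distribution} {installed} is older than "
            f"{'.'.join(map(str, minimum))}; pip may have fallen back to an "
            "older release for this Python version."
        )
    return installed
```

Pass the distribution name you actually install: `check_min_version("onnxruntime")` for the CPU package, or `check_min_version("onnxruntime-gpu")` for the CUDA package.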

Best Practice: Environment Validation

It’s a good practice to include a version check in your application’s entry point or setup scripts to provide clear error messages and prevent runtime failures.

```python
import sys

# Define the minimum required Python version
MIN_PYTHON_VERSION = (3, 10)


def check_environment():
    """Checks for compatible Python and ONNX Runtime versions."""
    print("--- Environment Sanity Check ---")

    # 1. Check the Python version before importing onnxruntime,
    #    so an unsupported interpreter gets a clear message
    current_python_version = sys.version_info
    print(f"Current Python version: {current_python_version.major}.{current_python_version.minor}")
    if current_python_version < MIN_PYTHON_VERSION:
        raise RuntimeError(
            f"Unsupported Python version. ONNX Runtime now requires Python "
            f"{MIN_PYTHON_VERSION[0]}.{MIN_PYTHON_VERSION[1]} or newer. "
            "Please upgrade your environment."
        )
    print("Python version check: PASSED")

    # 2. Report the installed ONNX Runtime version
    import onnxruntime as ort
    print(f"ONNX Runtime version: {ort.__version__}")
    print("------------------------------\n")


if __name__ == "__main__":
    try:
        check_environment()
        print("Environment is configured correctly for the latest ONNX Runtime.")
        # Your application logic would start here
    except (RuntimeError, ImportError) as e:
        print(f"Error: {e}", file=sys.stderr)
        sys.exit(1)
```

To migrate, teams should prioritize updating their base Docker images and virtual environments (using `conda` or `venv`) to Python 3.10 or 3.11. This is also a good opportunity to audit all dependencies and ensure compatibility. That audit is a core tenet of robust MLOps on platforms such as Azure Machine Learning and AWS SageMaker.

Section 3: Simplified GPU Deployments: New CUDA Dependency Handling

For developers leveraging NVIDIA GPUs, managing CUDA dependencies has often been a source of complexity. The latest ONNX Runtime releases (1.20 and beyond) introduce a significant change to the CUDA-enabled packages (onnxruntime-gpu) by bundling key dependencies, simplifying setup and improving reliability.

The Old Challenge: The “DLL Hell” of CUDA

Previously, using onnxruntime-gpu required users to have a compatible version of the NVIDIA CUDA Toolkit installed on their system. More specifically, ORT relied on shared libraries like cuDNN and cuBLAS being present in the system’s library path. The TensorRT execution provider also required TensorRT itself. Mismatches between the versions an ORT build expected and the locally installed CUDA libraries were a common cause of cryptic runtime errors. This created friction, especially in complex environments or when replicating a setup across machines. It is a familiar pain point across the NVIDIA ecosystem, including for users of tools like TensorRT.
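When diagnosing this kind of mismatch, a useful first question is which CUDA libraries the dynamic loader can actually resolve. The sketch below simply tries to load each library with `ctypes`. The sonames it lists (`libcudart.so.12`, `libcublas.so.12`, `libcudnn.so.9`) are Linux names assumed for a CUDA 12 / cuDNN 9 stack; on Windows you would substitute the corresponding DLL names.

```python
import ctypes

# Assumed Linux sonames for a CUDA 12 / cuDNN 9 stack; adjust for your platform and versions.
CANDIDATE_LIBRARIES = ("libcudart.so.12", "libcublas.so.12", "libcudnn.so.9")


def probe_libraries(names=CANDIDATE_LIBRARIES):
    """Return {library_name: True/False} depending on whether the loader can open it."""
    results = {}
    for name in names:
        try:
            ctypes.CDLL(name)
            results[name] = True
        except OSError:
            # Not on the loader's search path, or present but failed to load.
            results[name] = False
    return results


if __name__ == "__main__":
    for name, ok in probe_libraries().items():
        print(f"{name}: {'found' if ok else 'NOT found'}")
```

A successful load only shows that the loader can find a library with that name. It does not prove the version matches what a given ORT build expects.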

The New Approach: Bundled Dependencies

Starting with ORT 1.20, the onnxruntime-gpu package now includes its own versions of essential CUDA libraries like cuDNN 9.1 and cuBLAS 12.3. When you `pip install onnxruntime-gpu`, these libraries are installed alongside the main ORT library within your Python environment. This change offers several key advantages:

- Reduced Setup Complexity: You no longer need to perform a system-wide installation of a specific cuDNN version. A compatible NVIDIA driver and the base CUDA toolkit are still required, but the finer-grained library management is handled by the package manager.

- Guaranteed Compatibility: The bundled libraries are the exact versions that the ORT build was tested against, eliminating a major source of version-mismatch errors.

- Improved Portability: Environments become more self-contained and easier to replicate, as a `requirements.txt` file now captures these critical dependencies implicitly.

While this approach increases the package size, the reliability and ease-of-use benefits are a significant win for most users. It aligns ORT with the packaging practices of other major frameworks like PyTorch.

Example: Verifying a GPU Inference Session

With the new packaging, verifying your GPU setup is straightforward. After installing `onnxruntime-gpu`, you can run a simple check to ensure ORT can access the GPU. The code below loads the model we exported earlier and runs inference using the CUDAExecutionProvider.

```python
import numpy as np
import onnxruntime as ort


def verify_gpu_inference(model_path: str):
    """Loads an ONNX model and runs inference on the GPU."""
    print(f"Attempting to run inference on: {model_path}")

    # Check that this onnxruntime build includes the CUDA Execution Provider
    available_providers = ort.get_available_providers()
    print(f"Available execution providers: {available_providers}")
    if "CUDAExecutionProvider" not in available_providers:
        raise RuntimeError("CUDAExecutionProvider is not available. "
                           "Please ensure you have a compatible NVIDIA driver and CUDA toolkit installed, "
                           "and are using the 'onnxruntime-gpu' package.")

    # Create an inference session with the CUDA Execution Provider
    session = ort.InferenceSession(model_path, providers=["CUDAExecutionProvider"])

    # "Available" only means the provider was compiled in; if its CUDA libraries
    # fail to load, ONNX Runtime falls back to CPU, so confirm what is active
    active_providers = session.get_providers()
    print(f"Active execution providers: {active_providers}")
    if "CUDAExecutionProvider" not in active_providers:
        raise RuntimeError("The CUDA Execution Provider failed to initialize; "
                           "check the ONNX Runtime log output for missing libraries.")

    # Get model input details
    input_name = session.get_inputs()[0].name
    input_shape = session.get_inputs()[0].shape

    # The dynamic axis 'batch_size' is reported as a string, so replace it with a concrete value
    input_shape[0] = 1  # Set batch size to 1 for this example
    print(f"Model Input Name: {input_name}")
    print(f"Model Input Shape: {input_shape}")

    # Create a random input tensor
    dummy_input = np.random.randn(*input_shape).astype(np.float32)

    # Run inference
    results = session.run(None, {input_name: dummy_input})
    print("Inference on GPU executed successfully!")
    print(f"Output shape: {results[0].shape}")


if __name__ == "__main__":
    model_file = "simple_model.onnx"
    # Make sure you have run the export script from Section 1 first
    try:
        verify_gpu_inference(model_file)
    except Exception as e:
        print(f"An error occurred: {e}")
```

If this script runs without errors, it confirms that ONNX Runtime has successfully loaded its CUDA dependencies and communicated with the NVIDIA driver to perform the computation on the GPU.
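The verification script sets only the first (batch) dimension, which is enough for this model. For models with several dynamic axes, a small helper can replace every symbolic dimension. This sketch assumes that ONNX Runtime’s Python API reports symbolic dimensions as strings (such as `'batch_size'`) or as `None`. The default value of 1 is an arbitrary choice.

```python
def concretize_shape(shape, default=1, overrides=None):
    """Replace symbolic dimensions (str or None) with concrete integers.

    `overrides` maps a symbolic dimension name (e.g. 'batch_size') to a value;
    any other symbolic or unknown dimension becomes `default`.
    """
    overrides = overrides or {}
    concrete = []
    for dim in shape:
        if isinstance(dim, int):
            concrete.append(dim)
        elif isinstance(dim, str) and dim in overrides:
            concrete.append(overrides[dim])
        else:
            # Unnamed (None) or unlisted symbolic dimension.
            concrete.append(default)
    return concrete
```

For the exported model, `concretize_shape(session.get_inputs()[0].shape, overrides={"batch_size": 8})` would give `[8, 784]`.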

Section 4: Best Practices and Preparing for the Future

Adapting to these changes in ONNX Runtime requires a proactive and disciplined approach to environment and dependency management. Here are some consolidated best practices to ensure a smooth transition.

1. Pin Your Dependencies

Always pin the exact versions of your dependencies in a `requirements.txt` (for pip) or `environment.yml` (for conda) file. This is the single most effective way to create reproducible environments and prevent unexpected breakages when new versions of libraries are released. Avoid open-ended version specifiers like `onnxruntime-gpu>=1.19` in production code.
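One way to produce exact pins is to record the versions installed in a known-good environment. The sketch below does this with `importlib.metadata` for an explicit list of packages. The package names in the usage section are only examples, and `pip freeze` remains the usual tool when you want to capture everything.

```python
from importlib import metadata


def pin_lines(packages, get_version=metadata.version):
    """Return 'name==version' lines for installed packages, skipping missing ones.

    `get_version` is injectable so the function can be tested without the packages installed.
    """
    lines = []
    for name in packages:
        try:
            lines.append(f"{name}=={get_version(name)}")
        except metadata.PackageNotFoundError:
            print(f"warning: {name} is not installed; not pinned")
    return lines


if __name__ == "__main__":
    # Example package list; adjust to your project.
    print("\n".join(pin_lines(["onnxruntime-gpu", "numpy"])))
```

Redirecting the output into `requirements.txt` on the validated machine gives other environments the same exact versions.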

2. Modernize Your CI/CD and Deployment Environments

Audit your CI/CD pipelines, Dockerfiles, and production virtual machines. Update the base images and Python installations to a supported version (3.10+). This not only ensures compatibility with the latest ONNX Runtime but also brings the performance and security benefits of newer Python releases. This kind of proactive maintenance is a cornerstone of modern MLOps, whether you track experiments with tools like MLflow or deploy on platforms like Vertex AI.

3. Embrace the Inference-First Mindset

With the deprecation of ORT Training, double down on the core ONNX philosophy: train in a specialized framework and deploy with a specialized inference engine. For training, explore libraries such as Hugging Face Transformers or frameworks like fast.ai. For inference, continue to leverage ONNX Runtime, but also explore other high-performance options like NVIDIA’s TensorRT or Intel’s OpenVINO. Both can consume ONNX models as a starting point for further optimization.
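Within ONNX Runtime itself, TensorRT and OpenVINO are also available as execution providers. A deployment can therefore prefer the most specialized backend present and fall back gracefully. The sketch below orders providers by an assumed preference list. The provider strings are the names ONNX Runtime reports, but which of them appear depends on the package you installed.

```python
# Assumed preference order: most specialized first, CPU as the universal fallback.
PREFERRED_PROVIDERS = (
    "TensorrtExecutionProvider",
    "CUDAExecutionProvider",
    "OpenVINOExecutionProvider",
    "CPUExecutionProvider",
)


def select_providers(available, preferred=PREFERRED_PROVIDERS):
    """Return the preferred providers that are available, in preference order.

    CPUExecutionProvider is always appended last so a session can still be created.
    """
    selected = [p for p in preferred if p in available and p != "CPUExecutionProvider"]
    selected.append("CPUExecutionProvider")
    return selected
```

You would pass the result to a session, for example `ort.InferenceSession(path, providers=select_providers(ort.get_available_providers()))`. A provider reported as available can still fail to initialize, so it is worth confirming the active list with `session.get_providers()`.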

4. Stay Informed

The AI ecosystem moves incredibly fast. Regularly check the official ONNX Runtime GitHub releases page and read the release notes; they are the most reliable source of information on breaking changes, new features, and performance improvements. Broader community sources such as Kaggle, along with platform updates from Azure AI and Amazon Bedrock, can also provide useful context on industry trends.

Conclusion

The latest updates to ONNX Runtime represent a significant and positive evolution. The deprecation of the training packages sharpens the project’s focus on its primary mission: to be the fastest and most versatile inference engine available. The move to support modern Python versions aligns ORT with industry best practices for security and maintainability. Finally, the new CUDA dependency bundling for GPU packages drastically simplifies setup and enhances reliability, removing a common pain point for developers. While these changes require adaptation, they ultimately lead to a more robust, stable, and powerful tool for the entire machine learning community.

Your next steps should be to audit your existing projects, plan the migration to a supported Python version, and update your dependencies. By embracing these changes, you can ensure your MLOps pipelines are built on a solid, modern foundation, ready to take advantage of future innovations in the exciting world of high-performance AI inference.