Powering Self-Improving AI: A Deep Dive into Generative Feedback Loops with Weaviate and LLMs

The advent of Retrieval-Augmented Generation (RAG) has revolutionized how we build applications with Large Language Models (LLMs). By grounding models in external, verifiable knowledge, RAG systems mitigate hallucinations and provide more accurate, context-aware responses. However, most RAG implementations are static; their knowledge base is fixed at a point in time, quickly becoming stale and unable to adapt to new information or user needs. This is where the next evolutionary step in AI systems emerges: the Generative Feedback Loop.

A Generative Feedback Loop transforms a static RAG system into a dynamic, self-improving one. It creates a cyclical process in which the system learns from its interactions, captures new insights, and integrates validated knowledge back into its memory, so that its answers can improve as feedback accumulates. This article provides a technical guide to understanding, designing, and implementing these loops. We will explore the core components, walk through practical code examples using Weaviate and the OpenAI API, and discuss advanced strategies for building robust, continuously learning systems. The pattern is relevant to anyone building RAG applications whose knowledge base needs to stay current.

The Anatomy of a Generative Feedback Loop

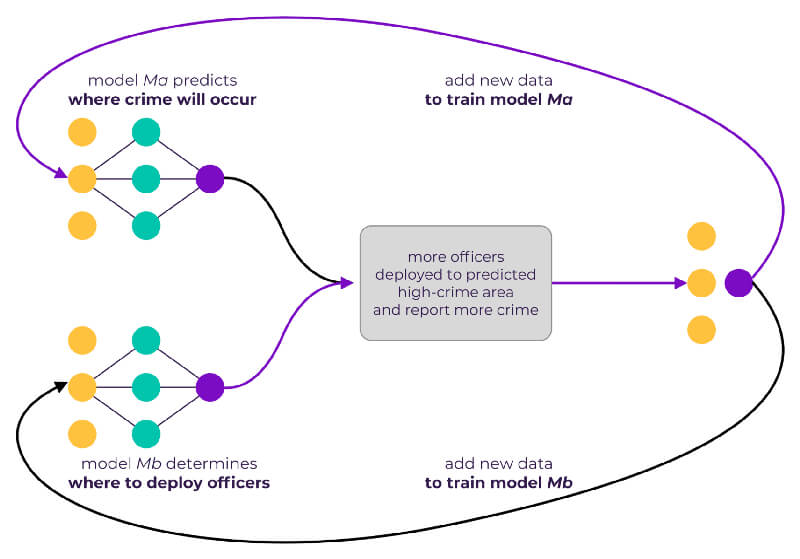

Before diving into code, it’s essential to understand the conceptual framework of a generative feedback loop. At its core, it’s a four-stage process that enables a system to learn from its own output. It moves beyond a simple request-response pattern to a continuous cycle of improvement.

What is a Generative Feedback Loop?

A Generative Feedback Loop is a system architecture where the output of a generative model is captured, evaluated, and then used to refine the system’s underlying knowledge base or operational parameters. In the context of a RAG pipeline, this means the answers generated for users are not the end of the process. Instead, they are the beginning of a learning opportunity. When a user finds an answer helpful, the system can analyze why it was successful and extract the core information to reinforce its knowledge. This stands in stark contrast to traditional systems that rely on periodic, manual updates, which are often slow and resource-intensive.

Key Components of the Loop

The loop consists of four distinct but interconnected stages:

- Generate: The process begins when a user submits a query. The RAG system retrieves relevant context from its knowledge base (a vector database like Weaviate) and uses an LLM (from providers like OpenAI, Cohere, or Mistral AI) to generate a response.

- Capture: The system must capture feedback on the generated response. This can be explicit (e.g., thumbs up/down buttons, star ratings, corrective text) or implicit (e.g., user copies the answer, ends the session, or asks a clarifying question).

- Synthesize: The captured feedback is analyzed. For positive feedback, a separate process—often involving another LLM call—distills the valuable information from the query-answer pair into a new, atomic piece of knowledge.

- Integrate: The newly synthesized knowledge is vectorized and added back into the vector database. This “closes the loop,” making the new information available for retrieval in future queries, thus improving the system’s overall performance.
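The four stages above can be sketched as a small orchestration function. This is a minimal sketch rather than a definitive design: `Interaction`, `run_feedback_loop`, and the four callables are hypothetical names introduced for illustration, and the lambdas at the bottom stand in for real Weaviate and LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Interaction:
    """One pass through the loop (hypothetical container, not a Weaviate type)."""
    query: str
    answer: str
    feedback: Optional[str] = None       # "positive", "negative", or None
    new_knowledge: Optional[str] = None  # set only when something was integrated


def run_feedback_loop(
    query: str,
    generate: Callable[[str], str],
    capture: Callable[[str, str], Optional[str]],
    synthesize: Callable[[str, str], Optional[str]],
    integrate: Callable[[str], None],
) -> Interaction:
    # 1. Generate: retrieve context and produce an answer
    answer = generate(query)
    interaction = Interaction(query=query, answer=answer)

    # 2. Capture: collect explicit or implicit feedback
    interaction.feedback = capture(query, answer)

    # 3. Synthesize: only positively rated answers become candidate knowledge
    if interaction.feedback == "positive":
        chunk = synthesize(query, answer)
        # 4. Integrate: write the chunk back to the knowledge base
        if chunk:
            integrate(chunk)
            interaction.new_knowledge = chunk
    return interaction


# Stand-ins for the real components, for illustration only
knowledge_base: list[str] = []
result = run_feedback_loop(
    query="What is hybrid search?",
    generate=lambda q: "Hybrid search combines BM25 and vector search.",
    capture=lambda q, a: "positive",
    synthesize=lambda q, a: a,
    integrate=knowledge_base.append,
)
print(result.new_knowledge)
print(knowledge_base)
```

Passing the stages in as callables keeps each one replaceable, so the sections that follow can fill them in one at a time.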

To set a baseline, let’s look at a standard RAG query using the Weaviate Python client. This is the “Generate” step that kicks off the entire process.

import os

import weaviate

# Connect to a local Weaviate instance (e.g., one running via Docker).
# The OpenAI key is forwarded so Weaviate's generative module can call the LLM.
client = weaviate.connect_to_local(
    headers={"X-OpenAI-Api-Key": os.getenv("OPENAI_API_KEY")}
)

try:
    # Assume we have a collection named "Article" with a generative module configured
    articles = client.collections.get("Article")

    # The "Generate" step: perform a RAG query
    query = "What are the benefits of vector databases for AI applications?"
    response = articles.generate.near_text(
        query=query,
        limit=3,
        # grouped_task sends all retrieved objects to the LLM in a single prompt
        grouped_task=f"Using the retrieved articles, answer the question: {query}",
    )

    # Print the generated answer
    if response.generated:
        print(f"Query: {query}\n")
        print(f"Generated Answer: {response.generated}")
finally:
    # Always release the connection, even if the query fails
    client.close()

This code performs a basic RAG query. The feedback loop adds the critical machinery to learn from the quality of this response.generated output.

Building the Loop: A Step-by-Step Implementation Guide

With the core concepts understood, we can now build the mechanism that captures and processes feedback. This section focuses on the “Capture” and “Synthesize” stages, turning a user interaction into a valuable piece of new knowledge.

Step 1: Capturing and Structuring Feedback

The first step is to design a mechanism for capturing user feedback. While implicit signals are powerful, explicit feedback is easier to start with. Let’s imagine a simple API endpoint in a web application (built with Flask or FastAPI) where a user can submit their feedback.

The feedback payload should contain enough context to be useful, including:

- The original user query.

- The LLM-generated response.

- A feedback score (e.g., "positive" or "negative").

- A unique session or interaction ID for traceability.

This structured data is the raw input for the next stage. For complex conversational agents, frameworks such as LangChain or LlamaIndex often provide built-in mechanisms for managing conversation history, which can be leveraged for this purpose.
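The payload described above could be modeled as a small validated data class. This is a sketch under assumptions: `FeedbackPayload`, its field names, and the `created_at` timestamp are illustrative choices, not part of any framework's API.

```python
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
import uuid

VALID_SCORES = {"positive", "negative"}


@dataclass
class FeedbackPayload:
    """Explicit feedback on one generated answer (field names are illustrative)."""
    query: str
    response: str
    score: str
    # Generated automatically when the caller does not supply one
    interaction_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self) -> None:
        # Reject payloads the downstream stages cannot use
        if self.score not in VALID_SCORES:
            raise ValueError(f"score must be one of {sorted(VALID_SCORES)}")
        if not self.query.strip() or not self.response.strip():
            raise ValueError("query and response must be non-empty")


payload = FeedbackPayload(
    query="How does Weaviate's hybrid search work?",
    response="It combines BM25 keyword search with vector search.",
    score="positive",
)
print(asdict(payload))
```

In a Flask or FastAPI endpoint, the request body would be parsed into this structure before being stored or queued, so that malformed feedback is rejected at the edge.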

Step 2: Synthesizing New Knowledge with an Evaluator LLM

When positive feedback is received, we can’t just dump the entire conversation back into our database. It’s noisy and lacks context. Instead, we use another LLM as a “synthesizer” to extract a clean, factual statement. This involves careful prompt engineering.

The synthesizer prompt instructs an LLM to act as a knowledge curator. It receives the successful query-answer pair and is tasked with creating a concise, standalone piece of information that can be added to the knowledge base. The example below uses an OpenAI model, but the same prompt pattern applies to models from other providers such as Anthropic or Google DeepMind.

Here is a Python function that demonstrates this synthesis step.

import os

from openai import OpenAI

# Initialize the OpenAI client
# Best practice: use environment variables for API keys
client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))


def synthesize_knowledge_from_feedback(query: str, answer: str) -> str | None:
    """
    Uses an LLM to synthesize a new knowledge chunk from a successful query-answer pair.
    Returns None if no fact could be extracted or the API call failed.
    """
    system_prompt = """
    You are a knowledge synthesis agent. Your task is to analyze a user query and the successful answer
    provided by an AI assistant. From this pair, extract a single, concise, and factual statement that
    can be added to a knowledge base. The statement should be a self-contained piece of information.
    If no clear, new, and verifiable fact can be extracted, return an empty string.
    """
    user_prompt = f"""
    Original Query: "{query}"
    Successful Answer: "{answer}"
    Synthesized Knowledge Chunk:
    """
    try:
        response = client.chat.completions.create(
            model="gpt-4-turbo",  # Or another powerful model
            messages=[
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_prompt},
            ],
            temperature=0.0,  # We want factual, near-deterministic output
            max_tokens=150,
        )
        # message.content can be None, so fall back to an empty string before stripping
        synthesized_text = (response.choices[0].message.content or "").strip()
        if synthesized_text:
            return synthesized_text
        return None
    except Exception as e:
        print(f"An error occurred during knowledge synthesis: {e}")
        return None


# Example Usage:
user_query = "How does Weaviate's hybrid search work?"
successful_answer = "Weaviate's hybrid search combines keyword-based (BM25) and vector-based semantic search to deliver more relevant results. It balances the lexical matching of keywords with the contextual understanding of semantic search, which is particularly effective for queries with specific terms."

new_knowledge = synthesize_knowledge_from_feedback(user_query, successful_answer)
if new_knowledge:
    print(f"New Knowledge to Ingest: {new_knowledge}")
    # Example output (exact wording will vary between runs and models):
    # "Weaviate's hybrid search enhances search relevance by combining keyword-based (BM25) and vector-based semantic search methods."

This function takes the raw material of a good interaction and refines it into a high-quality “golden” record, ready for the final step of the loop.

Closing the Loop: Updating the Vector Knowledge Base

The final and most critical stage is “Integration.” The newly synthesized knowledge must be embedded and stored back into our vector database, making it instantly available for future RAG queries. This is where the power of modern vector databases like Weaviate, Milvus, or Pinecone truly shines.

The Role of the Vector Database

Vector databases are designed for dynamic data management. Unlike static file-based indexes, they allow for the continuous, real-time insertion of new data objects without requiring a full re-index of the entire dataset. Weaviate, for instance, allows us to add a new object with its vector representation and associated metadata seamlessly. This capability is the technical backbone that makes the feedback loop feasible. These databases can also benefit from hardware acceleration, which helps keep real-time updates practical at large scale.

The Integration Process

The integration process is straightforward:

- Take the synthesized text string from the previous step.

- Use an embedding model (e.g., an open-source Sentence Transformers model or an API like OpenAI’s) to convert this text into a vector embedding.

- Create a new data object in Weaviate, including the text, its vector, and useful metadata.

Metadata is crucial for observability and maintenance. We should log the source of the new knowledge (e.g., ‘synthesized_from_feedback’), a timestamp, and perhaps the interaction ID that generated it. This allows us to trace, audit, or even roll back changes if needed.
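The metadata described above can be assembled before anything touches the database. The sketch below is one possible shape, under stated assumptions: `build_knowledge_record`, the `interaction_id` property, and the namespace URL are hypothetical. The deterministic UUID is an added design choice, not something the loop requires: it makes identical text map to the same ID, which helps when tracing or rolling back entries.

```python
from datetime import datetime, timezone
from typing import Optional
import uuid

# Hypothetical namespace so that the same text always maps to the same UUID
KNOWLEDGE_NAMESPACE = uuid.uuid5(uuid.NAMESPACE_URL, "example.com/synthesized-knowledge")


def build_knowledge_record(
    text: str, interaction_id: str, now: Optional[datetime] = None
) -> dict:
    """Return a deterministic UUID plus the properties to store with the text."""
    # Collapse whitespace so trivially different strings are treated as equal
    normalized = " ".join(text.split())
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return {
        # Deterministic ID: re-ingesting identical text targets the same object
        "uuid": str(uuid.uuid5(KNOWLEDGE_NAMESPACE, normalized.lower())),
        "properties": {
            "content": normalized,
            "source": "synthesized_from_feedback",
            "ingestion_timestamp": timestamp,
            "interaction_id": interaction_id,
        },
    }


record = build_knowledge_record(
    "Weaviate's hybrid search combines  BM25 and vector search.",
    interaction_id="interaction-123",
)
print(record["uuid"])
print(record["properties"]["content"])
```

With the Weaviate Python client, the `properties` dictionary would be what gets passed to `data.insert`. Supplying the ID through that call's `uuid` argument should make a repeated insert of the same text fail instead of creating a duplicate, though that behavior is worth confirming against the client version in use.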

The following code shows how to add the synthesized knowledge into a Weaviate collection.

import os
from datetime import datetime, timezone

import weaviate


# This function assumes the collection is configured with a text2vec module
# like text2vec-openai, which will automatically vectorize the 'content' property.
def add_synthesized_knowledge_to_weaviate(knowledge_text: str, collection_name: str):
    """
    Adds a new piece of synthesized knowledge to a Weaviate collection.
    Returns the new object's UUID, or None if ingestion failed.
    """
    client = None
    try:
        # Connect inside the function for simplicity; a long-running service
        # would normally pass a shared client in as an argument instead.
        client = weaviate.connect_to_local(
            headers={"X-OpenAI-Api-Key": os.getenv("OPENAI_API_KEY")}
        )
        articles = client.collections.get(collection_name)

        # Weaviate will automatically create the vector using the configured module
        new_object_uuid = articles.data.insert({
            "content": knowledge_text,
            "source": "synthesized_from_feedback",
            "ingestion_timestamp": datetime.now(timezone.utc).isoformat(),
        })
        print(f"Successfully ingested new knowledge with UUID: {new_object_uuid}")
        return new_object_uuid
    except Exception as e:
        print(f"Failed to ingest knowledge into Weaviate: {e}")
        return None
    finally:
        # client is still None if the connection itself failed
        if client is not None:
            client.close()


# Example Usage:
# This is the output from our previous synthesis function
new_knowledge_chunk = "Weaviate's hybrid search enhances search relevance by combining keyword-based (BM25) and vector-based semantic search methods."

# Name of our existing collection in Weaviate
collection = "Article"

# Close the loop by ingesting the new knowledge
add_synthesized_knowledge_to_weaviate(new_knowledge_chunk, collection)

With this final step, the loop is complete. The next time a user asks a similar question, the RAG system can now retrieve this new, highly relevant, and validated piece of information, leading to a better answer.

Advanced Strategies and Best Practices

Implementing a basic feedback loop is a significant step, but building a production-ready, robust system requires further consideration. Here are some advanced techniques and best practices to keep in mind.

Handling Negative Feedback

So far, we’ve focused on positive feedback. But what about when the system is wrong? Negative feedback is an equally valuable signal. Instead of adding knowledge, it can be used to:

- Flag Content for Review: The documents retrieved for the incorrect answer can be flagged for human review. Perhaps they are outdated or misleading.

- Down-weight Sources: If a particular data source consistently contributes to poor answers, its relevance score could be programmatically down-weighted in future retrievals.

- Create Negative Examples: In more advanced systems, the incorrect query-answer pair can be stored as a negative example for fine-tuning the retrieval or generation models, teaching them what not to do.
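The down-weighting idea from the list above can be sketched with a per-source feedback counter. This is one simple scheme among many, under stated assumptions: `SourceWeights` is a hypothetical helper, the weight is a smoothed share of positive feedback, and the re-ranking happens in application code after retrieval rather than inside the vector database.

```python
from collections import defaultdict


class SourceWeights:
    """Tracks feedback per source and turns it into a multiplicative weight."""

    def __init__(self, prior_positive: float = 1.0, prior_negative: float = 1.0):
        # Priors keep a source with little feedback close to a neutral weight
        self.prior_positive = prior_positive
        self.prior_negative = prior_negative
        self.positive = defaultdict(int)
        self.negative = defaultdict(int)

    def record(self, source: str, is_positive: bool) -> None:
        if is_positive:
            self.positive[source] += 1
        else:
            self.negative[source] += 1

    def weight(self, source: str) -> float:
        # Smoothed share of positive feedback, strictly between 0 and 1
        pos = self.positive[source] + self.prior_positive
        neg = self.negative[source] + self.prior_negative
        return pos / (pos + neg)

    def rerank(self, hits: list[tuple[str, float]]) -> list[tuple[str, float]]:
        """hits are (source, similarity) pairs; higher similarity is better."""
        adjusted = [(src, score * self.weight(src)) for src, score in hits]
        return sorted(adjusted, key=lambda pair: pair[1], reverse=True)


weights = SourceWeights()
for _ in range(4):
    weights.record("old_wiki", is_positive=False)
weights.record("product_docs", is_positive=True)

# old_wiki starts with the higher similarity but has a poor feedback history
ranked = weights.rerank([("old_wiki", 0.90), ("product_docs", 0.80)])
print(ranked)
```

Because of the priors, a source with no feedback gets a weight of 0.5, so a handful of negative ratings lowers its rank gradually instead of removing it outright.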

Human-in-the-Loop (HITL) Validation

Fully automating the knowledge integration process can be risky, as a faulty synthesizer could pollute the knowledge base with incorrect information. For mission-critical applications, a Human-in-the-Loop (HITL) workflow is a best practice. Instead of directly ingesting the synthesized knowledge, it’s added to a moderation queue. A human expert then validates the new information before it’s pushed into the main Weaviate collection. Tools like LangSmith make it easier to trace and debug these complex, multi-step chains, which is invaluable for HITL workflows.
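A moderation queue of this kind can be sketched in a few lines. The version below is an in-memory illustration only: `ModerationQueue` and `Candidate` are hypothetical names, and a real deployment would persist the queue and call the Weaviate ingestion function where this sketch appends to a list.

```python
from dataclasses import dataclass
from typing import Callable
import itertools


@dataclass
class Candidate:
    id: int
    text: str
    status: str = "pending"  # "pending", "approved", or "rejected"


class ModerationQueue:
    def __init__(self, ingest: Callable[[str], None]):
        self._ingest = ingest  # called only for approved candidates
        self._items: dict[int, Candidate] = {}
        self._ids = itertools.count(1)

    def submit(self, text: str) -> int:
        candidate = Candidate(id=next(self._ids), text=text)
        self._items[candidate.id] = candidate
        return candidate.id

    def pending(self) -> list[Candidate]:
        return [c for c in self._items.values() if c.status == "pending"]

    def review(self, candidate_id: int, approve: bool) -> None:
        candidate = self._items[candidate_id]
        if candidate.status != "pending":
            raise ValueError(f"candidate {candidate_id} was already reviewed")
        if approve:
            self._ingest(candidate.text)  # only approved text reaches the store
            candidate.status = "approved"
        else:
            candidate.status = "rejected"


approved_store: list[str] = []
queue = ModerationQueue(ingest=approved_store.append)
good = queue.submit("Weaviate's hybrid search combines BM25 and vector search.")
bad = queue.submit("Weaviate was first released in 1995.")  # deliberately wrong
queue.review(good, approve=True)
queue.review(bad, approve=False)
print(approved_store)
```

Keeping rejected candidates in the queue with their status, instead of deleting them, leaves an audit trail of what the synthesizer proposed.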

Scalability and Cost Management

A feedback loop adds computational overhead. Every piece of positive feedback can trigger at least one extra LLM call for synthesis. At scale, this can become expensive. To manage this, consider:

- Batching: Collect feedback over a period and process it in batches rather than in real-time.

- Model Selection: Use a powerful, expensive model (like GPT-4) for the final user-facing generation but a smaller, faster, and cheaper model for the synthesis step, which is a more constrained task. Many open-source models available on Hugging Face are good candidates.

- Monitoring: Use MLOps tools like MLflow or Weights & Biases to track the system’s performance. Monitor the quality of synthesized knowledge and the impact on retrieval accuracy to ensure the loop is genuinely improving the system. For enterprises, deploying on platforms like Azure AI, AWS SageMaker, or Google’s Vertex AI provides the necessary infrastructure for scaling and monitoring.
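The batching strategy from the list above can be sketched as a small buffer that also drops exact duplicates before they cost an LLM call. `FeedbackBatcher` and `process_batch` are hypothetical names; in practice `process_batch` would run the synthesis and ingestion steps over the whole batch.

```python
from typing import Callable


class FeedbackBatcher:
    """Buffers positive feedback and hands it off in batches."""

    def __init__(
        self,
        process_batch: Callable[[list[tuple[str, str]]], None],
        batch_size: int = 3,
    ):
        self._process_batch = process_batch
        self._batch_size = batch_size
        self._buffer: list[tuple[str, str]] = []
        self._seen: set[tuple[str, str]] = set()

    def add(self, query: str, answer: str) -> None:
        key = (query.strip().lower(), answer.strip().lower())
        if key in self._seen:
            return  # identical pair already queued or processed
        self._seen.add(key)
        self._buffer.append((query, answer))
        if len(self._buffer) >= self._batch_size:
            self.flush()

    def flush(self) -> None:
        # Hand off whatever is buffered and start a fresh buffer
        if self._buffer:
            batch, self._buffer = self._buffer, []
            self._process_batch(batch)


batches: list[list[tuple[str, str]]] = []
batcher = FeedbackBatcher(process_batch=batches.append, batch_size=2)
batcher.add("q1", "a1")
batcher.add("q1", "a1")  # duplicate, ignored
batcher.add("q2", "a2")  # buffer reaches batch_size and is flushed
batcher.add("q3", "a3")
batcher.flush()          # flush the remainder, e.g. on a timer or at shutdown
print([len(b) for b in batches])
```

The set of seen pairs grows without bound in this sketch, so a long-running service would need to cap or expire it.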

Conclusion

Generative Feedback Loops represent a fundamental shift from static, passive AI systems to dynamic, active learning partners. By creating a mechanism for an application to learn from its interactions, we can build RAG systems that continuously adapt, self-correct, and increase their value over time. The core cycle—Generate, Capture, Synthesize, and Integrate—provides a clear roadmap for implementation.

The modern AI stack, featuring vector databases like Weaviate as the dynamic memory core, LLMs from providers like OpenAI and Anthropic as the reasoning engine, and orchestration frameworks like LangChain, makes building these systems more accessible than before. While there are challenges in handling negative feedback and managing costs, a knowledge base that improves with use is a clear strategic advantage. As you continue to develop AI-powered applications, we encourage you to move beyond static RAG and start experimenting with feedback loops. They are a practical path toward AI systems that stay accurate and useful as their domain changes.