Unleashing the Next Wave of AI: How NVIDIA’s New Architecture is Revolutionizing the ML Ecosystem

Introduction

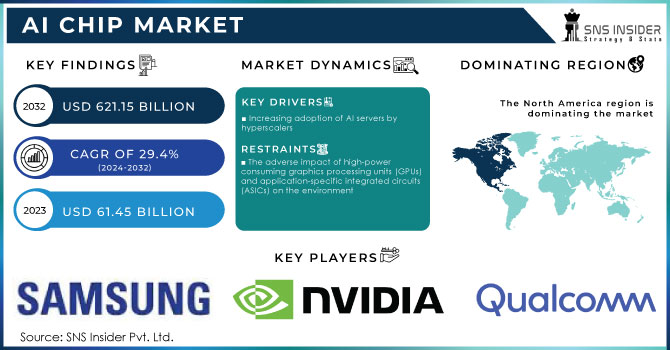

The field of artificial intelligence is in a state of perpetual acceleration, where breakthroughs that once seemed like science fiction are now becoming daily realities. At the heart of this relentless progress lies a symbiotic relationship between software innovation and hardware evolution. The latest NVIDIA AI News underscores this dynamic, revealing how next-generation GPU architectures are not just incremental upgrades but foundational shifts that redefine the boundaries of what’s possible. This evolution sends ripples across the entire AI stack, from the massive foundation models being developed by labs like Google DeepMind and Meta AI to the deployment frameworks that power our everyday applications. For developers, data scientists, and MLOps engineers, understanding the technical implications of these hardware advancements is no longer optional—it’s essential for staying at the cutting edge. This article delves deep into how new GPU architectures are reshaping AI development, providing practical code examples, exploring advanced optimization techniques, and outlining best practices for harnessing this unprecedented computational power.

The Architectural Leap: How New GPUs Reshape AI Development

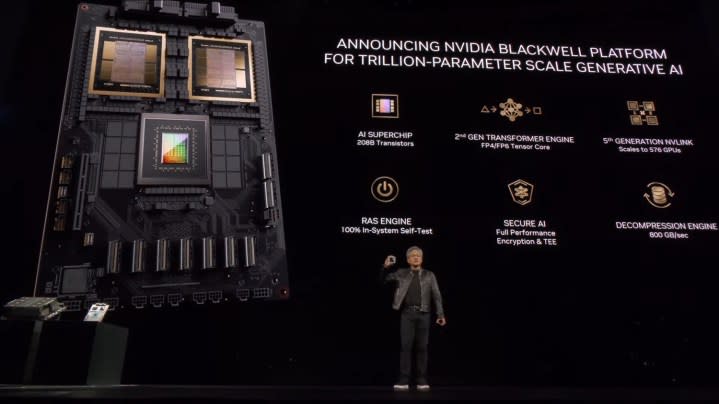

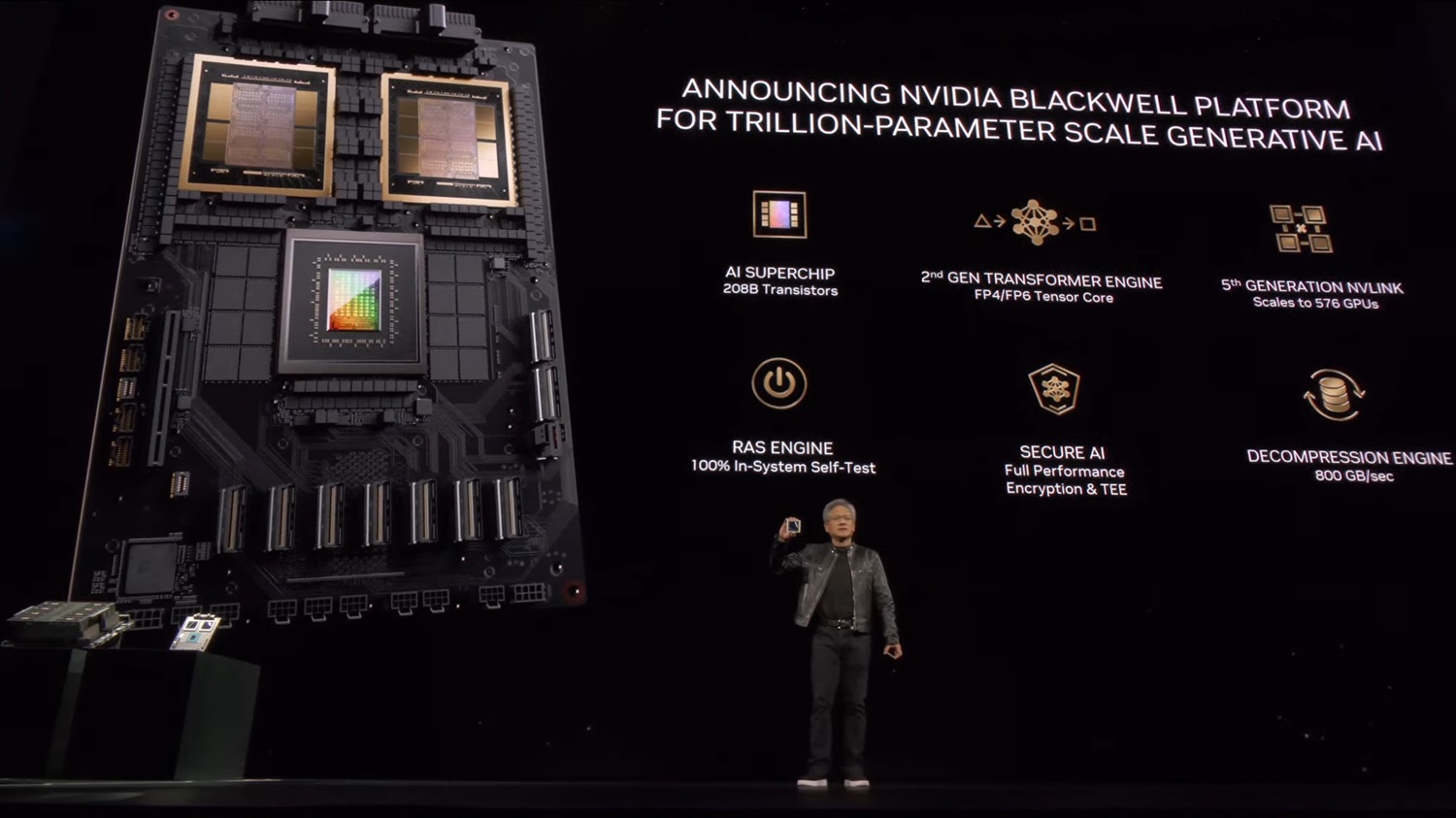

The transition to new GPU architectures, such as NVIDIA’s Blackwell, represents a monumental leap in computational capability. These advancements are not merely about adding more cores; they involve fundamental redesigns in data processing, memory bandwidth, and numerical precision, directly impacting how we build and train models.

Understanding the Core Innovations

At the core of these new architectures are several key innovations. One of the most significant is the introduction of new, lower-precision data formats like FP4 and FP6. While traditional training has relied on FP32 (single precision) and FP16 (half precision), these new formats allow for significantly higher throughput and reduced memory footprints, which is critical for the gargantuan models emerging from the labs of OpenAI, Anthropic, and Mistral AI. The second-generation Transformer Engine, an integral part of the new hardware, can intelligently and dynamically switch between these precisions, optimizing performance without requiring manual intervention from the developer. Furthermore, advancements in interconnect technology like NVLink provide near-limitless scalability for multi-GPU and multi-node training, a prerequisite for training models with trillions of parameters. This directly addresses the needs highlighted in recent PyTorch News and TensorFlow News, as framework developers race to integrate support for these cutting-edge features.

Framework Integration and Performance Gains

Major deep learning frameworks are rapidly evolving to capitalize on these hardware features. PyTorch’s Automatic Mixed Precision (AMP) is a prime example of this synergy. By using `torch.cuda.amp`, developers can effortlessly leverage the hardware’s Tensor Cores, which are specialized for mixed-precision matrix multiplication. The latest updates in frameworks often include deeper integrations with NVIDIA’s libraries like CUDA, cuDNN, and especially the NVIDIA Collective Communications Library (NCCL) for efficient multi-GPU communication. For inference, the story continues with tools like TensorRT, which compiles trained models into highly optimized runtime engines that take full advantage of the specific GPU architecture they are running on. This seamless integration is what translates raw teraflops into real-world performance gains for developers.

# Practical Example: Mixed-Precision Training with PyTorch and AMP

# This demonstrates how easily developers can leverage hardware acceleration.

import torch

import torch.nn as nn

import torch.optim as optim

# A simple model

model = nn.Sequential(nn.Linear(1024, 4096), nn.ReLU(), nn.Linear(4096, 10)).to("cuda")

optimizer = optim.SGD(model.parameters(), lr=0.01)

loss_fn = nn.CrossEntropyLoss()

# Dummy data

inputs = torch.randn(64, 1024, device="cuda")

targets = torch.randint(0, 10, (64,), device="cuda")

# Initialize the gradient scaler for mixed precision

# The scaler helps prevent underflow of gradients in FP16

scaler = torch.cuda.amp.GradScaler()

# Training loop with Automatic Mixed Precision (AMP)

for epoch in range(5): # 5 epochs for demonstration

optimizer.zero_grad()

# The autocast context manager automatically casts operations

# to lower precision (like FP16) where appropriate.

with torch.cuda.amp.autocast():

outputs = model(inputs)

loss = loss_fn(outputs, targets)

# scaler.scale() multiplies the loss by the scale factor

# to prevent gradients from becoming zero (underflowing).

scaler.scale(loss).backward()

# scaler.step() unscales the gradients and calls optimizer.step()

scaler.step(optimizer)

# Updates the scale for the next iteration.

scaler.update()

print(f"Epoch {epoch+1}, Loss: {loss.item()}")

print("Mixed-precision training complete.")Scaling Foundation Models: Training and Fine-Tuning at Unprecedented Scale

The sheer size of modern foundation models necessitates a paradigm shift in training methodologies. A single GPU, no matter how powerful, is insufficient. The real power of new architectures is unlocked when hundreds or thousands of GPUs work in concert. This is where distributed training frameworks and MLOps platforms become indispensable.

The Challenges of Multi-Node, Multi-GPU Training

Training a model with billions or trillions of parameters introduces immense challenges in memory management, data synchronization, and computational efficiency. Frameworks like Microsoft’s DeepSpeed and Anyscale’s Ray provide sophisticated tools to tackle these problems. DeepSpeed’s ZeRO (Zero Redundancy Optimizer) techniques, for instance, partition the model’s state (parameters, gradients, and optimizer states) across the available GPUs, dramatically reducing the memory footprint on each individual device. This allows teams to train models that would otherwise be impossible to fit into memory. Combining this with pipeline parallelism, where the model’s layers are split across GPUs, and tensor parallelism, where individual matrix multiplications are sharded, creates a powerful strategy for scaling. Orchestrating these complex training jobs is a key feature of modern cloud platforms like AWS SageMaker, Azure Machine Learning, and Google’s Vertex AI.

Practical Fine-Tuning with Hugging Face and New Hardware

While pre-training massive models is reserved for a few large organizations, fine-tuning them for specific tasks is a common and powerful technique. The Hugging Face Transformers News is constantly filled with updates that make this process more accessible. Using the `transformers` library in conjunction with tools like `accelerate` and `peft` (Parameter-Efficient Fine-Tuning), developers can efficiently adapt models like Llama or those from Cohere AI to their specific domain. The memory savings from DeepSpeed are particularly valuable here, enabling developers to fine-tune larger models on more modest hardware setups. Tools like LlamaFactory and platforms like RunPod or Modal further democratize this process by providing pre-configured environments and serverless GPU access.

// Example DeepSpeed Configuration for Fine-Tuning (ds_config.json)

// This JSON file configures DeepSpeed to use the ZeRO Stage 2 optimizer,

// which partitions gradients and optimizer states across GPUs to save memory.

{

"fp16": {

"enabled": true,

"loss_scale": 0,

"loss_scale_window": 1000,

"hysteresis": 2,

"min_loss_scale": 1

},

"optimizer": {

"type": "AdamW",

"params": {

"lr": "auto",

"betas": "auto",

"eps": "auto",

"weight_decay": "auto"

}

},

"scheduler": {

"type": "WarmupLR",

"params": {

"warmup_min_lr": "auto",

"warmup_max_lr": "auto",

"warmup_num_steps": "auto"

}

},

"zero_optimization": {

"stage": 2,

"offload_optimizer": {

"device": "cpu",

"pin_memory": true

},

"allgather_partitions": true,

"allgather_bucket_size": 5e8,

"reduce_scatter": true,

"reduce_bucket_size": 5e8,

"contiguous_gradients": true,

"overlap_comm": true

},

"gradient_accumulation_steps": "auto",

"gradient_clipping": "auto",

"steps_per_print": 2000,

"train_batch_size": "auto",

"train_micro_batch_size_per_gpu": "auto",

"wall_clock_breakdown": false

}Revolutionizing Inference: Delivering AI Services at Speed and Scale

A trained model is only valuable if it can be deployed efficiently to serve users. Inference presents a different set of challenges than training, focusing on latency, throughput, and cost-effectiveness. The latest hardware and software innovations are tackling these challenges head-on, enabling real-time AI applications at an unprecedented scale.

The Role of Specialized Inference Servers

Serving large models, especially LLMs, requires more than a simple Flask or FastAPI endpoint. Specialized servers like NVIDIA Triton Inference Server are built for high-performance deployment. Triton offers features like dynamic batching (grouping incoming requests to maximize GPU utilization), multi-model serving from a single instance, and support for models from any framework via backends for TensorFlow, PyTorch, and ONNX. For LLMs specifically, recent vLLM News has highlighted its PagedAttention algorithm, a groundbreaking technique that dramatically improves throughput by optimizing memory management for key-value caches. These servers are becoming the standard for deploying models on platforms like Amazon Bedrock and Azure AI services.

Model Quantization and Compilation with TensorRT

To maximize inference performance, models often undergo a post-training optimization process. This typically involves two steps: quantization and compilation. Quantization is the process of converting a model’s weights from high-precision formats (like FP32) to lower-precision ones (like INT8 or the new FP4). This reduces the model’s size and speeds up computation, especially on hardware with specialized cores. The ONNX (Open Neural Network Exchange) format often serves as a crucial intermediary step, providing a standardized representation of the model. From ONNX, tools like NVIDIA’s TensorRT can take over. TensorRT is a deep learning compiler and runtime that applies numerous optimizations, including layer fusion, kernel auto-tuning, and precision calibration, to generate a highly optimized engine tailored for the specific target GPU. This workflow is a best practice for achieving the lowest possible latency.

# Conceptual Workflow: PyTorch -> ONNX -> TensorRT

# This code illustrates the steps for optimizing a model for inference.

import torch

import torch.onnx

import tensorrt as trt

# Assume 'model' is a trained PyTorch model and is in evaluation mode

# model = YourPytorchModel().cuda().eval()

# For demonstration, we'll use a simple model from torchvision

model = torch.hub.load('pytorch/vision:v0.10.0', 'resnet18', pretrained=True).cuda().eval()

dummy_input = torch.randn(1, 3, 224, 224, device="cuda")

# 1. Export the PyTorch model to ONNX format

onnx_model_path = "resnet18.onnx"

torch.onnx.export(model,

dummy_input,

onnx_model_path,

export_params=True,

opset_version=11,

do_constant_folding=True,

input_names=['input'],

output_names=['output'],

dynamic_axes={'input' : {0 : 'batch_size'},

'output' : {0 : 'batch_size'}})

print(f"Model exported to {onnx_model_path}")

# 2. Build a TensorRT engine from the ONNX model

TRT_LOGGER = trt.Logger(trt.Logger.WARNING)

trt_engine_path = "resnet18.engine"

with trt.Builder(TRT_LOGGER) as builder, \

builder.create_network(1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH)) as network, \

trt.OnnxParser(network, TRT_LOGGER) as parser:

builder.max_batch_size = 16 # Set max batch size

config = builder.create_builder_config()

config.max_workspace_size = 1 << 30 # 1GB workspace size

# Enable FP16 mode for further optimization on supported GPUs

if builder.platform_has_fast_fp16:

config.set_flag(trt.BuilderFlag.FP16)

print(f"Parsing ONNX file from: {onnx_model_path}")

with open(onnx_model_path, 'rb') as model_file:

if not parser.parse(model_file.read()):

print("ERROR: Failed to parse the ONNX file.")

for error in range(parser.num_errors):

print(parser.get_error(error))

exit()

print("Building TensorRT engine... This may take a few minutes.")

engine = builder.build_engine(network, config)

if engine:

with open(trt_engine_path, "wb") as f:

f.write(engine.serialize())

print(f"TensorRT engine saved to: {trt_engine_path}")

else:

print("Failed to build TensorRT engine.")The Broader Ecosystem: MLOps, Cloud Platforms, and RAG

The advancements in hardware and core frameworks catalyze innovation throughout the AI ecosystem, particularly in MLOps and application development. The ability to train and serve larger, more capable models places new demands on the tools we use to manage their lifecycle.

MLOps in the Age of Giant Models

As model complexity grows, so does the need for robust MLOps practices. Experiment tracking is more critical than ever, and tools like MLflow, Weights & Biases, and ClearML are essential for logging metrics, parameters, and artifacts from thousands of training runs. Model registries become the single source of truth for production-ready models. Furthermore, the rise of Retrieval-Augmented Generation (RAG) adds another layer of complexity. Tools like LangSmith are emerging to provide much-needed observability into the complex, multi-step chains of LLM applications. This comprehensive MLOps lifecycle is increasingly managed on integrated platforms like Snowflake Cortex and DataRobot, which aim to provide an end-to-end solution from data preparation to deployment.

Powering Next-Gen AI Applications like RAG

The performance unlocked by new GPUs is a direct enabler for sophisticated applications like RAG. RAG enhances LLMs by providing them with external, up-to-date information from a knowledge base, typically stored in a vector database. The process involves embedding a user query, searching a vector index for relevant documents, and then feeding those documents to an LLM as context. Each step needs to be incredibly fast to maintain a conversational user experience. This is where the ecosystem shines: libraries like Sentence Transformers create the embeddings, vector databases like Pinecone, Milvus, or Weaviate perform the ultra-fast similarity search, and frameworks like LangChain and LlamaIndex orchestrate the entire pipeline. The low-latency inference provided by a TensorRT-optimized model on the latest NVIDIA hardware is the engine that makes this entire complex interaction possible in real-time.

# Conceptual Example: A simple RAG pipeline using LangChain

# This shows how different components come together in a modern AI application.

from langchain.vectorstores import FAISS

from langchain.embeddings import HuggingFaceEmbeddings

from langchain.chains import RetrievalQA

from langchain.llms import HuggingFaceHub # Placeholder for a powerful LLM endpoint

# 1. Initialize embedding model (runs efficiently on GPU)

# Recent Sentence Transformers News shows continuous model improvements.

embeddings = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2")

# 2. Create a knowledge base (Vector Store)

# In a real app, this would be populated with your documents.

# Chroma, Qdrant, or Pinecone News often highlight new scaling features.

texts = ["The new NVIDIA architecture is called Blackwell.", "It features a second-generation Transformer Engine.", "TensorRT optimizes models for inference."]

vectorstore = FAISS.from_texts(texts, embeddings)

retriever = vectorstore.as_retriever()

# 3. Initialize a powerful LLM

# This would point to a deployed model, perhaps served via Triton or vLLM.

# The endpoint could be from Replicate, an AWS SageMaker endpoint, etc.

llm = HuggingFaceHub(repo_id="google/flan-t5-xxl", model_kwargs={"temperature":0.1})

# 4. Create the Retrieval-Augmented Generation (RAG) chain

# LangChain News and LlamaIndex News frequently announce new chain types and integrations.

qa_chain = RetrievalQA.from_chain_type(

llm=llm,

chain_type="stuff",

retriever=retriever

)

# 5. Ask a question

question = "What is a key feature of the new NVIDIA architecture?"

response = qa_chain.run(question)

print(f"Question: {question}")

print(f"Answer: {response}")Conclusion

The latest advancements in NVIDIA’s GPU architecture are far more than just a headline in the tech news cycle; they are a fundamental catalyst propelling the entire artificial intelligence ecosystem forward. This new wave of hardware directly enables the training of larger, more sophisticated models, while simultaneously providing the raw power needed to deploy them for real-time inference at scale. For developers and engineers, this translates into a clear call to action: embrace the tools and techniques that bridge the gap between hardware potential and application reality. This means mastering mixed-precision training in PyTorch and TensorFlow, leveraging distributed frameworks like DeepSpeed for fine-tuning, and adopting optimized inference runtimes like TensorRT and Triton. By staying abreast of these co-evolving hardware and software trends, we can not only build more powerful AI systems but also unlock novel applications that will continue to shape our future.