Unpacking the Latest LlamaFactory Updates: Advanced LLM Fine-Tuning Made Easy

The world of large language models (LLMs) is moving at an unprecedented pace, with breakthroughs and new models announced almost weekly. While foundation models from organizations like OpenAI, Google DeepMind, and Mistral AI provide incredible general-purpose capabilities, the true power for many businesses and researchers lies in specialization. Fine-tuning—the process of adapting a pre-trained model to a specific task or domain—is the key to unlocking this potential. However, the technical complexity of training pipelines, from data preparation to distributed training and inference optimization, has often been a significant barrier. This is where LlamaFactory enters the picture.

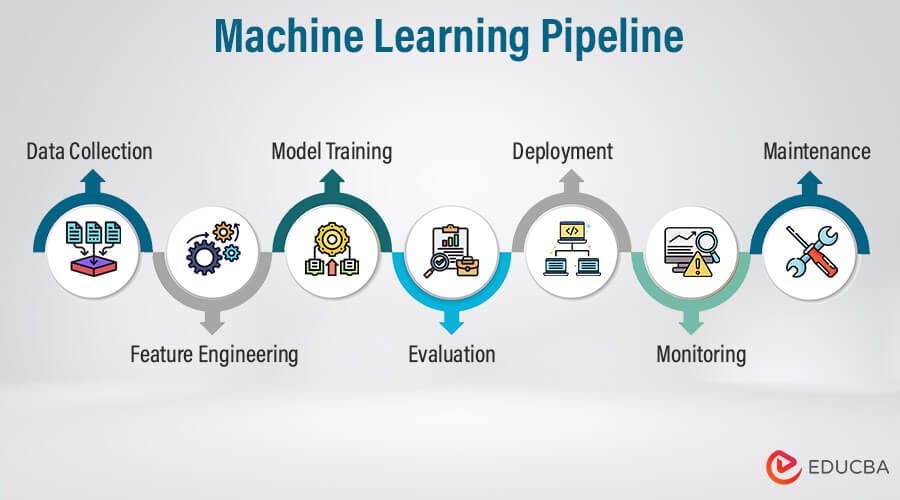

LlamaFactory has rapidly emerged as a go-to, all-in-one framework for fine-tuning LLMs, dramatically simplifying the entire lifecycle. It provides a unified, user-friendly interface for a wide array of state-of-the-art techniques, including Supervised Fine-Tuning (SFT), Reinforcement Learning from Human Feedback (RLHF), and Direct Preference Optimization (DPO). Recent releases highlight its commitment to staying on the cutting edge. They add support for the latest models, introduce more efficient training methods, and streamline the path from training to deployment. This article dives deep into these updates, providing a comprehensive technical guide with practical code examples to help you leverage the full power of LlamaFactory for your next AI project.

The LlamaFactory Ecosystem: A Refresher on Core Capabilities

Before exploring the latest advancements, it’s essential to understand why LlamaFactory has gained such traction. Its core philosophy is to abstract away the boilerplate code and complex configurations typically associated with LLM training, which often require deep knowledge of frameworks like PyTorch and tools like DeepSpeed. This allows developers to focus on what truly matters: their data and the model’s behavior. The PyTorch ecosystem has increasingly embraced higher-level training libraries, and LlamaFactory is a prime example of this trend in action.

A Unified Training and Inference Interface

LlamaFactory provides a single, coherent interface for multiple training paradigms. You can reach it through a command-line interface (CLI), a web UI, or a Python API. Whether you’re performing initial instruction tuning with SFT or aligning your model with human preferences using DPO, the workflow remains consistent. This unification is a significant productivity booster, eliminating the need to learn separate toolchains for different stages of model development. The framework is built on top of the robust Hugging Face Transformers ecosystem, ensuring compatibility with a vast range of models and datasets available on the Hugging Face Hub.

What’s New: Expanded Model Support and PEFT Techniques

Keeping pace with the rapid release schedule of new models from Meta AI (Llama 3), Mistral AI (Mistral Large, Mixtral), and others is a constant challenge. A major recent focus for LlamaFactory has been to provide immediate, out-of-the-box support for these new architectures. This means users can start fine-tuning the latest models without waiting for custom implementations. Furthermore, the framework excels at integrating Parameter-Efficient Fine-Tuning (PEFT) methods like LoRA and its quantized variant, QLoRA. This allows for fine-tuning massive models on consumer-grade hardware, a democratizing force in the AI community. The ability to easily apply these techniques is a game-changer for anyone without access to a large-scale cluster from providers like AWS SageMaker or Azure Machine Learning.
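A back-of-the-envelope calculation shows why quantization matters here. The sketch below estimates only the memory needed to hold the frozen base weights at different precisions. It assumes a round 8 billion parameters for an 8B-class model. It ignores activations, the LoRA weights and their optimizer states, and quantization overhead such as per-block scaling constants, so real usage will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory (GB, where 1 GB = 1e9 bytes) needed to store model weights."""
    return num_params * (bits_per_param / 8) / 1e9


# Assumption: a round 8 billion parameters for an 8B-class model such as Llama 3 8B.
NUM_PARAMS = 8e9

for label, bits in [("fp32", 32), ("fp16/bf16", 16), ("int8", 8), ("4-bit", 4)]:
    print(f"{label:>9}: {weight_memory_gb(NUM_PARAMS, bits):5.1f} GB")
```

Storing the base in 4 bits cuts weight memory by 4x relative to half precision. That reduction is what leaves room on a single consumer GPU for activations and the adapter’s training state.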

Getting started is incredibly straightforward. Here’s a practical example of launching a QLoRA SFT job for the new Llama 3 8B model using the command-line interface.

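```bash
# Example: Supervised Fine-Tuning (SFT) on Llama 3 8B with QLoRA
# This command assumes you have LlamaFactory installed, your dataset is ready,
# and you have been granted access to the gated meta-llama model on the Hugging Face Hub.
# QLoRA = LoRA adapters (--finetuning_type lora) on a 4-bit quantized base (--quantization_bit 4).
# Effective batch size per GPU = 2 (per-device batch) x 4 (accumulation steps) = 8.
# On GPUs with bfloat16 support, --bf16 can be used in place of --fp16.
llamafactory-cli train \
  --stage sft \
  --do_train \
  --model_name_or_path meta-llama/Meta-Llama-3-8B-Instruct \
  --dataset alpaca_gpt4_en \
  --template llama3 \
  --finetuning_type lora \
  --lora_target q_proj,v_proj \
  --output_dir saves/Llama3-8B-Instruct/lora/sft \
  --overwrite_cache \
  --per_device_train_batch_size 2 \
  --gradient_accumulation_steps 4 \
  --lr_scheduler_type cosine \
  --logging_steps 10 \
  --save_steps 100 \
  --learning_rate 5e-5 \
  --num_train_epochs 3.0 \
  --plot_loss \
  --quantization_bit 4 \
  --fp16
```

Beyond SFT: Mastering Advanced Alignment with DPO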

While SFT is excellent for teaching a model new knowledge or skills, aligning its behavior with human preferences often requires more advanced techniques. Reinforcement Learning from Human Feedback (RLHF) was the pioneering method, but it is notoriously complex and unstable to train. It requires fitting a separate reward model and then optimizing the policy against that model with a reinforcement learning algorithm such as PPO. Direct Preference Optimization (DPO), introduced in 2023 by researchers at Stanford, has emerged as a simpler and more stable alternative that needs neither an explicit reward model nor an RL loop. DPO reframes alignment as a simple classification problem, where the model learns to distinguish between “chosen” and “rejected” responses from a preference dataset. LlamaFactory has embraced this trend, offering a robust and easy-to-use implementation of DPO.
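The classification framing can be made concrete with the standard per-example DPO loss. The loss is the negative log-sigmoid of the difference in implicit rewards between the chosen and rejected responses. Each implicit reward is beta times the log-probability ratio between the trainable policy and a frozen reference model. The sketch below works on precomputed sequence log-probabilities. The beta of 0.1 and the numbers in the usage lines are illustrative. It is a plain-Python restatement of the objective, not LlamaFactory’s internal implementation.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    if z > 0:
        return z + math.log1p(math.exp(-z))
    return math.log1p(math.exp(z))


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-example DPO loss from summed log-probabilities of each response.

    Inputs are log p(response | prompt) under the trainable policy and under
    the frozen reference model (typically the SFT checkpoint).
    """
    # Implicit reward: how far the policy has moved a response's log-probability
    # away from the reference model, scaled by beta.
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    # Logistic (binary classification) loss on the reward margin:
    # -log(sigmoid(margin)) == softplus(-margin).
    return softplus(-(chosen_reward - rejected_reward))


# Policy identical to the reference: the margin is 0, so the loss is log 2.
print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 4))
# Policy has shifted probability toward the chosen response: the loss drops below log 2.
print(round(dpo_loss(-8.0, -14.0, -10.0, -12.0), 4))
```

Minimizing this loss pushes the chosen response’s implicit reward above the rejected one’s. The reference model keeps the policy from drifting arbitrarily far.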

Configuring a DPO Training Run

To run DPO, you need a preference dataset. This dataset typically contains a prompt, a “chosen” response (the preferred one), and a “rejected” response (the less preferred one). LlamaFactory simplifies the process by letting you define your datasets in a central dataset_info.json file. This modular approach makes it easy to mix and match datasets for different experiments. It is far simpler than wrestling with custom data loaders in raw PyTorch or TensorFlow. Tools from the broader ecosystem, such as LangChain and LlamaIndex, can also help generate these preference datasets at scale.
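The sketch below illustrates the record shape just described. It parses JSON Lines text with one preference example per line. It checks that each record carries non-empty `prompt`, `chosen`, and `rejected` strings. The field names mirror the columns used in this article. The validation rules themselves are illustrative assumptions, not requirements enforced by LlamaFactory: non-empty strings, and a chosen response that differs from the rejected one.

```python
import json

REQUIRED_FIELDS = ("prompt", "chosen", "rejected")


def load_preference_records(jsonl_text: str) -> list:
    """Parse JSON Lines preference data, validating each record.

    Raises ValueError (json.JSONDecodeError is a subclass) for malformed
    or incomplete records.
    """
    records = []
    for line_no, line in enumerate(jsonl_text.splitlines(), start=1):
        if not line.strip():
            continue  # skip blank lines
        record = json.loads(line)
        if not isinstance(record, dict):
            raise ValueError(f"line {line_no}: expected a JSON object")
        for field in REQUIRED_FIELDS:
            value = record.get(field)
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"line {line_no}: missing or empty {field!r}")
        if record["chosen"] == record["rejected"]:
            raise ValueError(f"line {line_no}: 'chosen' and 'rejected' are identical")
        records.append(record)
    return records


sample = "\n".join([
    json.dumps({"prompt": "What is 2 + 2?", "chosen": "2 + 2 = 4.", "rejected": "It is 5."}),
    json.dumps({"prompt": "Name a primary color.", "chosen": "Red.", "rejected": "Purple."}),
])
print(len(load_preference_records(sample)), "valid records")
```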

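Here is an example of what a dataset configuration for a DPO run might look like in dataset_info.json. This file tells LlamaFactory where to find the data and how to parse it. The `"ranking": true` key marks an entry as a preference (chosen/rejected) dataset rather than a plain instruction dataset. The referenced files are looked up in the dataset directory, which is `data/` by default.

```json
{
  "hh_rlhf_en": {
    "file_name": "anthropic_hh_rlhf.jsonl",
    "ranking": true,
    "columns": {
      "prompt": "prompt",
      "chosen": "chosen",
      "rejected": "rejected"
    }
  },
  "dpo_mix_en": {
    "file_name": "dpo_mix_7k.jsonl",
    "ranking": true,
    "columns": {
      "prompt": "prompt",
      "chosen": "chosen",
      "rejected": "rejected"
    }
  }
}
```

Programmatically Preparing Your Data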

Often, your raw data won’t be in the exact format required. You might need to combine fields, translate, or filter examples. Using Python and the Hugging Face datasets library is a powerful way to preprocess your data before feeding it to LlamaFactory. This programmatic approach is crucial for building reproducible MLOps pipelines. Once your data is ready, you can easily launch the DPO training run from the command line, referencing the SFT model you trained previously.
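The DPO launch reuses the same `llamafactory-cli train` entry point as the SFT run. It switches to `--stage dpo` and points `--dataset` at a preference entry such as `dpo_mix_en` from the dataset_info.json example. The sketch below assembles that command in Python so it can be logged alongside an experiment. It is not an official LlamaFactory helper. The SFT adapter path comes from the earlier SFT command. The DPO output directory, learning rate, and epoch count are hypothetical choices. Confirm the flags against the documentation for your installed version.

```python
import shlex


def build_dpo_command(sft_adapter_dir: str, dataset: str, output_dir: str) -> list:
    """Assemble a LlamaFactory DPO training command as an argv list.

    Flag names mirror the SFT command in this article; values are illustrative.
    """
    options = {
        "stage": "dpo",
        "do_train": True,
        "model_name_or_path": "meta-llama/Meta-Llama-3-8B-Instruct",
        "adapter_name_or_path": sft_adapter_dir,  # continue from the SFT LoRA adapter
        "dataset": dataset,  # should be a "ranking" entry in dataset_info.json
        "template": "llama3",
        "finetuning_type": "lora",
        "quantization_bit": 4,  # keep the QLoRA setup used for SFT
        "output_dir": output_dir,
        "per_device_train_batch_size": 2,
        "gradient_accumulation_steps": 4,
        "learning_rate": 5e-6,  # hypothetical; DPO commonly uses a lower LR than SFT
        "num_train_epochs": 1.0,  # hypothetical
        "fp16": True,
    }
    argv = ["llamafactory-cli", "train"]
    for key, value in options.items():
        if value is True:
            argv.append(f"--{key}")  # boolean switch such as --do_train
        else:
            argv.extend([f"--{key}", str(value)])
    return argv


cmd = build_dpo_command(
    sft_adapter_dir="saves/Llama3-8B-Instruct/lora/sft",
    dataset="dpo_mix_en",
    output_dir="saves/Llama3-8B-Instruct/lora/dpo",  # hypothetical output path
)
print(shlex.join(cmd))
# To launch it from Python instead: subprocess.run(cmd, check=True)
```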

Below is a Python snippet demonstrating how to load a raw dataset and transform it into the required “prompt”, “chosen”, “rejected” format.

```python
from datasets import load_dataset


def format_dpo_dataset(example):
    # Example transformation: build a prompt from a question and
    # assume 'response_a' is chosen and 'response_b' is rejected.
    return {
        "prompt": "Question: " + example["question"],
        "chosen": example["response_a"],
        "rejected": example["response_b"],
    }


# Load a hypothetical raw dataset from the Hugging Face Hub
raw_dataset = load_dataset("your_namespace/your_raw_preference_data")

# Apply the transformation, dropping the original columns so that only
# "prompt", "chosen", and "rejected" remain
train_split = raw_dataset["train"]
dpo_dataset = train_split.map(format_dpo_dataset, remove_columns=train_split.column_names)

# Save the formatted dataset as JSON Lines for LlamaFactory
dpo_dataset.to_json("my_dpo_dataset.jsonl", orient="records", lines=True)

print("Dataset successfully converted and saved.")
```

Operationalizing Your Models: MLOps and High-Performance Inference

Training a model is only half the battle. The ultimate goal is to deploy it for real-world applications. Recent LlamaFactory releases have focused heavily on bridging the gap between training and production, incorporating features that align with modern MLOps practices and high-performance serving.

Experiment Tracking and Model Management

Reproducibility and traceability are cornerstones of MLOps. LlamaFactory integrates with popular experiment tracking tools like Weights & Biases, MLflow, and TensorBoard. Training runs on the Hugging Face Trainer, so adding a flag such as `--report_to wandb` (or `mlflow`, `tensorboard`) to your training command logs hyperparameters and metrics automatically. These include training loss and evaluation scores, and depending on the tracker’s configuration, model checkpoints can be logged as well. This is invaluable for comparing different runs, debugging issues, and maintaining a clear history of your model’s lineage, a practice heavily promoted by platforms like Comet ML and ClearML.
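Even without an external tracker, the run history is on disk. Because LlamaFactory trains through the Hugging Face Trainer, each saved checkpoint directory contains a `trainer_state.json` file, and at the end of training the output directory typically contains one as well. That file’s `log_history` list records the logged metrics. The sketch below extracts the training-loss curve from that structure so runs can be compared. It assumes the standard `step` and `loss` keys. The two run payloads are made-up placeholders, not real results.

```python
import json
from pathlib import Path


def loss_curve(state: dict) -> list:
    """Return (step, training loss) pairs from a trainer_state.json payload."""
    # Evaluation entries log "eval_loss" and the final summary logs "train_loss",
    # so keep only entries with a plain "loss" key.
    return [(entry["step"], entry["loss"])
            for entry in state.get("log_history", []) if "loss" in entry]


def load_loss_curve(run_dir: str) -> list:
    """Read <run_dir>/trainer_state.json and return its training-loss curve."""
    state = json.loads(Path(run_dir, "trainer_state.json").read_text())
    return loss_curve(state)


# Made-up payloads standing in for two runs' trainer_state.json files.
run_a = {"log_history": [{"step": 10, "loss": 1.9}, {"step": 20, "loss": 1.4},
                         {"step": 20, "eval_loss": 1.5}]}
run_b = {"log_history": [{"step": 10, "loss": 2.1}, {"step": 20, "loss": 1.7}]}

for name, state in [("run_a", run_a), ("run_b", run_b)]:
    step, loss = loss_curve(state)[-1]
    print(f"{name}: loss {loss} at step {step}")
```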

Blazing-Fast Inference with vLLM Integration

Once your model is fine-tuned, serving it efficiently is critical. Naive inference implementations can be slow and memory-intensive. Much of the recent progress in serving revolves around engines like vLLM, which uses innovations like PagedAttention to dramatically increase throughput. LlamaFactory includes a built-in API server that can use vLLM as its backend. This allows you to go from a trained LoRA adapter to a high-performance API endpoint in just two commands: one to merge the adapter into the base model, and another to launch the server. For even more advanced optimization, models can be exported to formats like ONNX and accelerated with NVIDIA tools such as TensorRT for deployment on Triton Inference Server.
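The core idea behind PagedAttention can be illustrated with a toy allocator. A naive server reserves one contiguous KV-cache region per request, sized for the maximum sequence length. PagedAttention instead carves KV-cache memory into fixed-size blocks, and each sequence keeps a block table that maps its logical blocks to whichever physical blocks were free. The sketch below models only that bookkeeping, with no attention math. The 16-token block size and the class and method names are illustrative, not vLLM’s API.

```python
class PagedKVCache:
    """Toy block-table bookkeeping in the spirit of PagedAttention."""

    def __init__(self, num_blocks: int, block_size: int = 16):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))  # ids of unused physical blocks
        self.block_tables: dict[str, list[int]] = {}  # seq id -> physical block ids
        self.lengths: dict[str, int] = {}  # seq id -> tokens stored so far

    def append_token(self, seq_id: str) -> None:
        """Reserve room for one more token, allocating a block only when needed."""
        length = self.lengths.get(seq_id, 0)
        table = self.block_tables.setdefault(seq_id, [])
        if length % self.block_size == 0:  # no block yet, or the last one is full
            if not self.free_blocks:
                raise MemoryError("KV cache exhausted")
            table.append(self.free_blocks.pop())
        self.lengths[seq_id] = length + 1

    def physical_slot(self, seq_id: str, position: int) -> tuple[int, int]:
        """Map a logical token position to (physical block id, offset in block)."""
        block_index, offset = divmod(position, self.block_size)
        return self.block_tables[seq_id][block_index], offset

    def free(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the free pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


cache = PagedKVCache(num_blocks=8, block_size=16)
for _ in range(20):
    cache.append_token("req-1")  # 20 tokens -> 2 blocks
for _ in range(5):
    cache.append_token("req-2")  # 5 tokens -> 1 block
print(cache.block_tables, len(cache.free_blocks), "blocks free")
```

A sequence wastes at most one partially filled block, so many more concurrent requests fit in the same GPU memory. Much of vLLM’s throughput gain comes from this denser batching.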

Here’s how you can export your fine-tuned model and launch a vLLM-powered API server.

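```bash
# Step 1: Export the model
# This merges the LoRA weights into the base model for standalone deployment.
# Load the base model unquantized here (no --quantization_bit) so the adapter
# is merged into full-precision weights.
# --export_size is the maximum shard size (in GB) of the saved checkpoint files;
# --export_legacy_format False writes .safetensors instead of .bin files.
llamafactory-cli export \
  --model_name_or_path meta-llama/Meta-Llama-3-8B-Instruct \
  --adapter_name_or_path saves/Llama3-8B-Instruct/lora/sft \
  --template llama3 \
  --finetuning_type lora \
  --export_dir models/Llama3-8B-SFT-merged \
  --export_size 2 \
  --export_legacy_format False
```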
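A little linear algebra shows what the export step does to each adapted layer. A LoRA-adapted linear layer computes W x + (alpha / r) B A x, where A (r x d_in) and B (d_out x r) are the trained low-rank factors. Merging folds that update into the weight once: W_merged = W + (alpha / r) B A. The plain-Python sketch below uses tiny made-up matrices to check that the merged layer reproduces the adapter-augmented output. It illustrates the math behind LoRA merging, not LlamaFactory’s actual export code.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(row[j] * v[j] for j in range(len(v))) for row in m]


def merge_lora(w, lora_a, lora_b, alpha, rank):
    """Return W + (alpha / rank) * B @ A, the merged weight."""
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)  # (d_out x r) @ (r x d_in) -> d_out x d_in
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]  # frozen base weight, d_out=2, d_in=3
A = [[1.0, 2.0, 0.0]]                    # LoRA down-projection, r=1
B = [[0.5], [-1.0]]                      # LoRA up-projection
alpha, rank = 2.0, 1
x = [1.0, 1.0, 1.0]

# Unmerged forward pass: base output plus the scaled adapter output.
adapter_out = [alpha / rank * v for v in matvec(B, matvec(A, x))]
unmerged = [base + extra for base, extra in zip(matvec(W, x), adapter_out)]

# Merged forward pass: a single matrix-vector product.
merged = matvec(merge_lora(W, A, B, alpha, rank), x)
print(unmerged, merged)
```

The merged weight has the same shape as the original. The exported checkpoint can therefore be served like any ordinary model, with no extra adapter multiplications at inference time.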

```bash
# Step 2: Launch the API server with vLLM
# This starts an OpenAI-compatible REST endpoint. Host and port are read from
# the API_HOST and API_PORT environment variables; vLLM must be installed.
API_HOST=0.0.0.0 API_PORT=8000 llamafactory-cli api \
  --model_name_or_path models/Llama3-8B-SFT-merged \
  --template llama3 \
  --infer_backend vllm
```

Best Practices for Efficient and Effective Fine-Tuning

To get the most out of LlamaFactory, it’s important to follow best practices for both efficiency and model quality. The framework provides many knobs to turn, and knowing which ones to focus on can save significant time and compute resources.

Choosing the Right PEFT Strategy

For most use cases, starting with QLoRA is the recommended approach. It drastically reduces memory usage, allowing you to fine-tune larger models on smaller GPUs. When configuring LoRA, the `lora_target` parameter is crucial. It specifies which modules of the model receive adapters, for example the attention projections `q_proj` and `v_proj`. Targeting all linear layers (recent versions accept `--lora_target all`) can yield better performance at the cost of more trainable parameters. Experimenting with different LoRA ranks (`lora_rank`) and alpha values (`lora_alpha`) is also key to finding the sweet spot between performance and adapter size.
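The sketch below shows how the choice of `lora_target` and `lora_rank` changes the number of trainable parameters. Each adapted linear layer of shape d_in x d_out gains r x (d_in + d_out) LoRA parameters. The layer shapes follow Llama 3 8B’s published configuration: hidden size 4096, 1024-wide key/value projections from grouped-query attention, MLP width 14336, and 32 layers. The names `LLAMA3_8B_LINEARS` and `lora_param_count` are illustrative.

```python
# Linear-layer shapes (d_in, d_out) for one Llama 3 8B decoder layer.
LLAMA3_8B_LINEARS = {
    "q_proj": (4096, 4096),
    "k_proj": (4096, 1024),  # 8 KV heads x 128 dims (grouped-query attention)
    "v_proj": (4096, 1024),
    "o_proj": (4096, 4096),
    "gate_proj": (4096, 14336),
    "up_proj": (4096, 14336),
    "down_proj": (14336, 4096),
}
NUM_LAYERS = 32


def lora_param_count(targets, rank, layers=LLAMA3_8B_LINEARS, num_layers=NUM_LAYERS):
    """Trainable LoRA parameters: r * (d_in + d_out) per adapted matrix, per layer."""
    per_layer = sum(rank * (d_in + d_out)
                    for name, (d_in, d_out) in layers.items() if name in targets)
    return per_layer * num_layers


for label, targets in [("q_proj,v_proj", ("q_proj", "v_proj")),
                       ("all linear", tuple(LLAMA3_8B_LINEARS))]:
    for rank in (8, 16):
        print(f"{label:>13}  r={rank:<3} {lora_param_count(targets, rank):>12,}")
```

Even the largest configuration here trains about 42 million parameters, well under 1% of the base model. The trade-off is more adapter capacity in exchange for more optimizer memory and compute.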

Accelerating Training with DeepSpeed

For multi-GPU training or when working with very large models, leveraging DeepSpeed is essential. LlamaFactory has first-class support for DeepSpeed’s ZeRO (Zero Redundancy Optimizer) stages, which progressively partition training state across GPUs to reduce per-device memory. Stage 1 shards the optimizer states, stage 2 also shards the gradients, and stage 3 also shards the model weights. Enabling DeepSpeed is as simple as passing a configuration file via `--deepspeed`, for example one of the ZeRO presets shipped in the repository’s examples. Combined with FlashAttention for optimized self-attention computation, this can lead to dramatic speedups in your training jobs. Tools like Ray and Dask are also part of this broader distributed computing ecosystem, tackling similar challenges at different scales.
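The per-stage savings follow from the standard accounting used in the ZeRO work for mixed-precision Adam training. Per parameter, that is about 2 bytes for half-precision weights, 2 for half-precision gradients, and 12 for fp32 optimizer state (master weights, momentum, and variance). The sketch below applies that accounting to estimate per-GPU memory for model states at each stage. It assumes full-parameter training of a round 8B-parameter model on 8 GPUs. It ignores activations, temporary buffers, and fragmentation, so treat the figures as lower bounds.

```python
def zero_model_state_gb(num_params: float, num_gpus: int, stage: int) -> float:
    """Per-GPU memory (GB) for weights, gradients, and Adam states under ZeRO 0-3."""
    params = 2 * num_params   # fp16/bf16 weights (bytes)
    grads = 2 * num_params    # fp16/bf16 gradients (bytes)
    optim = 12 * num_params   # fp32 master weights + momentum + variance (bytes)
    if stage >= 1:
        optim /= num_gpus     # stage 1: shard optimizer states
    if stage >= 2:
        grads /= num_gpus     # stage 2: also shard gradients
    if stage >= 3:
        params /= num_gpus    # stage 3: also shard the weights themselves
    return (params + grads + optim) / 1e9


# Assumption: a round 8B-parameter model trained on 8 GPUs.
for stage in range(4):
    print(f"ZeRO-{stage}: {zero_model_state_gb(8e9, num_gpus=8, stage=stage):6.1f} GB per GPU")
```

With LoRA, only the adapter has gradients and optimizer state, so those two terms shrink to the adapter’s size. In that case the frozen weights dominate.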

Common Pitfalls to Avoid

A few common mistakes can derail a fine-tuning project. First, ensure your data quality is high; the model will amplify any noise or biases present in the training set. Second, choose a base model that is already strong in the general domain you’re targeting. Fine-tuning is for specialization, not for teaching a model fundamental reasoning from scratch. Finally, don’t neglect hyperparameter tuning. The default learning rate and scheduler might not be optimal for your specific model and dataset. Consider using hyperparameter optimization tools like Optuna for a more systematic search.
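Before tuning the schedule, it helps to see what the SFT command’s `--lr_scheduler_type cosine` actually does. The sketch below reproduces the shape of the Hugging Face Transformers cosine schedule with its default half cycle. It applies an optional linear warmup, then decays the learning rate from its peak to zero along half a cosine wave. It is a standalone illustration. The 100-step horizon is arbitrary, and because the SFT command sets no warmup, `warmup_steps` defaults to 0 here.

```python
import math


def cosine_lr(step: int, total_steps: int, base_lr: float, warmup_steps: int = 0) -> float:
    """Learning rate at `step`: linear warmup, then half-cosine decay to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


for step in (0, 25, 50, 75, 100):
    print(step, f"{cosine_lr(step, total_steps=100, base_lr=5e-5):.2e}")
```

The learning rate drops slowly at first, fastest around the midpoint, and then flattens toward zero. A systematic search with a tool like Optuna would typically vary `base_lr` and the warmup length around this shape.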

Conclusion: The Future of Accessible LLM Customization

The latest updates from LlamaFactory solidify its position as an indispensable tool in the modern AI stack. By continuously integrating support for new models, refining advanced alignment techniques like DPO, and building robust MLOps and inference pathways, the framework empowers a broader audience to create highly customized, state-of-the-art language models. It elegantly navigates the complex AI landscape, bridging powerful low-level tools from the NVIDIA ecosystem with the high-level usability demanded by developers and researchers.

As the field evolves, with platforms like Ollama making local model execution trivial and services like Replicate and Modal simplifying cloud deployment, having a reliable and versatile fine-tuning toolkit is more important than ever. LlamaFactory is not just a tool; it’s an accelerator for innovation. The next steps are clear: explore the official documentation, try fine-tuning a model on a custom dataset using a Google Colab notebook, and engage with the active community to share your findings and learn from others. The journey to building your own specialized LLM has never been more accessible.