The Next Wave of AutoML: From Automated Model Building to Integrated MLOps

Automated Machine Learning (AutoML) has rapidly evolved from a niche academic concept into a cornerstone of modern data science and AI development. Initially promising to democratize machine learning by automating the tedious tasks of model selection and hyperparameter tuning, today’s AutoML platforms are pushing the boundaries far beyond these original goals. The latest AutoML News isn’t just about finding the best algorithm anymore; it’s about creating a seamless, end-to-end workflow that integrates robust MLOps practices, real-time insights, and enhanced explainability. This shift transforms AutoML from a simple “model-finder” into a powerful engine for building, deploying, and maintaining reliable, production-grade AI systems. As organizations strive to scale their AI initiatives, these integrated AutoML solutions are becoming indispensable for accelerating development cycles, ensuring model reliability, and ultimately, driving tangible business value.

Understanding the Core of AutoML: Automating the ML Pipeline

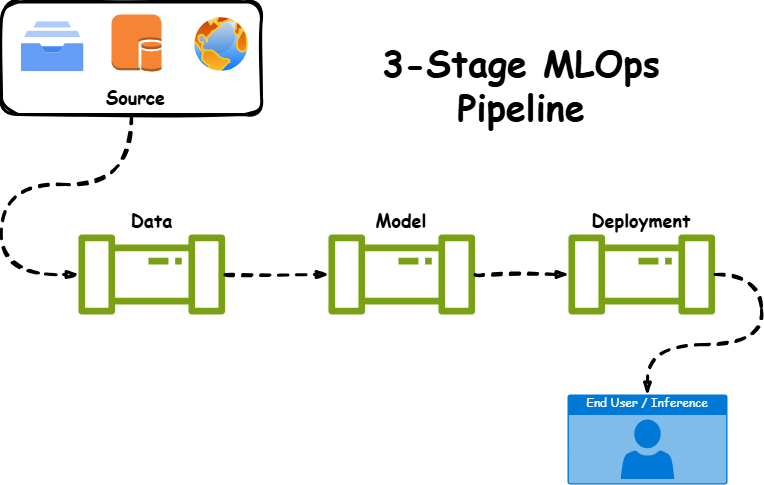

At its heart, AutoML addresses the Combined Algorithm Selection and Hyperparameter optimization (CASH) problem. A typical machine learning project involves a series of complex and often manual steps: data preprocessing, feature engineering, selecting an appropriate model architecture, and fine-tuning its hyperparameters. AutoML automates this entire pipeline, intelligently searching through a vast space of possibilities to discover the optimal configuration for a given dataset and task.

Key Components of an AutoML System

- Data Preprocessing and Feature Engineering: Automatically handles tasks like imputation for missing values, scaling numerical features, and encoding categorical variables. Advanced systems can even generate new, more predictive features from existing ones.

- Model Selection: Systematically evaluates a wide range of algorithms, from classic models like Logistic Regression and Random Forests to more complex ones like Gradient Boosting Machines (e.g., XGBoost, LightGBM) and even neural networks.

- Hyperparameter Optimization (HPO): Employs sophisticated search strategies like Bayesian optimization, genetic algorithms, or reinforcement learning to find the best set of hyperparameters for the chosen model, moving beyond simple grid or random searches.

Libraries like auto-sklearn, built on top of the popular scikit-learn library, provide a powerful, accessible entry point into the world of AutoML. It uses Bayesian optimization to efficiently explore the pipeline configuration space.

Let’s look at a practical example of using auto-sklearn for a classification task. This code snippet demonstrates how few lines of code can replace hundreds of lines of manual experimentation.

import autosklearn.classification

import sklearn.model_selection

import sklearn.datasets

import sklearn.metrics

# Load a sample dataset

X, y = sklearn.datasets.load_digits(return_X_y=True)

X_train, X_test, y_train, y_test = \

sklearn.model_selection.train_test_split(X, y, random_state=1)

# 1. Initialize the AutoML classifier

# We set a time limit for the search (in seconds) and for each individual model

automl = autosklearn.classification.AutoSklearnClassifier(

time_left_for_this_task=120, # Total time for the search

per_run_time_limit=30, # Time limit for a single model fit

n_jobs=-1 # Use all available CPU cores

)

# 2. Start the automated search process

automl.fit(X_train, y_train)

# 3. Evaluate the best model found

y_hat = automl.predict(X_test)

accuracy = sklearn.metrics.accuracy_score(y_test, y_hat)

# 4. Print the results and the final model pipeline

print(f"Accuracy score: {accuracy:.4f}")

print("\nFinal Model Pipeline Discovered:")

print(automl.show_models())This simple example encapsulates the power of AutoML. The system automatically discovered a high-performing pipeline, including preprocessing steps and a tuned model, within the specified time constraint.

Advanced Search Strategies: Genetic Programming with TPOT

While Bayesian optimization is effective, other AutoML frameworks employ different, equally powerful search strategies. TPOT (Tree-based Pipeline Optimization Tool) is a prime example, utilizing genetic programming to optimize machine learning pipelines. It represents pipelines as trees and uses evolutionary principles—like mutation and crossover—to “evolve” the best possible pipeline over multiple generations.

How Genetic Programming Works in TPOT

- Initialization: TPOT starts by creating a population of random ML pipelines.

- Evaluation: Each pipeline is evaluated on the training data using cross-validation, and a fitness score (e.g., accuracy or F1-score) is assigned.

- Selection: The best-performing pipelines are selected to “reproduce.”

- Crossover & Mutation: New pipelines (offspring) are created by combining parts of the parent pipelines (crossover) or making random changes to them (mutation).

- Repeat: This process repeats for many generations, with the overall population fitness improving over time until a stopping criterion (like a time limit or number of generations) is met.

This evolutionary approach can uncover novel and highly effective combinations of preprocessing steps and models that a human data scientist might not have considered. Recent PyTorch News and TensorFlow News also highlight the integration of such advanced HPO techniques directly into deep learning workflows.

Here’s how you can implement TPOT for a regression problem:

from tpot import TPOTRegressor

from sklearn.datasets import fetch_california_housing

from sklearn.model_selection import train_test_split

import numpy as np

# Load the dataset

housing = fetch_california_housing()

X_train, X_test, y_train, y_test = train_test_split(

housing.data, housing.target, train_size=0.75, test_size=0.25, random_state=42

)

# Initialize the TPOT Regressor

# generations: Number of iterations for the genetic algorithm

# population_size: Number of pipelines to maintain in each generation

# verbosity: How much information to print during the run

tpot = TPOTRegressor(

generations=5,

population_size=50,

verbosity=2,

random_state=42,

n_jobs=-1

)

# Start the optimization process

tpot.fit(X_train, y_train)

# Evaluate the final pipeline on the test set

print(f"Final R2 score: {tpot.score(X_test, y_test):.4f}")

# Export the Python code for the best pipeline found

tpot.export('tpot_california_housing_pipeline.py')A key advantage of TPOT is its ability to export the final pipeline as clean, readable Python code. This demystifies the process and allows for further customization, inspection, and integration into production systems, bridging the gap between automated discovery and manual refinement.

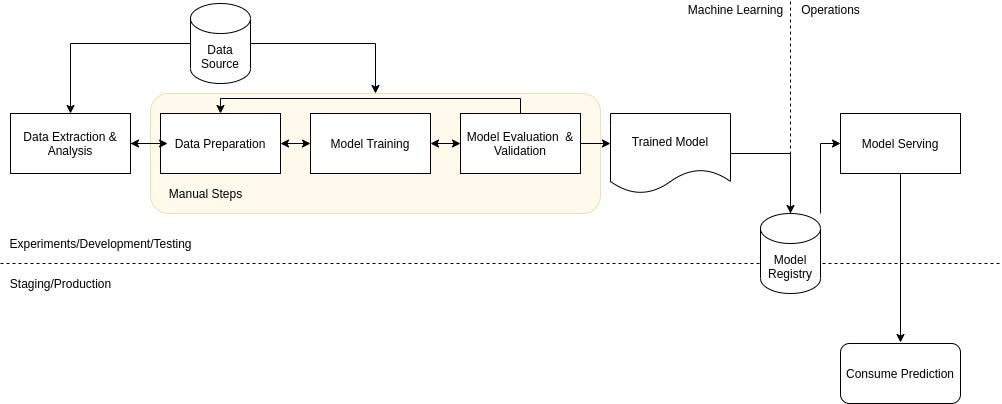

The MLOps Revolution: Integrating AutoML into Production Workflows

The most significant trend in recent AutoML News is its deep integration with the MLOps ecosystem. Modern AutoML is no longer a standalone tool for experimentation but a critical component of the end-to-end machine learning lifecycle. This integration focuses on reproducibility, scalability, monitoring, and governance.

Experiment Tracking and Reproducibility

AutoML systems can run thousands of trials. Without proper tracking, this process becomes a black box. Tools like MLflow, Weights & Biases, and Comet ML are essential for logging every experiment. They record the hyperparameters, code versions, performance metrics, and even the resulting model artifacts for each run. This ensures that results are reproducible and provides a clear audit trail.

Frameworks like Optuna, a popular hyperparameter optimization library, offer seamless integrations with these tracking tools. Let’s see how to combine Optuna’s efficient search with MLflow’s powerful tracking capabilities.

import optuna

import mlflow

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import cross_val_score

from sklearn.datasets import load_iris

# Load data

X, y = load_iris(return_X_y=True)

def objective(trial):

# Suggest hyperparameters for the trial

n_estimators = trial.suggest_int("n_estimators", 10, 200)

max_depth = trial.suggest_int("max_depth", 2, 32, log=True)

criterion = trial.suggest_categorical("criterion", ["gini", "entropy"])

# Create and evaluate the model

clf = RandomForestClassifier(

n_estimators=n_estimators,

max_depth=max_depth,

criterion=criterion,

random_state=42

)

# Use cross-validation for robust evaluation

score = cross_val_score(clf, X, y, n_jobs=-1, cv=3).mean()

return score

# Set up MLflow experiment

mlflow.set_experiment("Optuna_RandomForest_Optimization")

# Use MLflowCallback to automatically log trials

mlflow_callback = optuna.integration.MLflowCallback(

tracking_uri=mlflow.get_tracking_uri(),

metric_name="mean_cv_accuracy",

)

# Create and run the Optuna study

study = optuna.create_study(direction="maximize")

study.optimize(objective, n_trials=100, callbacks=[mlflow_callback])

print(f"Number of finished trials: {len(study.trials)}")

print("Best trial:")

trial = study.best_trial

print(f" Value: {trial.value}")

print(" Params: ")

for key, value in trial.params.items():

print(f" {key}: {value}")After running this script, you can launch the MLflow UI (`mlflow ui`) to see a detailed dashboard of all 100 trials, compare their parameters and metrics, and identify the best-performing model, all logged automatically.

Deployment and Monitoring on Cloud Platforms

Leading cloud providers have heavily invested in integrating AutoML into their MLOps platforms. AWS SageMaker, Google Cloud Vertex AI, and Azure Machine Learning offer sophisticated AutoML services (e.g., SageMaker Autopilot, Vertex AI AutoML) that not only find the best model but also provide one-click deployment options. Once deployed, these platforms offer tools for monitoring model drift and performance degradation in real-time, triggering alerts or retraining pipelines automatically when necessary. This closes the loop, creating a truly automated and self-sustaining AI system.

Best Practices and Strategic Considerations

While powerful, AutoML is not a magic wand. To leverage it effectively, it’s crucial to follow best practices and understand its limitations.

When to Use AutoML:

- Establishing Baselines: AutoML is excellent for quickly setting a strong performance baseline for a new problem. This baseline can inform whether more manual, resource-intensive modeling is justified.

- Democratizing AI: It empowers domain experts, analysts, and developers who may not have deep ML expertise to build and deploy models.

- Accelerating Prototyping: It drastically reduces the time required to iterate through different modeling approaches during the initial phases of a project.

- Augmenting Expert Data Scientists: It can handle the “grunt work” of HPO and model selection, freeing up senior data scientists to focus on more complex challenges like problem formulation and feature engineering.

Common Pitfalls to Avoid:

- “Garbage In, Garbage Out”: AutoML cannot fix fundamental data quality issues. The quality of the input data remains the single most important factor for success.

- Computational Cost: Searching a vast space of pipelines can be computationally expensive and time-consuming. It’s important to set realistic time or budget constraints.

- Overfitting the Validation Set: If the search is too exhaustive or the validation strategy is weak, the AutoML system might find a model that performs exceptionally well on the validation data but fails to generalize to new, unseen data.

–Ignoring Domain Knowledge: Blindly applying AutoML without incorporating domain expertise can lead to models that are statistically sound but practically useless or nonsensical.

The latest DataRobot News and developments from platforms like H2O.ai emphasize a “glass-box” approach, where explainability features (like SHAP values and feature importance plots) are built-in, helping to mitigate the risk of deploying a black-box model that cannot be trusted or debugged.

Conclusion: The Future of Intelligent Automation

The trajectory of AutoML is clear: it is moving from a standalone automation tool to a deeply integrated, intelligent component of the modern MLOps stack. The latest AutoML News consistently points towards systems that offer not just automated model building but also robust experiment tracking, one-click deployment, continuous monitoring, and built-in explainability. This evolution is crucial for scaling AI adoption within organizations, ensuring that models are not only high-performing but also reliable, transparent, and maintainable over their entire lifecycle.

For developers and data scientists, the key takeaway is that AutoML is not a replacement for human expertise but a powerful force multiplier. By automating the repetitive and time-consuming aspects of model development, it allows practitioners to focus on higher-value tasks: understanding the business problem, curating high-quality data, and interpreting model results to drive strategic decisions. As the technology continues to mature, we can expect even tighter integrations with tools across the data ecosystem, from data warehouses like Snowflake Cortex to vector databases like Pinecone and Milvus, further solidifying AutoML’s role as the engine of enterprise AI.