o3 Just Broke My Benchmarks (And Probably Yours Too)

I’ve been staring at evaluation curves for the better part of a decade. Usually, they creep up. You get a percent here, a percent there. We celebrate, we tweet, we go back to optimizing hyperparameters. It’s a grind.

Then OpenAI drops o3.

I honestly thought the leak was a typo. 96.7% on AIME? That’s not a “state-of-the-art improvement.” That’s basically solving the dataset. For context, most PhDs I know would struggle to hit those numbers without a coffee IV and a stack of reference books. And we aren’t just talking about math anymore.

The thing that actually kept me up last night wasn’t the math score. It was the coding metrics. Specifically, the Codeforces Elo.

The Grandmaster Threshold

Let’s talk about that 2727 Elo rating. If you aren’t deep into competitive programming, let me translate: that’s absurd. It’s firmly in Grandmaster territory (on Codeforces’ own scale, 2600 to 2999 is the “International Grandmaster” band). We aren’t talking about a model that can scrape together a decent Python script to parse a CSV file. We’re talking about an entity that can look at a convoluted algorithmic problem, identify the edge cases that would trip up a senior engineer, and implement an optimal solution in C++ while I’m still reading the prompt.
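If you want to see where 2727 lands for yourself, here’s a small Python sketch. It maps a rating to the Codeforces title bands as I understand them (the site adjusts these occasionally, so treat the table as an assumption worth double-checking) and computes the classic Elo expected score, the formula Codeforces’ rating system is modeled on rather than applies verbatim. `rank_title` and `elo_expected_score` are illustrative helpers, not part of any Codeforces API.

```python
# Codeforces title bands by rating lower bound (my reading of the site's table; may change)
RANKS = [
    (3000, "Legendary Grandmaster"),
    (2600, "International Grandmaster"),
    (2400, "Grandmaster"),
    (2300, "International Master"),
    (2100, "Master"),
    (1900, "Candidate Master"),
    (1600, "Expert"),
    (1400, "Specialist"),
    (1200, "Pupil"),
    (0, "Newbie"),
]


def rank_title(rating: int) -> str:
    """Return the first (highest) title whose lower bound the rating clears."""
    for lower_bound, title in RANKS:
        if rating >= lower_bound:
            return title
    return "Newbie"


def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Classic Elo expectation that player A outscores player B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


print(rank_title(2727))  # International Grandmaster
print(rank_title(2000), round(elo_expected_score(2727, 2000), 3))
```

By that formula, a 2727-rated player is expected to beat a 2000-rated Candidate Master roughly 98.5% of the time.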

For the last year, I’ve been building eval pipelines in Weights & Biases assuming that code generation was hitting a plateau. My assumption—and yeah, I was wrong—was that we’d see diminishing returns after the initial GPT-4 boom. The reasoning was that there’s only so much high-quality code on GitHub to train on.

But o3 isn’t just regurgitating training data. It’s reasoning. That’s the keyword we’ve been throwing around loosely for years, but now it feels tangible. The model is effectively running a simulation of the problem space before committing to tokens.

The ARC Paradox

Here’s where it gets weird. The Abstraction and Reasoning Corpus (ARC) has been the thorn in the side of LLMs for ages. It was supposed to be the “gotcha” benchmark—the one that proved neural nets were just stochastic parrots because they couldn’t handle novel, visual logic puzzles that a human child could solve.
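For anyone who hasn’t looked at ARC directly: each task is a small JSON file with a few demonstration pairs under `"train"` and held-out inputs under `"test"`, where every grid is a list of rows of integers 0 to 9 (one per colour). The task below is a made-up toy in that layout, solved by a hand-written candidate rule; the helper names are hypothetical. The key property is that an answer only counts if the predicted grid matches cell for cell.

```python
import json

# A made-up toy task in the ARC JSON layout: demonstration pairs plus a test pair.
# Each grid is a list of rows; each cell is an integer 0-9 standing for a colour.
TASK = json.loads("""
{
  "train": [
    {"input": [[1, 0, 0], [0, 2, 0]], "output": [[0, 0, 1], [0, 2, 0]]},
    {"input": [[3, 3, 0]],            "output": [[0, 3, 3]]}
  ],
  "test": [
    {"input": [[5, 0, 7], [0, 0, 4]], "output": [[7, 0, 5], [4, 0, 0]]}
  ]
}
""")

Grid = list[list[int]]


def mirror_rows(grid: Grid) -> Grid:
    """Candidate rule: reverse every row (a horizontal flip)."""
    return [list(reversed(row)) for row in grid]


def fits_train(rule, task) -> bool:
    """A rule is only plausible if it reproduces every demonstration exactly."""
    return all(rule(pair["input"]) == pair["output"] for pair in task["train"])


def score(rule, task) -> float:
    """Exact-match scoring: a test output counts only if every cell matches."""
    hits = sum(rule(pair["input"]) == pair["output"] for pair in task["test"])
    return hits / len(task["test"])


print(fits_train(mirror_rows, TASK), score(mirror_rows, TASK))  # True 1.0
```

The hard part, of course, is that nobody hands the solver `mirror_rows`; it has to infer the rule from two or three examples it has never seen before.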

o3 solving “unsolvable” ARC tasks changes my entire mental model of what we are dealing with.

It implies that the “test-time compute” paradigm, where the model spends more time thinking before answering, is actually working. It’s trading latency for intelligence in a way we haven’t seen before. The model isn’t just predicting the next token; as far as anyone outside OpenAI can tell, it’s traversing a decision tree, backtracking, and verifying its own logic internally.
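OpenAI hasn’t published how o3 spends its extra thinking time, so I won’t pretend to reproduce it. But the basic economics of test-time compute show up even in a toy generate-and-verify loop: a weak generator, a cheap checker, and a single knob for how many candidates you’re willing to sample. The sketch below (hypothetical names, deliberately dumb generator) guesses divisors of 91 at random and measures how often it finds one within a given budget.

```python
import random


def propose(rng: random.Random, n: int) -> int:
    """Stand-in 'generator': guess a candidate divisor uniformly at random."""
    return rng.randint(2, n - 1)


def verify(n: int, candidate: int) -> bool:
    """Cheap checker: is the candidate a nontrivial divisor of n?"""
    return n % candidate == 0


def solve_with_budget(n: int, budget: int, rng: random.Random):
    """Sample up to `budget` candidates; return the first one the checker accepts."""
    for _ in range(budget):
        candidate = propose(rng, n)
        if verify(n, candidate):
            return candidate
    return None  # budget exhausted without a verified answer


def success_rate(n: int, budget: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of independent attempts that succeed within the budget."""
    rng = random.Random(seed)
    solved = sum(solve_with_budget(n, budget, rng) is not None for _ in range(trials))
    return solved / trials


N = 91  # 7 * 13: only 2 of the 89 candidates in [2, 90] pass the checker
for budget in (1, 8, 64):
    print(budget, success_rate(N, budget))
```

Swap the random guesser for a model and the `n % candidate` check for unit tests or a proof checker and you get the shape of best-of-n sampling. That’s one well-known way to buy accuracy with latency, not necessarily the one o3 uses.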

Logging “Thoughts” in W&B

So, how do we actually track this? If you’re using W&B to log your LLM experiments, the standard completion metric is starting to feel inadequate. We need to measure the reasoning effort.

I’ve started splitting my token counts. There’s the output you see, and then there’s the invisible work happening behind the API curtain. If you’re building with these reasoning models, you need to log the latency relative to the complexity of the prompt, not just the output length.

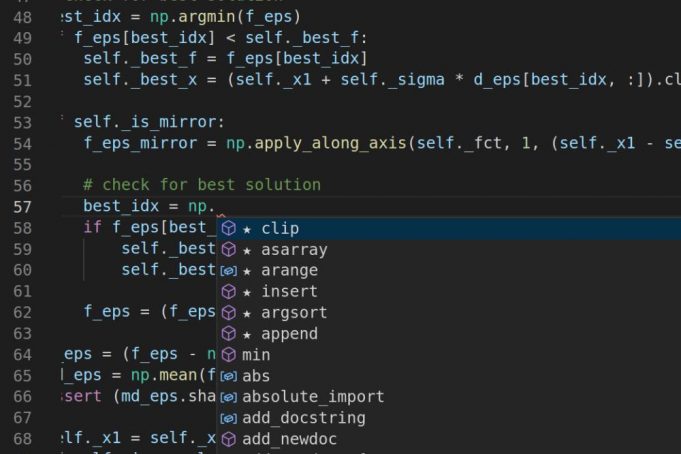

Here’s a quick snippet of how I’m structuring my traces now to capture this “thinking” overhead:

```python
import time

import wandb
from openai import OpenAI

# Initialize the W&B run that every trace is logged to
wandb.init(project="o3-reasoning-evals")

client = OpenAI()


def log_reasoning_trace(prompt, model="o3"):
    start_time = time.perf_counter()

    # The 'reasoning' happens inside this call; latency is our proxy for 'thought depth'
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )

    latency = time.perf_counter() - start_time
    usage = response.usage

    # Reasoning models report hidden reasoning tokens under completion_tokens_details;
    # fall back to 0 if the field is missing for this model or SDK version
    details = getattr(usage, "completion_tokens_details", None)
    reasoning_tokens = getattr(details, "reasoning_tokens", None) or 0

    # Log the trace with specific metadata for reasoning cost
    wandb.log({
        "prompt": prompt,
        "response": response.choices[0].message.content,
        "latency_seconds": latency,
        "total_tokens": usage.total_tokens,
        "reasoning_tokens": reasoning_tokens,
        # completion_tokens includes the hidden reasoning, so subtract it for the visible part
        "visible_completion_tokens": usage.completion_tokens - reasoning_tokens,
        "tokens_per_second": usage.total_tokens / latency,
    })
    return response


# Example usage
complex_math_problem = "Prove that for any prime p > 3, p^2 - 1 is divisible by 24."
log_reasoning_trace(complex_math_problem)
```

Why bother logging latency if you aren’t paying for time? Because with o3, latency is a signal of how hard the model worked on the problem. In my early tests, I’ve noticed a correlation: when the model takes longer on a Codeforces problem, it’s usually correct. When it answers instantly? It’s often hallucinating a simple heuristic. That’s the opposite of what we saw with older models.

The “Solved” Problem

There is a downside to all this. A 96.7% benchmark score is boring.

It means AIME is effectively dead as a discriminator for top-tier models. We’re going to need harder tests. I’m already seeing people scramble to create “uncontaminated” evaluation sets because o3 has likely seen everything on the public internet. If your evaluation strategy relies on public datasets, you aren’t testing the model anymore; you’re testing its memory.
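One cheap way to get a feel for contamination is n-gram overlap: what fraction of an eval item’s word n-grams already appear in text you know is public (forum posts, solution blogs, a scrape you control). You can’t inspect o3’s training data, so this only tells you whether an item is exposed, not whether the model actually saw it. The window size `n=8` and the helper names are my own assumptions, not a standard.

```python
import re


def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lower-cased word n-grams; punctuation is dropped so reformatting can't hide overlap."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(item: str, corpus_docs: list[str], n: int = 8) -> float:
    """Fraction of the item's n-grams that also appear somewhere in the reference corpus."""
    item_grams = ngrams(item, n)
    if not item_grams:
        return 0.0  # item shorter than n words: nothing to compare
    corpus_grams = set().union(*(ngrams(doc, n) for doc in corpus_docs))
    return len(item_grams & corpus_grams) / len(item_grams)


# Toy reference corpus: one "public" post that quotes an eval item verbatim
corpus = ["Forum post: prove that for any prime p > 3, p^2 - 1 is divisible by 24. Hint: factor it."]
item = "Prove that for any prime p > 3, p^2 - 1 is divisible by 24."
print(overlap_ratio(item, corpus))  # → 1.0
```

A ratio near 1.0 means the item is sitting on the open web more or less verbatim, which makes a high score on it much less interesting.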

I’m shifting my focus to custom, private evaluation sets. Synthetically generated logic puzzles that don’t exist in the training distribution. That’s the only way to know if this thing is actually reasoning or just really, really good at pattern matching against a massive library of solved problems.
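Here’s a minimal sketch of what “synthetically generated” can look like: a seeded generator of modular-arithmetic puzzles whose answer is known by construction and unique (the modulus is prime and the coefficient is nonzero, so exactly one solution exists). These puzzles are far too easy for o3; the point is the pattern (fresh items from a private seed, exact-match grading), not the difficulty. `ModPuzzle`, `make_eval_set`, `grade`, and the prompt wording are all hypothetical.

```python
import random
from dataclasses import dataclass

PRIMES = [101, 103, 107, 109, 113, 127]


@dataclass(frozen=True)
class ModPuzzle:
    a: int
    b: int
    c: int
    m: int
    answer: int  # hidden; never shown to the model

    @property
    def prompt(self) -> str:
        return (f"Find the integer x with 0 <= x < {self.m} such that "
                f"{self.a}*x + {self.b} is congruent to {self.c} modulo {self.m}. "
                "Reply with x only.")


def make_puzzle(rng: random.Random) -> ModPuzzle:
    """Pick the answer first, then derive the equation, so the key is known by construction."""
    m = rng.choice(PRIMES)       # prime modulus: every a in [2, m-1] is invertible
    a = rng.randint(2, m - 1)
    b = rng.randint(0, m - 1)
    x = rng.randint(0, m - 1)
    return ModPuzzle(a, b, (a * x + b) % m, m, x)


def make_eval_set(size: int, seed: int) -> list[ModPuzzle]:
    """Same seed gives the same private set; keep the seed out of anything you publish."""
    rng = random.Random(seed)
    return [make_puzzle(rng) for _ in range(size)]


def grade(puzzle: ModPuzzle, reply: str) -> bool:
    """Exact-match grading after trimming whitespace."""
    return reply.strip() == str(puzzle.answer)


eval_set = make_eval_set(3, seed=2024)
print(eval_set[0].prompt)
```

To make it genuinely hard you’d swap the modular equation for something richer, but the seeded, answer-first structure carries over.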

But hey, if o3 can debug my race conditions without me having to explain the entire codebase three times? I’ll take it. Even if it makes my old leaderboards look ridiculous.