Beyond the Notebook: Mastering MLOps with Comet ML – Latest Features and Integrations

In the rapidly evolving landscape of artificial intelligence, the journey from a promising model in a Jupyter notebook to a robust, production-ready application is fraught with challenges. Reproducibility, collaboration, and scalability are no longer afterthoughts but core requirements for any serious machine learning project. This is where Machine Learning Operations (MLOps) comes in, providing the principles and tools to streamline the entire ML lifecycle. Among the leading platforms in this space, Comet ML has emerged as a comprehensive solution for experiment tracking, model management, and production monitoring. Keeping up with its latest features and integrations helps teams build reliable and scalable AI systems.

This article provides a deep dive into the Comet ML platform. We will explore its fundamental concepts, walk through practical code examples for integrating it into your daily workflow, discuss advanced features for production environments, and examine its place within the broader MLOps ecosystem. Whether you’re a data scientist tired of losing track of model versions or an MLOps engineer building a resilient AI pipeline, understanding how to leverage Comet can fundamentally change the way you work.

Understanding the Core Pillars of Comet ML

At its heart, Comet is an MLOps platform designed to give individuals and teams a centralized, organized, and automated way to manage the machine learning lifecycle. It’s not just about logging metrics; it’s about creating a complete, auditable record of your work, from initial data exploration to post-deployment monitoring. This philosophy is built on several key pillars that address the most common pain points in ML development.

Experiment Tracking: The Foundation of Reproducibility

The most fundamental feature of Comet is its experiment tracking. An “experiment” in Comet is a single run of your code, and the platform captures a wealth of information about that run without requiring significant code instrumentation. This is a clear advantage over manual tracking or simpler tools, and it provides a level of detail comparable to platforms such as MLflow and Weights & Biases.

When you run your training script with Comet integrated, it automatically logs:

- Hyperparameters: The exact configuration used for the run.

- Metrics: Any metrics you define, like accuracy, loss, or F1-score, logged at each step or epoch.

- Code: A snapshot of your Git repository and commit hash, ensuring you can always recreate the exact code state.

- System Metrics: CPU, GPU, and RAM usage throughout the experiment, helping you identify performance bottlenecks.

- Dependencies: A list of installed packages from your environment (similar to a `requirements.txt`), which helps you rebuild the same environment later.

This automatic logging works with the major frameworks. Whether you use TensorFlow, PyTorch, or the JAX ecosystem, Comet’s auto-loggers can capture training dynamics with minimal effort.

```python
import comet_ml
import torch
import torch.nn as nn

# Initialize the Comet Experiment
# Replace with your API key and project details
experiment = comet_ml.Experiment(
    api_key="YOUR_API_KEY",
    project_name="comet-pytorch-example",
    workspace="YOUR_WORKSPACE",
)

# Define Hyperparameters
hyper_params = {
    "learning_rate": 0.01,
    "epochs": 10,
    "batch_size": 64,
    "optimizer": "Adam",
}
experiment.log_parameters(hyper_params)

# Dummy data and model for demonstration
model = nn.Linear(10, 2)
optimizer = torch.optim.Adam(model.parameters(), lr=hyper_params["learning_rate"])
criterion = nn.CrossEntropyLoss()
X_train = torch.randn(1000, 10)
y_train = torch.randint(0, 2, (1000,))

# Training Loop
model.train()
for epoch in range(hyper_params["epochs"]):
    optimizer.zero_grad()
    outputs = model(X_train)
    loss = criterion(outputs, y_train)

    # Log metrics to Comet
    experiment.log_metric("loss", loss.item(), step=epoch)

    loss.backward()
    optimizer.step()
    print(f"Epoch {epoch+1}/{hyper_params['epochs']}, Loss: {loss.item():.4f}")

# End the experiment to ensure all data is uploaded
experiment.end()
```

Artifacts and Datasets: Versioning Beyond Code

Modern ML is not just about code; it’s about data, models, and other assets. Comet Artifacts provide a robust way to version and store any file or directory associated with your project. You can save trained model weights, data preprocessing pipelines, evaluation charts, or even entire datasets. Each artifact is versioned, and Comet tracks its lineage, showing you exactly which experiment produced a specific model artifact or which version of a dataset was used in a training run. This is crucial for debugging and maintaining compliance, especially when you build on large pretrained models from sources such as Hugging Face or OpenAI.
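As a rough illustration of dataset versioning, the sketch below fingerprints a data directory and then logs its files as a Comet Artifact. The helper names `build_manifest` and `log_dataset_artifact` and the artifact name `imdb-subset` are assumptions made for this example. The Comet calls (`Artifact`, `Artifact.add`, `Experiment.log_artifact`) are from the `comet_ml` SDK, but it is worth checking their arguments against the version you have installed.

```python
import hashlib
from pathlib import Path


def build_manifest(data_dir: str) -> dict:
    """Map each file's relative path to the SHA-256 digest of its contents."""
    root = Path(data_dir)
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            manifest[path.relative_to(root).as_posix()] = digest
    return manifest


def log_dataset_artifact(experiment, data_dir: str, name: str = "imdb-subset") -> dict:
    """Upload every file in data_dir as one versioned Artifact.

    Needs the comet_ml package and valid credentials, so the import is
    done lazily and build_manifest() stays usable offline.
    """
    from comet_ml import Artifact

    manifest = build_manifest(data_dir)
    artifact = Artifact(
        name=name,  # hypothetical artifact name
        artifact_type="dataset",
        metadata={"num_files": len(manifest), "sha256": manifest},
    )
    for relative_path in manifest:
        # Keep the directory layout inside the artifact via logical_path.
        artifact.add(str(Path(data_dir) / relative_path), logical_path=relative_path)
    experiment.log_artifact(artifact)
    return manifest
```

With a live `experiment`, calling `log_dataset_artifact(experiment, "data/")` would upload the files. Storing the digests in the metadata is a design choice for this sketch: it makes it easy to confirm later that two artifact versions really contain different bytes.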

Getting Hands-On: A Practical Guide to Integrating Comet ML

Integrating Comet into an existing project is straightforward. The platform is designed to be minimally intrusive, allowing you to add powerful MLOps capabilities without a major refactor of your codebase. Let’s walk through a more realistic example using a popular library.

Training and Logging a Hugging Face Model

The Hugging Face Transformers ecosystem is one of the most active in AI. Comet has a native integration with it, making it simple to track fine-tuning jobs for open models, including those published by providers such as Mistral AI or Cohere.

First, ensure you have the necessary libraries installed:

```shell
pip install comet_ml transformers datasets torch scikit-learn
```

Next, you can set up your training script. Comet’s auto-logging will handle most of the work. Once an experiment is initialized and `report_to="comet_ml"` is set in the training arguments, the Hugging Face Trainer reports its parameters and metrics to Comet.

```python
import comet_ml
from datasets import load_dataset
from transformers import (
    AutoModelForSequenceClassification,
    AutoTokenizer,
    Trainer,
    TrainingArguments,
)
from sklearn.metrics import accuracy_score, precision_recall_fscore_support

# Start a Comet Experiment
experiment = comet_ml.Experiment(
    api_key="YOUR_API_KEY",
    project_name="hf-sentiment-analysis",
    workspace="YOUR_WORKSPACE",
)

# 1. Load dataset and tokenizer
dataset = load_dataset("imdb", split="train[:1%]")  # Use a small subset for demo
tokenizer = AutoTokenizer.from_pretrained("distilbert-base-uncased")


def tokenize_function(examples):
    return tokenizer(examples["text"], padding="max_length", truncation=True)


tokenized_datasets = dataset.map(tokenize_function, batched=True)
small_train_dataset = tokenized_datasets.shuffle(seed=42)

# 2. Load model
model = AutoModelForSequenceClassification.from_pretrained("distilbert-base-uncased", num_labels=2)


# 3. Define metrics computation (only called when the Trainer is given an eval_dataset)
def compute_metrics(pred):
    labels = pred.label_ids
    preds = pred.predictions.argmax(-1)
    precision, recall, f1, _ = precision_recall_fscore_support(labels, preds, average="binary")
    acc = accuracy_score(labels, preds)
    return {"accuracy": acc, "f1": f1, "precision": precision, "recall": recall}


# 4. Set up Training Arguments
# Comet will automatically log these arguments
training_args = TrainingArguments(
    output_dir="./results",
    num_train_epochs=2,
    per_device_train_batch_size=8,
    logging_dir="./logs",
    logging_steps=10,
    report_to="comet_ml",  # Key integration point
)

# 5. Initialize Trainer
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=small_train_dataset,
    compute_metrics=compute_metrics,
)

# 6. Train the model
trainer.train()

# 7. Save the final weights, then log the output directory as a model
trainer.save_model("./results")
experiment.log_model("distilbert-imdb-sentiment", "./results")
experiment.end()
print("Training complete and logged to Comet!")
```

Visualizing Results in the Comet UI

Once the script finishes, you can navigate to the Comet UI to see your results. You’ll find a detailed dashboard for your experiment with panels for metrics, hyperparameters, system resource usage, and logged artifacts. The real power comes from the project view, where you can compare dozens of experiments side-by-side. You can filter, sort, and group runs to quickly identify which hyperparameter combinations led to the best performance, a feature that is essential for any serious R&D effort, whether you’re using fast.ai or custom training loops.
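The same filter, sort, and group operations can be pictured in a few lines of plain Python. This is only a sketch of the idea behind the project view, not Comet code: the `runs` list and the `best_per_group` helper are hypothetical, and the metric values are placeholders rather than real results.

```python
# Hypothetical run summaries; the numbers are placeholders, not real results.
runs = [
    {"name": "run-a", "optimizer": "Adam", "learning_rate": 0.01, "val_loss": 0.42},
    {"name": "run-b", "optimizer": "Adam", "learning_rate": 0.001, "val_loss": 0.35},
    {"name": "run-c", "optimizer": "SGD", "learning_rate": 0.01, "val_loss": 0.51},
    {"name": "run-d", "optimizer": "SGD", "learning_rate": 0.1, "val_loss": 0.47},
]


def best_per_group(runs, group_key, metric):
    """Return, for each value of group_key, the run with the lowest metric."""
    best = {}
    for run in runs:
        key = run[group_key]
        if key not in best or run[metric] < best[key][metric]:
            best[key] = run
    return best


# Filter to one optimizer, then sort ascending by validation loss.
adam_runs = sorted(
    (r for r in runs if r["optimizer"] == "Adam"),
    key=lambda r: r["val_loss"],
)
# Group by optimizer and keep the best run in each group.
winners = best_per_group(runs, "optimizer", "val_loss")

print([r["name"] for r in adam_runs])
print({group: run["name"] for group, run in winners.items()})
```

In practice the UI does this interactively across every logged parameter and metric, so a script like this is mainly useful for reasoning about what a given filter or grouping will show.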

From Experiment to Production: Advanced Comet ML Features

A mature MLOps platform goes beyond tracking experiments. It must bridge the gap between research and production. Comet provides two key features to facilitate this transition: a Model Registry and Production Monitoring capabilities.

The Comet Model Registry: A Single Source of Truth

The Model Registry is a central hub for managing the lifecycle of your trained models. After identifying a promising model from your experiments, you can “register” it. This moves it from a simple experiment artifact to a versioned, stage-managed asset.

Key features include:

- Versioning: Automatically assign versions (e.g., v1.0.0, v1.1.0) to your models.

- Staging: Assign stages like “Development,” “Staging,” or “Production” to model versions, creating a clear and auditable promotion pipeline.

- Metadata: Attach rich metadata, including links back to the training experiment, performance metrics, and notes for your team.

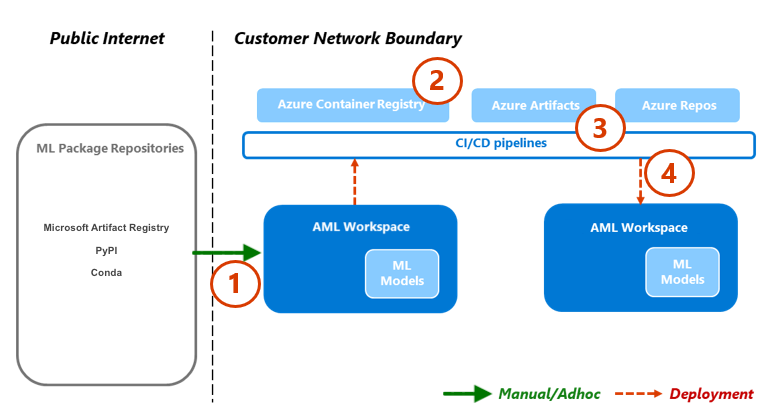

This ensures that when your CI/CD pipeline needs to deploy a model, it pulls a specific, approved version from the registry, not just a file from a shared drive. This is critical for deploying models to platforms like AWS SageMaker, Azure Machine Learning, or Vertex AI managed endpoints.

```python
import comet_ml

# Assume you have a completed experiment with a logged model.
# You can get the experiment key from the Comet UI.
api = comet_ml.API(api_key="YOUR_API_KEY")
experiment = api.get_experiment_by_key("EXPERIMENT_KEY_FROM_PREVIOUS_RUN")

# Find the models that were logged to the experiment with log_model()
logged_models = experiment.get_model_names()
if not logged_models:
    raise ValueError("No model found in the specified experiment.")

logged_model_name = logged_models[0]  # Assuming the first model is the one we want
model_name = "sentiment-classifier-prod"

# Register the logged model in the registry as version 1.0.0.
# Method and argument names follow recent comet_ml releases;
# check them against the SDK version you have installed.
experiment.register_model(
    logged_model_name,
    version="1.0.0",
    registry_name=model_name,
    description="First production-ready version of the sentiment classifier.",
)

# Promote this version, for example to "Production".
# The labels available depend on your workspace settings, and older
# SDK releases express promotion through stages rather than a status.
registered_model = api.get_model("YOUR_WORKSPACE", model_name)
registered_model.set_status("1.0.0", "Production")

print(f"Model '{model_name}' version 1.0.0 has been registered and promoted to Production.")
```

Production Monitoring and Model Observability

Once a model is deployed, the work isn’t over. You need to monitor its performance in the real world to detect issues like data drift or concept drift. Comet provides tools to monitor these deployed models. You can log inference data from your production environment back to Comet, allowing you to create monitors that compare the statistical distribution of live data against the training data. If a significant drift is detected, Comet can trigger alerts, notifying your team to investigate and possibly retrain the model. This proactive approach to model maintenance is a hallmark of a mature MLOps strategy, in line with what enterprise platforms such as DataRobot and Snowflake Cortex offer.
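To make "comparing the distribution of live data against the training data" concrete, here is a minimal sketch of one widely used drift statistic, the Population Stability Index (PSI). It is a generic, standard-library illustration and not Comet's implementation; the function name `psi`, the bin count, and the synthetic Gaussian samples are all assumptions for this example.

```python
import math
import random


def psi(reference, live, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp so values outside the reference range land in the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor at eps so empty bins do not cause log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref_p, live_p = proportions(reference), proportions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))


# Synthetic data standing in for a training feature and two live samples.
random.seed(0)
train = [random.gauss(0.0, 1.0) for _ in range(5000)]
same = [random.gauss(0.0, 1.0) for _ in range(5000)]
shifted = [random.gauss(1.0, 1.0) for _ in range(5000)]

print(f"PSI, same distribution: {psi(train, same):.3f}")
print(f"PSI, shifted mean:      {psi(train, shifted):.3f}")
```

A common rule of thumb treats a PSI below about 0.1 as stable and above about 0.25 as significant drift, but those cut-offs are conventions rather than guarantees and should be tuned per feature before they drive alerts.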

Best Practices and the MLOps Ecosystem

To get the most out of Comet, it’s important to adopt some best practices and understand how it fits into the larger toolchain, which also includes inference optimization tools such as NVIDIA TensorRT.

Tips for Effective Experiment Management

- Use Tags: Tag your experiments with meaningful labels (e.g., `baseline`, `data-augmentation`, `hyperparameter-tuning`) for easy filtering.

- Log Configuration Files: In addition to hyperparameters, log your entire configuration file (e.g., YAML or JSON) as an asset. This provides a complete, human-readable record of the setup.

- Leverage Custom Panels: Create custom visualizations in the Comet UI to display metrics that are most important for your project, such as ROC curves or feature importance plots.

- Automate with CI/CD: Integrate Comet into your CI/CD pipeline to automatically run training and evaluation jobs, register successful models, and trigger deployments.
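The tips above can be combined in a small end-of-run helper, sketched here under some assumptions. The function names `should_register` and `finalize_run`, the tag names, and the threshold scheme are hypothetical; `experiment` is expected to be a Comet `Experiment`, whose `log_asset` and `add_tags` methods are part of the `comet_ml` SDK.

```python
import json
from pathlib import Path


def should_register(metrics: dict, thresholds: dict) -> bool:
    """CI gate: every thresholded metric must be present and meet its minimum."""
    return all(
        metrics.get(name, float("-inf")) >= minimum
        for name, minimum in thresholds.items()
    )


def finalize_run(experiment, config: dict, metrics: dict, thresholds: dict,
                 config_path: str = "config.json") -> bool:
    """Attach the full config, tag the run, and report whether it passed the gate."""
    # Write the configuration to disk and log it as a human-readable asset.
    Path(config_path).write_text(json.dumps(config, indent=2))
    experiment.log_asset(config_path)

    # Tag the run so it is easy to filter in the project view.
    passed = should_register(metrics, thresholds)
    experiment.add_tags(["ci", "passed-gate" if passed else "failed-gate"])
    return passed
```

A CI job could call `finalize_run(...)` after evaluation and only proceed to model registration when it returns `True`. Treating a missing metric as a failure is a deliberate choice in this sketch, so that a broken evaluation step cannot silently promote a model.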

Comet in the Wild: Key Integrations

Comet’s power is amplified by its broad ecosystem of integrations. It’s not a monolithic platform but a connective hub. You can use it with hyperparameter optimization libraries like Optuna to automatically track hundreds of trials. For large-scale data processing and training, it integrates with distributed computing frameworks like Ray and Apache Spark MLlib. For building interactive demos of your registered models, you can pull them from the registry into apps built with Gradio or Streamlit. In the rapidly growing field of Large Language Models (LLMs), Comet can track experiments from frameworks like LangChain and LlamaIndex, providing a clear view of the performance of your prompt engineering and RAG (Retrieval-Augmented Generation) systems, which often rely on vector databases like Pinecone, Milvus, or Chroma.

Conclusion

The modern AI landscape, with its constant stream of releases from labs such as Anthropic and Stability AI, demands more than just clever algorithms; it requires disciplined, scalable, and reproducible engineering practices. Platforms like Comet ML are no longer a luxury but a necessity for teams looking to build reliable AI products. By providing a unified solution for experiment tracking, model versioning, and production monitoring, Comet empowers data scientists and MLOps engineers to collaborate effectively and accelerate the path from idea to impact.

By adopting the practices outlined in this article, you can move beyond messy spreadsheets and disconnected scripts to a streamlined, professional workflow. The key takeaway is that MLOps is a journey, and tools like Comet provide a powerful vehicle. We encourage you to explore the documentation, start a free project, and see how centralizing your ML lifecycle can unlock new levels of productivity and innovation for your team.