Supercharging LLM Inference: A Deep Dive into TensorRT-LLM’s MultiShot AllReduce and NVSwitch

The pace of innovation in generative AI has been staggering. Models from research labs like Google DeepMind and Meta AI, and from companies such as OpenAI, Anthropic, and Mistral AI, are growing larger and more capable, creating enormous demand for high-performance, low-latency inference. For organizations deploying these models at scale, the primary challenge often shifts from model development to efficient operation in production. A key bottleneck is communication. When a large language model (LLM) is too large for a single GPU and must be distributed across several (for example, with a technique known as tensor parallelism), the time spent synchronizing data between these processors can dominate the total inference time.

Reducing this communication overhead is one of the most important remaining levers for real-time performance, and recent work from NVIDIA addresses it directly. MultiShot AllReduce, a technique in TensorRT-LLM, works with high-bandwidth hardware interconnects like NVSwitch to restructure how GPUs communicate during AllReduce, a critical collective operation in tensor parallelism. The result is higher throughput and lower latency. This article explores how the technology works, why it helps, and how developers can use it in their generative AI workloads, from PyTorch-based model development to production deployments on Triton Inference Server.

The Foundation: Tensor Parallelism and the AllReduce Bottleneck

To appreciate the significance of this optimization, we must first understand the problem it solves. Modern LLMs, often sourced from hubs like Hugging Face, can have hundreds of billions or even trillions of parameters, far exceeding the memory capacity of a single GPU. This necessitates model parallelism, in which the model itself is split across multiple devices.
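To make "far exceeding" concrete, here is a minimal back-of-the-envelope sketch that estimates the memory needed just to hold a model's weights and the minimum number of GPUs that implies. It counts weights only (the KV cache and activations need more), assumes 80 GB per GPU (as on an 80 GB H100 or A100), and uses illustrative parameter counts rather than specific models.

```python
import math

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_weights(num_params: float, dtype: str = "fp16",
                         gpu_memory_gb: float = 80.0) -> int:
    """Lower bound on GPUs needed just to hold the weights (ignores KV cache)."""
    return math.ceil(weight_memory_gb(num_params, dtype) / gpu_memory_gb)


if __name__ == "__main__":
    for label, params in [("7B", 7e9), ("70B", 70e9), ("405B", 405e9)]:
        gb = weight_memory_gb(params, "fp16")
        print(f"{label}: {gb:.0f} GB of FP16 weights -> at least "
              f"{min_gpus_for_weights(params)} x 80 GB GPUs")
```

For a 70B-parameter model, the FP16 weights alone need 140 GB, so at least two 80 GB GPUs are required before any KV cache is allocated.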

What is Tensor Parallelism?

Tensor parallelism is a specific form of model parallelism that partitions individual weight matrices (tensors) of a model across several GPUs. For example, in a Transformer block’s linear layer, the weight matrix can be split column-wise or row-wise. With a column-wise split, each GPU computes a slice of the output. With a row-wise split, each GPU computes a full-size partial result from its slice of the weights, and these partial results must be summed across all GPUs to obtain the correct output. This approach is highly effective for scaling computation but introduces a critical dependency on inter-GPU communication. Frameworks such as Megatron-LM and DeepSpeed popularized these techniques, which are now integral to both training and inference.
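The difference between the two splits can be checked without any GPUs. The following plain-Python sketch simulates both for Y = X·W, with each list element standing in for one GPU’s shard. It shows why the row-wise split is the one that needs an AllReduce. In Megatron-style Transformer blocks, a column-parallel layer is typically followed by a row-parallel one, so that only one AllReduce is needed per attention or MLP sub-block.

```python
"""Plain-Python simulation of tensor parallelism for Y = X @ W."""


def matmul(a, b):
    """Naive matrix multiply for small list-of-lists matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def column_parallel(x, w, world_size):
    """Each rank owns a block of W's columns and computes a slice of Y."""
    n = len(w[0]) // world_size  # assumes world_size divides the column count
    slices = [matmul(x, [row[r * n:(r + 1) * n] for row in w])
              for r in range(world_size)]
    # Concatenating the slices (an AllGather) rebuilds Y; no summation needed.
    return [sum((s[i] for s in slices), []) for i in range(len(x))]


def row_parallel(x, w, world_size):
    """Each rank owns a block of W's rows and the matching columns of X."""
    k = len(w) // world_size  # assumes world_size divides the row count
    partials = [matmul([row[r * k:(r + 1) * k] for row in x], w[r * k:(r + 1) * k])
                for r in range(world_size)]
    # Every rank holds a full-size partial sum; adding them element-wise is
    # exactly what AllReduce with a SUM reduction does.
    return [[sum(p[i][j] for p in partials) for j in range(len(w[0]))]
            for i in range(len(x))]


if __name__ == "__main__":
    X = [[1, 2, 3, 4], [5, 6, 7, 8]]
    W = [[1, 0, 2, 1], [0, 1, 1, 3], [2, 1, 0, 1], [1, 2, 1, 0]]
    reference = matmul(X, W)
    print(column_parallel(X, W, 2) == reference, row_parallel(X, W, 2) == reference)
```

Both functions reproduce the single-device result, but only the row-parallel path ends with a summation across ranks, which is the step that AllReduce performs on real hardware.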

The AllReduce Communication Primitive

The aggregation of partial results in tensor parallelism is typically handled by a collective communication primitive called AllReduce. Conceptually, the AllReduce operation performs two steps:

- Reduce: It gathers data from all participating processes (GPUs) and applies a reduction operation (e.g., sum, average, max) to combine them into a single result.

- Broadcast: It distributes this final result back to all the participating processes.

In the context of a forward pass in an LLM, each GPU calculates a partial output. An AllReduce (typically a summation) is then performed to ensure every GPU has the complete, final output before proceeding to the next layer. While conceptually simple, this synchronization step can become a major performance bottleneck, as all GPUs must wait for the communication to complete.
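A quick calculation shows why this step matters so much during generation. The sketch assumes a Megatron-style layout with two AllReduces per Transformer layer in the forward pass (one after the attention output projection, one after the MLP). It uses Llama 2 70B’s published shape (80 layers, hidden size 8192) as the example. The per-AllReduce latency is a hypothetical placeholder, not a measurement.

```python
def allreduces_per_token(num_layers: int, allreduces_per_layer: int = 2) -> int:
    """Sequential AllReduces in one forward pass, i.e. per generated token."""
    return num_layers * allreduces_per_layer


def message_bytes(hidden_size: int, tokens_in_step: int, bytes_per_elem: int = 2) -> int:
    """Payload of one AllReduce: one hidden-state vector per token (FP16 by default)."""
    return hidden_size * tokens_in_step * bytes_per_elem


if __name__ == "__main__":
    layers, hidden = 80, 8192        # Llama 2 70B: 80 layers, hidden size 8192
    count = allreduces_per_token(layers)
    size = message_bytes(hidden, tokens_in_step=1)
    latency_us = 10.0                # HYPOTHETICAL latency of one small AllReduce
    print(f"{count} AllReduces of {size // 1024} KiB each per token; "
          f"~{count * latency_us / 1000:.1f} ms of communication per token "
          f"at {latency_us:.0f} us each")
```

With these inputs, each generated token requires 160 sequential AllReduces of 16 KiB each. At the hypothetical 10 µs apiece, that adds up to 1.6 ms per token spent on communication. Messages this small are latency-bound rather than bandwidth-bound, so the number of communication steps matters more than raw link speed.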

Here is a minimal example of AllReduce in distributed PyTorch, the same primitive that many higher-level frameworks build on.

```python
import os

import torch
import torch.distributed as dist


def setup(rank, world_size):
    """Initializes the distributed environment (one process per GPU)."""
    # torchrun already exports MASTER_ADDR and MASTER_PORT; these defaults
    # only apply if the script is launched some other way on a single node.
    os.environ.setdefault("MASTER_ADDR", "localhost")
    os.environ.setdefault("MASTER_PORT", "12355")
    torch.cuda.set_device(rank)
    dist.init_process_group("nccl", rank=rank, world_size=world_size)


def all_reduce_example(rank, world_size):
    """Demonstrates a simple AllReduce operation."""
    setup(rank, world_size)

    # Each GPU creates a tensor holding rank + 1.
    # In a real model, this would be a partial result of a computation.
    tensor = torch.tensor([rank + 1.0]).cuda(rank)
    print(f"Rank {rank}: Before AllReduce, tensor is {tensor.item()}")

    # The AllReduce operation sums the tensors from all GPUs
    # and distributes the result back to everyone.
    dist.all_reduce(tensor, op=dist.ReduceOp.SUM)
    print(f"Rank {rank}: After AllReduce, tensor is {tensor.item()}")

    dist.destroy_process_group()


if __name__ == "__main__":
    # torchrun starts one process per GPU and sets RANK and WORLD_SIZE.
    # On a single node, the global rank equals the local GPU index.
    all_reduce_example(int(os.environ["RANK"]), int(os.environ["WORLD_SIZE"]))

# For a world_size of 4, the final tensor on each GPU is 1+2+3+4 = 10.
# To run this on a single node with 4 GPUs (code saved in your_script_name.py):
#   torchrun --nproc_per_node=4 your_script_name.py
```

As this example illustrates, every rank depends on data from every other rank. With the default `async_op=False`, any later work on the same CUDA stream, such as the next layer’s computation, cannot start until the data from all other GPUs has been received, summed, and broadcast back. This is the latency we aim to minimize.

The Breakthrough: Introducing MultiShot AllReduce with TensorRT-LLM

Traditional ring-based AllReduce implementations pass data around a logical ring of GPUs. For N GPUs, they complete the operation in 2(N-1) sequential communication steps. The ring uses bandwidth efficiently for large messages, but during inference the per-layer messages are often small, so the fixed latency of each step dominates. TensorRT-LLM introduces a different approach with MultiShot AllReduce, designed specifically for NVSwitch-based systems.

The Limitation of Ring AllReduce

With high-speed interconnects like NVLink and NVSwitch, the bandwidth between GPUs is immense. For the small messages typical of token-by-token generation, bandwidth is therefore rarely the constraint. The constraint is the number of dependent steps. Each of the 2(N-1) ring steps pays a fixed latency, and the next layer cannot begin until the last step completes. On an 8-GPU node, that is 14 sequential steps per AllReduce, repeated for every AllReduce in every layer. While those steps run, the GPU’s powerful Streaming Multiprocessors (SMs) are largely idle, waiting for the communication to finish.
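Simulating the textbook ring AllReduce in plain Python makes its step count concrete. The following sketch illustrates the classic algorithm, not NCCL’s actual implementation. It models each GPU as a list split into N chunks, runs a ReduceScatter phase and then an AllGather phase around the ring, and counts the sequential steps.

```python
def ring_allreduce(buffers):
    """Sum-AllReduce over len(buffers) simulated GPUs using the ring algorithm.

    Assumes every buffer has the same length, divisible by the number of GPUs.
    Returns (reduced buffers, number of sequential communication steps).
    """
    n = len(buffers)
    data = [list(b) for b in buffers]
    size = len(data[0]) // n
    steps = 0

    def chunk(c):
        """Element indices belonging to chunk c."""
        return range(c * size, (c + 1) * size)

    def run_step(chunk_for_rank, accumulate):
        # Every rank r sends one chunk to its ring neighbor r + 1. Payloads are
        # copied first so that all sends within a step happen "simultaneously".
        sends = [(r, chunk_for_rank(r), [data[r][i] for i in chunk(chunk_for_rank(r))])
                 for r in range(n)]
        for r, c, payload in sends:
            dst = data[(r + 1) % n]
            for i, value in zip(chunk(c), payload):
                dst[i] = dst[i] + value if accumulate else value

    # Phase 1, ReduceScatter: after n - 1 steps, rank r holds the fully
    # reduced chunk (r + 1) % n.
    for s in range(n - 1):
        run_step(lambda r: (r - s) % n, accumulate=True)
        steps += 1

    # Phase 2, AllGather: the reduced chunks travel around the ring for
    # another n - 1 steps, overwriting stale copies.
    for s in range(n - 1):
        run_step(lambda r: (r + 1 - s) % n, accumulate=False)
        steps += 1

    return data, steps


if __name__ == "__main__":
    gpus = 4
    buffers = [[10 * r + i for i in range(8)] for r in range(gpus)]
    reduced, steps = ring_allreduce(buffers)
    print(reduced[0], steps)
```

For 4 GPUs the function reports 6 steps, and for 8 GPUs it reports 14. The step count grows linearly with the number of GPUs, and every step must finish before the next one starts.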

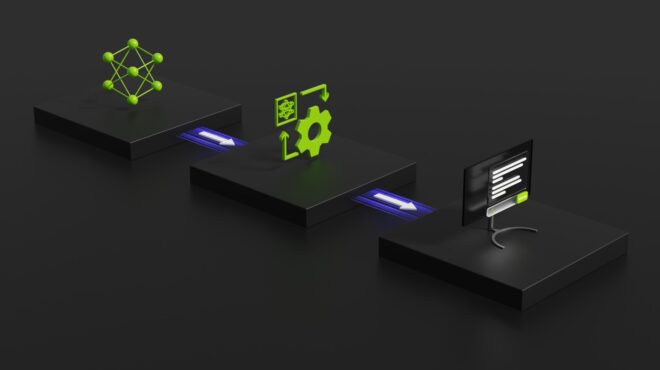

How MultiShot AllReduce Changes the Game

MultiShot AllReduce changes this dynamic by restructuring the operation into two communication steps, or “shots,” regardless of the number of GPUs:

- ReduceScatter: Each GPU is made responsible for one slice of the result tensor. Every GPU sends the corresponding slice of its partial result to that slice’s owner, which sums the contributions it receives.

- AllGather with multicast: Each GPU sends its fully reduced slice once, and NVSwitch’s multicast capability replicates it to every other GPU in the node.

Cutting the sequential steps from 2(N-1) to two yields the following key benefits:

- Reduced Latency: Fewer dependent steps mean less fixed per-step latency. This matters most for the small messages of low-latency inference.

- Improved GPU Utilization: Because each AllReduce finishes sooner, the SMs spend less time idle between layers, and overall hardware utilization increases.

- Optimized for NVSwitch: This technique depends on NVSwitch, which provides all-to-all, non-blocking bandwidth between all GPUs in a server node and can replicate a single transfer to multiple GPUs in hardware. Without switch multicast, the AllGather step would require each GPU to send its slice N-1 times.
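MultiShot’s two-step structure can be modeled in the same way. This is a simplified plain-Python sketch of the idea, not TensorRT-LLM’s kernel. It assumes a ReduceScatter in which every GPU sends each slice of its buffer directly to that slice’s owner. An AllGather follows, in which each owner sends its reduced slice once and the switch replicates it to every GPU, as NVSwitch multicast does in hardware. The sketch returns the reduced buffers, the number of sequential steps, and the elements each GPU transmits.

```python
def multishot_allreduce(buffers):
    """Sum-AllReduce modeled as ReduceScatter + multicast AllGather.

    Assumes every buffer has the same length, divisible by the number of GPUs.
    Returns (reduced buffers, sequential steps, elements sent per GPU).
    """
    n = len(buffers)
    size = len(buffers[0]) // n
    steps = 0

    # Shot 1, ReduceScatter: rank r owns slice r. Every rank sends slice r of
    # its buffer straight to rank r (all transfers in parallel over the switch),
    # and the owner sums what it receives.
    owned = [[sum(b[i] for b in buffers) for i in range(r * size, (r + 1) * size)]
             for r in range(n)]
    sent_per_gpu = (n - 1) * size  # each GPU ships its n - 1 non-owned slices
    steps += 1

    # Shot 2, AllGather via multicast: each owner sends its reduced slice once;
    # the switch replicates it, so the sender pays for a single copy.
    gathered = [x for r in range(n) for x in owned[r]]
    result = [list(gathered) for _ in range(n)]
    sent_per_gpu += size
    steps += 1

    return result, steps, sent_per_gpu


if __name__ == "__main__":
    buffers = [[10 * r + i for i in range(8)] for r in range(4)]
    reduced, steps, sent = multishot_allreduce(buffers)
    print(reduced[0], steps, sent)
```

The step count is 2 for any number of GPUs, versus 2(N-1) for a ring. In this model each GPU transmits exactly one buffer’s worth of elements. The first shot relies on NVSwitch’s non-blocking all-to-all bandwidth to carry the N-1 slices from every GPU at once.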

Enabling this feature is handled within TensorRT-LLM, NVIDIA’s open-source library for compiling and optimizing LLMs for inference. Developers don’t need to rewrite their models: the choice of AllReduce implementation is made inside the library rather than in model code.

```python
# Conceptual sketch only. enable_multishot_allreduce() and the plugin_config
# keyword argument below are HYPOTHETICAL placeholders, not documented
# TensorRT-LLM APIs; they only show *where* such a choice would be made.
# The actual API may differ.
from tensorrt_llm.builder import Builder
from tensorrt_llm.plugin import PluginConfig

# Assume 'network' is a TensorRT-LLM network definition
# and 'builder_config' is a builder configuration object.

plugin_config = PluginConfig()

# HYPOTHETICAL flag: would instruct the builder to use the MultiShot AllReduce
# plugin instead of the default implementation where beneficial.
plugin_config.enable_multishot_allreduce()

# The builder would then use this configuration when compiling the model
# engine, abstracting the complexity away from the user.
builder = Builder()
engine = builder.build_engine(network, builder_config, plugin_config=plugin_config)

# The resulting engine, when run on a multi-GPU system, would use
# MultiShot AllReduce for its tensor-parallel regions.
```

Practical Implementation and Ecosystem Integration

Putting this technology into practice involves using the TensorRT-LLM toolkit to build an optimized engine and then deploying it with a high-performance serving framework such as Triton Inference Server. This workflow is common for production-grade LLM deployments, including on platforms like Amazon SageMaker and Azure Machine Learning.

Building an Engine with TensorRT-LLM

The first step is to convert a pre-trained model from a framework like PyTorch (often from the Hugging Face Transformers library) into a TensorRT engine. TensorRT-LLM provides both a Python API and a command-line tool, `trtllm-build`, for this purpose. During this ahead-of-time (AOT) compilation, TensorRT-LLM applies numerous optimizations, including kernel fusion, quantization, and advanced communication strategies such as MultiShot AllReduce.

Together, the checkpoint conversion script and `trtllm-build` let you specify the desired precision (e.g., FP16, INT8), the parallelism settings, and engine features such as the paged KV cache. No extra flag is typically needed for MultiShot: TensorRT-LLM selects the AllReduce implementation automatically when tensor parallelism is used on compatible hardware, such as an HGX H100 system.

```bash
# Example: build a Llama 2 7B engine for 2-way tensor parallelism.
# TensorRT-LLM selects kernels and communication strategies automatically,
# which on supported NVSwitch hardware can include MultiShot AllReduce.
# Flag names can change between TensorRT-LLM releases.

# 1. Download the model weights from Hugging Face
#    (requires git-lfs and an account that has been granted access to Llama 2)
git clone https://huggingface.co/meta-llama/Llama-2-7b-hf

# 2. Convert the weights to the TensorRT-LLM checkpoint format.
#    The script path is relative to a TensorRT-LLM source checkout and
#    varies between releases.
python tensorrt_llm/examples/llama/convert_checkpoint.py --model_dir ./Llama-2-7b-hf \
    --output_dir ./tllm_checkpoint_2gpu \
    --dtype float16 \
    --tp_size 2

# 3. Build the TensorRT engine (the tensor-parallel size is read from the checkpoint)
trtllm-build --checkpoint_dir ./tllm_checkpoint_2gpu \
    --output_dir ./tllm_engine_2gpu \
    --gemm_plugin float16 \
    --log_level verbose \
    --paged_kv_cache enable \
    --remove_input_padding enable

# The resulting engine in ./tllm_engine_2gpu is built for 2-GPU inference.
```
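Because the tensor-parallel size is fixed when the checkpoint is converted, a quick sanity check before building or serving can catch a mismatch with the GPUs actually available. This is a minimal sketch that rests on an assumption: the converted checkpoint’s config.json stores the parallel layout in a "mapping" object with "tp_size" and "pp_size" keys. Verify the field names against your TensorRT-LLM release.

```python
import json
from pathlib import Path


def checkpoint_parallelism(checkpoint_dir):
    """Return (tp_size, pp_size) from a converted checkpoint's config.json.

    ASSUMPTION: the config holds a "mapping" object with "tp_size" and
    "pp_size" keys; missing keys default to 1.
    """
    config = json.loads((Path(checkpoint_dir) / "config.json").read_text())
    mapping = config.get("mapping", {})
    return mapping.get("tp_size", 1), mapping.get("pp_size", 1)


def check_gpu_count(checkpoint_dir, available_gpus):
    """Return the GPUs the checkpoint needs, or raise if too few are available."""
    tp, pp = checkpoint_parallelism(checkpoint_dir)
    needed = tp * pp
    if needed > available_gpus:
        raise ValueError(f"checkpoint needs {needed} GPUs (tp={tp}, pp={pp}), "
                         f"but only {available_gpus} are available")
    return needed
```

Under that assumed layout, calling `check_gpu_count("./tllm_checkpoint_2gpu", 8)` on an 8-GPU node would return 2.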

Serving with Triton Inference Server

Once the optimized engine is built, it needs to be served. Triton Inference Server is NVIDIA’s open-source inference server and integrates with TensorRT-LLM through a dedicated backend. It provides a production-ready solution for deploying models, with features like dynamic batching, concurrent model execution, and multi-model serving.

Triton’s TensorRT-LLM backend handles the complexity of loading the multi-GPU engine and managing inference requests. From a client’s perspective, they are simply sending a request to a single endpoint; Triton and the backend manage the tensor parallelism, including the high-speed AllReduce operations, transparently.
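Because Triton hides the tensor-parallel engine behind one endpoint, a client needs only a plain HTTP request. This minimal sketch uses only the Python standard library and queries Triton’s generate endpoint. It assumes a server on localhost:8000 serving a model named "ensemble" that accepts the text_input, max_tokens, bad_words, and stop_words fields, as in the TensorRT-LLM backend’s example model repository. Model and field names depend on your deployment.

```python
import json
import urllib.request


def build_generate_request(prompt, max_tokens=64, host="localhost:8000",
                           model="ensemble"):
    """Build a POST request for Triton's HTTP generate endpoint.

    ASSUMPTION: the deployed model accepts these text fields, as in the
    TensorRT-LLM backend's example 'ensemble' model; adjust for your repo.
    """
    payload = {
        "text_input": prompt,
        "max_tokens": max_tokens,
        "bad_words": "",
        "stop_words": "",
    }
    return urllib.request.Request(
        f"http://{host}/v2/models/{model}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, **kwargs):
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_generate_request(prompt, **kwargs),
                                timeout=60) as response:
        return json.loads(response.read())["text_output"]


# Usage (requires a running server):
#   generate("What is tensor parallelism?", max_tokens=32)
```

The client never sees how many GPUs serve the model. Tensor parallelism and its AllReduce traffic stay entirely inside the server.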

Performance Gains, Best Practices, and Broader Impact

The combination of TensorRT-LLM’s software optimizations and NVSwitch’s hardware capabilities delivers substantial, measurable performance improvements.

Quantifying the Performance Uplift

MultiShot AllReduce can accelerate the AllReduce operation itself by up to 3x on NVSwitch-equipped systems. That gain carries through to lower end-to-end latency and higher throughput for the entire LLM, to a degree that depends on the share of each forward pass spent in AllReduce. For latency-sensitive applications this speedup matters. Examples include chatbots, code generation assistants, and RAG (Retrieval-Augmented Generation) systems built with tools like LangChain or LlamaIndex and vector databases like Pinecone or Milvus. The result is faster responses for users and more concurrent users on the same hardware, which reduces operational costs.
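How much of an up-to-3x AllReduce speedup appears end to end depends on how much of the latency the AllReduce accounts for. The following minimal sketch applies Amdahl’s law to that question. The communication shares in the example loop are hypothetical, not measurements.

```python
def end_to_end_speedup(comm_fraction: float, allreduce_speedup: float) -> float:
    """Amdahl's law: overall speedup when only the AllReduce share gets faster.

    comm_fraction: share of end-to-end latency spent in AllReduce (0..1).
    allreduce_speedup: how much faster the AllReduce itself becomes (e.g. 3.0).
    """
    if not 0.0 <= comm_fraction <= 1.0:
        raise ValueError("comm_fraction must be between 0 and 1")
    return 1.0 / ((1.0 - comm_fraction) + comm_fraction / allreduce_speedup)


if __name__ == "__main__":
    for share in (0.1, 0.3, 0.5):  # HYPOTHETICAL communication shares
        print(f"AllReduce = {share:.0%} of latency -> "
              f"{end_to_end_speedup(share, 3.0):.2f}x overall")
```

If a hypothetical 30% of latency is spent in AllReduce, a 3x faster AllReduce gives 1.25x end to end. This is why measuring the communication share of your own workload comes first.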

Best Practices for Maximizing Performance

To get the most out of this technology, developers should consider the following:

- Hardware Matters: The full benefits of MultiShot AllReduce are realized on server platforms with NVSwitch, such as NVIDIA DGX and HGX systems, which provide the necessary all-to-all GPU bandwidth.

- Profile Your Workload: Use profiling tools like NVIDIA Nsight Systems to verify that inter-GPU communication is a significant bottleneck in your workload. This will confirm that optimizations targeting AllReduce will have a meaningful impact.

- Stay Updated: The AI software stack is evolving rapidly. Regularly updating to the latest versions of the NVIDIA drivers, CUDA, TensorRT-LLM, and Triton is crucial for accessing the latest performance optimizations and features. The same holds across the broader ecosystem, including TensorFlow and JAX, because these components evolve together.

- Combine Optimizations: For maximum performance, combine MultiShot AllReduce with other techniques such as FP8 or INT8 quantization, paged attention (a paged KV cache), and in-flight batching. A holistic optimization approach yields the best results.

Conclusion and Next Steps

The introduction of MultiShot AllReduce in TensorRT-LLM is a significant step toward solving the multi-GPU communication bottleneck for large-scale AI inference. It is a powerful example of co-design, where software is built to exploit the full potential of the underlying hardware, in this case NVSwitch. By cutting the latency of the AllReduce that every tensor-parallel layer waits on, this technology translates into faster, more efficient, and more scalable generative AI deployments.

For developers and MLOps engineers working in the rapidly expanding world of generative AI, this is an actionable and impactful development. The key takeaway is that performance optimization is no longer just about the model architecture or quantization; it is increasingly about mastering the art of distributed computation and communication. As you build your next AI service, we encourage you to explore the TensorRT-LLM library, experiment with its advanced features on appropriate hardware, and leverage tools like Triton Inference Server to bring these highly optimized models into production. The future of AI is not just about bigger models, but smarter, faster, and more efficient inference.