Azure AI in the Spotlight: A Technical Guide to Building Next-Gen Applications

The artificial intelligence landscape is undergoing a seismic shift, characterized by rapid innovation, fierce competition, and an explosion of powerful new models. In this dynamic environment, cloud platforms are the primary battlegrounds where enterprises build, deploy, and scale their AI solutions. While Amazon Web Services and Google Cloud Platform are formidable competitors, Microsoft Azure has carved out a strategic and powerful position, largely through its deep integration with OpenAI and its comprehensive, enterprise-focused Azure AI platform. The latest Azure AI News isn’t just about new features; it’s about a cohesive strategy to become the definitive platform for both proprietary and open-source AI development.

This article provides a comprehensive technical deep dive into the Azure AI ecosystem. We will explore its core components, walk through practical code examples for building sophisticated applications like Retrieval-Augmented Generation (RAG) systems, and delve into advanced MLOps and optimization techniques. Whether you’re a data scientist, an AI engineer, or a developer looking to integrate intelligence into your applications, this guide will provide actionable insights for leveraging the full power of Azure AI.

The Azure AI Ecosystem: A Unified Platform for Modern AI

Microsoft’s strategy revolves around creating a unified and accessible platform that caters to a wide spectrum of AI development needs. At the heart of this is the Azure AI Studio, a collaborative web-based portal that serves as the command center for all AI projects. It amalgamates tools that were previously separate, providing a seamless experience from data preparation to model deployment and monitoring.

Core Components: From Studio to Services

The Azure AI platform is built on several key pillars:

- Azure OpenAI Service: This is arguably the platform’s crown jewel, providing managed access to OpenAI’s most powerful models, including the GPT-4 family and DALL-E 3. The key value proposition is the addition of Azure’s enterprise-grade security, compliance, and private networking, making it suitable for organizations with stringent data privacy requirements. New models and capabilities announced by OpenAI typically arrive on Azure shortly after their public release.

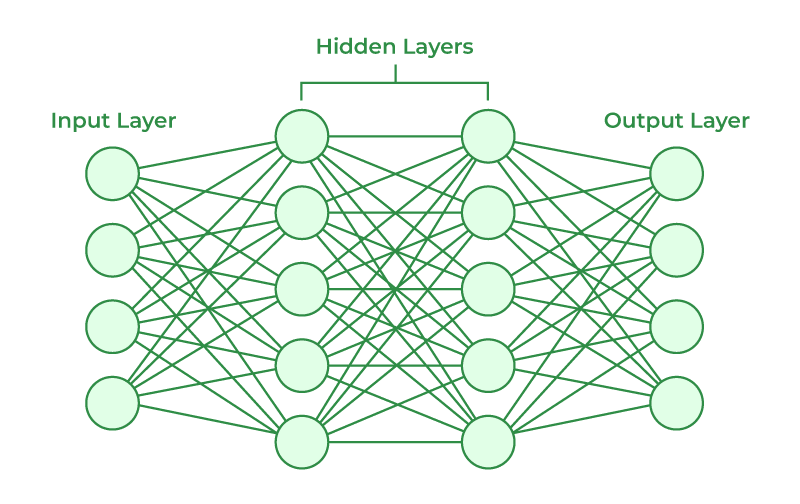

- Azure Machine Learning (AML): For teams that need to build, train, and manage custom models, Azure Machine Learning provides a robust MLOps platform. It supports classic machine learning and deep learning workloads, with first-class support for popular frameworks like PyTorch and TensorFlow. This is where you’d go for fine-tuning a model or training one from scratch. The latest Azure Machine Learning News often highlights new integrations and automation features.

- Model Catalog: Recognizing the industry’s multi-model future, Azure AI Studio features an extensive model catalog. This goes far beyond OpenAI, offering access to leading models from providers like Anthropic, Cohere, and Mistral AI. New models from Anthropic and Mistral AI, which feature prominently in recent AI news, are made available on Azure, often as endpoints that can be deployed with a few clicks. The catalog also includes a large collection of open-source models from sources like Hugging Face, including popular families such as Meta AI’s Llama.

Integrating Open-Source Powerhouses

Azure’s strength lies in its embrace of the open-source ecosystem. Developers are not locked into a specific toolchain. You can bring your existing skills and preferred libraries, such as Hugging Face Transformers or Sentence Transformers, and leverage Azure’s scalable compute and MLOps capabilities. For instance, you can use the Azure ML SDK to orchestrate training jobs that use standard PyTorch or TensorFlow code. This flexibility is critical for teams who want the power of the cloud without sacrificing control over their development process. Here’s a simple example of how to connect to the Azure OpenAI service using the Python SDK to get a chat completion.

```python
# Make sure to install the library: pip install openai
import os

from openai import AzureOpenAI

# Best practice: Use environment variables for secrets
AZURE_OPENAI_ENDPOINT = os.environ.get("AZURE_OPENAI_ENDPOINT")
AZURE_OPENAI_API_KEY = os.environ.get("AZURE_OPENAI_API_KEY")
API_VERSION = "2024-05-01-preview"

# This is the name of your deployment in Azure AI Studio
DEPLOYMENT_NAME = "gpt-4o"

# Initialize the Azure OpenAI client
client = AzureOpenAI(
    azure_endpoint=AZURE_OPENAI_ENDPOINT,
    api_key=AZURE_OPENAI_API_KEY,
    api_version=API_VERSION,
)

# Create a chat completion request
try:
    response = client.chat.completions.create(
        model=DEPLOYMENT_NAME,
        messages=[
            {"role": "system", "content": "You are a helpful AI assistant."},
            {"role": "user", "content": "What is the difference between Azure AI Studio and Azure Machine Learning?"},
        ],
        max_tokens=200,
        temperature=0.7,
    )
    print("AI Assistant Response:")
    print(response.choices[0].message.content)
except Exception as e:
    print(f"An error occurred: {e}")
```
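Under the hood, the SDK call above is an HTTPS POST to a URL scoped to your deployment. The following sketch builds the equivalent request with only the standard library but does not send it. That can help when you are debugging proxies, private endpoints, or raw HTTP traffic. It assumes key-based auth via the `api-key` header and the same `2024-05-01-preview` API version. The endpoint and key in the usage lines are placeholders, and the helper name `build_chat_request` is hypothetical.

```python
import json
import urllib.request


def build_chat_request(endpoint: str, deployment: str, api_key: str,
                       api_version: str, messages: list,
                       max_tokens: int = 200,
                       temperature: float = 0.7) -> urllib.request.Request:
    """Build (but do not send) the REST request behind client.chat.completions.create."""
    # Azure OpenAI routes by deployment name and takes the API version as a query parameter.
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={api_version}")
    body = json.dumps({
        "messages": messages,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }).encode("utf-8")
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


req = build_chat_request(
    endpoint="https://my-resource.openai.azure.com/",  # placeholder resource name
    deployment="gpt-4o",
    api_key="<your-key>",  # placeholder; never hard-code real keys
    api_version="2024-05-01-preview",
    messages=[{"role": "user", "content": "Hello"}],
)
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req); the JSON response contains a "choices" list.
```

In practice the SDK is still the better choice, because it handles retries, typed responses, and token-based auth for you. This sketch only makes the wire format visible.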

Building Your First AI Application on Azure: A Practical Guide

Let’s move from theory to practice by building a Retrieval-Augmented Generation (RAG) application. RAG is a powerful technique that enhances Large Language Models (LLMs) by providing them with external knowledge from a private data source, reducing hallucinations and enabling them to answer questions about specific, up-to-date information.
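Without any framework, a RAG request comes down to two steps. First, retrieve the chunks whose embeddings are most similar to the question. Second, paste those chunks into the prompt as context. The sketch below shows both steps using a toy bag-of-words "embedding" and cosine similarity. That toy stands in for a real embedding model, such as an Azure OpenAI embeddings deployment, and the sample documents are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    q = embed(question)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


PROMPT = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, just say that you don't know, don't try to make up an answer.

{context}

Question: {question}
Helpful Answer:"""

docs = [  # hypothetical knowledge base
    "The Contoso widget 2.0 adds Bluetooth pairing and a longer battery life.",
    "Contoso offices are closed on public holidays.",
    "Widget firmware updates are delivered over the air.",
]
question = "What does the new Contoso widget add?"
context = "\n\n".join(retrieve(question, docs))  # "stuff" the top chunks into the prompt
print(PROMPT.format(context=context, question=question))
```

Only the prompt that reaches the LLM changes; the model itself is untouched. That is why RAG can answer questions about private or recent data without retraining.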

Setting Up Your Environment and Deploying a Model

Before writing code, you need to set up the necessary resources in Azure. This typically involves:

- Creating an Azure AI Hub Resource: This acts as a top-level container for your AI projects.

- Creating an Azure AI Project: Within the Hub, a project organizes all your assets, including data, models, and endpoints.

- Deploying a Model: From the Azure AI Studio’s model catalog, you can deploy a model like GPT-4o. This creates a real-time endpoint with a specific URL and key for API access.

- Setting up Azure AI Search: For our RAG application, we need a vector database. Azure AI Search (formerly Cognitive Search) is a fully managed service that provides powerful vector search capabilities. While other vector databases like Pinecone, Milvus, or Weaviate can be hosted on Azure VMs, Azure AI Search offers seamless integration with the rest of the AI stack.
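You can create the index yourself rather than letting a framework do it. If you do, the definition needs a vector field whose `dimensions` match the embedding model (1536 for `text-embedding-ada-002`). It also needs a vector search profile that points at an algorithm such as HNSW. The sketch below builds that definition as a JSON body for the Azure AI Search REST API (`PUT {endpoint}/indexes/{name}?api-version=2023-11-01`).

It rests on a few assumptions:
- It targets that REST API version, so verify the property names against the REST reference for the version you use.
- It uses the field names `id`, `content`, `content_vector`, and `metadata`, which are LangChain's `AzureSearch` defaults.
- The profile and algorithm names are arbitrary.

```python
import json

EMBEDDING_DIMENSIONS = 1536  # text-embedding-ada-002 returns 1536-dimensional vectors


def build_index_definition(name: str, dimensions: int = EMBEDDING_DIMENSIONS) -> dict:
    """Index body for Azure AI Search (assumed REST API version 2023-11-01)."""
    return {
        "name": name,
        "fields": [
            {"name": "id", "type": "Edm.String", "key": True, "filterable": True},
            {"name": "content", "type": "Edm.String", "searchable": True},
            {
                "name": "content_vector",
                "type": "Collection(Edm.Single)",
                "searchable": True,
                "dimensions": dimensions,
                # Must match a profile name under vectorSearch.profiles
                "vectorSearchProfile": "default-profile",
            },
            {"name": "metadata", "type": "Edm.String", "searchable": True},
        ],
        "vectorSearch": {
            "algorithms": [
                {"name": "default-hnsw", "kind": "hnsw",
                 "hnswParameters": {"metric": "cosine"}},
            ],
            # A profile ties vector fields to an algorithm configuration
            "profiles": [{"name": "default-profile", "algorithm": "default-hnsw"}],
        },
    }


index = build_index_definition("product-docs-index")
print(json.dumps(index, indent=2))
```

Keeping the dimensions in one constant matters: if you later switch embedding models, a mismatch between the model's output size and the field's `dimensions` causes uploads to fail.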

Developing a RAG Application with LangChain and Azure AI Search

Frameworks like LangChain and LlamaIndex simplify building complex LLM applications. They provide abstractions for chaining together different components like models, data sources, and prompts. The latest LangChain News often includes improved integrations with cloud services like Azure. The following example demonstrates a simplified RAG flow using LangChain with Azure AI services.

```python
# Install necessary libraries (RetrievalQA is a legacy chain; this targets the LangChain 0.2/0.3 APIs):
# pip install "langchain<1.0" langchain-openai langchain-community azure-search-documents azure-identity
import os

from langchain.chains import RetrievalQA
from langchain_community.vectorstores.azuresearch import AzureSearch
from langchain_core.prompts import PromptTemplate
from langchain_openai import AzureChatOpenAI, AzureOpenAIEmbeddings

# --- 1. Configuration ---
AZURE_OPENAI_ENDPOINT = os.environ.get("AZURE_OPENAI_ENDPOINT")
AZURE_OPENAI_API_KEY = os.environ.get("AZURE_OPENAI_API_KEY")
API_VERSION = "2024-05-01-preview"
AZURE_OPENAI_DEPLOYMENT = "gpt-4o"
AZURE_EMBEDDINGS_DEPLOYMENT = "text-embedding-ada-002"
AZURE_SEARCH_ENDPOINT = os.environ.get("AZURE_SEARCH_ENDPOINT")
AZURE_SEARCH_KEY = os.environ.get("AZURE_SEARCH_KEY")
AZURE_SEARCH_INDEX_NAME = "product-docs-index"

# --- 2. Initialize Embeddings and LLM ---
embeddings = AzureOpenAIEmbeddings(
    azure_deployment=AZURE_EMBEDDINGS_DEPLOYMENT,
    api_key=AZURE_OPENAI_API_KEY,
    azure_endpoint=AZURE_OPENAI_ENDPOINT,
    api_version=API_VERSION,  # pass explicitly rather than relying on OPENAI_API_VERSION
)

llm = AzureChatOpenAI(
    azure_deployment=AZURE_OPENAI_DEPLOYMENT,
    api_key=AZURE_OPENAI_API_KEY,
    azure_endpoint=AZURE_OPENAI_ENDPOINT,
    api_version=API_VERSION,
    temperature=0.1,
)

# --- 3. Connect to Azure AI Search as the Vector Store ---
# This assumes you have already created an index and populated it with data.
# Populating the index would involve loading documents, splitting them,
# generating embeddings, and adding them to the AzureSearch index.
vector_store = AzureSearch(
    azure_search_endpoint=AZURE_SEARCH_ENDPOINT,
    azure_search_key=AZURE_SEARCH_KEY,
    index_name=AZURE_SEARCH_INDEX_NAME,
    embedding_function=embeddings.embed_query,
)

# --- 4. Create a RAG Chain ---
# Define a custom prompt to guide the LLM
prompt_template = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, just say that you don't know, don't try to make up an answer.

{context}

Question: {question}
Helpful Answer:"""

QA_PROMPT = PromptTemplate(
    template=prompt_template, input_variables=["context", "question"]
)

# Create the RetrievalQA chain; the default "stuff" chain type inserts
# the retrieved documents into {context}
qa_chain = RetrievalQA.from_chain_type(
    llm,
    retriever=vector_store.as_retriever(),
    chain_type_kwargs={"prompt": QA_PROMPT},
    return_source_documents=True,
)

# --- 5. Ask a Question ---
question = "What are the new features in the latest Contoso widget?"
result = qa_chain.invoke({"query": question})

print(f"Question: {result['query']}\n")
print(f"Answer: {result['result']}\n")
print("Source Documents:")
for doc in result["source_documents"]:
    print(f"- {doc.page_content[:100]}...")
```
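The comments in step 3 of the code above note that populating the index means loading documents, splitting them, embedding them, and uploading them. A common way to split is a fixed-size window with some overlap, so that a sentence cut at one boundary still appears whole in a neighboring chunk. The sketch below shows that idea with character counts. The sizes are illustrative, and the helper name `chunk_text` is hypothetical. LangChain's own splitters, such as `RecursiveCharacterTextSplitter`, additionally try to break on paragraph and sentence boundaries.

```python
def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 200) -> list[str]:
    """Split text into fixed-size character windows that overlap by `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


# The chunks could then be uploaded with vector_store.add_texts(chunks),
# which embeds them using the store's embedding function.
doc = "abcdefghij" * 5  # 50 characters of sample text
for chunk in chunk_text(doc, chunk_size=20, overlap=5):
    print(len(chunk), chunk)
```

Chunk size is a trade-off. Larger chunks carry more context per hit but dilute the similarity signal and consume more of the prompt budget, so it is worth evaluating a few sizes on your own documents.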

Advanced Techniques: MLOps, Fine-Tuning, and Optimization

For organizations moving beyond simple API calls, Azure offers a sophisticated suite of tools for managing the entire machine learning lifecycle (MLOps), from experimentation to production monitoring.

MLOps with Azure Machine Learning

Azure Machine Learning is the engine for operationalizing AI. It provides a workspace to track experiments, manage datasets, register models, and automate workflows using pipelines. A key feature is its deep integration with MLflow, an open-source platform for managing the ML lifecycle. Because an Azure ML workspace can act as an MLflow tracking server, you can use the familiar MLflow APIs to log parameters, metrics, and artifacts, and the results are stored and organized within your AML workspace. This gives you a structured, reproducible way to manage complex projects, similar to what tools like Weights & Biases or Comet ML offer.

Fine-Tuning and Optimizing Models for Performance

While pre-trained models are powerful, fine-tuning them on domain-specific data can significantly improve performance and accuracy. Azure AI Studio provides guided workflows for fine-tuning models from the catalog. For more control, you can use the Azure ML SDK to submit a training job. This job can execute a custom Python script using frameworks like PyTorch with libraries such as Hugging Face Transformers and DeepSpeed for distributed training on powerful NVIDIA GPUs.

Here is a conceptual example of how you might define an Azure ML job in a YAML file to start a fine-tuning process. You would submit this file with the Azure CLI (`az ml job create --file fine-tune-job.yml`) or with the Azure ML Python SDK.

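```yaml
# fine-tune-job.yml
$schema: https://azuremlschemas.azureedge.net/latest/commandJob.schema.json
code: ./src
# The Llama 2 weights on Hugging Face are gated; the job needs a token with access.
command: >-
  python train.py
  --model_id "meta-llama/Llama-2-7b-chat-hf"
  --training_data ${{inputs.training_data}}
  --num_train_epochs 3
  --learning_rate 2e-5
  --output_dir ./outputs
inputs:
  training_data:
    type: uri_file
    path: ./data/my-custom-data.jsonl  # uploaded and mounted for the job
environment:
  image: mcr.microsoft.com/azureml/openmpi4.1.0-cuda11.8-cudnn8-ubuntu22.04
  conda_file: ./environment/conda.yml
# Full fine-tuning of a 7B model usually exceeds two V100s' memory;
# parameter-efficient methods such as LoRA, or DeepSpeed offloading, help.
compute: azureml:gpu-cluster-v100
distribution:
  type: pytorch
  process_count_per_instance: 2  # e.g., for a 2-GPU node
display_name: llama-2-7b-finetune-project
experiment_name: custom-model-finetuning
```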
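The job's `command` assumes a `train.py` script in `./src` that accepts these flags, but the article does not show that script. The sketch below is a hypothetical skeleton of its entry point. It parses the same arguments and loads a chat-style JSONL file with one `{"messages": [...]}` object per line. That is the format Azure OpenAI fine-tuning uses, but your own script may expect a different one. The model loading and training loop, for example with Hugging Face Transformers, are left as a stub.

```python
import argparse
import json
from pathlib import Path


def parse_args(argv=None) -> argparse.Namespace:
    # Flag names mirror the `command` in fine-tune-job.yml
    parser = argparse.ArgumentParser(description="Hypothetical fine-tuning entry point")
    parser.add_argument("--model_id", required=True)
    parser.add_argument("--training_data", required=True)
    parser.add_argument("--num_train_epochs", type=int, default=3)
    parser.add_argument("--learning_rate", type=float, default=2e-5)
    parser.add_argument("--output_dir", default="./outputs")
    return parser.parse_args(argv)


def load_chat_jsonl(path: str) -> list[dict]:
    """Read one JSON object per line and check that each has a non-empty 'messages' list."""
    examples = []
    with open(path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            if not line.strip():
                continue  # tolerate blank lines
            record = json.loads(line)
            if not record.get("messages"):
                raise ValueError(f"line {line_no}: missing 'messages'")
            examples.append(record)
    return examples


def main(argv=None) -> None:
    args = parse_args(argv)
    examples = load_chat_jsonl(args.training_data)
    Path(args.output_dir).mkdir(parents=True, exist_ok=True)
    print(f"Loaded {len(examples)} examples for {args.model_id}; "
          f"{args.num_train_epochs} epochs at lr={args.learning_rate}")
    # Stub: load the model and tokenizer, train, then save them to args.output_dir.
```

In an actual `train.py`, you would finish with `if __name__ == "__main__": main()`. Validating the data file before any GPU work starts lets a malformed line fail the job in seconds rather than after the model has been downloaded.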

Once a model is trained, inference performance becomes critical, and Azure supports several optimization techniques. You can convert models to the ONNX (Open Neural Network Exchange) format and run them with ONNX Runtime for cross-platform compatibility and performance gains. On NVIDIA hardware, TensorRT can provide significant additional speedups. For serving, NVIDIA’s Triton Inference Server can be deployed on Azure Kubernetes Service (AKS) for high-throughput, low-latency inference, a recurring theme in recent NVIDIA AI News.

Best Practices and Navigating the AI Landscape

Building successful AI applications on Azure requires more than just technical skill; it requires a strategic approach to model selection, security, and governance.

Security, Governance, and Responsible AI

Azure’s enterprise focus is evident in its robust security and governance features. You can secure your AI services within a virtual network using private endpoints, use managed identities to avoid storing credentials in code, and apply Azure Policy to enforce organizational standards. Azure also invests heavily in Responsible AI tooling. The Responsible AI dashboard in Azure Machine Learning helps you evaluate models for fairness, interpretability, error analysis, and causal effects, so you can build AI systems that are not only powerful but also trustworthy and ethical.

Choosing the Right Model and Tool

The proliferation of models from OpenAI, Anthropic, Google DeepMind, and the open-source community can be overwhelming. The choice depends on several factors:

- Performance: For state-of-the-art reasoning, a frontier model from Azure OpenAI or Anthropic might be best.

- Cost: For simpler tasks, a smaller, fine-tuned open-source model like one from Mistral AI or Meta AI can be far more cost-effective.

- Customization: If you need deep customization on proprietary data, fine-tuning an open-source model in Azure Machine Learning is the way to go.

This multi-model reality is where Azure’s strategy shines compared to competitors like Amazon Bedrock or Google Vertex AI. By providing a unified platform with a diverse model catalog, Azure allows developers to choose the best tool for the job without being locked into a single provider. At the application layer, the Python SDK and LangChain code shown above fits naturally into backends built with FastAPI or Flask. It also fits into quick UI prototypes built with Streamlit or Gradio, while production frontends can use any modern web framework.

Conclusion

The latest Azure AI News paints a clear picture: Microsoft is building a comprehensive, open, and enterprise-ready platform designed for the complexities of modern AI development. Its core strengths lie in the seamless integration of the powerful Azure OpenAI Service, a diverse and growing catalog of third-party and open-source models, and a robust MLOps foundation with Azure Machine Learning. This strategy allows organizations to harness state-of-the-art proprietary models while retaining the flexibility to build, fine-tune, and optimize custom solutions.

For developers and technical leaders, the key takeaway is that Azure AI is more than just an API provider; it’s a complete ecosystem. By mastering its components—from the AI Studio and Search to the Machine Learning SDK—you can build secure, scalable, and responsible AI applications that deliver real business value. The next step is to dive in: explore the Azure AI Studio, deploy a model from the catalog, and start building your first intelligent application on one of the industry’s leading AI platforms.