Beyond Beam Search: A Deep Dive into Contrastive Search for Smarter Text Generation with Hugging Face Transformers

Text generation is the engine driving the modern AI revolution. From powering sophisticated chatbots and drafting creative content to summarizing dense documents, the ability of Large Language Models (LLMs) to produce human-like text is fundamental. However, a persistent challenge has been controlling the *quality* of this generated text. For years, developers and researchers have navigated a difficult trade-off between coherence and diversity, often ending up with outputs that are either bland and repetitive or creative but nonsensical. This is a critical issue for anyone working with models from Meta AI, Google DeepMind, or Mistral AI, and for developers using frameworks like LangChain or LlamaIndex that depend on reliable model outputs.

The Hugging Face Transformers library has integrated a decoding method that directly addresses this problem: Contrastive Search. This technique offers a compelling alternative to traditional methods like beam search and nucleus sampling. It aims to balance the two goals, generating text that is not only fluent and contextually relevant but also diverse and interesting. Best of all, it’s a drop-in replacement that works with nearly all existing autoregressive models on the Hugging Face Hub, requiring no model retraining. This article provides a comprehensive technical guide to understanding, implementing, and mastering Contrastive Search for your own NLP projects.

Understanding the Landscape of Text Generation Decoding

To appreciate the innovation of Contrastive Search, we must first understand the limitations of the methods that preceded it. The process of selecting the next word in a sequence, known as decoding, is where the magic—or the mess—happens.

The Pitfalls of Common Decoding Strategies

For any given prompt, an LLM produces a probability distribution over its entire vocabulary for the next token. The decoding strategy is the algorithm used to pick a token from this distribution. The most common methods include:

- Greedy Search: The simplest approach. At each step, it selects the single token with the highest probability. While fast and often coherent for short sequences, it is notoriously prone to getting stuck in loops, producing repetitive and uninspired text (e.g., “I think I think I think…”).

- Beam Search: An improvement over greedy search, beam search explores multiple possible sequences (or “beams”) simultaneously. It keeps the top `n` most probable sequences at each step (set by `num_beams` in `transformers`), expanding them and re-ranking. While it often produces more fluent text than greedy search, it suffers from a well-known issue: it tends to favor generic, high-frequency phrases, leading to dull and predictable outputs. This is a common pain point for PyTorch and TensorFlow users alike.

- Sampling (Top-k and Nucleus/Top-p): To inject creativity, sampling methods introduce randomness. Top-k sampling restricts the choice to the `k` most likely tokens, while nucleus sampling selects from the smallest set of tokens whose cumulative probability exceeds a threshold `p`. These methods are excellent for creative tasks but can sometimes lead to incoherent or nonsensical outputs, as they can veer off the most probable path.
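To make the differences concrete, here is a minimal sketch of greedy selection, top-k filtering, and nucleus filtering applied to a single toy distribution. The token names and probabilities are invented for illustration, and the helper functions are hypothetical; real libraries operate on logit tensors rather than dictionaries.

```python
import random

# Toy next-token distribution (hypothetical values, for illustration only).
probs = {"the": 0.40, "a": 0.25, "cat": 0.15, "dog": 0.10, "zebra": 0.06, "qux": 0.04}


def greedy(dist):
    """Pick the single most probable token."""
    return max(dist, key=dist.get)


def top_k_filter(dist, k):
    """Keep the k most probable tokens and renormalize."""
    kept = sorted(dist.items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}


def top_p_filter(dist, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    kept, cumulative = [], 0.0
    for tok, prob in sorted(dist.items(), key=lambda kv: kv[1], reverse=True):
        kept.append((tok, prob))
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(prob for _, prob in kept)
    return {tok: prob / total for tok, prob in kept}


def sample(dist, rng):
    """Draw one token at random according to the (renormalized) distribution."""
    tokens, weights = zip(*dist.items())
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so repeated runs draw the same token
print("greedy:", greedy(probs))
print("top-k pool (k=3):", sorted(top_k_filter(probs, 3)))
print("nucleus pool (p=0.85):", sorted(top_p_filter(probs, 0.85)))
print("one top-k sample:", sample(top_k_filter(probs, 3), rng))
```

Greedy search always returns the same token, while the two filters only shrink the pool; the randomness (and the risk of drifting off course) comes from the sampling step that follows.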

Introducing Contrastive Search: The Best of Both Worlds

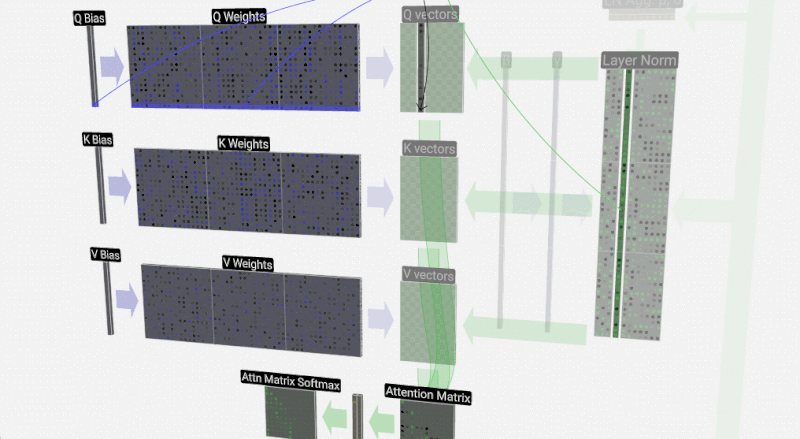

Contrastive Search elegantly sidesteps the dilemma of choosing between boring-but-safe and creative-but-risky. It is a deterministic method (like greedy and beam search) that aims to improve text quality by optimizing for two competing objectives simultaneously:

- Model Confidence (Likelihood): This is the standard probability assigned to a potential next token by the model. We want to choose tokens the model is confident about.

- Degeneration Penalty (Dissimilarity): This is the innovative part. The method penalizes candidate tokens whose hidden representations are too similar (by cosine similarity) to those of the tokens already in the context. It encourages the model to choose words that are different from what it just said.

The final score for each candidate token is a combination of these two factors. The algorithm selects the token that best balances high model confidence with low similarity to the preceding context. This “contrastive” objective prevents the model from falling into repetitive loops while keeping it grounded in coherent, high-probability sequences.
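The scoring rule can be sketched in a few lines. The sketch below assumes the usual formulation of the objective, `(1 - alpha) * probability - alpha * max cosine similarity to the context`, and applies it to a single decoding step. It is a toy illustration, not the `transformers` implementation: the `contrastive_step` helper, the token names, and the 2-d "hidden states" are all invented for the example.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def contrastive_step(candidates, context_states, alpha):
    """Pick one token from a top-k candidate pool.

    candidates: list of (token, probability, hidden_state) tuples.
    context_states: hidden states of the tokens generated so far.
    """
    best_token, best_score = None, -math.inf
    for token, prob, state in candidates:
        # Degeneration penalty: similarity to the closest previous token.
        penalty = max(cosine(state, h) for h in context_states)
        score = (1 - alpha) * prob - alpha * penalty
        if score > best_score:
            best_token, best_score = token, score
    return best_token


# Hypothetical 2-d hidden states; real models use hundreds of dimensions.
context = [[1.0, 0.0], [0.9, 0.1]]
pool = [
    ("think", 0.55, [1.0, 0.05]),   # most likely, but nearly identical to the context
    ("believe", 0.40, [0.2, 1.0]),  # slightly less likely, clearly different
    ("guess", 0.05, [0.5, 0.5]),    # unlikely and still fairly close to the context
]

print("alpha=0.0:", contrastive_step(pool, context, alpha=0.0))
print("alpha=0.6:", contrastive_step(pool, context, alpha=0.6))
```

With `alpha=0.0` the penalty vanishes and the most probable token wins, exactly as in greedy search. With `alpha=0.6` the near-duplicate candidate is pushed down and the confident-but-different one is selected.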

Putting Contrastive Search into Practice with 🤗 Transformers

One of the most compelling aspects of this new feature is its seamless integration into the Hugging Face `transformers` library. You can start using it immediately with just a few lines of code and a couple of new parameters in the familiar `.generate()` method.

Setting Up Your Environment

First, ensure you have the necessary libraries installed. You’ll need `transformers` and a deep learning framework like PyTorch or TensorFlow. This example uses PyTorch.

```python
# Make sure you have the latest versions
# (notebook syntax; drop the leading "!" when running in a shell)
!pip install --upgrade transformers torch accelerate
```

Your First Contrastive Search Generation

Implementing Contrastive Search is straightforward. You activate it by setting the `penalty_alpha` parameter in the `.generate()` call. This parameter controls the strength of the degeneration penalty. A value of 0 removes the penalty, which reduces the method to greedy search, while a higher value (e.g., 0.6) applies a stronger penalty.

You also need to set `top_k`. In the context of Contrastive Search, `top_k` is used to create a candidate pool of tokens from which the algorithm will select the best one based on its contrastive objective.

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# Load a model and tokenizer
# Using a smaller, accessible model for this example
model_name = "gpt2"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

# Set the device
device = "cuda" if torch.cuda.is_available() else "cpu"
model.to(device)

# Define the prompt
prompt = "Artificial intelligence is a field of computer science that"

# Tokenize the input
inputs = tokenizer(prompt, return_tensors="pt").to(device)

# Generate text using Contrastive Search
# penalty_alpha controls the degeneration penalty
# top_k creates the candidate pool
contrastive_output = model.generate(
    **inputs,
    max_length=100,
    penalty_alpha=0.6,
    top_k=4,
    eos_token_id=tokenizer.eos_token_id
)

print("--- Contrastive Search Output ---")
print(tokenizer.decode(contrastive_output[0], skip_special_tokens=True))
```

Comparing Outputs: Contrastive vs. Beam Search

To truly see the difference, let’s compare the output of Contrastive Search with that of a standard beam search. We will use the same model and prompt to highlight the difference in the generated text’s structure and diversity.

```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

# Same model and tokenizer as in the previous example,
# reloaded here so this block runs on its own
model_name = "gpt2"
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)

device = "cuda" if torch.cuda.is_available() else "cpu"
model.to(device)

prompt = "The best way to learn a new skill is to"
inputs = tokenizer(prompt, return_tensors="pt").to(device)

# --- Generation with Contrastive Search ---
contrastive_output = model.generate(
    **inputs,
    max_length=75,
    penalty_alpha=0.6,
    top_k=5,
    eos_token_id=tokenizer.eos_token_id
)

print("--- Contrastive Search Output ---")
print(tokenizer.decode(contrastive_output[0], skip_special_tokens=True))
print("\n" + "="*50 + "\n")

# --- Generation with Beam Search ---
beam_output = model.generate(
    **inputs,
    max_length=75,
    num_beams=5,
    early_stopping=True,
    eos_token_id=tokenizer.eos_token_id,
    no_repeat_ngram_size=2  # Often needed to manually prevent repetition
)

print("--- Beam Search Output ---")
print(tokenizer.decode(beam_output[0], skip_special_tokens=True))
```

When you run this code, you will often observe that the beam search output, even with `no_repeat_ngram_size`, can be quite generic and formulaic. In contrast, the Contrastive Search output tends to be more varied and engaging, exploring a more interesting semantic path without sacrificing coherence.

Advanced Techniques and Fine-Tuning Contrastive Search

While the values used above (`penalty_alpha=0.6` with a small `top_k`) work well as a starting point, you can achieve even better results by tuning the key parameters and understanding how they interact.

Tuning `penalty_alpha` and `top_k`

The two main levers you can pull are `penalty_alpha` and `top_k`. Finding the optimal values often requires experimentation based on your specific model and use case.

- `penalty_alpha`: This is the most critical parameter. It controls the strength of the penalty for semantic repetition.
  - A low alpha (e.g., 0.1-0.4) makes the generation closer to standard greedy search. It prioritizes model confidence and may be suitable for tasks requiring high factual precision.
  - A high alpha (e.g., 0.6-0.8) imposes a strong penalty on repetition, encouraging more diverse and creative outputs. If set too high, it might force the model to choose less probable tokens, potentially reducing coherence. A value around 0.6 is often a good starting point.

- `top_k`: This defines the size of the candidate pool. A larger `k` (e.g., 5-10) gives the contrastive algorithm more options to choose from, which can lead to better quality by allowing it to find a token that is both high-confidence and dissimilar. However, a larger `k` also increases computational cost, since every candidate has to be scored.
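The interaction between the two parameters is easier to see on a toy example that needs no model at all. The sketch below assumes the scoring rule `(1 - alpha) * probability - alpha * similarity` and uses invented candidates; the `pick` helper and all numbers are hypothetical.

```python
# Hypothetical candidates, sorted by model probability: (token, probability,
# max cosine similarity to the context). Values are invented for illustration.
candidates = [
    ("is", 0.45, 0.95),
    ("was", 0.30, 0.90),
    ("requires", 0.15, 0.40),
    ("demands", 0.10, 0.20),
]


def pick(candidates, alpha, top_k):
    """Return the highest-scoring token among the top_k most probable ones."""
    pool = candidates[:top_k]
    return max(pool, key=lambda c: (1 - alpha) * c[1] - alpha * c[2])[0]


for alpha in (0.0, 0.2, 0.6):
    for top_k in (2, 4):
        print(f"alpha={alpha}, top_k={top_k} -> {pick(candidates, alpha, top_k)}")
```

In this toy setup a low alpha keeps the most probable token regardless of `top_k`. At `alpha=0.6` the result depends on the pool: with `top_k=2` both candidates are close to the context, so the penalty cannot help, while `top_k=4` lets a less probable but far less repetitive token win. It also shows the risk described above, since the winner at high alpha is the least probable candidate in the pool.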

Here’s how you might experiment with different alpha values to see their effect:

```python
# ... (model and tokenizer setup as before) ...

prompt = "To build a successful startup, the most important thing is"
inputs = tokenizer(prompt, return_tensors="pt").to(device)

alphas_to_test = [0.2, 0.6, 0.9]

for alpha in alphas_to_test:
    print(f"--- Generating with penalty_alpha = {alpha} ---")
    output = model.generate(
        **inputs,
        max_length=80,
        penalty_alpha=alpha,
        top_k=5,
        eos_token_id=tokenizer.eos_token_id
    )
    print(tokenizer.decode(output[0], skip_special_tokens=True))
    print("\n")
```

Real-World Applications

The improved quality from Contrastive Search directly benefits a wide range of applications, especially those built using orchestration tools like LangChain or Haystack.

- Long-form Content Generation: For writing articles, stories, or reports, avoiding repetition is paramount. Contrastive Search helps maintain reader engagement over longer passages.

- Abstractive Summarization: It encourages summaries that are genuinely abstractive, rephrasing concepts rather than just extracting and stitching together sentences from the source.

- Conversational AI and Chatbots: Chatbots can sound more natural and less robotic by avoiding canned, repetitive responses. This is crucial for user experience on platforms like Azure AI and Amazon Bedrock.

- RAG Systems: In Retrieval-Augmented Generation, where models synthesize answers from retrieved documents, Contrastive Search can help generate answers that are more fluid and less prone to repeating phrases from the source context. This is highly relevant for users of vector databases like Pinecone or Weaviate.

Best Practices and Performance Considerations

Integrating Contrastive Search into your workflow is easy, but keeping a few best practices in mind will ensure you get the most out of it.

Model Compatibility and the Ecosystem

A major advantage of Contrastive Search is its broad compatibility. It is a decoding-level algorithm, meaning it can be applied to virtually any pre-trained autoregressive model available on the Hugging Face Hub without any architectural changes. Whether you’re using a model from Meta AI’s Llama family, a model from Mistral AI, or a classic like GPT-2, you can leverage this technique instantly. This universality is a testament to the power of the open-source ecosystem championed by Hugging Face.

Performance and Optimization

Computationally, Contrastive Search is reasonably efficient, though it is not free. On top of greedy search, each step has to obtain hidden representations for the `top_k` candidates and compare them with the context, so the cost grows with `k`. With a small `k` it can still be competitive with, and sometimes faster than, a beam search with a moderate to large number of beams.
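Speed claims like these are worth checking on your own hardware and model. Below is a minimal timing harness you could adapt; `time_call` is a hypothetical helper, and the two workloads are arbitrary stand-ins so the sketch runs without a model. Their relative sizes say nothing about real decoding cost.

```python
import time


def time_call(fn, repeats=3):
    """Return the best wall-clock time in seconds over several calls to fn()."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        best = min(best, time.perf_counter() - start)
    return best


# Stand-in workloads so the sketch runs without a model. In practice each
# lambda would wrap a model.generate(...) call with one decoding configuration.
configs = {
    "config_a": lambda: sum(range(10_000)),
    "config_b": lambda: sum(range(40_000)),
}

for name, fn in configs.items():
    print(f"{name}: {time_call(fn) * 1000:.3f} ms")
```

When timing GPU inference, remember that CUDA calls are asynchronous, so you would typically call `torch.cuda.synchronize()` before reading the clock, and run a warm-up generation first.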

For production environments, you can further optimize the underlying model inference using tools like ONNX, OpenVINO, or NVIDIA’s TensorRT. Deploying these optimized models on inference servers like Triton Inference Server, or using platforms like vLLM or Modal, can significantly boost throughput, making advanced decoding strategies like Contrastive Search viable even at scale. Tracking these production deployments can be managed with tools like MLflow.

Experimentation and Evaluation

As with any generation technique, the key to success is experimentation. The ideal `penalty_alpha` and `top_k` can vary between models and tasks. It’s crucial to establish a robust evaluation pipeline.

- Track Your Experiments: Use MLOps platforms like Weights & Biases (W&B) or Comet ML to log prompts, generation parameters, and outputs. This makes it easy to compare results and identify the best settings.

- Use a Mix of Metrics: Combine automatic metrics (e.g., ROUGE for summarization, BLEU for translation) with human evaluation. Often, the improvements in quality, such as reduced repetition and increased interestingness, are best captured by human judgment.

- Leverage Evaluation Tools: Platforms like LangSmith are emerging to help developers evaluate and debug LLM applications, which is perfect for assessing the impact of different decoding strategies.
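Alongside these tools, a quick automatic check for repetition can be useful when comparing decoding settings. The following is a minimal sketch of one such measure: the share of n-grams in a text that repeat an earlier n-gram. It assumes simple whitespace tokenization, and the `repetition_rate` helper is hypothetical rather than part of any library.

```python
def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def repetition_rate(text, n=2):
    """Fraction of n-grams that duplicate an earlier n-gram (0.0 = none repeated)."""
    grams = ngrams(text.lower().split(), n)
    if not grams:
        return 0.0
    return 1.0 - len(set(grams)) / len(grams)


looping = "I think I think I think I think"
varied = "I think the answer depends on context"

print(f"looping: {repetition_rate(looping):.2f}")
print(f"varied:  {repetition_rate(varied):.2f}")
```

A lower value means less repetition. It says nothing about coherence, so it complements human evaluation rather than replacing it.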

Conclusion: A New Default for High-Quality Text Generation

Contrastive Search represents a significant and practical advancement in the field of text generation. By penalizing semantic repetition directly within the decoding process, it addresses one of the most persistent problems that has plagued LLMs for years. Its integration into the Hugging Face Transformers library makes this technique immediately accessible to developers and researchers worldwide.

The key takeaways are clear:

- Contrastive Search produces text that is more diverse and less repetitive than beam search, without the loss of coherence that pure sampling methods can suffer.

- It is easy to implement via the `.generate()` method, using the `penalty_alpha` and `top_k` parameters.

- It is compatible with the vast majority of existing autoregressive models, making it a versatile tool for any NLP practitioner.

As the AI landscape continues to evolve with developments from OpenAI to Anthropic, foundational improvements like this are what truly push the entire ecosystem forward. We encourage you to update your `transformers` library, experiment with Contrastive Search in your own projects, and see the improvement in text generation quality for yourself.