Infrastructure as Code for GenAI: Building Scalable RAG Systems with Terraform and Amazon Bedrock

The generative AI landscape is evolving at a breathtaking pace, with new models and techniques emerging constantly. While the capabilities of large language models (LLMs) from providers mentioned in OpenAI News and Anthropic News are astounding, their true enterprise value is unlocked when they are grounded in specific, proprietary data. This is the domain of Retrieval-Augmented Generation (RAG), a powerful architecture that allows LLMs to access and reason over external knowledge sources, reducing hallucinations and providing up-to-date, contextually relevant answers. Amazon Web Services has streamlined this process with Knowledge Bases for Amazon Bedrock, a fully managed RAG capability. However, as these systems move from experimentation to production, the need for robust, repeatable, and scalable deployment becomes paramount. This is where Infrastructure as Code (IaC) with Terraform enters the picture, transforming a complex manual setup into an automated, version-controlled workflow. This article provides a comprehensive guide to deploying Amazon Bedrock Knowledge Bases using Terraform, enabling you to build production-grade RAG applications with confidence.

The Anatomy of a RAG System in Amazon Bedrock

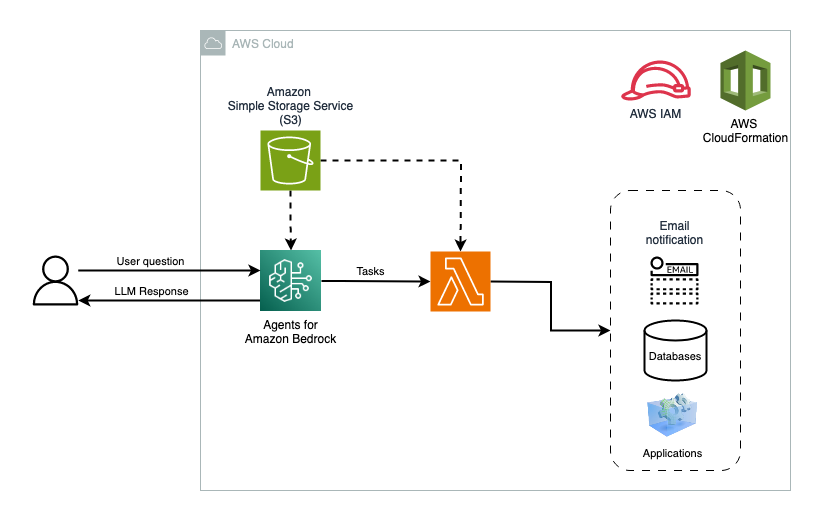

Before diving into the Terraform code, it’s essential to understand the core components that constitute a Knowledge Base within the Amazon Bedrock News ecosystem. This managed service abstracts away much of the complexity, but the underlying architecture consists of three key pillars: a data source, a vector store, and an embedding model. Managing these components effectively is crucial for building a high-performing RAG pipeline, a topic often discussed in circles following LangChain News and LlamaIndex News.

The Data Source: Your Fountain of Knowledge

The foundation of any RAG system is the data you want the LLM to access. For Amazon Bedrock Knowledge Bases, the primary data source is an Amazon S3 bucket. You can upload your documents (e.g., PDFs, Word documents, HTML, plain text files) to a designated S3 location. During a process called data ingestion, the Knowledge Base will automatically read these files, extract the text, and prepare it for the next stage. This seamless integration with S3 provides a durable and scalable foundation for your knowledge corpus.

The Vector Store: The Brain’s Index

Once the text is extracted, it must be converted into a machine-readable format and stored in a specialized database for efficient retrieval. This is the role of the vector store. The Knowledge Base chunks the source text into smaller segments, uses an embedding model to convert each chunk into a numerical vector, and then indexes these vectors. When a user asks a question, their query is also converted into a vector, and the vector store performs a similarity search to find the most relevant chunks of text. Amazon Bedrock offers several managed options for vector stores:

- Amazon OpenSearch Serverless: A fully managed, serverless option that simplifies operations.

- Pinecone: A popular, fully managed vector database known for its performance and ease of use. This is a common choice, often highlighted in Pinecone News.

- Redis Enterprise Cloud: A high-performance, in-memory data store that supports vector search capabilities.

- Amazon Aurora (PostgreSQL-compatible): Recent announcements have added support for vector search using the

pgvectorextension, bringing the power of RAG to traditional relational databases.

While Bedrock manages the integration, understanding the options, including open-source alternatives often featured in Milvus News or Weaviate News, helps in architecting the right solution.

The Embedding Model: Translating Words to Vectors

The embedding model is the crucial translator that converts text into dense vector representations. The quality of this model directly impacts the effectiveness of the retrieval process. A good embedding model will place semantically similar text chunks close to each other in the vector space. Amazon Bedrock provides access to high-performance embedding models like Amazon Titan Text Embeddings and models from Cohere. The choice of model can be a critical factor, a topic frequently covered in both Cohere News and the broader Hugging Face Transformers News community.

# Example: Provisioning an Amazon OpenSearch Serverless Collection with Terraform

# This collection will serve as the vector store for our Knowledge Base.

resource "aws_opensearchserverless_collection" "kb_vector_store" {

name = "kb-rag-collection"

type = "VECTORSEARCH"

}

# Define an access policy to allow Bedrock to manage the collection

resource "aws_opensearchserverless_access_policy" "kb_access_policy" {

name = "kb-access-policy"

type = "data"

policy = jsonencode([

{

"Rules" : [

{

"ResourceType" : "collection",

"Resource" : [

"collection/kb-rag-collection"

],

"Permission" : [

"aoss:CreateCollectionItems",

"aoss:DeleteCollectionItems",

"aoss:UpdateCollectionItems",

"aoss:DescribeCollectionItems"

]

},

{

"ResourceType" : "index",

"Resource" : [

"index/kb-rag-collection/*"

],

"Permission" : [

"aoss:CreateIndex",

"aoss:DeleteIndex",

"aoss:UpdateIndex",

"aoss:DescribeIndex",

"aoss:ReadDocument",

"aoss:WriteDocument"

]

}

],

"Principal" : [

"arn:aws:iam::${data.aws_caller_identity.current.account_id}:root" # Replace with more restrictive principal

],

"Description" : "Access policy for Bedrock Knowledge Base"

}

])

}Infrastructure as Code in Action: A Step-by-Step Terraform Deployment

With the core concepts understood, we can now translate them into a repeatable Terraform configuration. This approach ensures that your RAG infrastructure is version-controlled, easily reproducible across different environments (dev, staging, prod), and integrated into modern CI/CD pipelines, a core tenet of MLOps practices discussed in MLflow News and ClearML News.

Defining the Knowledge Base Resource

The central resource in our configuration is aws_bedrock_knowledge_base. This resource ties together the name, the IAM role that grants it permissions, and the vector store configuration. The IAM role is particularly critical; it must have permissions to access the embedding model and to read/write to the vector store’s control plane.

# main.tf - Terraform configuration for the Bedrock Knowledge Base

data "aws_caller_identity" "current" {}

data "aws_region" "current" {}

# IAM Role for the Knowledge Base

resource "aws_iam_role" "bedrock_kb_role" {

name = "AmazonBedrockKBExecutionRole"

assume_role_policy = jsonencode({

Version = "2012-10-17"

Statement = [

{

Action = "sts:AssumeRole"

Effect = "Allow"

Principal = {

Service = "bedrock.amazonaws.com"

}

}

]

})

}

# Attach necessary policies (e.g., access to S3, OpenSearch, and Bedrock models)

# NOTE: For brevity, policy attachments are omitted but are crucial for a working setup.

resource "aws_bedrock_knowledge_base" "main" {

name = "company-internal-docs-kb"

role_arn = aws_iam_role.bedrock_kb_role.arn

knowledge_base_configuration = {

type = "VECTOR"

vector_knowledge_base_configuration = {

embedding_model_arn = "arn:aws:bedrock:${data.aws_region.current.name}::foundation-model/amazon.titan-embed-text-v1"

}

}

storage_configuration = {

type = "OPENSEARCH_SERVERLESS"

opensearch_serverless_configuration = {

collection_arn = aws_opensearchserverless_collection.kb_vector_store.arn

vector_index_name = "doc-embeddings"

field_mapping = {

vector_field = "bedrock-knowledge-base-default-vector"

text_field = "AMAZON_BEDROCK_TEXT_CHUNK"

metadata_field = "AMAZON_BEDROCK_METADATA"

}

}

}

tags = {

Environment = "production"

Project = "RAG-System"

}

}Configuring the Data Source and Ingestion

Once the Knowledge Base “shell” is defined, you must specify where it should get its data. This is done using the aws_bedrock_knowledge_base_data_source resource. This resource links to the parent Knowledge Base, points to the S3 bucket containing your documents, and defines the ingestion strategy. The chunking_configuration block is particularly important, as it determines how your documents are split into smaller pieces before being embedded. The optimal chunking strategy can significantly impact retrieval quality.

# S3 bucket to store the source documents for the Knowledge Base

resource "aws_s3_bucket" "kb_documents" {

bucket = "my-company-kb-source-docs-${data.aws_caller_identity.current.account_id}"

}

# Define the data source for the Knowledge Base

resource "aws_bedrock_knowledge_base_data_source" "main" {

knowledge_base_id = aws_bedrock_knowledge_base.main.id

name = "internal-wiki-s3-source"

data_source_configuration = {

type = "S3"

s3_configuration = {

bucket_arn = aws_s3_bucket.kb_documents.arn

}

}

vector_ingestion_configuration = {

chunking_configuration = {

chunking_strategy = "FIXED_SIZE"

fixed_size_chunking_configuration = {

max_tokens = 400

overlap_percentage = 20

}

}

}

}After applying this Terraform configuration, AWS will provision all the necessary resources. To trigger the data ingestion, you can upload your documents to the S3 bucket and start an ingestion job via the AWS Console or API. This separation of infrastructure provisioning and data ingestion is a powerful paradigm for managing RAG systems at scale.

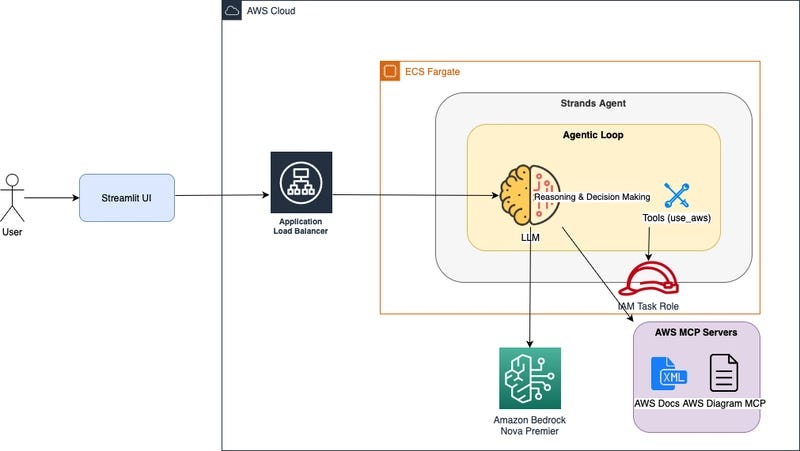

Beyond Deployment: Application Integration and Advanced RAG

Provisioning the infrastructure is only half the battle. The next step is to integrate the Knowledge Base into your application so users can start asking questions. This typically involves using the AWS SDK in your backend service, which could be built with popular frameworks like FastAPI News or Flask.

Querying the Knowledge Base with Boto3

The AWS SDK for Python, Boto3, provides a straightforward way to interact with your deployed Knowledge Base. The bedrock-agent-runtime client offers the RetrieveAndGenerate API, which is a high-level abstraction that performs both the retrieval from the vector store and the generation of a human-readable answer in a single call. This simplifies application development significantly.

import boto3

import json

# Initialize the Bedrock Agent Runtime client

# Ensure your AWS credentials are configured (e.g., via environment variables)

bedrock_agent_runtime = boto3.client(

"bedrock-agent-runtime",

region_name="us-east-1" # Use the region where your KB is deployed

)

KNOWLEDGE_BASE_ID = "YOUR_KNOWLEDGE_BASE_ID" # Get this from Terraform output or AWS console

MODEL_ARN = "arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-3-sonnet-20240229-v1:0" # Example model

def query_knowledge_base(query_text):

"""

Queries the Bedrock Knowledge Base and generates a response.

"""

try:

response = bedrock_agent_runtime.retrieve_and_generate(

input={

'text': query_text

},

retrieveAndGenerateConfiguration={

'type': 'KNOWLEDGE_BASE',

'knowledgeBaseConfiguration': {

'knowledgeBaseId': KNOWLEDGE_BASE_ID,

'modelArn': MODEL_ARN

}

}

)

output_text = response['output']['text']

citations = response.get('citations', [])

print(f"Query: {query_text}")

print(f"Answer: {output_text}\n")

if citations:

print("Citations:")

for i, citation in enumerate(citations):

retrieved_text = citation['retrievedReference']['content']['text']

s3_location = citation['retrievedReference']['location']['s3Location']['uri']

print(f" [{i+1}] Source: {s3_location}")

# print(f" Retrieved Chunk: {retrieved_text[:200]}...") # Uncomment for more detail

except Exception as e:

print(f"An error occurred: {e}")

if __name__ == "__main__":

user_query = "What were the key product updates in the last quarter?"

query_knowledge_base(user_query)Leveraging Orchestration Frameworks

While Boto3 is powerful, frameworks like LangChain and LlamaIndex provide higher-level abstractions for building complex chains and agents. The latest LangChain News highlights increasingly deep integrations with cloud services. You can easily create a custom LangChain retriever that uses the Bedrock Knowledge Base as its backend. This allows you to plug your secure, managed RAG system into the broader ecosystem of tools, agents, and chains, combining the best of managed services with the flexibility of open-source frameworks. This approach is gaining traction, mirroring trends seen in the Azure AI News and Vertex AI News communities where managed services are being integrated into flexible development frameworks.

Production-Ready RAG: Best Practices and Optimization

Deploying a RAG system to production requires careful consideration of security, cost, and performance. Using Terraform already puts you on the right path by enforcing consistency and enabling automation.

Security and IAM

Always follow the principle of least privilege. The IAM roles created for your Knowledge Base should have narrowly scoped permissions. For example, the role should only be able to read from the specific S3 data source bucket and interact with the designated vector store. Avoid using wildcard permissions in production. The role for your application querying the knowledge base should only have `bedrock:RetrieveAndGenerate` permissions for that specific knowledge base ARN.

Cost Management

Be mindful of the costs associated with your RAG system:

- Ingestion: You pay for the model usage to create embeddings during the data sync process.

- Storage: Costs are incurred for storing documents in S3 and for the vector index in your chosen vector store (e.g., Amazon OpenSearch Serverless).

- Inference: You pay for each call to the

RetrieveAndGenerateAPI, which includes both the retrieval operation and the LLM generation.

Monitor your usage through AWS Cost Explorer and set up budgets and alerts to avoid surprises.

CI/CD for GenAI Infrastructure

Integrate your Terraform code into a CI/CD pipeline (e.g., GitHub Actions, AWS CodePipeline). This enables a GitOps workflow where changes to your RAG infrastructure are reviewed, approved, and applied automatically. This is a critical MLOps practice that brings the same rigor of software development to your AI systems, a trend also seen in platforms like AWS SageMaker and Azure Machine Learning.

Choosing the Right Chunking Strategy

The chunking_configuration in your data source is a key performance lever. A smaller chunk size provides more granular, specific context but may miss broader themes. A larger chunk size captures more context but may introduce noise. The default “Fixed Size” strategy is a good starting point, but Bedrock also offers a “No Chunking” option if your documents are already pre-processed. Experiment with different chunk sizes and overlaps to see what works best for your specific dataset and query patterns.

Conclusion

Automating the deployment of Amazon Bedrock Knowledge Bases with Terraform is a game-changer for building scalable, enterprise-grade RAG applications. By defining your entire RAG infrastructure as code, you gain repeatability, version control, and the ability to integrate into sophisticated MLOps workflows. This approach moves beyond manual, console-based setups to a professional, engineering-driven process.

You have learned how to provision the necessary components—a vector store, the Knowledge Base itself, and the data source—using HCL. You have also seen how to interact with the deployed system using the AWS SDK, bridging the gap between infrastructure and application. As your next steps, consider integrating this Terraform setup into a CI/CD pipeline, experimenting with different foundation models from providers like Anthropic or Cohere, and building a user-facing application with a framework like Streamlit News or Gradio. By combining the managed power of Amazon Bedrock with the automation of Terraform, you are well-equipped to build the next generation of intelligent, data-driven AI applications.