Mastering Vector Search: A Deep Dive into Pinecone for Modern AI Applications

The artificial intelligence landscape is undergoing a seismic shift, driven by the power of large language models (LLMs) and generative AI. While models from sources like OpenAI News, Meta AI News, and Mistral AI News demonstrate incredible capabilities, they have a fundamental limitation: their knowledge is frozen at the time of training. To build truly dynamic, intelligent, and context-aware applications, developers need a way to provide these models with external, real-time knowledge. This is where vector databases enter the picture, and Pinecone has emerged as a leading, managed solution for this critical infrastructure layer.

Vector databases are the specialized storage systems powering the next generation of AI applications, from semantic search and recommendation engines to Retrieval-Augmented Generation (RAG). They are designed to efficiently store and query high-dimensional vectors—the numerical representations of unstructured data like text, images, and audio. In this comprehensive guide, we will explore the core concepts behind Pinecone, walk through practical implementations with code examples, discuss advanced features, and cover best practices for building robust, scalable AI systems. This article will serve as your go-to resource for understanding the latest in Pinecone News and its pivotal role in the modern AI stack.

The Foundation: Vector Embeddings and Similarity Search

Before diving into Pinecone’s architecture, it’s crucial to understand the concept that underpins all vector databases: vector embeddings. These embeddings are the bridge between the unstructured data humans understand and the numerical world that machines process.

What are Vector Embeddings?

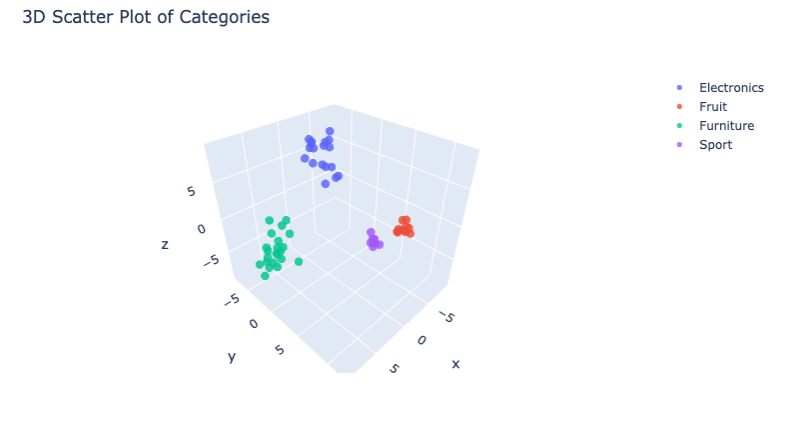

A vector embedding is a dense, numerical representation of a piece of data. Models trained on vast datasets, often from frameworks highlighted in TensorFlow News or PyTorch News, learn to capture the semantic meaning of data and encode it into a fixed-length list of numbers. For example, a sentence embedding model from the Hugging Face Transformers News ecosystem, like those provided by the Sentence Transformers library, can convert sentences into vectors. Sentences with similar meanings will have vectors that are “close” to each other in multi-dimensional space.

This process transforms the problem of finding “similar text” into a mathematical problem of finding the “nearest vectors.” This is the fundamental principle that enables semantic search, where the search is based on meaning and context rather than just keyword matching.

How Pinecone Manages and Searches Vectors

Pinecone is a managed vector database designed to perform this “nearest neighbor” search at incredible speed and scale. It uses sophisticated Approximate Nearest Neighbor (ANN) algorithms to find the most similar vectors to a given query vector without having to compare it against every single vector in the database. This makes it possible to search through billions of items in milliseconds.

The primary operations in Pinecone are:

- Index: An index is the highest-level organizational unit in Pinecone, storing vectors of the same dimensionality and using the same distance metric (e.g., cosine similarity, euclidean distance).

- Upsert: This is the process of adding or updating vectors in an index. Each vector is given a unique ID and can be associated with optional metadata.

- Query: This involves providing a query vector to find the top-k most similar vectors from the index.

Your First Steps: Initializing and Upserting Data

Getting started with Pinecone is straightforward. You’ll need the pinecone-client and an embedding model. Here, we’ll use the popular sentence-transformers library to generate our embeddings.

# First, install the necessary libraries

# pip install pinecone-client sentence-transformers

import os

from pinecone import Pinecone, ServerlessSpec

from sentence_transformers import SentenceTransformer

# Initialize Pinecone

# Make sure to set your PINECONE_API_KEY environment variable

api_key = os.environ.get("PINECONE_API_KEY")

pc = Pinecone(api_key=api_key)

# Define the index name

index_name = "technical-article-demo"

# Initialize the embedding model

# This model creates 384-dimensional vectors

model = SentenceTransformer('all-MiniLM-L6-v2')

dimension = model.get_sentence_embedding_dimension()

# Check if the index already exists. If not, create it.

if index_name not in pc.list_indexes().names():

print(f"Creating new index: {index_name}")

pc.create_index(

name=index_name,

dimension=dimension,

metric="cosine", # Cosine similarity is great for text embeddings

spec=ServerlessSpec(

cloud='aws',

region='us-east-1'

)

)

# Connect to the index

index = pc.Index(index_name)

# Sample data to be indexed

documents = [

{"id": "doc1", "text": "Pinecone is a vector database for AI applications."},

{"id": "doc2", "text": "LangChain helps build applications with LLMs."},

{"id": "doc3", "text": "Vector search enables semantic understanding of data."},

{"id": "doc4", "text": "Recent OpenAI news announced new model capabilities."},

{"id": "doc5", "text": "AWS SageMaker is a platform for building ML models."}

]

# Generate embeddings and prepare for upsert

vectors_to_upsert = []

for doc in documents:

embedding = model.encode(doc["text"]).tolist()

vectors_to_upsert.append({"id": doc["id"], "values": embedding})

# Upsert the vectors into the Pinecone index

print("Upserting vectors...")

index.upsert(vectors=vectors_to_upsert)

# Check the index status

print(index.describe_index_stats())Practical Implementation: Building a Semantic Search Engine

With our data indexed, we can now build the core of a semantic search application. This involves taking a user’s query, converting it into a vector, and using Pinecone to retrieve the most relevant documents.

The Semantic Search Workflow

The process is simple yet powerful:

- User Input: A user provides a natural language query (e.g., “What tools are used for LLM applications?”).

- Embedding: The same embedding model used for indexing converts the user’s query into a query vector.

- Querying Pinecone: The query vector is sent to the Pinecone index.

- Retrieval: Pinecone’s ANN search finds the vectors most similar to the query vector and returns their IDs and similarity scores.

- Presenting Results: The application uses the returned IDs to fetch the original content (the text of the documents) and presents it to the user.

Performing Your First Query

Let’s continue our previous example by querying the index we just populated. We’ll ask a question and see which of our documents Pinecone finds most relevant.

# (Assuming the code from the previous block has been run)

# The user's query

query_text = "What is used to build apps with language models?"

# 1. Embed the query

query_embedding = model.encode(query_text).tolist()

# 2. Query Pinecone

# We want the top 3 most similar documents

query_results = index.query(

vector=query_embedding,

top_k=3,

include_metadata=False # We didn't add metadata yet

)

# 3. Process and display results

print(f"Query: '{query_text}'\n")

print("Top 3 most relevant documents:")

for result in query_results['matches']:

# In a real app, you'd use the ID to look up the full document text

# from another database (like PostgreSQL, DynamoDB, etc.)

print(f" - ID: {result['id']}, Score: {result['score']:.4f}")

# Expected output would likely rank 'doc2' ("LangChain helps build...") highest.Enhancing Search with Metadata Filtering

Vector search alone is powerful, but combining it with metadata filtering is a game-changer. This allows you to restrict the search space before the vector similarity search even begins, improving both relevance and performance. For instance, you could search for “AI news” but only within articles published in the last month or from a specific source.

Let’s add metadata to our vectors and perform a filtered query.

# Let's re-upsert our data, this time with metadata

documents_with_metadata = [

{"id": "doc1", "text": "Pinecone is a vector database for AI applications.", "metadata": {"category": "database", "year": 2023}},

{"id": "doc2", "text": "LangChain helps build applications with LLMs.", "metadata": {"category": "framework", "year": 2023}},

{"id": "doc3", "text": "Vector search enables semantic understanding of data.", "metadata": {"category": "concept", "year": 2022}},

{"id": "doc4", "text": "Recent OpenAI news announced new model capabilities.", "metadata": {"category": "company_news", "year": 2024}},

{"id": "doc5", "text": "AWS SageMaker is a platform for building ML models.", "metadata": {"category": "cloud_platform", "year": 2024}}

]

# Generate embeddings and prepare for upsert with metadata

vectors_to_upsert_meta = []

for doc in documents_with_metadata:

embedding = model.encode(doc["text"]).tolist()

vectors_to_upsert_meta.append({

"id": doc["id"],

"values": embedding,

"metadata": doc["metadata"]

})

# Upsert the vectors with metadata

index.upsert(vectors=vectors_to_upsert_meta)

# Now, perform a filtered query

query_text_filtered = "What are some new developments in AI?"

query_embedding_filtered = model.encode(query_text_filtered).tolist()

# Find relevant documents but ONLY from the year 2024

filtered_results = index.query(

vector=query_embedding_filtered,

top_k=2,

filter={

"year": {"$eq": 2024}

},

include_metadata=True

)

print(f"\nFiltered Query: '{query_text_filtered}' (Year = 2024)\n")

print("Top 2 relevant documents from 2024:")

for result in filtered_results['matches']:

print(f" - ID: {result['id']}, Score: {result['score']:.4f}, Metadata: {result['metadata']}")Scaling and Integration: Unleashing Pinecone’s Full Potential

As applications grow, so do the demands on the underlying infrastructure. Pinecone offers advanced features and deep integrations with the AI ecosystem to handle this scale, reflecting the latest in Pinecone News and industry trends.

Pinecone Serverless: Efficiency and Cost-Effectiveness

A significant recent development is Pinecone Serverless. Traditionally, users had to provision “pods” of a specific size, which required careful capacity planning. Pinecone Serverless abstracts this away, offering a multi-tenant, pay-per-use architecture. This model separates storage and compute, automatically scaling resources based on demand. It’s an ideal choice for applications with variable traffic or for developers who want to minimize operational overhead, a common theme in discussions around Azure AI News and Amazon Bedrock News.

Seamless Integration with the AI Stack (LangChain & LlamaIndex)

The true power of Pinecone is amplified when used with orchestration frameworks. The latest LangChain News and LlamaIndex News consistently highlight tighter integrations with vector stores. These frameworks provide high-level abstractions for building complex chains and agents, with Pinecone acting as the “memory” component in a RAG system.

Using Pinecone with LangChain is incredibly simple, allowing you to focus on the application logic rather than the database specifics.

# First, install the necessary LangChain libraries

# pip install langchain langchain-pinecone langchain-openai langchain-community

import os

from langchain_pinecone import PineconeVectorStore

from langchain_openai import OpenAIEmbeddings

# Make sure to set your OPENAI_API_KEY environment variable

# Initialize the OpenAI embedding model

embeddings = OpenAIEmbeddings()

# Initialize the Pinecone VectorStore from an existing index

vectorstore = PineconeVectorStore(index_name=index_name, embedding=embeddings)

# Now you can use this vectorstore as a retriever in a LangChain RAG chain

# For example, let's add more text to our store via the LangChain interface

vectorstore.add_texts(

["The latest NVIDIA AI news focuses on new GPU architectures.", "Meta AI News often covers open-source model releases."],

metadatas=[{"category": "company_news"}, {"category": "company_news"}]

)

# Perform a similarity search using the LangChain retriever interface

query = "What's new with AI hardware companies?"

retrieved_docs = vectorstore.similarity_search(query, k=1)

print(f"\nQuery via LangChain: '{query}'\n")

print("Retrieved Document:")

print(retrieved_docs[0].page_content)

Production-Ready: Optimizing Your Pinecone Implementation

Moving from a prototype to a production system requires careful consideration of performance, cost, and reliability. Here are some best practices for using Pinecone effectively.

Choosing the Right Index Configuration

- Serverless vs. Pod-based: For new projects, variable workloads, or cost-sensitive applications, Serverless is often the best choice. For applications requiring the absolute lowest latency and dedicated resources, pod-based indexes might be more suitable.

- Metric Selection: The choice of distance metric (

cosine,dotproduct,euclidean) should align with your embedding model. Most text embedding models trained with contrastive loss, like those from Hugging Face News, perform best with cosine similarity. - Vector Dimensionality: Higher dimensions can capture more nuance but increase storage costs and potentially query latency. Choose a model that provides a good balance. For example, many top

Sentence Transformersmodels have dimensions between 384 and 768.

Efficient Data Handling

One of the most critical optimizations is batching. Instead of upserting or querying one vector at a time, group them into batches. This significantly reduces the number of network round-trips and improves throughput. Pinecone’s API is designed for batch operations, and you should always leverage this capability in production code.

When updating vectors, you can simply `upsert` with the same ID, and Pinecone will overwrite the existing vector. For deletions, use the `delete` operation, which can also be performed in batches.

Monitoring and Security

Use the `describe_index_stats` method to monitor your index’s vector count and fullness. This is crucial for capacity planning in pod-based indexes. Always store your Pinecone API key securely, for example, as an environment variable or using a secret management service, and never expose it in client-side code.

Conclusion: The Future of AI is Vector-Powered

Pinecone has solidified its position as a critical piece of the modern AI infrastructure, standing alongside alternatives like Milvus News, Weaviate News, and Chroma News. Its managed, scalable, and easy-to-use platform empowers developers to build sophisticated AI applications that were once the domain of large tech companies. By providing a fast and reliable “memory” layer, Pinecone enables LLMs to interact with dynamic, proprietary data, unlocking a vast range of use cases from intelligent chatbots to complex data analysis tools.

The key takeaways are clear: understanding vector embeddings is fundamental, practical implementation is straightforward with tools like the pinecone-client, and deep integration with frameworks like LangChain and LlamaIndex accelerates development. As the AI ecosystem continues to evolve, with constant updates from Google DeepMind News to Azure Machine Learning News, the role of specialized databases like Pinecone will only become more vital. The next step is for you to start building. Take the code examples from this article, connect to your own data, and begin exploring the possibilities of vector search.