MLflow’s Evolution: Mastering LLMOps with the AI Gateway and Advanced RAG Evaluation

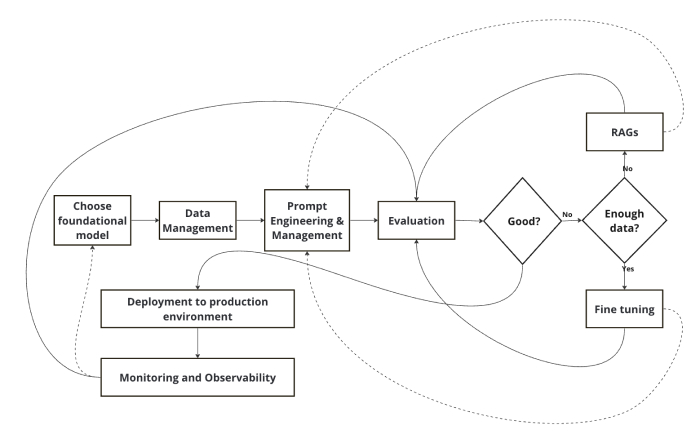

The landscape of machine learning operations (MLOps) is undergoing a seismic shift. While traditional MLOps focused on the lifecycle of models trained on structured data, the rise of Large Language Models (LLMs) has introduced a new paradigm: LLMOps. This new frontier is less about training from scratch and more about prompt engineering, model integration, and robust evaluation of generative systems. In this dynamic environment, staying updated with the latest MLflow News is crucial for any team building production-grade AI applications. MLflow, a cornerstone of the open-source MLOps ecosystem, has responded to this shift with powerful new features designed to streamline the development, deployment, and evaluation of LLM-based applications.

This article dives deep into two of the most significant recent advancements in MLflow: the AI Gateway and the enhanced evaluation suite for Retrieval-Augmented Generation (RAG) models. We’ll explore how the AI Gateway provides a unified, secure, and provider-agnostic interface to various LLM APIs, simplifying everything from key management to model swapping. We will then dissect the new RAG evaluation capabilities, offering a standardized way to measure the performance of the retrieval component that is so critical to modern AI systems. Through practical code examples and best practices, you’ll gain the knowledge to leverage these tools to build more robust, scalable, and manageable LLM applications.

The AI Gateway: A Unified Command Center for LLMs

As organizations adopt LLMs, they often find themselves juggling APIs from multiple providers. One team might use OpenAI for its powerful GPT models, another might prefer Anthropic for its focus on safety, and a third might leverage open-source models hosted via Cohere or a custom endpoint. This fragmentation creates significant operational overhead. Developers must manage different API keys, handle disparate request/response formats, and rewrite code to switch between providers. This is where the MLflow AI Gateway steps in, acting as a centralized, intelligent proxy for all your LLM interactions.

Core Concepts of the AI Gateway

The AI Gateway is a standalone server that sits between your applications and the various LLM provider APIs. It exposes a single, standardized endpoint (e.g., /v1/completions, /v1/chat, /v1/embeddings) that mirrors the popular OpenAI API format. This design choice is strategic, as it allows for seamless integration with a vast ecosystem of tools, including popular frameworks covered in LangChain News and LlamaIndex News, which are often built with the OpenAI SDK in mind.

You define “routes” in a simple YAML configuration file. Each route maps a user-defined name to a specific model from a provider, along with the necessary credentials. Your application then simply makes a request to the gateway, specifying the route name, and the gateway handles the rest—authenticating with the provider, translating the request if necessary, and returning the response in a standardized format.

Let’s see how to configure and run the gateway. First, create a configuration file named gateway-config.yaml:

routes:

- name: "completions-gpt4"

route_type: "llm/v1/completions"

model:

provider: "openai"

name: "gpt-4"

config:

openai_api_key: "$OPENAI_API_KEY"

- name: "chat-claude"

route_type: "llm/v1/chat"

model:

provider: "anthropic"

name: "claude-2"

config:

anthropic_api_key: "$ANTHROPIC_API_KEY"

- name: "embeddings-cohere"

route_type: "llm/v1/embeddings"

model:

provider: "cohere"

name: "embed-english-light-v2.0"

config:

cohere_api_key: "$COHERE_API_KEY"In this configuration, we’ve set up three routes: one for completions with OpenAI’s GPT-4, one for chat with Anthropic’s Claude 2, and one for embeddings with a Cohere model. Notice the use of environment variables ($VAR_NAME) for securely managing API keys.

![LLMOps workflow diagram - GenAI Kickoff[29of30]: LLMOps on Azure](https://aidev-news.com/wp-content/uploads/2025/12/inline_38e4f439.png)

To start the gateway server, you run a simple command in your terminal:

# Make sure your API keys are set as environment variables

# export OPENAI_API_KEY=...

# export ANTHROPIC_API_KEY=...

# export COHERE_API_KEY=...

mlflow gateway start --config-path gateway-config.yaml --host 0.0.0.0 --port 5000With the gateway running, you now have a single, secure entry point for interacting with multiple cutting-edge models from providers frequently featured in OpenAI News and Anthropic News.

Querying Models Through the AI Gateway

Once the AI Gateway is running, interacting with your configured models becomes remarkably simple and consistent. Instead of importing and initializing different client libraries for each provider, you can use a single client, like Python’s requests library or MLflow’s own gateway client, to query any route.

Practical Implementation in Python

The key benefit is code simplification and flexibility. Let’s say you want to build an application that can switch between GPT-4 and Claude 2 for chat completion. Without the gateway, this would require conditional logic and separate SDKs. With the gateway, it’s as simple as changing the route name in your request.

Here’s how you can query the `chat-claude` route we defined earlier using MLflow’s built-in Python client. This approach is cleaner and more integrated than using raw HTTP requests.

import mlflow.gateway as gateway

from mlflow.gateway import set_gateway_uri

# Set the URI of your running MLflow AI Gateway

set_gateway_uri("http://localhost:5000")

# Define the chat payload

chat_payload = {

"messages": [

{"role": "user", "content": "Explain the importance of MLOps in 50 words."}

],

"temperature": 0.7,

"max_tokens": 100,

}

try:

# Query the 'chat-claude' route

response = gateway.query("chat-claude", data=chat_payload)

print("Response from Claude:")

print(response['choices'][0]['message']['content'])

# To switch to OpenAI, you would just change the route name.

# The payload structure remains the same!

# response_gpt = gateway.query("completions-gpt4", data={"prompt": "...", ...})

except Exception as e:

print(f"An error occurred: {e}")

This standardized interaction is a game-changer for LLMOps. It allows you to A/B test different models, create fallbacks in case a provider’s API is down, or optimize for cost by routing requests to cheaper models for less critical tasks—all without changing your application code. This level of abstraction is becoming a standard practice, echoing trends seen in platforms like Amazon Bedrock News and Azure AI News, which also aim to provide unified access to a variety of foundation models.

Advanced RAG Evaluation with MLflow

Retrieval-Augmented Generation (RAG) has emerged as the dominant pattern for building LLM applications that can reason over private or up-to-date information. However, evaluating a RAG system is notoriously difficult. The quality of the final generated answer depends heavily on the quality of the initial retrieval step. If the wrong documents are retrieved, even the most powerful LLM can’t produce a correct answer. Recognizing this, MLflow has introduced a specialized evaluation component for the retrieval portion of RAG pipelines.

Measuring Retrieval Quality

MLflow’s evaluation suite now includes metrics specifically designed for information retrieval tasks. These metrics help you quantify how well your retriever is performing before the context is even passed to the LLM. Key metrics include:

- Precision@K: Out of the top K documents retrieved, what fraction are actually relevant?

- Recall@K: Out of all possible relevant documents, what fraction did you find in your top K results?

- NDCG@K (Normalized Discounted Cumulative Gain): A more sophisticated metric that rewards retrieving highly relevant documents at higher ranks.

To use these metrics, you need a “ground truth” evaluation dataset. This dataset contains a set of questions and, for each question, a list of document IDs that are considered relevant for answering it. You then run your retriever against these questions and use `mlflow.evaluate()` to compare the retrieved document IDs against the ground truth.

Let’s walk through a complete example. Imagine we have a simple RAG system that retrieves documents from a small corpus. We’ll use MLflow to evaluate its retrieval step.

import mlflow

import pandas as pd

# 1. Define a mock retriever function

# In a real-world scenario, this would query a vector DB like Pinecone, Chroma, or Milvus.

def simple_retriever(questions):

# This function simulates retrieving document IDs for a batch of questions.

retrieved_docs = []

for question in questions:

if "MLflow" in question:

retrieved_docs.append(["doc-101", "doc-105", "doc-201"]) # Simulating retrieval

elif "Spark" in question:

retrieved_docs.append(["doc-301", "doc-302", "doc-101"])

else:

retrieved_docs.append([])

return pd.Series(retrieved_docs)

# 2. Create the evaluation dataset (ground truth)

eval_data = pd.DataFrame({

"questions": [

"What is MLflow Tracking?",

"How does Spark work with MLflow?",

"What is a neural network?"

],

"ground_truth": [

["doc-101", "doc-102", "doc-105"], # Relevant docs for Q1

["doc-301", "doc-302"], # Relevant docs for Q2

["doc-404"] # Relevant docs for Q3

]

})

# 3. Run the evaluation using mlflow.evaluate()

with mlflow.start_run() as run:

results = mlflow.evaluate(

model=simple_retriever,

data=eval_data,

targets="ground_truth",

model_type="retriever", # CRITICAL: Specify the new model type

evaluators=["default"]

)

print("Evaluation Results:")

print(results.metrics)

# The results, including precision_at_k and recall_at_k,

# will be logged to the MLflow UI for this run.

When you run this code, MLflow will execute the `simple_retriever` on the `questions` column, compare its output to the `ground_truth` column, and automatically calculate metrics like `retrieval_precision_at_k` and `retrieval_recall_at_k`. These results are logged to the MLflow Tracking server, allowing you to systematically compare different retrieval strategies, embedding models, or chunking methods. This brings the same rigor and experiment tracking capabilities we use for traditional ML, as seen in tools like Weights & Biases News, to the forefront of LLMOps.

Best Practices and Ecosystem Integration

To maximize the value of these new MLflow features, it’s important to adopt best practices and understand how they fit into the broader AI ecosystem, which includes updates from PyTorch News, TensorFlow News, and the rapidly evolving world of vector databases.

Tips and Considerations

- Secure the Gateway: In a production environment, never run the AI Gateway on a publicly accessible IP without authentication. Place it behind a reverse proxy (like Nginx), enforce TLS, and use API key authentication or OAuth for client requests.

- Version Everything: Use MLflow Tracking to version not just your code, but also your gateway configurations and RAG evaluation datasets. This ensures reproducibility and allows you to trace performance changes back to specific configuration updates.

- Combine Gateway and Evaluation: Create an end-to-end evaluation pipeline. Use the AI Gateway to query an LLM to generate answers based on retrieved context, and then use `mlflow.evaluate` with LLM-as-a-judge metrics (like `faithfulness` or `relevance`) to assess the quality of the final generated text.

- Integrate with Vector Databases: Your RAG retriever will almost certainly use a vector database. The `simple_retriever` function in our example should be replaced with actual client code for services like Pinecone News, Weaviate News, or open-source libraries like Chroma News or FAISS. MLflow helps you evaluate and compare the effectiveness of these different backends.

- Automate Evaluation: Integrate RAG evaluation into your CI/CD pipeline. Whenever you update your embedding model, chunking strategy, or retrieval logic, the automated evaluation run can act as a quality gate, preventing regressions from reaching production. This aligns with modern DevOps principles and is a key theme in enterprise AI platform news from Vertex AI and AWS SageMaker.

Conclusion

The latest advancements in MLflow mark a significant step forward in maturing the field of LLMOps. The AI Gateway tackles the critical challenge of multi-provider LLM management, offering a unified, secure, and flexible abstraction layer that simplifies development and enhances operational control. Simultaneously, the introduction of dedicated RAG evaluation metrics provides a much-needed, standardized framework for measuring and improving the performance of the most common type of generative AI application today.

By embracing these tools, teams can move from ad-hoc experimentation to a systematic, engineering-driven approach for building LLM-powered systems. The ability to centrally manage model access, rigorously evaluate retrieval quality, and track every experiment provides the foundation for creating reliable, scalable, and high-performing AI products. As the AI landscape continues to evolve with news from Meta AI News and Google DeepMind News, tools like MLflow that bring structure and discipline to the development lifecycle will only become more indispensable.