Scaling the Senses: A Deep Dive into Deploying Omni-Modal AI with Modal

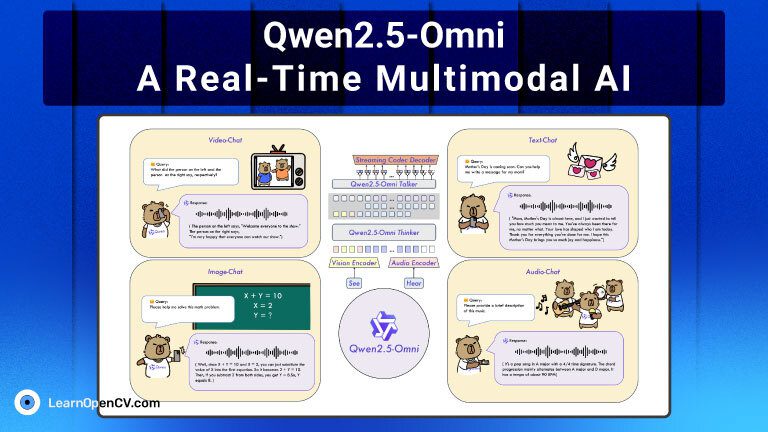

The artificial intelligence landscape is undergoing a seismic shift. For years, the focus has been on mastering individual domains: text with Large Language Models (LLMs), images with computer vision models, and audio with speech recognition. Now, we are firmly in the era of omni-modal AI: powerful models capable of understanding, reasoning about, and generating content across many data types at once. Recent announcements from Google DeepMind and Meta AI highlight models that can watch a video, listen to the audio, and answer nuanced questions about the content. While this leap in capability is exciting, it presents a formidable engineering challenge. Deploying these complex, resource-hungry models into production is a task that can overwhelm even seasoned MLOps teams.

Omni-modal models are not just large; they are intricate ecosystems of dependencies. A single model might require libraries for video processing (FFmpeg), audio manipulation (librosa), and deep learning (PyTorch, TensorFlow), all while demanding specific, high-end NVIDIA GPUs. This is where traditional deployment strategies often fall short, mired in dependency conflicts and infrastructure management overhead. This article explores a modern solution: using Modal, a serverless compute platform, to tame this complexity. We will delve into how Modal’s unique architecture provides a frictionless path from a local Python script to a scalable, production-grade omni-modal API, making cutting-edge AI more accessible than ever.

The Foundations: Understanding Modal and its Role in the Omni-Modal Revolution

At its core, Modal is a serverless platform designed for running code—from simple functions to complex machine learning models—in the cloud without managing servers. You write standard Python code, add a few decorators, and Modal handles the rest: packaging your code, provisioning the right hardware (including A100s and H100s), and executing it in a containerized environment. This paradigm is a significant departure from platforms like AWS SageMaker or Azure Machine Learning, which often require more extensive configuration of instances, networking, and storage.

Why Modal is a Game-Changer for Multi-Modal AI

The challenges of deploying omni-modal models are multi-faceted, and Modal addresses them directly:

- Dependency Management: Omni-modal models often have a sprawling list of dependencies. Modal allows you to define your entire environment in code using a modal.Image object. You can specify system packages (like ffmpeg), Python libraries from PyPI, or even build from a custom Dockerfile. This codifies your environment, ensuring perfect reproducibility and eliminating the “it works on my machine” problem.

- Hardware Accessibility: The latest models often perform best on specific hardware. The latest NVIDIA AI News might announce a new GPU, and getting access to it can be difficult. Modal provides on-demand access to a wide range of GPUs, from cost-effective T4s to powerful H100s, allowing you to select the perfect hardware for your inference or fine-tuning task without any procurement overhead.

- Effortless Scaling: A single user request might trigger a complex pipeline of tasks. Modal functions can call other functions and use primitives like .map() to parallelize work across hundreds or thousands of containers instantly, providing seamless horizontal scaling.
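As a minimal sketch of the environment-as-code idea, the snippet below layers one system package and a few Python packages onto a slim Debian base. The package lists are illustrative assumptions, not the requirements of any particular model, and the modal import is deferred into the function only so that the lists can be inspected on a machine without Modal installed.

```python
# Illustrative package lists (assumptions, not requirements of a specific model).
SYSTEM_PACKAGES = ["ffmpeg"]  # installed with apt inside the container
PYTHON_PACKAGES = ["torch", "transformers", "librosa"]  # installed with pip


def build_image():
    """Return a modal.Image with the packages above layered onto Debian slim."""
    import modal  # deferred so this module still imports without Modal installed

    return (
        modal.Image.debian_slim(python_version="3.10")
        .apt_install(*SYSTEM_PACKAGES)
        .pip_install(*PYTHON_PACKAGES)
    )
```

In a real app you would typically build the image at module level and pass it to your Modal app or function definition.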

Your First Modal App: A Simple Image Captioning Example

Let’s see how simple it is to get started. We’ll build a web endpoint that takes an image URL and returns a caption using a model from the Hugging Face Hub. This example highlights the core concepts of Modal in just a few lines of code.

First, ensure you have Modal installed (pip install modal) and set up (modal setup).

import modal

# Define the environment for our function.
# We start from a slim Debian image and install the necessary Python libraries.
# Note: modal.App was called modal.Stub in older Modal releases.
app = modal.App(
    "image-captioner",
    image=modal.Image.debian_slim().pip_install(
        "torch", "transformers", "requests", "Pillow"
    ),
)

MODEL_NAME = "Salesforce/blip-image-captioning-large"


# Use a container lifecycle hook to load the model into memory once when the container starts.
# This avoids reloading the model on every single request, which is crucial for performance.
@app.cls(gpu="T4")
class ImageCaptioner:
    # @modal.enter() replaces the older __enter__ method for this purpose.
    @modal.enter()
    def load_model(self):
        from transformers import BlipForConditionalGeneration, BlipProcessor

        print("🤖 Loading model and processor...")
        self.processor = BlipProcessor.from_pretrained(MODEL_NAME)
        self.model = BlipForConditionalGeneration.from_pretrained(MODEL_NAME).to("cuda")
        print("✅ Model loaded successfully!")

    @modal.method()
    def generate_caption(self, image_url: str) -> str:
        """
        Takes an image URL, downloads it, and generates a descriptive caption.
        """
        from io import BytesIO

        import requests
        from PIL import Image

        try:
            # Download the full image first; fail early on HTTP errors or a hung server.
            response = requests.get(image_url, timeout=30)
            response.raise_for_status()
            raw_image = Image.open(BytesIO(response.content)).convert("RGB")
            inputs = self.processor(raw_image, return_tensors="pt").to("cuda")
            out = self.model.generate(**inputs, max_new_tokens=50)
            return self.processor.decode(out[0], skip_special_tokens=True)
        except Exception as e:
            return f"Error processing image: {e}"


# This is the entrypoint for local execution.
# You can run this with `modal run caption_app.py --image-url "some_url"`
@app.local_entrypoint()
def main(image_url: str):
    captioner = ImageCaptioner()
    caption = captioner.generate_caption.remote(image_url)
    print(f"📷 Image URL: {image_url}")
    print(f"🎭 Generated Caption: {caption}")

In this example, passing gpu="T4" to the class decorator tells Modal to run this class in a container with a T4 GPU. The lifecycle hook that loads the model runs once when the container boots, so the heavyweight model from the Hugging Face Hub is loaded into memory a single time. Subsequent calls to generate_caption on that container skip the load and are much faster because the model is already warm. To deploy this as an HTTP API, you would expose the method through one of Modal’s web endpoint decorators and run modal deploy.
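To make the benefit of loading once per container concrete, here is a plain-Python stand-in that uses neither Modal nor a real model. FakeCaptioner, total_seconds, and all timing figures are hypothetical; the sketch only counts how often the "model" is loaded and compares the total time of the two strategies.

```python
class FakeCaptioner:
    """Stand-in for a model-serving class; counts how often the 'model' loads."""

    load_count = 0  # class-level counter shared by all instances

    def __init__(self):
        self.model = None

    def load(self):
        # Plays the role of the container-start hook: run once, keep the result.
        FakeCaptioner.load_count += 1
        self.model = lambda url: f"caption for {url}"

    def generate_caption(self, image_url: str) -> str:
        # Request handler: reuses the already-loaded model.
        return self.model(image_url)


def total_seconds(num_requests: int, load_s: float, infer_s: float,
                  reload_each_time: bool) -> float:
    """Total time for a run of requests under the two loading strategies."""
    loads = num_requests if reload_each_time else 1
    return loads * load_s + num_requests * infer_s


captioner = FakeCaptioner()
captioner.load()  # once, at "container start"
captions = [captioner.generate_caption(f"img-{i}.png") for i in range(3)]
```

With an assumed 20-second load and 0.5-second inference, total_seconds(100, 20.0, 0.5, True) gives 2050 seconds, while total_seconds(100, 20.0, 0.5, False) gives 70 seconds.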

Building a Production-Ready Omni-Modal Endpoint

Moving beyond a simple function, let’s design a more robust service. Imagine an API that can process audio: it transcribes the audio and then uses the transcription as a prompt to generate a relevant image. This requires orchestrating two different models—an audio transcription model (like OpenAI’s Whisper) and a text-to-image model (like Stable Diffusion).

Defining a Multi-Purpose Environment

A key advantage of Modal is defining a single environment that can serve multiple models. This is crucial for omni-modal applications where different components have different dependencies.

import modal
from pathlib import Path

# Define a shared image for both our audio and image models.
# This includes libraries for audio processing, image generation, and the core ML frameworks.
# Pin versions as needed; the PyTorch release notes help in choosing them.
app_image = (
    modal.Image.debian_slim(python_version="3.10")
    .apt_install("ffmpeg")  # the Whisper pipeline calls the ffmpeg binary to decode audio bytes
    .pip_install(
        "torch",
        "torchaudio",
        "transformers",
        "diffusers",
        "accelerate",
        "ffmpeg-python",
        "fastapi[standard]",  # For web endpoint data handling, including file uploads
    )
)

# Note: modal.App was called modal.Stub in older Modal releases.
app = modal.App("omni-modal-pipeline", image=app_image)

# Create a persistent Volume to cache model weights.
# This significantly speeds up container cold starts after the first download.
model_cache_volume = modal.Volume.from_name("model-cache-vol", create_if_missing=True)
MODEL_DIR = Path("/cache")

Here, we’ve created a reusable image and a modal.Volume. This Volume acts as a shared, persistent network file system. We’ll use it to store our model weights, so they only need to be downloaded once, not every time a new container starts.

Implementing the Inference Pipeline

Now, we’ll implement the two parts of our pipeline as separate Modal classes. We use the @modal.enter() hook and the Volume to efficiently load models.

# fastapi is imported at module level, so it must also be installed locally.
from fastapi import File, Response


# --- 1. Audio Transcription Component ---
@app.cls(gpu="A10G", volumes={str(MODEL_DIR): model_cache_volume})
class WhisperTranscriber:
    @modal.enter()
    def load_model(self):
        import torch
        from transformers import pipeline

        print("🎤 Loading Whisper model...")
        self.transcriber = pipeline(
            "automatic-speech-recognition",
            model="openai/whisper-large-v3",
            torch_dtype=torch.float16,
            device="cuda",
            # cache_dir is a from_pretrained option, so it is passed via model_kwargs.
            model_kwargs={"cache_dir": str(MODEL_DIR)},
        )
        print("✅ Whisper model loaded.")

    @modal.method()
    def transcribe(self, audio_bytes: bytes) -> str:
        print("📣 Transcribing audio...")
        result = self.transcriber(audio_bytes)
        return result["text"]


# --- 2. Text-to-Image Component ---
@app.cls(gpu="A10G", volumes={str(MODEL_DIR): model_cache_volume})
class ImageGenerator:
    @modal.enter()
    def load_model(self):
        import torch
        from diffusers import AutoPipelineForText2Image

        print("🖼️ Loading Stable Diffusion model...")
        self.pipe = AutoPipelineForText2Image.from_pretrained(
            "stabilityai/sdxl-turbo",
            torch_dtype=torch.float16,
            variant="fp16",
            cache_dir=str(MODEL_DIR),
        ).to("cuda")
        print("✅ Stable Diffusion model loaded.")

    @modal.method()
    def generate(self, prompt: str) -> bytes:
        from io import BytesIO

        print(f"🎨 Generating image for prompt: '{prompt}'")
        image = self.pipe(prompt=prompt, num_inference_steps=1, guidance_scale=0.0).images[0]

        # Return the image as raw PNG bytes
        buffer = BytesIO()
        image.save(buffer, format="PNG")
        return buffer.getvalue()


# --- 3. The web endpoint that ties it all together ---
# Note: modal.fastapi_endpoint was called modal.web_endpoint in earlier releases.
@app.function()
@modal.fastapi_endpoint(method="POST")
def process_audio_to_image(audio: bytes = File()):
    """
    An API endpoint that accepts an audio file, transcribes it,
    and returns a generated image based on the transcription.
    """
    # Create instances of our classes
    transcriber = WhisperTranscriber()
    generator = ImageGenerator()

    # Call the methods remotely. The second call needs the first call's output,
    # so the two steps run one after the other.
    print("🚀 Starting pipeline...")
    transcribed_text = transcriber.transcribe.remote(audio)
    image_bytes = generator.generate.remote(transcribed_text)
    print("🏁 Pipeline complete. Returning image.")
    return Response(content=image_bytes, media_type="image/png")

This code defines a complete, deployable pipeline. When a POST request with an audio file hits the endpoint, Modal spins up containers for both the WhisperTranscriber and ImageGenerator if they aren’t already running. The two steps run in sequence, because image generation needs the transcription as its prompt, and the endpoint then returns the final PNG to the caller. This is a powerful demonstration of orchestrating a multi-step, multi-modal workflow with minimal boilerplate.
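For completeness, here is one way a client might call such an endpoint using only the standard library. This is a sketch under assumptions: the upload is sent as multipart/form-data under a form field named audio (mirroring the endpoint’s parameter name), the file name clip.wav is arbitrary, and the URL you pass in is whatever address your own deployment reports. build_multipart and build_request are hypothetical helpers, not part of Modal.

```python
import urllib.request
import uuid


def build_multipart(field: str, filename: str, payload: bytes,
                    content_type: str = "audio/wav") -> tuple[bytes, str]:
    """Encode one file as multipart/form-data; returns (body, Content-Type header)."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        f"Content-Type: {content_type}\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return head + payload + tail, f"multipart/form-data; boundary={boundary}"


def build_request(url: str, audio: bytes) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying the audio bytes."""
    body, header = build_multipart("audio", "clip.wav", audio)
    return urllib.request.Request(
        url, data=body, method="POST", headers={"Content-Type": header}
    )
```

To send it, pass the request to urllib.request.urlopen and write the response bytes to a .png file. Expect the first call to take noticeably longer while the containers start and the models load.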

Advanced Modal Techniques for Complex Pipelines

For truly complex, production-grade systems, you’ll need to leverage more of Modal’s advanced features. This includes large-scale parallel processing and integration with the broader MLOps ecosystem, including vector databases and orchestration frameworks.

Scaling Out with .map() for Batch Processing

Imagine you need to process an entire video, not just a single image. A common approach is to extract frames and run an object detection model on each one. This is an “embarrassingly parallel” problem, and Modal’s .map() is designed for it.

# (Assuming an 'ObjectDetector' class is defined similar to the examples above,
# and that `app` is the modal.App created earlier; older code names it `stub`.)
@app.function()
def process_batch_of_frames(frame_batch: list[bytes]):
    """Processes a list of video frames in parallel using .map()."""
    detector = ObjectDetector()
    # .map() distributes the items in the list across multiple containers
    # and runs the 'detect_objects' method on each one.
    results = list(detector.detect_objects.map(frame_batch, order_outputs=True))
    return results

# In a real application, you'd have a function to extract frames from a video
# and then call process_batch_of_frames.remote(all_frames).

This pattern is incredibly powerful. With a single call, you can fan out thousands of inputs across many GPU containers, subject to the concurrency limits of your account. Doing the same with tools like Ray or Apache Spark typically means setting up and managing a cluster, whereas in Modal it is a native primitive.
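If the ordering behavior is unfamiliar, a local analogue may help. The sketch below uses the standard library’s thread pool rather than Modal, with a hypothetical detect_objects stand-in. Like the order_outputs=True call in the snippet above, Executor.map hands results back in input order, whichever worker finishes first.

```python
from concurrent.futures import ThreadPoolExecutor


def detect_objects(frame: bytes) -> int:
    """Hypothetical stand-in for a detector: 'finds' one object per byte."""
    return len(frame)


def process_frames(frames: list[bytes], max_workers: int = 4) -> list[int]:
    """Fan the frames out to workers and collect results in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map yields results in the order of the inputs,
        # regardless of which worker finishes first.
        return list(pool.map(detect_objects, frames))


frames = [b"a", b"bbb", b"cc"]
results = process_frames(frames)
```

The difference in Modal is where the work runs: each input is dispatched to a container (with a GPU, if requested) instead of a local thread.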

Integrating with Vector Databases for RAG

Omni-modal models are often used in Retrieval-Augmented Generation (RAG) systems. For instance, you could embed a library of images and text descriptions into a vector database like Pinecone, Weaviate, or Milvus. A user could then provide a new image, and the system would find the most visually similar items.

A Modal function can easily connect to these services. You would use modal.Secret to securely store your database API keys.

import modal

# Note: modal.App was called modal.Stub in older Modal releases.
app = modal.App(
    "rag-example",
    image=modal.Image.debian_slim().pip_install("pinecone"),
)

# Store your Pinecone API key securely in a Modal Secret
# You can create this via the Modal UI or CLI:
# modal secret create pinecone-secret PINECONE_API_KEY=...

@app.function(secrets=[modal.Secret.from_name("pinecone-secret")])
def find_similar_images(image_embedding: list[float]):
    import os

    from pinecone import Pinecone

    # Pinecone client v3 and later replaced pinecone.init() with a client object.
    pc = Pinecone(api_key=os.environ["PINECONE_API_KEY"])
    index = pc.Index("image-index")
    query_response = index.query(
        vector=image_embedding,
        top_k=5,
        include_metadata=True,
    )
    return query_response["matches"]

# This function would be part of a larger pipeline orchestrated by a framework
# like LangChain or LlamaIndex, whose integrations can be called from inside
# a Modal function.

This modular approach allows you to build sophisticated RAG pipelines where different components (embedding, querying, synthesis) are handled by dedicated, scalable serverless functions.
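To show what the query step computes conceptually, here is a brute-force version in plain Python. It is a sketch, not how a hosted vector database works internally: such services generally use approximate nearest-neighbor indexes, and the similarity metric depends on how the index was configured. The toy index and its two-dimensional embeddings are made up for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], index: dict[str, list[float]],
          k: int = 2) -> list[tuple[str, float]]:
    """Score every stored vector against the query and keep the k best."""
    scored = [(item_id, cosine_similarity(query, vec)) for item_id, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


# Made-up 2-D embeddings; real image embeddings have hundreds of dimensions.
toy_index = {"cat.png": [1.0, 0.0], "dog.png": [0.8, 0.6], "car.png": [0.0, 1.0]}
matches = top_k([1.0, 0.1], toy_index, k=2)
```

Here the query points almost along the first axis, so cat.png ranks first and dog.png second.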

Best Practices, Optimization, and the Broader Ecosystem

Deploying a model is just the beginning. To run it efficiently and reliably in production, consider these best practices.

Performance and Cost Optimization

- Minimize Cold Starts: Use the @modal.enter() lifecycle hook (or the older __enter__ method) to load models once per container. This is the single most important optimization for responsive API endpoints.

- Right-Sized Hardware: Don’t default to the most powerful GPU. For many inference tasks, a T4 or L4 GPU is far more cost-effective than an A100. Profile your model to understand its memory and compute requirements.

- Use Volumes for Caching: As shown, use modal.Volume to cache model weights. This dramatically reduces the time it takes for new containers to become ready, as they can skip the lengthy download process.

- Keep Containers Warm: For latency-sensitive applications, you can configure a keep_warm parameter (renamed min_containers in newer Modal releases) in your function definition to ensure a minimum number of containers are always running and ready to accept requests.
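The right-sizing advice comes down to simple arithmetic once you have measured latencies. The sketch below assumes per-second billing on a single GPU and ignores batching, idle time, and cold starts. The prices and latencies are placeholders for illustration, not real quotes for any GPU.

```python
def cost_per_1k_requests(price_per_hour: float, seconds_per_request: float) -> float:
    """Cost of 1,000 sequential requests on one GPU billed per second of use."""
    price_per_second = price_per_hour / 3600
    return 1000 * seconds_per_request * price_per_second


# Placeholder numbers for illustration only; substitute your own measured
# latencies and your provider's current prices.
small_gpu = cost_per_1k_requests(price_per_hour=0.60, seconds_per_request=1.2)
large_gpu = cost_per_1k_requests(price_per_hour=3.60, seconds_per_request=0.4)
```

Under these made-up figures the larger GPU is three times faster per request but six times the hourly price, so it costs about twice as much per thousand requests.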

CI/CD and MLOps Integration

Modal is designed to fit into modern software development workflows. The modal deploy command can be easily integrated into a GitHub Actions or GitLab CI pipeline to automate deployments on every merge to your main branch. For observability, you can integrate logging and monitoring tools within your Python code. For a complete MLOps solution, you might track experiments with Weights & Biases or MLflow during training, and then use Modal for the final deployment step, creating a seamless path from research to production.
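A CI job needs little more than credentials and one command. The helper below is a sketch of a deploy step you might call from a pipeline script. It assumes the job exposes Modal credentials through the MODAL_TOKEN_ID and MODAL_TOKEN_SECRET environment variables; the function names are hypothetical, and caption_app.py is the file name used earlier in this article.

```python
import os
import subprocess
import sys

# Environment variables the Modal CLI reads for token-based authentication.
REQUIRED_ENV = ("MODAL_TOKEN_ID", "MODAL_TOKEN_SECRET")


def missing_credentials(env: dict) -> list[str]:
    """Return the names of required variables that are unset or empty."""
    return [name for name in REQUIRED_ENV if not env.get(name)]


def build_deploy_command(app_file: str) -> list[str]:
    """Command line for deploying one Modal app file."""
    return ["modal", "deploy", app_file]


def deploy(app_file: str) -> int:
    """Run `modal deploy` if credentials are present; return an exit code."""
    missing = missing_credentials(dict(os.environ))
    if missing:
        print(f"Missing environment variables: {', '.join(missing)}", file=sys.stderr)
        return 1
    return subprocess.run(build_deploy_command(app_file)).returncode
```

A pipeline step would call deploy("caption_app.py") and fail the job on a non-zero return value.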

The Evolving Inference Landscape

The world of AI inference is evolving rapidly. Platforms like Modal, Replicate, and RunPod are abstracting away infrastructure. At the same time, tools like Ollama are making it easier to run powerful models locally. Modal provides a perfect bridge: you can develop and test against a model locally, and then use the exact same code to deploy it to the cloud for scalable inference. Furthermore, you can use Modal to run containers with highly optimized inference servers like NVIDIA’s Triton Inference Server or frameworks like vLLM for maximum throughput.

Conclusion: The Future is Omni-Modal and Serverless

The rise of omni-modal AI represents a new frontier, one that demands a corresponding evolution in our MLOps tools and practices. The complexity of managing diverse dependencies, specialized hardware, and dynamic scaling requirements can no longer be efficiently handled by traditional, server-full approaches. As we’ve explored, serverless compute platforms like Modal offer a compelling solution by abstracting away this infrastructure complexity entirely.

By defining environments as code, providing on-demand access to a spectrum of GPUs, and offering simple primitives for scaling and orchestration, Modal empowers developers and data scientists to focus on what truly matters: building innovative applications with these powerful new models. Whether you are creating a real-time audio-to-image pipeline, a batch video analysis system, or a sophisticated RAG application, the path from concept to scalable production deployment has never been more direct. As you embark on your next omni-modal project, consider leveraging a serverless approach to accelerate your development and unlock the full potential of this exciting new wave of artificial intelligence.