Building High-Integrity Data Services with FastAPI: From Validation to Asynchronous Tasks

The Rise of High-Performance, Data-Centric APIs with FastAPI

In today’s interconnected digital landscape, the reliability and integrity of data have become paramount. From financial systems processing transactions to AI platforms serving models, the backend services that power these applications must be fast, robust, and, above all, trustworthy. This is where modern web frameworks shine, and leading the charge in the Python ecosystem is FastAPI. Built on Starlette for performance and Pydantic for data validation, FastAPI provides developers with the tools to create highly efficient and secure APIs with minimal boilerplate.

FastAPI's recent story isn't just about new features; it's about the inventive ways developers are using it to solve complex problems. We're seeing a trend towards building “high-integrity” services: applications responsible for validating, processing, and periodically pushing critical data to other systems, such as public ledgers or enterprise data warehouses. These services demand more than speed; they require strict data validation, resilient handling of external communications, and the ability to perform tasks on a schedule. This article walks through how to use FastAPI's features to build such a system, from foundational data validation to asynchronous task processing and production best practices.

Core Concepts: The Foundation of Data Integrity with Pydantic

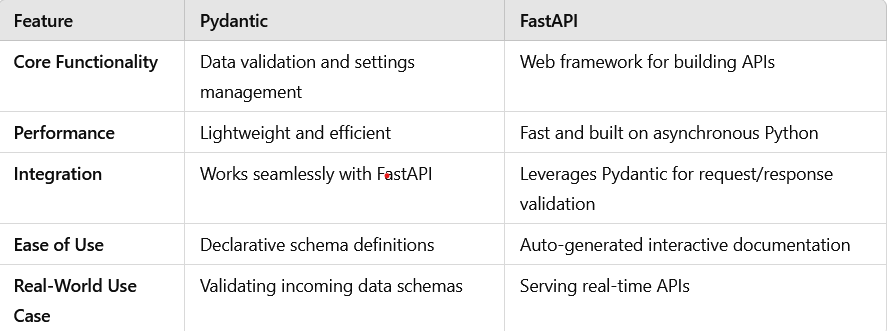

At the heart of any high-integrity system is the guarantee that the data it accepts and processes is correct and well-structured. FastAPI achieves this seamlessly through its integration with Pydantic, a data validation library that uses Python type hints to define data schemas. This approach not only enforces data correctness at the API boundary but also automatically generates OpenAPI and JSON Schema documentation, making your API self-describing and easy for consumers to understand.

Declarative Data Validation with Pydantic Models

Instead of writing verbose and error-prone manual validation logic, you define the “shape” of your data using a Pydantic model. FastAPI then uses this model to validate incoming request bodies, query parameters, and more. If the incoming data doesn’t conform to the schema—for instance, a required field is missing or a string is provided where an integer is expected—FastAPI automatically returns a clear, detailed 422 Unprocessable Entity error response.

Consider a service that needs to validate collateral data before processing. The data must include an asset identifier, a quantity, and a valuation. Here’s how you would define this with a Pydantic model:

```python
from decimal import Decimal

from fastapi import FastAPI
from pydantic import BaseModel, ConfigDict, Field

# Initialize the FastAPI application
app = FastAPI(
    title="Collateral Validation Service",
    description="An API for validating and submitting collateral data.",
    version="1.0.0",
)


# Pydantic model for incoming collateral data
class CollateralPayload(BaseModel):
    """
    Defines the schema for collateral data.

    - asset_id: A unique identifier for the asset (e.g., 'BTC', 'ETH').
    - quantity: The amount of the asset, must be a positive number.
    - valuation_usd: The value of the collateral in USD, must be positive.
    """

    asset_id: str = Field(..., min_length=1, max_length=10, description="The asset's unique ticker symbol.")
    quantity: Decimal = Field(..., gt=0, description="The quantity of the asset held as collateral.")
    valuation_usd: Decimal = Field(..., gt=0, description="The total valuation of the asset in USD.")

    # Pydantic v2 configuration: adds an example payload to the OpenAPI docs.
    # (Pydantic v1 used `class Config: schema_extra = {...}` instead.)
    model_config = ConfigDict(
        json_schema_extra={
            "examples": [
                {"asset_id": "ETH", "quantity": "10.5", "valuation_usd": "35000.75"}
            ]
        }
    )


@app.post("/validate-collateral/")
async def validate_and_submit_collateral(payload: CollateralPayload):
    """
    Accepts collateral data, validates it against the schema,
    and returns a confirmation message.
    """
    # At this point, FastAPI has already validated the payload.
    # If the data was invalid, the client would have received a 422 error.
    print(f"Validated data for {payload.asset_id}: Quantity={payload.quantity}, Value=${payload.valuation_usd}")

    # In a real application, you would now add this data to a processing queue
    # or save it to a database.
    return {"status": "success", "message": "Collateral data validated successfully.", "data": payload}
```

In this example, the `CollateralPayload` model ensures that `asset_id` is a string of 1 to 10 characters and that both `quantity` and `valuation_usd` are positive decimal numbers. This declarative approach is clean, readable, and very effective at keeping bad data out of your system.
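Because the constraints live on the model, you can inspect them without starting a server. The sketch below assumes Pydantic v2, where `model_json_schema()` and `model_validate()` are the relevant methods. It prints the JSON Schema that FastAPI folds into its OpenAPI document, and the `ValidationError` details that FastAPI turns into the body of a 422 response. The model is repeated (without its documentation fields) so the snippet runs on its own.

```python
from decimal import Decimal

from pydantic import BaseModel, Field, ValidationError


class CollateralPayload(BaseModel):
    asset_id: str = Field(..., min_length=1, max_length=10)
    quantity: Decimal = Field(..., gt=0)
    valuation_usd: Decimal = Field(..., gt=0)


# 1. The schema: type hints and Field() constraints become JSON Schema keywords.
schema = CollateralPayload.model_json_schema()
print(schema["required"])                # fields declared with `...` are required
print(schema["properties"]["asset_id"])  # includes minLength / maxLength

# 2. Validation: a well-formed payload is parsed into typed values...
ok = CollateralPayload.model_validate(
    {"asset_id": "ETH", "quantity": "10.5", "valuation_usd": "35000.75"}
)
print(type(ok.quantity), ok.quantity)  # the string "10.5" arrives as a Decimal

# ...while a bad one raises ValidationError listing every failing field at once.
try:
    CollateralPayload.model_validate({"asset_id": "", "quantity": -1})
except ValidationError as exc:
    for err in exc.errors():
        print(err["loc"], err["type"])
```

Note that all three problems (empty `asset_id`, negative `quantity`, missing `valuation_usd`) are reported together, which is why a client gets a complete list of errors in a single 422 response instead of fixing them one at a time.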

Implementation Details: Building a Robust Asynchronous Pipeline

Once data is validated, the next step is to process it. This often involves interacting with external systems like databases, message queues, or other APIs. These I/O-bound operations are the primary source of latency in web applications. FastAPI is built on Starlette, an ASGI (Asynchronous Server Gateway Interface) framework, which lets it handle many such operations concurrently using Python's async/await syntax. For I/O-bound workloads this can substantially improve throughput, because the server is not left idle while it waits on the network.
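To see why this matters, the stdlib-only sketch below simulates three I/O calls with `asyncio.sleep` as a stand-in for real network latency (`fake_io_call` is a hypothetical helper, not part of FastAPI). Awaited one after another, the calls take roughly three times as long as when they are scheduled together with `asyncio.gather`, because a single event loop can overlap their waiting periods. The same mechanism lets an ASGI server keep serving other requests while one request waits on I/O.

```python
import asyncio
import time


async def fake_io_call(delay: float) -> float:
    """Stand-in for a network or database call; awaiting it yields to the event loop."""
    await asyncio.sleep(delay)
    return delay


async def run_sequentially(n: int, delay: float) -> float:
    start = time.perf_counter()
    for _ in range(n):
        await fake_io_call(delay)  # each call starts only after the previous one finishes
    return time.perf_counter() - start


async def run_concurrently(n: int, delay: float) -> float:
    start = time.perf_counter()
    # gather schedules all calls at once, so their waits overlap on one event loop
    await asyncio.gather(*(fake_io_call(delay) for _ in range(n)))
    return time.perf_counter() - start


if __name__ == "__main__":
    seq = asyncio.run(run_sequentially(3, 0.1))
    conc = asyncio.run(run_concurrently(3, 0.1))
    print(f"sequential: {seq:.2f}s, concurrent: {conc:.2f}s")
```

The gain only appears when the awaited work actually releases the event loop; replacing `asyncio.sleep` with the blocking `time.sleep` would make both versions equally slow.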

Leveraging Asynchronous Operations and Dependency Injection

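FastAPI's support for `async def` endpoints lets the server handle other requests while one request awaits a slow I/O operation. This only works if the awaited calls are genuinely non-blocking: a blocking call such as `time.sleep()` or a synchronous database driver inside an `async def` endpoint stalls every request on that event loop. (For blocking libraries, declare the endpoint with plain `def`, and FastAPI will run it in a thread pool.) To manage resources like database connections or API clients effectively, FastAPI includes a powerful and intuitive Dependency Injection (DI) system.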

Let’s extend our example by creating a dependency that provides a (simulated) database session and an asynchronous endpoint that uses it to save the validated data.

```python
import asyncio
from decimal import Decimal
from typing import Any, Dict

from fastapi import Depends, FastAPI, HTTPException
from pydantic import BaseModel, Field

# --- Mock Database ---
# In a real app, this would be a connection to PostgreSQL, MongoDB, etc.
mock_db = {"collateral_records": {}}


# --- Dependency Injection ---
# A dependency is just a callable that can itself depend on other dependencies.
# This one simulates getting a database session.
async def get_db_session():
    """A dependency that yields a database session."""
    print("Opening database connection...")
    try:
        yield mock_db
    finally:
        # Teardown: runs once FastAPI has finished handling the request
        print("Closing database connection.")


# --- API Application ---
app = FastAPI()


# Same schema as in the previous example (documentation fields omitted)
class CollateralPayload(BaseModel):
    asset_id: str = Field(..., min_length=1, max_length=10)
    quantity: Decimal = Field(..., gt=0)
    valuation_usd: Decimal = Field(..., gt=0)


@app.post("/collateral/")
async def save_collateral_record(
    payload: CollateralPayload,
    db: Dict[str, Any] = Depends(get_db_session),
):
    """
    Validates and saves a new collateral record to the database.
    This is an asynchronous operation.
    """
    record_id = f"{payload.asset_id}-{payload.quantity}"

    # Simulate an async database round trip
    await asyncio.sleep(0.1)  # Represents network latency to a real DB

    # Check and write with no `await` in between, so two concurrent requests
    # cannot both pass the duplicate check. (With a real database, enforce
    # this with a unique constraint instead.)
    if record_id in db["collateral_records"]:
        raise HTTPException(status_code=409, detail="Record already exists.")
    db["collateral_records"][record_id] = payload.model_dump()

    return {"status": "success", "record_id": record_id, "data": payload}


@app.get("/collateral/{record_id}")
async def get_collateral_record(
    record_id: str,
    db: Dict[str, Any] = Depends(get_db_session),
):
    """Retrieves a collateral record by its ID."""
    record = db["collateral_records"].get(record_id)
    if not record:
        raise HTTPException(status_code=404, detail="Record not found.")
    return {"data": record}
```

The `Depends(get_db_session)` in the function signature tells FastAPI to run `get_db_session` before the endpoint logic and to inject the value it yields (here, `mock_db`) into the `db` parameter; the code after `yield` then runs as cleanup once the request has been handled. This pattern decouples your business logic from resource management, making your code cleaner, easier to test, and more maintainable.

Advanced Techniques: Scheduling and Background Processing

Many high-integrity systems require tasks to be executed outside the standard request-response cycle. This could be a periodic job that aggregates data, a fire-and-forget task to send a notification, or a long-running process to train a machine learning model. Backends for AI workloads, such as LangChain agents or Hugging Face Transformers inference jobs, are another common example: their long-running steps are natural candidates for background processing.

Using `BackgroundTasks` for Simple, Out-of-Band Operations

FastAPI provides a simple, built-in mechanism for running tasks in the background with the `BackgroundTasks` class. These tasks run in the same process as the main application, after the response has been sent: `async def` tasks run on the event loop, while plain `def` tasks are run in a thread pool. They are ideal for lightweight operations that shouldn't delay the client's response, such as sending an email or logging an event to an external service.

Let’s add a background task to our endpoint that simulates pushing the validated data to a public, external system.

```python
import asyncio
from decimal import Decimal

from fastapi import BackgroundTasks, FastAPI
from pydantic import BaseModel, Field

app = FastAPI()


# Same schema as in the previous examples
class CollateralPayload(BaseModel):
    asset_id: str = Field(..., min_length=1, max_length=10)
    quantity: Decimal = Field(..., gt=0)
    valuation_usd: Decimal = Field(..., gt=0)


async def push_to_public_ledger(asset_id: str, data: dict):
    """
    Simulates a slow, I/O-bound task of pushing data to an external service.
    This function will run in the background.
    """
    print(f"Starting background task: Pushing {asset_id} to public ledger...")
    # Simulate a network call that takes 5 seconds
    await asyncio.sleep(5)
    print(f"Background task complete: Successfully pushed {asset_id}.")
    # In a real system, you might interact with a blockchain or a public API here.


@app.post("/collateral/submit-and-push")
async def submit_and_push_to_ledger(
    payload: CollateralPayload,
    background_tasks: BackgroundTasks,
):
    """
    Accepts collateral data, immediately returns a response,
    and triggers a background task to push the data to a public ledger.
    """
    # The client gets this response immediately
    response_message = f"Accepted collateral for {payload.asset_id}. Pushing to ledger in the background."

    # Register the long-running task; it executes after the response is sent
    background_tasks.add_task(push_to_public_ledger, payload.asset_id, payload.model_dump())

    return {"status": "accepted", "message": response_message}
```

When a request hits this endpoint, FastAPI registers `push_to_public_ledger` as a background task attached to the response, sends the response to the client, and only then runs the task. The 5-second `asyncio.sleep` call therefore happens *after* the client has received its response, which keeps request latency low and avoids client-side timeouts.

For More Demanding Workloads: Celery and ARQ

While `BackgroundTasks` is useful, it has limitations. Because tasks run inside the web server process, a CPU-intensive task can block the server's event loop (or compete with it for the CPU), and if the server crashes or restarts, any pending tasks are lost. For mission-critical, periodic, or heavy-duty background jobs, such as running inference with a PyTorch model or processing a large dataset with Ray, a dedicated task queue like Celery or ARQ is the recommended approach. These tools run jobs in separate worker processes and provide isolation, horizontal scaling, retries, and scheduled (periodic) jobs, making your system far more resilient.
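Celery and ARQ both provide configurable retries. To make the idea concrete without a broker, here is a plain-`asyncio` sketch of retry with exponential backoff and jitter. It is *not* the Celery or ARQ API, and because it runs in-process it inherits the limitations of `BackgroundTasks` noted above (a crash still loses the task). The helper name `retry_with_backoff` and its defaults are illustrative assumptions.

```python
import asyncio
import random
from typing import Awaitable, Callable, Tuple, Type, TypeVar

T = TypeVar("T")


async def retry_with_backoff(
    operation: Callable[[], Awaitable[T]],
    *,
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
) -> T:
    """Await `operation()`, retrying transient failures with exponential backoff.

    Delays grow as base_delay * 2**(attempt - 1), are capped at max_delay, and are
    scaled by a random factor ("jitter") so many clients don't retry in lockstep.
    The final failure is re-raised to the caller.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return await operation()
        except retry_on as exc:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            delay *= random.uniform(0.5, 1.0)
            print(f"Attempt {attempt} failed ({exc!r}); retrying in {delay:.2f}s")
            await asyncio.sleep(delay)
    raise RuntimeError("max_attempts must be at least 1")
```

Inside the background task from the previous section, the ledger call could be wrapped as `await retry_with_backoff(lambda: push_to_public_ledger(asset_id, data))`. Only exceptions listed in `retry_on` are retried; validation errors and other bugs fail immediately, which is usually what you want.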

Best Practices and Optimization for Production

Building a robust service goes beyond writing the core logic. Deploying and maintaining a high-integrity application requires attention to configuration, testing, security, and observability.

Configuration and Secrets Management

Avoid hardcoding settings like database URLs or API keys. Use `BaseSettings` to load configuration from environment variables; with Pydantic v2 it is provided by the separate `pydantic-settings` package (in Pydantic v1 it was part of `pydantic` itself). This is a secure and flexible way to manage different settings for development, staging, and production environments.

```python
# Pydantic v2: BaseSettings lives in the `pydantic-settings` package
# (pip install pydantic-settings).
from pydantic_settings import BaseSettings, SettingsConfigDict


class AppSettings(BaseSettings):
    """Loads application settings from environment variables."""

    # Also read values from a .env file, if one exists
    model_config = SettingsConfigDict(env_file=".env")

    DATABASE_URL: str = "sqlite:///./test.db"
    PUBLIC_LEDGER_API_KEY: str  # Required: instantiation fails if it is not set
    LOG_LEVEL: str = "INFO"


# Usage in your application
settings = AppSettings()
print(f"Connecting to database: {settings.DATABASE_URL}")
```

Testing Your Application

FastAPI makes testing straightforward with its `TestClient`. You can write tests that make requests to your application without needing a running server. Use these tests to verify endpoint logic, validation rules, and error handling. For dependencies like databases, use mocking libraries or set up a dedicated test database to ensure your tests are isolated and repeatable.

Containerization with Docker

Package your FastAPI application, its dependencies, and a production-grade ASGI server like Uvicorn with Gunicorn into a Docker container. This creates a portable, consistent, and scalable deployment unit that can be run anywhere, from a local machine to a cloud platform like AWS SageMaker or Azure Machine Learning.

Security Considerations

FastAPI provides easy-to-use tools for implementing authentication and authorization, such as OAuth2 password flows and API key validation. Always protect endpoints that modify data or expose sensitive information. Use FastAPI’s `Security` dependency to enforce these protections.

Conclusion: FastAPI as the Backbone for Modern Data Services

FastAPI has firmly established itself as a top-tier choice for building modern, high-performance Python backends. Its combination of speed, developer-friendly features, and a strong emphasis on data validation makes it uniquely suited for creating the high-integrity data services that today’s applications demand. By leveraging Pydantic for rock-solid data schemas, `async`/`await` for non-blocking I/O, and its powerful dependency injection system, you can build services that are not only fast but also reliable, maintainable, and scalable.

Whether you are building a backend for a DeFi application, an API that serves OpenAI or other machine learning models, or a data ingestion pipeline for an enterprise system, the principles discussed here provide a solid foundation. The journey doesn't end here: explore the rich ecosystem around FastAPI, including task queues like Celery, database ORMs like SQLAlchemy, and deployment tools like Docker, to take your applications to the next level. FastAPI's steady adoption across such different domains is a good indication of how versatile the framework is.