Unlocking Enterprise Productivity: A Technical Deep Dive into Integrating Anthropic’s Claude with Slack

Introduction

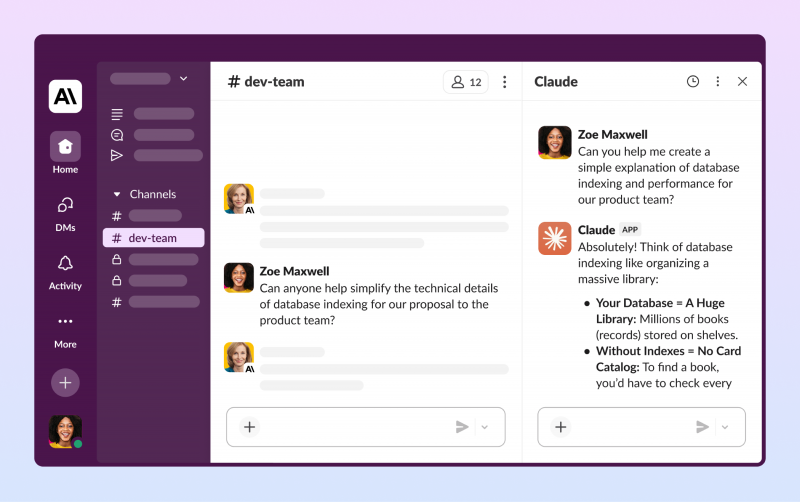

In today’s fast-paced digital workplace, collaboration platforms like Slack are the central nervous system of an organization: hubs for communication, file sharing, and project management. However, this constant flow of information can lead to overload, making it hard to find critical data, keep up with long conversations, or onboard new team members efficiently. The latest wave of generative AI, driven by labs such as Anthropic and OpenAI, offers a practical remedy. By integrating Large Language Models (LLMs) like Anthropic’s Claude directly into these platforms, we can turn them from simple communication tools into assistants that answer questions and summarize discussions on demand. Such an integration automates routine tasks like catching up on a long thread, surfaces relevant information quickly, and streamlines collaborative workflows. This article is a technical guide for developers who want to build a context-aware Slack assistant powered by Claude, covering initial setup and API integration as well as advanced features such as semantic search and automated summarization.

The Foundation: Connecting Slack and LLMs

The core architecture of an AI-powered Slack assistant has three main components: the Slack App, a backend server, and the LLM API. The Slack App serves as the user interface, listening for events such as direct mentions or slash commands. The backend server acts as the intermediary. It receives event payloads from Slack, either as HTTP requests (typically handled by a web framework like Flask or FastAPI) or, as in the examples below, over a Socket Mode WebSocket connection. It then processes the data, calls the LLM API (in this case, Anthropic’s Claude), and uses the Slack API to post the generated response back to the appropriate channel or user. Because work happens only when Slack delivers an event, there is no polling, and the backend can be scaled independently of Slack itself.
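The intermediary’s job can be sketched independently of any SDK. In this minimal sketch, `MentionEvent`, `handle_mention_event`, `ask_llm`, and `post_message` are hypothetical names: in a real bot, `ask_llm` would wrap Anthropic’s `client.messages.create` and `post_message` would wrap Slack’s `chat.postMessage`. Passing them in as callables keeps the event-handling logic testable without network access.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

# Matches Slack user mentions such as "<@U012AB3CD>" or "<@U012AB3CD|name>".
MENTION_RE = re.compile(r"<@[A-Z0-9]+(?:\|[^>]+)?>")


@dataclass
class MentionEvent:
    """The few fields of a Slack app_mention payload that this sketch uses."""
    channel: str
    user: str
    text: str
    ts: str
    thread_ts: Optional[str] = None


def handle_mention_event(
    event: MentionEvent,
    ask_llm: Callable[[str], str],
    post_message: Callable[[str, str, str], None],
) -> str:
    """Backend step: Slack event in, LLM call, reply posted back to Slack."""
    # 1. Process the payload: drop the bot mention so only the question remains.
    question = MENTION_RE.sub("", event.text).strip()
    # 2. Call the LLM (hypothetical wrapper around the Claude API).
    answer = ask_llm(question)
    # 3. Reply in the same thread; a top-level message becomes the thread parent.
    post_message(event.channel, event.thread_ts or event.ts, answer)
    return answer
```

Keeping the three steps in one small function makes the data flow explicit; the Slack- and Anthropic-specific code then lives only in the two adapters.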

Setting Up the Initial Slack Bot

To begin, you need a Slack App and a way to handle its events. The Slack Bolt for Python library is an excellent tool that abstracts away much of the boilerplate code required for verifying requests and routing events. Your first step is to create a simple bot that can listen for mentions and respond, confirming the connection is working before introducing the complexity of an LLM.

Here’s a basic example of a Slack Bolt application that listens for an app_mention event and posts a simple acknowledgment. This script forms the skeleton upon which we will build our intelligent features.

```python
# main.py
import os

from slack_bolt import App
from slack_bolt.adapter.socket_mode import SocketModeHandler

# Install with: pip install slack_bolt anthropic
# You'll need a Bot User OAuth Token (xoxb-...) and an App-Level Token (xapp-...)
# It's best practice to store these as environment variables
SLACK_BOT_TOKEN = os.environ.get("SLACK_BOT_TOKEN")
SLACK_APP_TOKEN = os.environ.get("SLACK_APP_TOKEN")

# Initializes your app with your bot token
app = App(token=SLACK_BOT_TOKEN)


@app.event("app_mention")
def handle_mention(event, say):
    """
    This function is triggered when the bot is @-mentioned in a channel.
    """
    user = event["user"]

    # Acknowledge the mention before processing
    say(f"Hi <@{user}>! I received your message. I'm thinking...")

    # In the next sections, we will replace this with a call to the Claude API.
    # For now, let's just send a placeholder response.
    placeholder_response = "This is where the AI-generated response will go."
    say(text=placeholder_response, thread_ts=event.get("ts"))


# Start your app
if __name__ == "__main__":
    print("🤖 Slack bot is running...")
    handler = SocketModeHandler(app, SLACK_APP_TOKEN)
    handler.start()
```

To run this, you would set your environment variables and execute the Python script. This simple yet crucial step validates your Slack API credentials and event subscription setup, paving the way for more advanced integrations.
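Since a missing or swapped token is a common first-run failure, it can help to fail fast before starting the handler. The sketch below uses a hypothetical helper, `check_slack_env`, that only checks that each variable is set and carries the prefix named in the code comments (xoxb- for the bot token, xapp- for the app-level token). It does not prove the tokens are valid; only a real API call such as Slack’s `auth.test` can do that.

```python
from typing import Mapping

# Expected prefixes, taken from the token types described in main.py's comments.
EXPECTED_PREFIXES = {
    "SLACK_BOT_TOKEN": "xoxb-",  # Bot User OAuth Token
    "SLACK_APP_TOKEN": "xapp-",  # App-Level Token used by Socket Mode
}


def check_slack_env(env: Mapping[str, str]) -> list[str]:
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    for name, prefix in EXPECTED_PREFIXES.items():
        value = env.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not value.startswith(prefix):
            problems.append(f"{name} should start with {prefix!r}")
    return problems
```

You might call it as `problems = check_slack_env(os.environ)` at the top of `main.py` and exit with the messages if the list is non-empty.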

Building a Context-Aware Conversational Assistant

A bot that only responds to single messages is of limited use. The real power of an LLM assistant in a collaborative environment is its ability to understand and participate in ongoing conversations. To achieve this, the bot must be “context-aware,” meaning it needs to process the history of a conversation thread before generating a response. This allows it to answer follow-up questions, understand references to earlier messages, and maintain a coherent dialogue.

Implementing Thread-Awareness

When your bot is mentioned inside a thread, the event payload includes a thread_ts field: the timestamp of the thread’s parent message. (A mention in a top-level message carries only its own ts, which becomes the parent once a thread is started from it.) Pass this timestamp to the Slack API’s conversations.replies method to fetch the messages in that thread. Once you have the message history, you can format it as a sequence of user and assistant turns for the Claude API, giving the model the context it needs to generate a relevant, informed response. Frameworks such as LangChain and LlamaIndex offer sophisticated tools for managing conversational memory, but a direct implementation using the Slack and Anthropic SDKs is also highly effective.
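One detail worth handling for long threads: `conversations.replies` returns results in pages. A response whose `response_metadata.next_cursor` is non-empty has more messages, which you fetch by repeating the call with `cursor` set to that value. The sketch below collects every page; `fetch_all_replies` is a hypothetical helper, and with the Slack SDK you would pass the WebClient method `client.conversations_replies` as `fetch_page`. The page size and the de-duplication by `ts` are defensive assumptions, not documented requirements.

```python
from typing import Any, Callable, Dict, List, Optional


def fetch_all_replies(
    fetch_page: Callable[..., Dict[str, Any]],
    channel: str,
    ts: str,
    page_size: int = 200,
) -> List[Dict[str, Any]]:
    """Follow next_cursor until every message in the thread has been fetched."""
    messages: List[Dict[str, Any]] = []
    seen_ts = set()
    cursor: Optional[str] = None
    while True:
        kwargs: Dict[str, Any] = {"channel": channel, "ts": ts, "limit": page_size}
        if cursor:
            kwargs["cursor"] = cursor
        page = fetch_page(**kwargs)
        for msg in page["messages"]:
            # Skip any message already collected from an earlier page.
            if msg.get("ts") not in seen_ts:
                seen_ts.add(msg.get("ts"))
                messages.append(msg)
        # An empty or missing next_cursor means there are no more pages.
        cursor = (page.get("response_metadata") or {}).get("next_cursor")
        if not cursor:
            break
    return messages
```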

The following code extends our previous example to fetch thread history and pass it to Anthropic’s Claude API.

```python
# main_context_aware.py
import os

import anthropic
from slack_bolt import App
from slack_bolt.adapter.socket_mode import SocketModeHandler

# --- Slack App Initialization (same as before) ---
SLACK_BOT_TOKEN = os.environ.get("SLACK_BOT_TOKEN")
SLACK_APP_TOKEN = os.environ.get("SLACK_APP_TOKEN")
app = App(token=SLACK_BOT_TOKEN)

# Look up the bot's own user ID once, so its messages can be labeled "assistant"
BOT_USER_ID = app.client.auth_test()["user_id"]

# --- Anthropic Client Initialization ---
ANTHROPIC_API_KEY = os.environ.get("ANTHROPIC_API_KEY")
client = anthropic.Anthropic(api_key=ANTHROPIC_API_KEY)


def to_claude_messages(slack_messages, skip_ts=None):
    """Convert Slack thread messages into user/assistant turns for Claude."""
    turns = []
    for msg in slack_messages:
        text = msg.get("text", "").strip()
        # Skip empty messages and the bot's own "processing" notice
        if not text or msg.get("ts") == skip_ts:
            continue
        role = "assistant" if msg.get("user") == BOT_USER_ID else "user"
        if turns and turns[-1]["role"] == role:
            # Merge consecutive messages from the same side into one turn
            turns[-1]["content"] += "\n\n" + text
        else:
            turns.append({"role": role, "content": text})
    # Begin with a user turn to stay within the Messages API's turn-order rules
    while turns and turns[0]["role"] == "assistant":
        turns.pop(0)
    return turns


@app.event("app_mention")
def handle_contextual_mention(event, say):
    """
    Handles mentions, fetches thread context, and gets a response from Claude.
    """
    # Bolt injects its Slack WebClient only as an argument named `client`, which
    # would shadow the Anthropic `client` above, so use app.client instead.
    slack_client = app.client
    user = event["user"]
    channel_id = event["channel"]
    # A top-level mention has no thread_ts; its own ts becomes the thread parent
    thread_ts = event.get("thread_ts", event["ts"])

    # Acknowledge the user immediately for better UX
    ack_text = f"Hi <@{user}>! I'm processing the conversation..."
    posted_message = say(text=ack_text, thread_ts=thread_ts)

    try:
        # Fetch conversation history from the thread
        history = slack_client.conversations_replies(channel=channel_id, ts=thread_ts)
        prompt_messages = to_claude_messages(
            history["messages"], skip_ts=posted_message["ts"]
        )

        # Call the Claude API (model IDs are versioned and retired over time;
        # check Anthropic's model list for current ones)
        api_response = client.messages.create(
            model="claude-3-sonnet-20240229",  # Or another suitable model
            max_tokens=2048,
            messages=prompt_messages,
        )
        ai_response = api_response.content[0].text

        # Update the original acknowledgment message with the final response
        slack_client.chat_update(
            channel=channel_id,
            ts=posted_message["ts"],
            text=ai_response,
        )
    except Exception as e:
        print(f"Error: {e}")
        slack_client.chat_update(
            channel=channel_id,
            ts=posted_message["ts"],
            text=f"Sorry, I encountered an error: {e}",
        )


if __name__ == "__main__":
    print("🤖 Context-aware Slack bot is running...")
    handler = SocketModeHandler(app, SLACK_APP_TOKEN)
    handler.start()
```

This implementation considerably improves the bot’s usefulness. Because the whole thread is sent to Claude, the bot can answer follow-up questions and refer back to earlier messages, acting as a collaborative participant rather than a simple command-response tool.

Advanced Features: Summarization and Semantic Search

To deliver maximum value, an enterprise AI assistant must go beyond simple conversation. Advanced features like on-demand channel summarization and semantic search over internal knowledge bases can solve critical business problems. These capabilities help employees quickly catch up on missed conversations and find answers to complex questions without manual searching.

On-Demand Channel Summarization

A slash command, such as /summarize, can be configured to trigger a summarization task. When invoked, the bot fetches the last N messages from the current channel, constructs a specialized prompt instructing the LLM to create a concise summary, and posts the result. This is invaluable for busy channels where important decisions can get buried.
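Fetching the last N messages works while the transcript fits comfortably in a single request. For much longer histories, one common workaround (not something the Slack or Anthropic SDKs provide) is map-reduce summarization: pack the transcript into chunks below a size budget, summarize each chunk, then summarize the partial summaries. In this sketch `chunk_lines` and `map_reduce_summary` are hypothetical helpers, the character budget is a rough stand-in for a token count, and `summarize` would wrap a `client.messages.create` call like the one in the /summarize handler.

```python
from typing import Callable, List


def chunk_lines(lines: List[str], max_chars: int) -> List[str]:
    """Greedily pack whole lines into chunks of at most max_chars characters.

    A single line longer than max_chars still becomes its own (oversized) chunk.
    """
    chunks: List[str] = []
    current: List[str] = []
    size = 0
    for line in lines:
        cost = len(line) + 1  # +1 for the newline that joins lines
        if current and size + cost > max_chars:
            chunks.append("\n".join(current))
            current, size = [], 0
        current.append(line)
        size += cost
    if current:
        chunks.append("\n".join(current))
    return chunks


def map_reduce_summary(
    lines: List[str], summarize: Callable[[str], str], max_chars: int = 20_000
) -> str:
    """Summarize each chunk ("map"), then summarize the summaries ("reduce")."""
    partials = [summarize(chunk) for chunk in chunk_lines(lines, max_chars)]
    if not partials:
        return ""
    if len(partials) == 1:
        return partials[0]
    # A single reduce pass; extremely long histories may need to repeat it.
    return summarize("\n\n".join(partials))
```

The trade-off is extra API calls and some loss of cross-chunk detail in exchange for never exceeding the prompt budget.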

```python
# Add this to your Slack Bolt app
@app.command("/summarize")
def handle_summarize_command(ack, body, respond, say):
    """
    Handles the /summarize slash command to summarize recent channel activity.
    """
    ack()  # Acknowledge the command immediately (Slack expects this within 3 seconds)
    channel_id = body["channel_id"]
    user_id = body["user_id"]
    # Use app.client for Slack calls so the Anthropic `client` is not shadowed
    slack_client = app.client

    try:
        # An ephemeral response is visible only to the user who ran the command
        respond(
            text=f"Sure, <@{user_id}>! Generating a summary for this channel...",
            response_type="ephemeral",
        )

        # Fetch the last 100 messages from the channel (returned newest first)
        history = slack_client.conversations_history(channel=channel_id, limit=100)
        # Reverse into chronological order and skip messages without text
        messages = [msg["text"] for msg in reversed(history["messages"]) if msg.get("text")]
        conversation_text = "\n".join(messages)

        # Construct a prompt for the summarization task
        system_prompt = (
            "You are a helpful assistant. Summarize the following conversation into "
            "key points, action items, and decisions made. Use bullet points."
        )
        api_response = client.messages.create(
            model="claude-3-haiku-20240307",  # Use a fast model for summarization
            max_tokens=1024,
            system=system_prompt,
            messages=[{"role": "user", "content": conversation_text}],
        )
        summary = api_response.content[0].text
        say(text=f"Here is the summary of the recent conversation:\n\n{summary}")
    except Exception as e:
        print(f"Error during summarization: {e}")
        respond(
            text=f"I'm sorry, I couldn't generate a summary. Error: {e}",
            response_type="ephemeral",
        )
```

Semantic Search with RAG and Vector Databases

For answering questions that require knowledge from outside the conversation (e.g., “What is our Q3 marketing strategy?”), the assistant needs to perform Retrieval-Augmented Generation (RAG). This involves splitting internal documents (from Confluence, Google Drive, etc.) into chunks, embedding them, and indexing them in a vector database such as Pinecone, Weaviate, or Chroma. When a user asks a question, the bot first converts the query into a vector embedding and searches the database for the most semantically similar document chunks. These chunks are then injected into the LLM prompt as context, so Claude can ground its answer in the retrieved sources and cite them. Unlike keyword search, this matches on meaning rather than exact wording, which is why it has become a cornerstone of modern enterprise AI solutions; the embeddings are often produced with libraries from the Hugging Face ecosystem such as `Sentence-Transformers`.
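To make the chunk, embed, retrieve, and augment steps concrete without a vector database, here is a self-contained toy version. It plainly swaps the embedding model for a bag-of-words term count, so it measures word overlap rather than true semantic similarity, and it keeps the index in a Python list. The names (`embed`, `retrieve`, `build_rag_prompt`, and so on) and the chunk sizes are illustrative assumptions; a real system would replace `embed` with an embedding model and `retrieve` with a vector-database query.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Chunk:
    source: str  # where the text came from, e.g. a document title or URL
    text: str  # the content injected into the prompt if retrieved
    vector: Counter = field(repr=False)  # the chunk's toy "embedding"


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def split_into_chunks(text: str, size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    chunks = []
    for start in range(0, len(words), size - overlap):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end of the text
    return chunks


def build_index(docs: Dict[str, str]) -> List[Chunk]:
    """Chunk and 'embed' every document (an upsert into a vector DB in practice)."""
    return [
        Chunk(source, chunk, embed(chunk))
        for source, text in docs.items()
        for chunk in split_into_chunks(text)
    ]


def retrieve(index: List[Chunk], query: str, top_k: int = 5) -> List[Chunk]:
    """Return the top_k chunks most similar to the query."""
    query_vec = embed(query)
    return sorted(index, key=lambda c: cosine(query_vec, c.vector), reverse=True)[:top_k]


def build_rag_prompt(query: str, chunks: List[Chunk]) -> str:
    """Inject retrieved chunks into the prompt as labeled context."""
    context = "\n\n".join(f"Source: {c.source}\nContent: {c.text}" for c in chunks)
    return (
        "Based on the following context, please provide a comprehensive answer "
        "to the user's question.\n"
        "If the context does not contain the answer, state that you could not "
        "find the information.\n\n"
        f"--- CONTEXT ---\n{context}\n--- END CONTEXT ---\n\n"
        f"User Question: {query}"
    )
```

Overlapping the chunks means a sentence that straddles a boundary can still be retrieved from either neighboring chunk.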

```python
# Conceptual RAG implementation for a Slack bot
# Assumes you have a vector_db_client (e.g., pinecone, chroma, etc.)
# and an embedding_model (e.g., from sentence-transformers).
# The query() call and result shape below follow a Pinecone-style index;
# other vector databases use different method names and result formats.


def get_rag_response(query: str) -> str:
    """
    Performs a RAG-based query to answer a user's question.
    """
    # 1. Create an embedding for the user's query
    query_embedding = embedding_model.encode(query).tolist()

    # 2. Search the vector database for relevant context
    search_results = vector_db_client.query(
        vector=query_embedding,
        top_k=5,  # Retrieve the top 5 most relevant document chunks
        include_metadata=True,
    )

    # 3. Format the retrieved context (assumes each chunk's source and text
    #    were stored in its metadata at indexing time)
    context_str = ""
    for match in search_results["matches"]:
        context_str += f"Source: {match['metadata']['source']}\nContent: {match['metadata']['text']}\n\n"

    # 4. Build the prompt for the LLM
    rag_prompt = (
        "Based on the following context, please provide a comprehensive answer "
        "to the user's question.\n"
        "If the context does not contain the answer, state that you could not "
        "find the information.\n\n"
        f"--- CONTEXT ---\n{context_str}--- END CONTEXT ---\n\n"
        f"User Question: {query}"
    )

    # 5. Call the LLM with the augmented prompt (`client` is the Anthropic client)
    response = client.messages.create(
        model="claude-3-opus-20240229",  # Use a powerful model for reasoning
        max_tokens=2048,
        messages=[{"role": "user", "content": rag_prompt}],
    )
    return response.content[0].text
```

Best Practices, Security, and Deployment

Building a robust and secure enterprise AI assistant requires careful attention to detail. Security is paramount: never hardcode API keys or other secrets in your source code; use environment variables or a dedicated secrets management service. When your app receives events over HTTP, verify each incoming request’s signature with your app’s signing secret to confirm that it really came from Slack. The Slack Bolt library does this for you when you give it the signing secret, but it is a critical security measure to understand. (In Socket Mode, events arrive over a WebSocket connection authenticated with the app-level token instead.) Be mindful of data privacy as well: Slack messages sent to an LLM leave your workspace, so understand your provider’s data usage and retention policies and make sure they meet your organization’s compliance standards. Anthropic, for instance, publishes commercial terms and privacy commitments for its enterprise customers.
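For reference, Slack’s documented request-signing scheme joins the string `v0`, the `X-Slack-Request-Timestamp` header value, and the raw request body with colons, computes an HMAC-SHA256 of that string keyed with the signing secret, and compares `v0=` plus the hex digest against the `X-Slack-Signature` header. Rejecting stale timestamps guards against replayed requests. The sketch below (a hypothetical helper, `verify_slack_signature`, using the five-minute window Slack suggests) is meant for understanding or for servers not built on Bolt; Bolt already performs this check in HTTP mode.

```python
import hashlib
import hmac
import time
from typing import Optional


def verify_slack_signature(
    signing_secret: str,
    timestamp: str,
    body: bytes,
    signature: str,
    now: Optional[float] = None,
    tolerance_s: int = 300,
) -> bool:
    """Return True only if the request is fresh and correctly signed."""
    now = time.time() if now is None else now
    try:
        ts = int(timestamp)
    except ValueError:
        return False  # malformed timestamp header
    if abs(now - ts) > tolerance_s:
        return False  # too old (or too far in the future): possible replay
    base = b"v0:" + timestamp.encode() + b":" + body
    expected = "v0=" + hmac.new(signing_secret.encode(), base, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched
    return hmac.compare_digest(expected, signature)
```

Note that the body must be the exact raw bytes Slack sent; re-serializing parsed form data will usually change it and break the check.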

Performance and Optimization

For long-running tasks like summarizing a large channel history, acknowledge the request immediately and do the work in a background task: Slack expects slash commands and other interactions to be acknowledged within 3 seconds, and the user sees a timeout error otherwise. Model selection is also key: use faster, more economical models like Claude 3 Haiku for simple tasks such as summarization, and reserve more powerful models like Claude 3 Opus for complex reasoning and RAG. If you also self-host open models (for example, an embedding model for RAG), optimized inference engines such as NVIDIA TensorRT or serving frameworks like vLLM can raise throughput; Claude itself is consumed as a hosted API, so these tools do not apply to it. For debugging and monitoring multi-step AI chains, tracing tools such as LangSmith are becoming indispensable.
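The acknowledge-then-work pattern is framework-agnostic. Below is a minimal sketch using a standard-library thread pool, where `ack`, `notify`, and `work` are hypothetical callables standing in for Bolt’s `ack`, a `respond` or `say` call, and the Claude request, and the pool size is an arbitrary example. On FaaS platforms, where a background thread may be frozen once the response is returned, Bolt for Python’s lazy listeners are designed for this situation instead.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable

# A shared worker pool for slow LLM calls (the size is an arbitrary example).
EXECUTOR = ThreadPoolExecutor(max_workers=4)


def ack_then_run(
    ack: Callable[[], None],
    notify: Callable[[str], None],
    work: Callable[[], str],
) -> Future:
    """Acknowledge right away, then run `work` off the request path."""
    ack()  # return control to Slack well inside the 3-second window

    def job() -> None:
        try:
            notify(work())  # e.g. post the finished summary
        except Exception as exc:  # report failures instead of losing them silently
            notify(f"Sorry, something went wrong: {exc}")

    return EXECUTOR.submit(job)
```

Returning the `Future` lets callers (or tests) wait for completion, while the Slack handler itself can simply ignore it.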

Deployment Strategies

Your bot’s backend can be deployed in several ways. Serverless platforms like AWS Lambda or Google Cloud Functions are cost-effective for event-driven, intermittent workloads, but they call for Slack’s HTTP mode rather than Socket Mode, which depends on a long-lived WebSocket connection. For more complex applications, containerizing your app with Docker and running it on a service such as AWS Fargate or Google Cloud Run, or on a managed platform such as Amazon SageMaker or Azure Machine Learning, provides greater control and scalability. Emerging platforms like Modal and Replicate are also gaining traction for simplifying the deployment of GPU-powered workloads, which matters mainly if you host your own models, such as an embedding model for RAG.

Conclusion

We have journeyed from a simple “hello world” Slack bot to a context-aware AI assistant that can follow conversations, summarize channels, and perform semantic searches across a knowledge base. Integrating LLMs like Anthropic’s Claude directly into enterprise collaboration tools changes how teams find information and keep up with discussions. As labs such as Google DeepMind and Meta AI continue to push the boundaries of AI, these integrated assistants will only become more capable. The tools and techniques discussed here, from the Slack Bolt framework and Anthropic’s API to the principles of RAG with vector databases, provide a solid foundation for any developer looking to build the next generation of productivity applications. The future of work is not just collaborative; it is intelligently augmented, and the time to start building that future is now.